猫狗数据集使用top1和top5准确率衡量模型

Posted xiximayou

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了猫狗数据集使用top1和top5准确率衡量模型相关的知识,希望对你有一定的参考价值。

数据集下载地址:

链接:https://pan.baidu.com/s/1l1AnBgkAAEhh0vI5_loWKw

提取码:2xq4

创建数据集:https://www.cnblogs.com/xiximayou/p/12398285.html

读取数据集:https://www.cnblogs.com/xiximayou/p/12422827.html

进行训练:https://www.cnblogs.com/xiximayou/p/12448300.html

保存模型并继续进行训练:https://www.cnblogs.com/xiximayou/p/12452624.html

加载保存的模型并测试:https://www.cnblogs.com/xiximayou/p/12459499.html

划分验证集并边训练边验证:https://www.cnblogs.com/xiximayou/p/12464738.html

使用学习率衰减策略并边训练边测试:https://www.cnblogs.com/xiximayou/p/12468010.html

利用tensorboard可视化训练和测试过程:https://www.cnblogs.com/xiximayou/p/12482573.html

从命令行接收参数:https://www.cnblogs.com/xiximayou/p/12488662.html

epoch、batchsize、step之间的关系:https://www.cnblogs.com/xiximayou/p/12405485.html

之前使用的仅仅是top1准确率。在图像分类中,一般使用top1和top5来衡量分类模型的好坏。下面来看看。

首先在util下新建一个acc.py文件,向里面加入计算top1和top5准确率的代码:

import torch def accu(output, target, topk=(1,)): """Computes the accuracy over the k top predictions for the specified values of k""" with torch.no_grad(): maxk = max(topk) batch_size = target.size(0) _, pred = output.topk(maxk, 1, True, True) pred = pred.t() correct = pred.eq(target.view(1, -1).expand_as(pred)) res = [] for k in topk: correct_k = correct[:k].view(-1).float().sum(0, keepdim=True) res.append(correct_k.mul_(100.0 / batch_size)) return res

重点就是topk()函数:

torch.topk(input, k, dim=None, largest=True, sorted=True, out=None) -> (Tensor, LongTensor)

input:输入张量

k:指定返回的前几位的值

dim:排序的维度

largest:返回最大值

sorted:返回值是否排序

out:可选输出张量

需要注意的是我们这里只有两类,因此不存在top5。因此如果设置参数topk=(1,5),则会报错:RuntimeError:invalid argument 5:k not in range for dimension at /pytorch/ate ...

因此我们只能设置topk=(1,2),而且top2的值肯定是100%。最终res中是一个二维数组,第一位存储的是top1准确率,第二位存储的是top2准确率。

然后修改对应的train.py:

import torch from tqdm import tqdm from tensorflow import summary import datetime from utils import acc """ current_time = str(datetime.datetime.now().timestamp()) train_log_dir = ‘/content/drive/My Drive/colab notebooks/output/tsboardx/train/‘ + current_time test_log_dir = ‘/content/drive/My Drive/colab notebooks/output/tsboardx/test/‘ + current_time val_log_dir = ‘/content/drive/My Drive/colab notebooks/output/tsboardx/val/‘ + current_time train_summary_writer = summary.create_file_writer(train_log_dir) val_summary_writer = summary.create_file_writer(val_log_dir) test_summary_writer = summary.create_file_writer(test_log_dir) """ class Trainer: def __init__(self,criterion,optimizer,model): self.criterion=criterion self.optimizer=optimizer self.model=model def get_lr(self): for param_group in self.optimizer.param_groups: return param_group[‘lr‘] def loop(self,num_epochs,train_loader,val_loader,test_loader,scheduler=None,acc1=0.0): self.acc1=acc1 for epoch in range(1,num_epochs+1): lr=self.get_lr() print("epoch:{},lr:{:.6f}".format(epoch,lr)) self.train(train_loader,epoch,num_epochs) self.val(val_loader,epoch,num_epochs) self.test(test_loader,epoch,num_epochs) if scheduler is not None: scheduler.step() def train(self,dataloader,epoch,num_epochs): self.model.train() with torch.enable_grad(): self._iteration_train(dataloader,epoch,num_epochs) def val(self,dataloader,epoch,num_epochs): self.model.eval() with torch.no_grad(): self._iteration_val(dataloader,epoch,num_epochs) def test(self,dataloader,epoch,num_epochs): self.model.eval() with torch.no_grad(): self._iteration_test(dataloader,epoch,num_epochs) def _iteration_train(self,dataloader,epoch,num_epochs): #total_step=len(dataloader) #tot_loss = 0.0 #correct = 0 train_loss=AverageMeter() train_top1=AverageMeter() train_top2=AverageMeter() #for i ,(images, labels) in enumerate(dataloader): #res=[] for images, labels in tqdm(dataloader,ncols=80): images = images.cuda() labels = labels.cuda() # Forward pass outputs = self.model(images) #_, preds = torch.max(outputs.data,1) pred1_train,pred2_train=acc.accu(outputs,labels,topk=(1,2)) loss = self.criterion(outputs, labels) train_loss.update(loss.item(),images.size(0)) train_top1.update(pred1_train[0],images.size(0)) train_top2.update(pred2_train[0],images.size(0)) # Backward and optimizer self.optimizer.zero_grad() loss.backward() self.optimizer.step() #tot_loss += loss.data """ if (i+1) % 2 == 0: print(‘Epoch: [{}/{}], Step: [{}/{}], Loss: {:.4f}‘ .format(epoch, num_epochs, i+1, total_step, loss.item())) """ #correct += torch.sum(preds == labels.data).to(torch.float32) ### Epoch info #### #epoch_loss = tot_loss/len(dataloader.dataset) #epoch_acc = correct/len(dataloader.dataset) #print(‘train loss: {:.4f},train acc: {:.4f}‘.format(epoch_loss,epoch_acc)) print(">>>[{}] train_loss:{:.4f} top1:{:.4f} top2:{:.4f}".format("train", train_loss.avg, train_top1.avg, train_top2.avg)) """ with train_summary_writer.as_default(): summary.scalar(‘loss‘, train_loss.avg, epoch) summary.scalar(‘accuracy‘, train_top1.avg, epoch) """ """ if epoch==num_epochs: state = { ‘model‘: self.model.state_dict(), ‘optimizer‘:self.optimizer.state_dict(), ‘epoch‘: epoch, ‘train_loss‘:train_loss.avg, ‘train_acc‘:train_top1.avg, } save_path="/content/drive/My Drive/colab notebooks/output/" torch.save(state,save_path+"/resnet18_final_v2"+".t7") """ t_loss = train_loss.avg, t_top1 = train_top1.avg t_top2 = train_top2.avg return t_loss,t_top1,t_top2 def _iteration_val(self,dataloader,epoch,num_epochs): #total_step=len(dataloader) #tot_loss = 0.0 #correct = 0 #for i ,(images, labels) in enumerate(dataloader): val_loss=AverageMeter() val_top1=AverageMeter() val_top2=AverageMeter() for images, labels in tqdm(dataloader,ncols=80): images = images.cuda() labels = labels.cuda() # Forward pass outputs = self.model(images) #_, preds = torch.max(outputs.data,1) pred1_val,pred2_val=acc.accu(outputs,labels,topk=(1,2)) loss = self.criterion(outputs, labels) val_loss.update(loss.item(),images.size(0)) val_top1.update(pred1_val[0],images.size(0)) val_top2.update(pred2_val[0],images.size(0)) #tot_loss += loss.data #correct += torch.sum(preds == labels.data).to(torch.float32) """ if (i+1) % 2 == 0: print(‘Epoch: [{}/{}], Step: [{}/{}], Loss: {:.4f}‘ .format(1, 1, i+1, total_step, loss.item())) """ ### Epoch info #### #epoch_loss = tot_loss/len(dataloader.dataset) #epoch_acc = correct/len(dataloader.dataset) #print(‘val loss: {:.4f},val acc: {:.4f}‘.format(epoch_loss,epoch_acc)) print(">>>[{}] val_loss:{:.4f} top1:{:.4f} top2:{:.4f}".format("val", val_loss.avg, val_top1.avg, val_top2.avg)) """ with val_summary_writer.as_default(): summary.scalar(‘loss‘, val_loss.avg, epoch) summary.scalar(‘accuracy‘, val_top1.avg, epoch) """ t_loss = val_loss.avg, t_top1 = val_top1.avg t_top2 = val_top2.avg return t_loss,t_top1,t_top2 def _iteration_test(self,dataloader,epoch,num_epochs): #total_step=len(dataloader) #tot_loss = 0.0 #correct = 0 #for i ,(images, labels) in enumerate(dataloader): test_loss=AverageMeter() test_top1=AverageMeter() test_top2=AverageMeter() for images, labels in tqdm(dataloader,ncols=80): images = images.cuda() labels = labels.cuda() # Forward pass outputs = self.model(images) #_, preds = torch.max(outputs.data,1) pred1_test,pred2_test=acc.accu(outputs,labels,topk=(1,2)) loss = self.criterion(outputs, labels) test_loss.update(loss.item(),images.size(0)) test_top1.update(pred1_test[0],images.size(0)) test_top2.update(pred2_test[0],images.size(0)) #tot_loss += loss.data #correct += torch.sum(preds == labels.data).to(torch.float32) """ if (i+1) % 2 == 0: print(‘Epoch: [{}/{}], Step: [{}/{}], Loss: {:.4f}‘ .format(1, 1, i+1, total_step, loss.item())) """ ### Epoch info #### #epoch_loss = tot_loss/len(dataloader.dataset) #epoch_acc = correct/len(dataloader.dataset) #print(‘test loss: {:.4f},test acc: {:.4f}‘.format(epoch_loss,epoch_acc)) print(">>>[{}] test_loss:{:.4f} top1:{:.4f} top2:{:.4f}".format("test", test_loss.avg, test_top1.avg, test_top2.avg)) t_loss = test_loss.avg, t_top1 = test_top1.avg t_top2 = test_top2.avg """ with test_summary_writer.as_default(): summary.scalar(‘loss‘, test_loss.avg, epoch) summary.scalar(‘accuracy‘, test_top1.avg, epoch) """ """ if epoch_acc > self.acc1: state = { "model": self.model.state_dict(), "optimizer": self.optimizer.state_dict(), "epoch": epoch, "epoch_loss": test_loss.avg, "epoch_acc": test_top1.avg, } save_path="/content/drive/My Drive/colab notebooks/output/" print("在第{}个epoch取得最好的测试准确率,准确率为:{:.4f}".format(epoch,test_loss.avg)) torch.save(state,save_path+"/resnet18_best_v2"+".t7") self.acc1=max(self.acc1,test_loss.avg) """ return t_loss,t_top1,t_top2 class AverageMeter(object): def __init__(self): self.reset() def reset(self): self.val = 0 self.avg = 0 self.sum = 0 self.count = 0 def update(self, val, n=1): self.val = val self.sum += float(val) * n self.count += n self.avg = self.sum / self.count

我们新建了一个AverageMeter类来存储结果。

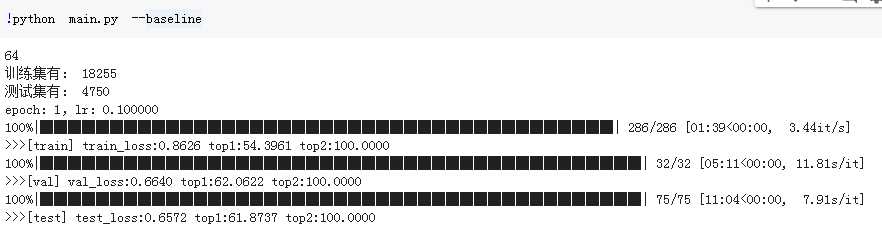

最终结果:

下一节:加载预训练的模型并进行微调。

以上是关于猫狗数据集使用top1和top5准确率衡量模型的主要内容,如果未能解决你的问题,请参考以下文章