URP Study Notes, Part 3: ForwardRenderer

Posted by shenyibo



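The previous chapter walked through URP's RenderSingleCamera function up to the point where the renderer comes in. This time we continue from there and find out what Unity's ForwardRenderer actually is.

For convenience, here is the method from last time again: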

     1 public static void RenderSingleCamera(ScriptableRenderContext context, Camera camera)
     2 {
     3     if (!camera.TryGetCullingParameters(IsStereoEnabled(camera), out var cullingParameters))
     4         return;
     5
     6     var settings = asset;
     7     UniversalAdditionalCameraData additionalCameraData = null;
     8     if (camera.cameraType == CameraType.Game || camera.cameraType == CameraType.VR)
     9         camera.gameObject.TryGetComponent(out additionalCameraData);
    10
    11     InitializeCameraData(settings, camera, additionalCameraData, out var cameraData);
    12     SetupPerCameraShaderConstants(cameraData);
    13
    14     ScriptableRenderer renderer = (additionalCameraData != null) ? additionalCameraData.scriptableRenderer : settings.scriptableRenderer;
    15     if (renderer == null)
    16     {
    17         Debug.LogWarning(string.Format("Trying to render {0} with an invalid renderer. Camera rendering will be skipped.", camera.name));
    18         return;
    19     }
    20
    21     string tag = (asset.debugLevel >= PipelineDebugLevel.Profiling) ? camera.name: k_RenderCameraTag;
    22     CommandBuffer cmd = CommandBufferPool.Get(tag);
    23     using (new ProfilingSample(cmd, tag))
    24     {
    25         renderer.Clear();
    26         renderer.SetupCullingParameters(ref cullingParameters, ref cameraData);
    27
    28         context.ExecuteCommandBuffer(cmd);
    29         cmd.Clear();
    30
    31 #if UNITY_EDITOR
    32
    33         // Emit scene view UI
    34         if (cameraData.isSceneViewCamera)
    35             ScriptableRenderContext.EmitWorldGeometryForSceneView(camera);
    36 #endif
    37
    38         var cullResults = context.Cull(ref cullingParameters);
    39         InitializeRenderingData(settings, ref cameraData, ref cullResults, out var renderingData);
    40
    41         renderer.Setup(context, ref renderingData);
    42         renderer.Execute(context, ref renderingData);
    43     }
    44
    45     context.ExecuteCommandBuffer(cmd);
    46     CommandBufferPool.Release(cmd);
    47     context.Submit();
    48 }

We start from line 14:

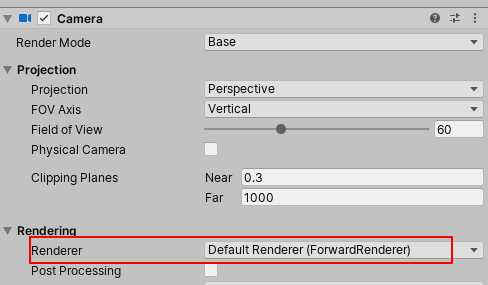

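We can see that the renderer is taken from the camera's own settings (its UniversalAdditionalCameraData component); if the camera has no such component, the pipeline asset's renderer is used instead.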

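This means each camera can have its own renderer, for example camera A using Forward and camera B using Deferred. (This assumes multiple cameras are supported, of course. At the time of writing the camera stack can be pulled from git, although the depth-only flag is gone; that is not the focus here.)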
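The per-camera selection on line 14 can be sketched without Unity as follows. This is only an illustration: `DemoRenderer`, `DemoCameraData`, and `DemoPipelineAsset` are hypothetical stand-ins for `ScriptableRenderer`, `UniversalAdditionalCameraData`, and the pipeline asset, and only the fallback rule itself comes from the code above.

```csharp
using System;

// Hypothetical stand-ins for illustration; these are not Unity's types.
class DemoRenderer
{
    public string Name;
    public DemoRenderer(string name) { Name = name; }
}

class DemoCameraData { public DemoRenderer ScriptableRenderer; }
class DemoPipelineAsset { public DemoRenderer ScriptableRenderer; }

static class RendererSelection
{
    // Same shape as line 14: use the camera's renderer when the camera has
    // additional data, otherwise fall back to the pipeline asset's renderer.
    public static DemoRenderer Select(DemoCameraData cameraData, DemoPipelineAsset asset)
    {
        return (cameraData != null) ? cameraData.ScriptableRenderer : asset.ScriptableRenderer;
    }

    static void Main()
    {
        var asset = new DemoPipelineAsset { ScriptableRenderer = new DemoRenderer("AssetDefault") };
        var cameraA = new DemoCameraData { ScriptableRenderer = new DemoRenderer("CameraA") };

        Console.WriteLine(Select(cameraA, asset).Name); // camera with its own data
        Console.WriteLine(Select(null, asset).Name);    // no additional data: asset renderer
    }
}
```

Note that a camera whose additional data holds a null renderer still yields null here, which is the case the warning on lines 15 to 19 guards against.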

Next, the renderer object calls its Clear method. Before that, let's look at what ForwardRenderer contains:

    const int k_DepthStencilBufferBits = 32;
    const string k_CreateCameraTextures = "Create Camera Texture";

    ColorGradingLutPass m_ColorGradingLutPass;
    DepthOnlyPass m_DepthPrepass;
    MainLightShadowCasterPass m_MainLightShadowCasterPass;
    AdditionalLightsShadowCasterPass m_AdditionalLightsShadowCasterPass;
    ScreenSpaceShadowResolvePass m_ScreenSpaceShadowResolvePass;
    DrawObjectsPass m_RenderOpaqueForwardPass;
    DrawSkyboxPass m_DrawSkyboxPass;
    CopyDepthPass m_CopyDepthPass;
    CopyColorPass m_CopyColorPass;
    DrawObjectsPass m_RenderTransparentForwardPass;
    InvokeOnRenderObjectCallbackPass m_OnRenderObjectCallbackPass;
    PostProcessPass m_PostProcessPass;
    PostProcessPass m_FinalPostProcessPass;
    FinalBlitPass m_FinalBlitPass;
    CapturePass m_CapturePass;

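The first thing that hits us is a pile of passes. It is a familiar flavor: rendering is still organized by pass. A quick look at the kinds of passes gives a rough idea of which operations are available and what has been optimized compared to before. Overall it is not very different from the built-in pipeline.

Let these passes become familiar faces here first, since they will come up often later (to be honest, I just did not know where else to put them).

Now back to the renderer's Clear method (defined in the ScriptableRenderer base class):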

    internal void Clear()
    {
        this.m_CameraColorTarget = (RenderTargetIdentifier) BuiltinRenderTextureType.CameraTarget;
        this.m_CameraDepthTarget = (RenderTargetIdentifier) BuiltinRenderTextureType.CameraTarget;
        ScriptableRenderer.m_ActiveColorAttachment = (RenderTargetIdentifier) BuiltinRenderTextureType.CameraTarget;
        ScriptableRenderer.m_ActiveDepthAttachment = (RenderTargetIdentifier) BuiltinRenderTextureType.CameraTarget;
        this.m_FirstCameraRenderPassExecuted = false;
        ScriptableRenderer.m_InsideStereoRenderBlock = false;
        this.m_ActiveRenderPassQueue.Clear();
    }

It mainly resets the render targets and the execution state, and clears the pass queue.

Next is the SetupCullingParameters method:

    public override void SetupCullingParameters(ref ScriptableCullingParameters cullingParameters,
        ref CameraData cameraData)
    {
        Camera camera = cameraData.camera;
        // TODO: PerObjectCulling also affect reflection probes. Enabling it for now.
        // if (asset.additionalLightsRenderingMode == LightRenderingMode.Disabled ||
        //     asset.maxAdditionalLightsCount == 0)
        // {
        //     cullingParameters.cullingOptions |= CullingOptions.DisablePerObjectCulling;
        // }

        // If shadow is disabled, disable shadow caster culling
        if (Mathf.Approximately(cameraData.maxShadowDistance, 0.0f))
            cullingParameters.cullingOptions &= ~CullingOptions.ShadowCasters;

        cullingParameters.shadowDistance = cameraData.maxShadowDistance;
    }

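This is culling setup for reflection probes and shadows. The per-object culling branch is commented out (the TODO notes that it also affects reflection probes), so in effect only the shadow part runs: when the max shadow distance is approximately 0, shadow caster culling is turned off, and the culling shadow distance is set to the camera's max shadow distance.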
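The `&= ~flag` idiom used above clears a single bit in a flags enum while leaving the others untouched. Here is a minimal Unity-free sketch of it. `DemoCullingOptions` is a hypothetical enum with made-up values (not Unity's `CullingOptions`), and the epsilon comparison is only a rough substitute for `Mathf.Approximately`.

```csharp
using System;

// Hypothetical flags enum standing in for Unity's CullingOptions; values are made up.
[Flags]
enum DemoCullingOptions
{
    None = 0,
    OcclusionCull = 1,
    ShadowCasters = 2,
    NeedsLighting = 4,
}

static class CullingFlagsDemo
{
    // Clears the ShadowCasters bit when the shadow distance is (nearly) zero,
    // and leaves every other bit as it was.
    public static DemoCullingOptions Apply(DemoCullingOptions options, float maxShadowDistance)
    {
        if (Math.Abs(maxShadowDistance) < 1e-6f) // rough stand-in for Mathf.Approximately
            options &= ~DemoCullingOptions.ShadowCasters;
        return options;
    }

    static void Main()
    {
        var all = DemoCullingOptions.OcclusionCull
                  | DemoCullingOptions.ShadowCasters
                  | DemoCullingOptions.NeedsLighting;

        Console.WriteLine(Apply(all, 0f));  // shadows disabled: ShadowCasters removed
        Console.WriteLine(Apply(all, 50f)); // shadows enabled: flags unchanged
    }
}
```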

The Cull call that follows was covered in the SRP articles, so we skip it. After that comes the InitializeRenderingData method:

    static void InitializeRenderingData(UniversalRenderPipelineAsset settings, ref CameraData cameraData,
        ref CullingResults cullResults, out RenderingData renderingData)
    {
        var visibleLights = cullResults.visibleLights;

        int mainLightIndex = GetMainLightIndex(settings, visibleLights);
        bool mainLightCastShadows = false;
        bool additionalLightsCastShadows = false;

        if (cameraData.maxShadowDistance > 0.0f)
        {
            mainLightCastShadows = (mainLightIndex != -1 && visibleLights[mainLightIndex].light != null &&
                                    visibleLights[mainLightIndex].light.shadows != LightShadows.None);

            // If additional lights are shaded per-pixel they cannot cast shadows
            if (settings.additionalLightsRenderingMode == LightRenderingMode.PerPixel)
            {
                for (int i = 0; i < visibleLights.Length; ++i)
                {
                    if (i == mainLightIndex)
                        continue;

                    Light light = visibleLights[i].light;

                    // LWRP doesn't support additional directional lights or point light shadows yet
                    if (visibleLights[i].lightType == LightType.Spot && light != null && light.shadows != LightShadows.None)
                    {
                        additionalLightsCastShadows = true;
                        break;
                    }
                }
            }
        }

        renderingData.cullResults = cullResults;
        renderingData.cameraData = cameraData;
        InitializeLightData(settings, visibleLights, mainLightIndex, out renderingData.lightData);
        InitializeShadowData(settings, visibleLights, mainLightCastShadows,
            additionalLightsCastShadows && !renderingData.lightData.shadeAdditionalLightsPerVertex,
            out renderingData.shadowData);
        InitializePostProcessingData(settings, out renderingData.postProcessingData);
        renderingData.supportsDynamicBatching = settings.supportsDynamicBatching;
        renderingData.perObjectData = GetPerObjectLightFlags(renderingData.lightData.additionalLightsCount);

        bool isOffscreenCamera = cameraData.camera.targetTexture != null && !cameraData.isSceneViewCamera;
        renderingData.killAlphaInFinalBlit = !Graphics.preserveFramebufferAlpha && PlatformNeedsToKillAlpha() && !isOffscreenCamera;
    }

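The first half of the code decides whether shadows are needed. If no main light is found, there are no main light shadows. For lights other than the main light, only spot light shadows are currently supported, and only when additional lights are rendered per pixel.

The second half basically fills in renderingData. A few of the methods it calls are worth noting.

1. The InitializeLightData method: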
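The shadow decision in the first half can be condensed into a small Unity-free sketch. `DemoLight` and `DemoLightType` are hypothetical types standing in for `VisibleLight` and `LightType`, and the null checks on the light object are left out for brevity.

```csharp
using System;
using System.Collections.Generic;

enum DemoLightType { Directional, Point, Spot }

// Hypothetical light record; not Unity's VisibleLight.
struct DemoLight
{
    public DemoLightType Type;
    public bool CastsShadows;
    public DemoLight(DemoLightType type, bool castsShadows) { Type = type; CastsShadows = castsShadows; }
}

static class ShadowDecision
{
    // Returns whether the main light and any additional light should cast shadows.
    public static (bool mainLight, bool additionalLights) Decide(
        IReadOnlyList<DemoLight> lights, int mainLightIndex,
        float maxShadowDistance, bool additionalLightsPerPixel)
    {
        bool mainLight = false;
        bool additionalLights = false;

        if (maxShadowDistance > 0.0f)
        {
            mainLight = mainLightIndex != -1 && lights[mainLightIndex].CastsShadows;

            if (additionalLightsPerPixel)
            {
                for (int i = 0; i < lights.Count; ++i)
                {
                    if (i == mainLightIndex)
                        continue;

                    // Only spot lights count, as in the loop above.
                    if (lights[i].Type == DemoLightType.Spot && lights[i].CastsShadows)
                    {
                        additionalLights = true;
                        break;
                    }
                }
            }
        }
        return (mainLight, additionalLights);
    }

    static void Main()
    {
        var lights = new List<DemoLight>
        {
            new DemoLight(DemoLightType.Directional, true), // main light
            new DemoLight(DemoLightType.Point, true),       // ignored: point light
            new DemoLight(DemoLightType.Spot, true),        // counts: spot light
        };
        var result = Decide(lights, 0, 50.0f, true);
        Console.WriteLine(result.mainLight + " " + result.additionalLights);
    }
}
```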

    static void InitializeLightData(UniversalRenderPipelineAsset settings, NativeArray<VisibleLight> visibleLights,
        int mainLightIndex, out LightData lightData)
    {
        int maxPerObjectAdditionalLights = UniversalRenderPipeline.maxPerObjectLights;
        int maxVisibleAdditionalLights = UniversalRenderPipeline.maxVisibleAdditionalLights;

        lightData.mainLightIndex = mainLightIndex;

        if (settings.additionalLightsRenderingMode != LightRenderingMode.Disabled)
        {
            lightData.additionalLightsCount =
                Math.Min((mainLightIndex != -1) ? visibleLights.Length - 1 : visibleLights.Length,
                    maxVisibleAdditionalLights);
            lightData.maxPerObjectAdditionalLightsCount = Math.Min(settings.maxAdditionalLightsCount, maxPerObjectAdditionalLights);
        }
        else
        {
            lightData.additionalLightsCount = 0;
            lightData.maxPerObjectAdditionalLightsCount = 0;
        }

        lightData.shadeAdditionalLightsPerVertex = settings.additionalLightsRenderingMode == LightRenderingMode.PerVertex;
        lightData.visibleLights = visibleLights;
        lightData.supportsMixedLighting = settings.supportsMixedLighting;
    }

Here we can see the limit on how many lights each object can receive. The UniversalRenderPipeline.maxPerObjectLights property caps the per-object light count depending on the graphics API level:

    public static int maxPerObjectLights
    {
        // No support to bitfield mask and int[] in gles2. Can't index fast more than 4 lights.
        // Check Lighting.hlsl for more details.
        get => (SystemInfo.graphicsDeviceType == GraphicsDeviceType.OpenGLES2) ? 4 : 8;
    }

That is at most four lights per object on GLES 2, and at most eight everywhere else.

2. The InitializeShadowData method:
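The two `Math.Min` clamps in InitializeLightData are easy to check with concrete numbers. The sketch below reproduces only that arithmetic. The value of `maxVisibleAdditionalLights` is not shown in the code above, so it is passed in as a parameter, and the 16 used in `Main` is an arbitrary example value, not Unity's.

```csharp
using System;

static class LightLimits
{
    // 4 on GLES 2, 8 elsewhere, as in maxPerObjectLights.
    public static int MaxPerObjectLights(bool isGles2) => isGles2 ? 4 : 8;

    // Visible lights minus the main light (if any), clamped to the visible-light cap.
    public static int AdditionalLightsCount(int visibleLightCount, int mainLightIndex,
        int maxVisibleAdditionalLights)
    {
        int candidates = (mainLightIndex != -1) ? visibleLightCount - 1 : visibleLightCount;
        return Math.Min(candidates, maxVisibleAdditionalLights);
    }

    // The asset's per-object setting, clamped to the platform limit.
    public static int MaxPerObjectAdditionalLights(int assetMaxAdditionalLights, bool isGles2)
    {
        return Math.Min(assetMaxAdditionalLights, MaxPerObjectLights(isGles2));
    }

    static void Main()
    {
        // 5 visible lights, one of them the main light, example cap of 16.
        Console.WriteLine(AdditionalLightsCount(5, 0, 16));
        // Asset asks for 6 per object; GLES 2 clamps that to the platform limit.
        Console.WriteLine(MaxPerObjectAdditionalLights(6, true));
        Console.WriteLine(MaxPerObjectAdditionalLights(6, false));
    }
}
```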

    static void InitializeShadowData(UniversalRenderPipelineAsset settings, NativeArray<VisibleLight> visibleLights,
        bool mainLightCastShadows, bool additionalLightsCastShadows, out ShadowData shadowData)
    {
        m_ShadowBiasData.Clear();

        for (int i = 0; i < visibleLights.Length; ++i)
        {
            Light light = visibleLights[i].light;
            UniversalAdditionalLightData data = null;
            if (light != null)
            {
    #if UNITY_2019_3_OR_NEWER
                light.gameObject.TryGetComponent(out data);
    #else
                data = light.gameObject.GetComponent<LWRPAdditionalLightData>();
    #endif
            }

            if (data && !data.usePipelineSettings)
                m_ShadowBiasData.Add(new Vector4(light.shadowBias, light.shadowNormalBias, 0.0f, 0.0f));
            else
                m_ShadowBiasData.Add(new Vector4(settings.shadowDepthBias, settings.shadowNormalBias, 0.0f, 0.0f));
        }

        shadowData.bias = m_ShadowBiasData;

        // Until we can have keyword stripping forcing single cascade hard shadows on gles2
        bool supportsScreenSpaceShadows = SystemInfo.graphicsDeviceType != GraphicsDeviceType.OpenGLES2;

        shadowData.supportsMainLightShadows = SystemInfo.supportsShadows && settings.supportsMainLightShadows && mainLightCastShadows;

        // we resolve shadows in screenspace when cascades are enabled to save ALU as computing cascade index + shadowCoord on fragment is expensive
        shadowData.requiresScreenSpaceShadowResolve = shadowData.supportsMainLightShadows && supportsScreenSpaceShadows && settings.shadowCascadeOption != ShadowCascadesOption.NoCascades;

        int shadowCascadesCount;
        switch (settings.shadowCascadeOption)
        {
            case ShadowCascadesOption.FourCascades:
                shadowCascadesCount = 4;
                break;

            case ShadowCascadesOption.TwoCascades:
                shadowCascadesCount = 2;
                break;

            default:
                shadowCascadesCount = 1;
                break;
        }

        shadowData.mainLightShadowCascadesCount = (shadowData.requiresScreenSpaceShadowResolve) ? shadowCascadesCount : 1;
        shadowData.mainLightShadowmapWidth = settings.mainLightShadowmapResolution;
        shadowData.mainLightShadowmapHeight = settings.mainLightShadowmapResolution;

        switch (shadowData.mainLightShadowCascadesCount)
        {
            case 1:
                shadowData.mainLightShadowCascadesSplit = new Vector3(1.0f, 0.0f, 0.0f);
                break;

            case 2:
                shadowData.mainLightShadowCascadesSplit = new Vector3(settings.cascade2Split, 1.0f, 0.0f);
                break;

            default:
                shadowData.mainLightShadowCascadesSplit = settings.cascade4Split;
                break;
        }

        shadowData.supportsAdditionalLightShadows = SystemInfo.supportsShadows && settings.supportsAdditionalLightShadows && additionalLightsCastShadows;
        shadowData.additionalLightsShadowmapWidth = shadowData.additionalLightsShadowmapHeight = settings.additionalLightsShadowmapResolution;
        shadowData.supportsSoftShadows = settings.supportsSoftShadows && (shadowData.supportsMainLightShadows || shadowData.supportsAdditionalLightShadows);
        shadowData.shadowmapDepthBufferBits = 16;
    }

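A few points to note here. By default the per-light data (here, the shadow bias values) is read from the pipeline asset. To give a light its own values, add a UniversalAdditionalLightData component to that light and turn off usePipelineSettings on it.

Screen-space shadows are not supported on GLES 2; they need a device above that level, and they are only used when cascades are enabled.

Shadow quality is determined jointly by the shadow map resolution and the number of cascades.

3. The InitializePostProcessingData method: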
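How the cascade count falls back to 1 can be seen in isolation in the following sketch. `DemoCascadesOption` is a hypothetical enum mirroring the three options used in the `switch` above, and the platform check is reduced to a single `isGles2` flag.

```csharp
using System;

// Hypothetical mirror of the three cascade options used above.
enum DemoCascadesOption { NoCascades, TwoCascades, FourCascades }

static class CascadeSelection
{
    // Screen-space resolve needs main light shadows, a non-GLES2 device, and cascades.
    public static bool RequiresScreenSpaceResolve(bool supportsMainLightShadows, bool isGles2,
        DemoCascadesOption option)
    {
        return supportsMainLightShadows && !isGles2 && option != DemoCascadesOption.NoCascades;
    }

    // The requested cascade count is only honored when shadows are resolved in screen space.
    public static int CascadeCount(bool requiresScreenSpaceResolve, DemoCascadesOption option)
    {
        int requested;
        switch (option)
        {
            case DemoCascadesOption.FourCascades: requested = 4; break;
            case DemoCascadesOption.TwoCascades: requested = 2; break;
            default: requested = 1; break;
        }
        return requiresScreenSpaceResolve ? requested : 1;
    }

    static void Main()
    {
        bool desktop = RequiresScreenSpaceResolve(true, false, DemoCascadesOption.FourCascades);
        bool gles2 = RequiresScreenSpaceResolve(true, true, DemoCascadesOption.FourCascades);

        Console.WriteLine(CascadeCount(desktop, DemoCascadesOption.FourCascades));
        Console.WriteLine(CascadeCount(gles2, DemoCascadesOption.FourCascades));
    }
}
```

In other words, asking for four cascades on a GLES 2 device still ends up with a single cascade, because the count depends on requiresScreenSpaceShadowResolve.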

    static void InitializePostProcessingData(UniversalRenderPipelineAsset settings, out PostProcessingData postProcessingData)
    {
        postProcessingData.gradingMode = settings.supportsHDR
            ? settings.colorGradingMode
            : ColorGradingMode.LowDynamicRange;

        postProcessingData.lutSize = settings.colorGradingLutSize;
    }

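This method is short and mainly sets the ColorGrading parameters. The calls after it are basically plain data assignments, so we skip them.

Next is the renderer.Setup(context, ref renderingData) method: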

    /// <inheritdoc />
    public override void Setup(ScriptableRenderContext context, ref RenderingData renderingData)
    {
        Camera camera = renderingData.cameraData.camera;
        ref CameraData cameraData = ref renderingData.cameraData;
        RenderTextureDescriptor cameraTargetDescriptor = renderingData.cameraData.cameraTargetDescriptor;

        // Special path for depth only offscreen cameras. Only write opaques + transparents.
        bool isOffscreenDepthTexture = camera.targetTexture != null && camera.targetTexture.format == RenderTextureFormat.Depth;
        if (isOffscreenDepthTexture)
        {
            ConfigureCameraTarget(BuiltinRenderTextureType.CameraTarget, BuiltinRenderTextureType.CameraTarget);

            for (int i = 0; i < rendererFeatures.Count; ++i)
                rendererFeatures[i].AddRenderPasses(this, ref renderingData);

            EnqueuePass(m_RenderOpaqueForwardPass);
            EnqueuePass(m_DrawSkyboxPass);
            EnqueuePass(m_RenderTransparentForwardPass);
            return;
        }

        bool mainLightShadows = m_MainLightShadowCasterPass.Setup(ref renderingData);
        bool additionalLightShadows = m_AdditionalLightsShadowCasterPass.Setup(ref renderingData);
        bool resolveShadowsInScreenSpace = mainLightShadows && renderingData.shadowData.requiresScreenSpaceShadowResolve;

        // Depth prepass is generated in the following cases:
        // - We resolve shadows in screen space
        // - Scene view camera always requires a depth texture. We do a depth pre-pass to simplify it and it shouldn't matter much for editor.
        // - If game or offscreen camera requires it we check if we can copy the depth from the rendering opaques pass and use that instead.
        bool requiresDepthPrepass = renderingData.cameraData.isSceneViewCamera ||
            (cameraData.requiresDepthTexture && (!CanCopyDepth(ref renderingData.cameraData)));
        requiresDepthPrepass |= resolveShadowsInScreenSpace;

        // TODO: There's an issue in multiview and depth copy pass. Atm forcing a depth prepass on XR until
        // we have a proper fix.
        if (cameraData.isStereoEnabled && cameraData.requiresDepthTexture)
            requiresDepthPrepass = true;

        bool createColorTexture = RequiresIntermediateColorTexture(ref renderingData, cameraTargetDescriptor)
            || rendererFeatures.Count != 0;

        // If camera requires depth and there's no depth pre-pass we create a depth texture that can be read
        // later by effect requiring it.
        bool createDepthTexture = cameraData.requiresDepthTexture && !requiresDepthPrepass;
        bool postProcessEnabled = cameraData.postProcessEnabled;

        m_ActiveCameraColorAttachment = (createColorTexture) ? m_CameraColorAttachment : RenderTargetHandle.CameraTarget;
        m_ActiveCameraDepthAttachment = (createDepthTexture) ? m_CameraDepthAttachment : RenderTargetHandle.CameraTarget;
        bool intermediateRenderTexture = createColorTexture || createDepthTexture;

        if (intermediateRenderTexture)
            CreateCameraRenderTarget(context, ref cameraData);

        ConfigureCameraTarget(m_ActiveCameraColorAttachment.Identifier(), m_ActiveCameraDepthAttachment.Identifier());

        // if rendering to intermediate render texture we don't have to create msaa backbuffer
        int backbufferMsaaSamples = (intermediateRenderTexture) ? 1 : cameraTargetDescriptor.msaaSamples;

        if (Camera.main == camera && camera.cameraType == CameraType.Game && camera.targetTexture == null)
            SetupBackbufferFormat(backbufferMsaaSamples, renderingData.cameraData.isStereoEnabled);

        for (int i = 0; i < rendererFeatures.Count; ++i)
        {
            rendererFeatures[i].AddRenderPasses(this, ref renderingData);
        }

        int count = activeRenderPassQueue.Count;
        for (int i = count - 1; i >= 0; i--)
        {
            if (activeRenderPassQueue[i] == null)
                activeRenderPassQueue.RemoveAt(i);
        }
        bool hasAfterRendering = activeRenderPassQueue.Find(x => x.renderPassEvent == RenderPassEvent.AfterRendering) != null;

        if (mainLightShadows)
            EnqueuePass(m_MainLightShadowCasterPass);

        if (additionalLightShadows)
            EnqueuePass(m_AdditionalLightsShadowCasterPass);

        if (requiresDepthPrepass)
        {
            m_DepthPrepass.Setup(cameraTargetDescriptor, m_DepthTexture);
            EnqueuePass(m_DepthPrepass);
        }

        if (resolveShadowsInScreenSpace)
        {
            m_ScreenSpaceShadowResolvePass.Setup(cameraTargetDescriptor);
            EnqueuePass(m_ScreenSpaceShadowResolvePass);
        }

        if (postProcessEnabled)
        {
            m_ColorGradingLutPass.Setup(m_ColorGradingLut);
            EnqueuePass(m_ColorGradingLutPass);
        }

        EnqueuePass(m_RenderOpaqueForwardPass);

        if (camera.clearFlags == CameraClearFlags.Skybox && RenderSettings.skybox != null)
            EnqueuePass(m_DrawSkyboxPass);

        // If a depth texture was created we necessarily need to copy it, otherwise we could have render it to a renderbuffer
        if (createDepthTexture)
        {
            m_CopyDepthPass.Setup(m_ActiveCameraDepthAttachment, m_DepthTexture);
            EnqueuePass(m_CopyDepthPass);
        }

        if (renderingData.cameraData.requiresOpaqueTexture)
        {
            // TODO: Downsampling method should be store in the renderer instead of in the asset.
            // We need to migrate this data to renderer. For now, we query the method in the active asset.
            Downsampling downsamplingMethod = UniversalRenderPipeline.asset.opaqueDownsampling;
            m_CopyColorPass.Setup(m_ActiveCameraColorAttachment.Identifier(), m_OpaqueColor, downsamplingMethod);
            EnqueuePass(m_CopyColorPass);
        }

        EnqueuePass(m_RenderTransparentForwardPass);
        EnqueuePass(m_OnRenderObjectCallbackPass);

        bool afterRenderExists = renderingData.cameraData.captureActions != null ||
                                 hasAfterRendering;

        bool requiresFinalPostProcessPass = postProcessEnabled &&
                                            renderingData.cameraData.antialiasing == AntialiasingMode.FastApproximateAntialiasing;

        // if we have additional filters
        // we need to stay in a RT
        if (afterRenderExists)
        {
            bool willRenderFinalPass = (m_ActiveCameraColorAttachment != RenderTargetHandle.CameraTarget);
            // perform post with src / dest the same
            if (postProcessEnabled)
            {
                m_PostProcessPass.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment, m_AfterPostProcessColor, m_ActiveCameraDepthAttachment, m_ColorGradingLut, requiresFinalPostProcessPass, !willRenderFinalPass);
                EnqueuePass(m_PostProcessPass);
            }

            // now blit into the final target
            if (m_ActiveCameraColorAttachment != RenderTargetHandle.CameraTarget)
            {
                if (renderingData.cameraData.captureActions != null)
                {
                    m_CapturePass.Setup(m_ActiveCameraColorAttachment);
                    EnqueuePass(m_CapturePass);
                }

                if (requiresFinalPostProcessPass)
                {
                    m_FinalPostProcessPass.SetupFinalPass(m_ActiveCameraColorAttachment);
                    EnqueuePass(m_FinalPostProcessPass);
                }
                else
                {
                    m_FinalBlitPass.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment);
                    EnqueuePass(m_FinalBlitPass);
                }
            }
        }
        else
        {
            if (postProcessEnabled)
            {
                if (requiresFinalPostProcessPass)
                {
                    m_PostProcessPass.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment, m_AfterPostProcessColor, m_ActiveCameraDepthAttachment, m_ColorGradingLut, true, false);
                    EnqueuePass(m_PostProcessPass);
                    m_FinalPostProcessPass.SetupFinalPass(m_AfterPostProcessColor);
                    EnqueuePass(m_FinalPostProcessPass);
                }
                else
                {
                    m_PostProcessPass.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment, RenderTargetHandle.CameraTarget, m_ActiveCameraDepthAttachment, m_ColorGradingLut, false, true);
                    EnqueuePass(m_PostProcessPass);
                }
            }
            else if (m_ActiveCameraColorAttachment != RenderTargetHandle.CameraTarget)
            {
                m_FinalBlitPass.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment);
                EnqueuePass(m_FinalBlitPass);
            }
        }

    #if UNITY_EDITOR
        if (renderingData.cameraData.isSceneViewCamera)
        {
            m_SceneViewDepthCopyPass.Setup(m_DepthTexture);
            EnqueuePass(m_SceneViewDepthCopyPass);
        }
    #endif
    }

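The main job of this method is to decide, based on the configuration, which passes take part in rendering. Start with the isOffscreenDepthTexture branch at the top.

If the camera renders into a depth texture, only the RenderFeature passes and the opaque, skybox, and transparent passes are enqueued (RenderFeatures are custom passes, covered later).

In theory, though, rendering a depth texture normally does not include transparent objects, and transparent objects do not write depth anyway. Yet the transparent pass is enqueued here; the exact reason is left for us to investigate.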

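Next comes the Setup of the two shadow caster passes. Their return values, together with requiresScreenSpaceShadowResolve, determine whether shadows are resolved in screen space. After that, several factors decide whether a depth prepass is needed.

Then the method decides whether an intermediate color texture is needed (the RT that opaque objects and the skybox are drawn into), and after that how this affects MSAA: when rendering goes through an intermediate texture, the backbuffer is set up with a sample count of 1.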
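The depth prepass decision is pure boolean logic, so it can be lifted out and tried with different inputs. In this sketch every input is a plain `bool` parameter. In particular `canCopyDepth` stands for the result of `CanCopyDepth`, whose implementation is not shown in this article, so it is treated as a given.

```csharp
using System;

static class DepthPrepassDecision
{
    // Follows the same order of checks as the Setup method above.
    public static bool RequiresDepthPrepass(bool isSceneViewCamera, bool requiresDepthTexture,
        bool canCopyDepth, bool resolveShadowsInScreenSpace, bool isStereoEnabled)
    {
        bool requires = isSceneViewCamera || (requiresDepthTexture && !canCopyDepth);
        requires |= resolveShadowsInScreenSpace;

        // XR workaround: a depth texture request always forces a prepass.
        if (isStereoEnabled && requiresDepthTexture)
            requires = true;

        return requires;
    }

    // A separate depth texture is only created when depth is needed but no prepass provides it.
    public static bool CreateDepthTexture(bool requiresDepthTexture, bool requiresDepthPrepass)
    {
        return requiresDepthTexture && !requiresDepthPrepass;
    }

    static void Main()
    {
        // Game camera wants depth and the platform can copy it: no prepass, copy instead.
        bool prepass = RequiresDepthPrepass(false, true, true, false, false);
        Console.WriteLine(prepass + " " + CreateDepthTexture(true, prepass));

        // Same camera with screen-space shadows: the prepass is forced.
        prepass = RequiresDepthPrepass(false, true, true, true, false);
        Console.WriteLine(prepass + " " + CreateDepthTexture(true, prepass));
    }
}
```

The two results are mutually exclusive by construction: depth comes either from the prepass or from a depth texture that is copied after the opaque pass.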

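Next, many passes are added to the render queue: the two shadow caster passes (main light and additional lights), DepthPrePass, ScreenSpaceShadowResolvePass, ColorGradingLutPass, RenderOpaqueForwardPass, DrawSkyboxPass, CopyDepthPass, CopyColorPass, TransparentForwardPass, RenderObjectCallbackPass, PostProcessPass, CapturePass, and FinalBlitPass. Almost every pass has its own condition for being enqueued. What each pass does is mostly clear from its name, and the approach is much the same as in the built-in pipeline. Since we will want to extend URP and use it fluently later on, these passes will each be covered in detail in later articles.

Finally we arrive at the Execute method: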
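The tail of Setup, where post-processing, capture, and the final blit are chosen, is the hardest part to follow by eye. The sketch below models only that decision tree and returns the pass names as strings. The boolean parameters are simplifications of the conditions in the code: `usesIntermediateColor` stands for `m_ActiveCameraColorAttachment != RenderTargetHandle.CameraTarget`, and `fxaa` for the FastApproximateAntialiasing check.

```csharp
using System;
using System.Collections.Generic;

static class TailPassSelection
{
    // Returns the names of the passes enqueued after the transparent pass, in order.
    public static List<string> Select(bool postProcessEnabled, bool fxaa, bool hasCaptureActions,
        bool hasAfterRenderingPass, bool usesIntermediateColor)
    {
        var passes = new List<string>();
        bool afterRenderExists = hasCaptureActions || hasAfterRenderingPass;
        bool requiresFinalPostProcessPass = postProcessEnabled && fxaa;

        if (afterRenderExists)
        {
            if (postProcessEnabled)
                passes.Add("PostProcessPass");

            if (usesIntermediateColor)
            {
                if (hasCaptureActions)
                    passes.Add("CapturePass");
                passes.Add(requiresFinalPostProcessPass ? "FinalPostProcessPass" : "FinalBlitPass");
            }
        }
        else if (postProcessEnabled)
        {
            passes.Add("PostProcessPass");
            if (requiresFinalPostProcessPass)
                passes.Add("FinalPostProcessPass");
        }
        else if (usesIntermediateColor)
        {
            passes.Add("FinalBlitPass");
        }
        return passes;
    }

    static void Main()
    {
        // Post-processing with FXAA, nothing after rendering.
        Console.WriteLine(string.Join(", ", Select(true, true, false, false, true)));
        // No post-processing, but an intermediate color texture was used.
        Console.WriteLine(string.Join(", ", Select(false, false, false, false, true)));
    }
}
```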

    public void Execute(ScriptableRenderContext context, ref RenderingData renderingData)
    {
        Camera camera = renderingData.cameraData.camera;
        this.SetCameraRenderState(context, ref renderingData.cameraData);
        ScriptableRenderer.SortStable(this.m_ActiveRenderPassQueue);

        float time = Application.isPlaying ? Time.time : Time.realtimeSinceStartup;
        float deltaTime = Time.deltaTime;
        float smoothDeltaTime = Time.smoothDeltaTime;
        this.SetShaderTimeValues(time, deltaTime, smoothDeltaTime, (CommandBuffer) null);

        NativeArray<RenderPassEvent> blockEventLimits = new NativeArray<RenderPassEvent>(3, Allocator.Temp, NativeArrayOptions.ClearMemory);
        blockEventLimits[ScriptableRenderer.RenderPassBlock.BeforeRendering] = RenderPassEvent.BeforeRenderingPrepasses;
        blockEventLimits[ScriptableRenderer.RenderPassBlock.MainRendering] = RenderPassEvent.AfterRenderingPostProcessing;
        blockEventLimits[ScriptableRenderer.RenderPassBlock.AfterRendering] = (RenderPassEvent) 2147483647;

        NativeArray<int> blockRanges = new NativeArray<int>(blockEventLimits.Length + 1, Allocator.Temp, NativeArrayOptions.ClearMemory);
        this.FillBlockRanges(blockEventLimits, blockRanges);
        blockEventLimits.Dispose();

        this.SetupLights(context, ref renderingData);
        this.ExecuteBlock(ScriptableRenderer.RenderPassBlock.BeforeRendering, blockRanges, context, ref renderingData, false);

        bool isStereoEnabled = renderingData.cameraData.isStereoEnabled;
        context.SetupCameraProperties(camera, isStereoEnabled);
        this.SetShaderTimeValues(time, deltaTime, smoothDeltaTime, (CommandBuffer) null);
        if (isStereoEnabled)
            this.BeginXRRendering(context, camera);

        this.ExecuteBlock(ScriptableRenderer.RenderPassBlock.MainRendering, blockRanges, context, ref renderingData, false);
        this.DrawGizmos(context, camera, GizmoSubset.PreImageEffects);
        this.ExecuteBlock(ScriptableRenderer.RenderPassBlock.AfterRendering, blockRanges, context, ref renderingData, false);

        if (isStereoEnabled)
            this.EndXRRendering(context, camera);

        this.DrawGizmos(context, camera, GizmoSubset.PostImageEffects);
        this.InternalFinishRendering(context);
        blockRanges.Dispose();
    }

First the camera render state is set, in the SetCameraRenderState method:

    private void SetCameraRenderState(ScriptableRenderContext context, ref CameraData cameraData)
    {
        CommandBuffer commandBuffer = CommandBufferPool.Get("Clear Render State");
        commandBuffer.DisableShaderKeyword(ShaderKeywordStrings.MainLightShadows);
        commandBuffer.DisableShaderKeyword(ShaderKeywordStrings.MainLightShadowCascades);
        commandBuffer.DisableShaderKeyword(ShaderKeywordStrings.AdditionalLightsVertex);
        commandBuffer.DisableShaderKeyword(ShaderKeywordStrings.AdditionalLightsPixel);
        commandBuffer.DisableShaderKeyword(ShaderKeywordStrings.AdditionalLightShadows);
        commandBuffer.DisableShaderKeyword(ShaderKeywordStrings.SoftShadows);
        commandBuffer.DisableShaderKeyword(ShaderKeywordStrings.MixedLightingSubtractive);
        VolumeManager.instance.Update(cameraData.volumeTrigger, cameraData.volumeLayerMask);
        context.ExecuteCommandBuffer(commandBuffer);
        CommandBufferPool.Release(commandBuffer);
    }

As we can see, it mainly resets some shader keywords. Next the pass queue is sorted (with a stable sort) by each pass's renderPassEvent value. The event enum looks like this:

    public enum RenderPassEvent
    {
        BeforeRendering = 0,
        BeforeRenderingShadows = 50, // 0x00000032
        AfterRenderingShadows = 100, // 0x00000064
        BeforeRenderingPrepasses = 150, // 0x00000096
        AfterRenderingPrePasses = 200, // 0x000000C8
        BeforeRenderingOpaques = 250, // 0x000000FA
        AfterRenderingOpaques = 300, // 0x0000012C
        BeforeRenderingSkybox = 350, // 0x0000015E
        AfterRenderingSkybox = 400, // 0x00000190
        BeforeRenderingTransparents = 450, // 0x000001C2
        AfterRenderingTransparents = 500, // 0x000001F4
        BeforeRenderingPostProcessing = 550, // 0x00000226
        AfterRenderingPostProcessing = 600, // 0x00000258
        AfterRendering = 1000, // 0x000003E8
    }
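The sort has to be stable so that passes sharing the same event keep the order in which they were enqueued; `List<T>.Sort` does not guarantee that. The body of `SortStable` is not shown in this article, so the insertion sort below is only one plausible way to get that behavior, not necessarily Unity's implementation. `DemoPass` is a hypothetical stand-in for `ScriptableRenderPass`, and the event values are taken from the enum above.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for ScriptableRenderPass.
class DemoPass
{
    public string Name;
    public int Event;
    public DemoPass(string name, int evt) { Name = name; Event = evt; }
}

static class StableSortDemo
{
    // Insertion sort: elements only move past strictly larger ones,
    // so passes with equal events keep their enqueue order.
    public static void SortStable(List<DemoPass> list)
    {
        for (int i = 1; i < list.Count; ++i)
        {
            DemoPass current = list[i];
            int j = i - 1;
            while (j >= 0 && current.Event < list[j].Event)
            {
                list[j + 1] = list[j];
                j--;
            }
            list[j + 1] = current;
        }
    }

    static void Main()
    {
        var queue = new List<DemoPass>
        {
            new DemoPass("Transparents", 450),
            new DemoPass("CustomA", 300),
            new DemoPass("Opaques", 250),
            new DemoPass("CustomB", 300), // same event as CustomA, enqueued later
        };
        SortStable(queue);
        foreach (DemoPass pass in queue)
            Console.WriteLine(pass.Event + " " + pass.Name);
    }
}
```

After sorting, CustomA still comes before CustomB, which is what lets a renderer feature rely on its enqueue order within one event.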

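Next the global shader time values are set. Then the render events are grouped into three blocks: before rendering, main rendering, and after rendering. After that the lights are set up: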

    public void Setup(ScriptableRenderContext context, ref RenderingData renderingData)
    {
        int additionalLightsCount = renderingData.lightData.additionalLightsCount;
        bool additionalLightsPerVertex = renderingData.lightData.shadeAdditionalLightsPerVertex;
        CommandBuffer commandBuffer = CommandBufferPool.Get("Setup Light Constants");
        this.SetupShaderLightConstants(commandBuffer, ref renderingData);
        CoreUtils.SetKeyword(commandBuffer, ShaderKeywordStrings.AdditionalLightsVertex, additionalLightsCount > 0 & additionalLightsPerVertex);
        CoreUtils.SetKeyword(commandBuffer, ShaderKeywordStrings.AdditionalLightsPixel, additionalLightsCount > 0 && !additionalLightsPerVertex);
        CoreUtils.SetKeyword(commandBuffer, ShaderKeywordStrings.MixedLightingSubtractive, renderingData.lightData.supportsMixedLighting && this.m_MixedLightingSetup == MixedLightingSetup.Subtractive);
        context.ExecuteCommandBuffer(commandBuffer);
        CommandBufferPool.Release(commandBuffer);
    }

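This is a method of the ForwardLights class used by ForwardRenderer. It mainly sets the shader lighting constants and the lighting-related keywords.

Next the render events are executed: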

    private void ExecuteBlock(
        int blockIndex,
        NativeArray<int> blockRanges,
        ScriptableRenderContext context,
        ref RenderingData renderingData,
        bool submit = false)
    {
        int blockRange1 = blockRanges[blockIndex + 1];
        for (int blockRange2 = blockRanges[blockIndex]; blockRange2 < blockRange1; ++blockRange2)
        {
            ScriptableRenderPass activeRenderPass = this.m_ActiveRenderPassQueue[blockRange2];
            this.ExecuteRenderPass(context, activeRenderPass, ref renderingData);
        }
        if (!submit)
            return;
        context.Submit();
    }

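It calls ExecuteRenderPass for every pass in the block's range of the queue, and submits at the end only when submit is true.

Note that Execute passes false for submit in all three calls (BeforeRendering, MainRendering, and AfterRendering), so in this version none of the blocks submits on its own. The actual submission is the context.Submit() at the end of RenderSingleCamera.

Finally, InternalFinishRendering is called to finish rendering: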
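To see how the sorted queue gets split into the three blocks, here is a Unity-free sketch of a block-range computation. The body of `FillBlockRanges` is not shown in this article, so this is an assumed implementation that merely produces ranges in the shape `ExecuteBlock` expects (block `i` covers indices `ranges[i]` up to, but not including, `ranges[i + 1]`). Whether a pass whose event equals a limit belongs to the earlier or the later block is also an assumption of this sketch. Events are plain `int` values taken from the enum above.

```csharp
using System;
using System.Collections.Generic;

static class BlockRangesDemo
{
    // sortedEvents: the renderPassEvent of each pass, already sorted ascending.
    // blockEventLimits: one upper limit per block; the last one is never compared.
    public static int[] FillBlockRanges(IReadOnlyList<int> sortedEvents, int[] blockEventLimits)
    {
        var ranges = new int[blockEventLimits.Length + 1];
        int rangeIndex = 0;
        int pass = 0;
        ranges[rangeIndex++] = 0;

        // Each block ends at the first pass whose event reaches the block's limit.
        for (int i = 0; i < blockEventLimits.Length - 1; ++i)
        {
            while (pass < sortedEvents.Count && sortedEvents[pass] < blockEventLimits[i])
                pass++;
            ranges[rangeIndex++] = pass;
        }

        // The last block takes everything that is left.
        ranges[rangeIndex] = sortedEvents.Count;
        return ranges;
    }

    static void Main()
    {
        // Limits as in Execute: BeforeRenderingPrepasses, AfterRenderingPostProcessing, max int.
        int[] limits = { 150, 600, int.MaxValue };
        var events = new List<int> { 50, 150, 250, 450, 600, 1000 };

        int[] ranges = FillBlockRanges(events, limits);
        string[] blocks = { "BeforeRendering", "MainRendering", "AfterRendering" };
        for (int block = 0; block < blocks.Length; ++block)
            Console.WriteLine(blocks[block] + ": [" + ranges[block] + ", " + ranges[block + 1] + ")");
    }
}
```

With these inputs the shadow pass at event 50 lands in the first block, the passes at 150 through 450 in the main block, and the passes at 600 and 1000 in the last block.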

    private void InternalFinishRendering(ScriptableRenderContext context)
    {
        CommandBuffer commandBuffer = CommandBufferPool.Get("Release Resources");
        for (int index = 0; index < this.m_ActiveRenderPassQueue.Count; ++index)
            this.m_ActiveRenderPassQueue[index].FrameCleanup(commandBuffer);
        this.FinishRendering(commandBuffer);
        this.Clear();
        context.ExecuteCommandBuffer(commandBuffer);
        CommandBufferPool.Release(commandBuffer);
    }

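It mainly cleans up all the passes, releases the RTs, resets the render targets, and clears the pass queue. With that, we have gone through the whole URP flow at a high level. What we have seen so far is mostly URP's structure; the core content lives in the individual passes, which the next article will go through one by one.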
