Kerberos Series: Configuring Flink Authentication

Posted by bainianminguo

Other articles in the big data security series:

https://www.cnblogs.com/bainianminguo/p/12548076.html (installing Kerberos)

https://www.cnblogs.com/bainianminguo/p/12548334.html (Kerberos authentication for Hadoop)

https://www.cnblogs.com/bainianminguo/p/12548175.html (Kerberos authentication for ZooKeeper)

https://www.cnblogs.com/bainianminguo/p/12584732.html (Kerberos authentication for Hive)

https://www.cnblogs.com/bainianminguo/p/12584880.html (Search Guard authentication for Elasticsearch)

https://www.cnblogs.com/bainianminguo/p/12639821.html (Kerberos authentication for Flink)

https://www.cnblogs.com/bainianminguo/p/12639887.html (Kerberos authentication for Spark)

This post covers the Kerberos configuration for Flink.

I. Installing Flink

1. Extract the installation package

tar -zxvf flink-1.8.0-bin-scala_2.11.tgz -C /usr/local/

2. Rename the installation directory

[root@cluster2-host1 local]# mv flink-1.8.0/ flink

3. Edit the environment variable file (/etc/profile)

export FLINK_HOME=/usr/local/flink

export PATH=${PATH}:${FLINK_HOME}/bin

[root@cluster2-host1 data]# source /etc/profile

[root@cluster2-host1 data]# echo $FLINK_HOME

/usr/local/flink
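The two exports above can simply be typed into /etc/profile. As a minimal sketch, the helper below appends them only when they are missing, so running it twice does not duplicate the lines. The function name add_flink_env and its arguments are illustrative and not part of Flink.

```shell
# Append the FLINK_HOME exports to a profile file unless they are already there.
# add_flink_env is a hypothetical helper; both arguments are optional.
add_flink_env() {
    local profile="${1:-/etc/profile}"
    local flink_home="${2:-/usr/local/flink}"

    # Skip the append if an earlier run (or a manual edit) already set FLINK_HOME.
    if grep -q '^export FLINK_HOME=' "$profile" 2>/dev/null; then
        echo "FLINK_HOME already set in $profile"
        return 0
    fi

    {
        echo "export FLINK_HOME=${flink_home}"
        # Single quotes keep the variables unexpanded in the profile file.
        echo 'export PATH=${PATH}:${FLINK_HOME}/bin'
    } >> "$profile"
    echo "FLINK_HOME added to $profile"
}
```

Running add_flink_env with no arguments and then source /etc/profile would reproduce the step above.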

4. Edit the Flink configuration files

[root@cluster2-host1 conf]# vim flink-conf.yaml

[root@cluster2-host1 conf]# pwd

/usr/local/flink/conf

jobmanager.rpc.address: cluster2-host1

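Edit the slaves file, listing one worker host per line

[root@cluster2-host1 conf]# vim slaves

[root@cluster2-host1 conf]# pwd

/usr/local/flink/conf

cluster2-host2

cluster2-host3

Edit the masters file

[root@cluster2-host1 bin]# cat /usr/local/flink/conf/masters

cluster2-host1

Edit yarn-site.xml to raise the ratio of virtual to physical memory that YARN allows for containers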

<property> <name>yarn.nodemanager.vmem-pmem-ratio</name> <value>5</value> </property>

5. Create the flink user

[root@cluster2-host3 hadoop]# useradd flink -g flink

[root@cluster2-host3 hadoop]# passwd flink

Changing password for user flink.

New password:

BAD PASSWORD: The password fails the dictionary check - it is based on a dictionary word

Retype new password:

6. Change the owner and group of the Flink installation directory

[root@cluster2-host3 hadoop]# chown -R flink:flink /usr/local/flink/

7. Start Flink to verify the installation

[root@cluster2-host1 bin]# ./start-cluster.sh

Starting cluster.

[INFO] 1 instance(s) of standalonesession are already running on cluster2-host1.

Starting standalonesession daemon on host cluster2-host1.

Starting taskexecutor daemon on host cluster2-host2.

Starting taskexecutor daemon on host cluster2-host3.

Check the processes

[root@cluster2-host1 bin]# jps

10400 Secur

30817 StandaloneSessionClusterEntrypoint

12661 ResourceManager

12805 NodeManager

4998 QuorumPeerMain

30935 Jps

2631 NameNode
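Reading the jps listing by eye works for one host. As a rough sketch, the check can also be scripted: the helper below reads jps output on standard input and reports whether each expected JVM name appears in the second column. The function name check_jps is an assumption made for illustration.

```shell
# Report whether each expected JVM main-class name appears in `jps` output.
# Reads the listing from stdin; check_jps is a hypothetical helper name.
check_jps() {
    local listing missing=0 name
    listing=$(cat)
    for name in "$@"; do
        # jps prints "<pid> <name>", so compare against the second field exactly.
        if printf '%s\n' "$listing" |
            awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            echo "OK $name"
        else
            echo "MISSING $name"
            missing=1
        fi
    done
    # Non-zero exit status if at least one process was not found.
    return "$missing"
}
```

On the master host this could be called as jps | check_jps StandaloneSessionClusterEntrypoint, which is the process name shown in the listing above.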

Open the web UI

http://10.87.18.34:8081/#/overview

Stop Flink. The steps above start Flink in standalone mode; the steps below start it as a YARN session instead.

Copy the Hadoop dependency jar into Flink's lib directory

scp flink-shaded-hadoop2-uber-2.7.5-1.8.0.jar /usr/local/flink/lib/

Start the YARN session

./yarn-session.sh -n 2 -s 6 -jm 1024 -tm 1024 -nm test -d

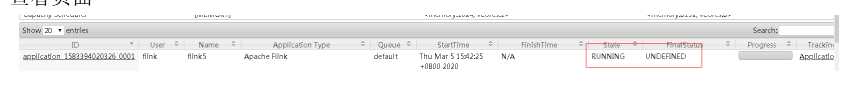

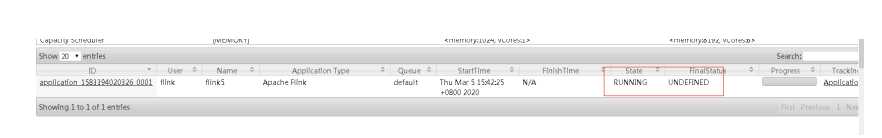

Check the YARN web UI

II. Configuring Kerberos for Flink

1. Create the Kerberos principals and keytab file for Flink

kadmin.local: addprinc flink/cluster2-host1

kadmin.local: addprinc flink/cluster2-host2

kadmin.local: addprinc flink/cluster2-host3

kadmin.local: ktadd -norandkey -k /etc/security/keytab/flink.keytab flink/cluster2-host1

kadmin.local: ktadd -norandkey -k /etc/security/keytab/flink.keytab flink/cluster2-host2

kadmin.local: ktadd -norandkey -k /etc/security/keytab/flink.keytab flink/cluster2-host3
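Before distributing the keytab it may be worth confirming that all three principals were exported into it. The sketch below assumes MIT Kerberos, where klist -kt prints the principal in the last column of each entry; the helper name keytab_has_principals is hypothetical, and the realm is whatever your KDC uses.

```shell
# Check that each given principal (without the @REALM suffix) appears in
# `klist -kt` output read from stdin. keytab_has_principals is a hypothetical name.
keytab_has_principals() {
    local listing missing=0 p
    listing=$(cat)
    for p in "$@"; do
        # An entry matches when its last column starts with "<principal>@".
        if printf '%s\n' "$listing" |
            awk -v p="$p" 'index($NF, p "@") == 1 { found = 1 } END { exit !found }'; then
            echo "found $p"
        else
            echo "missing $p"
            missing=1
        fi
    done
    return "$missing"
}
```

A possible invocation is klist -kt /etc/security/keytab/flink.keytab | keytab_has_principals flink/cluster2-host1 flink/cluster2-host2 flink/cluster2-host3.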

2. Copy the keytab file to the other nodes

[root@cluster2-host1 bin]# scp /etc/security/keytab/flink.keytab root@cluster2-host2:/usr/local/flink/

flink.keytab 100% 1580 1.5KB/s 00:00

[root@cluster2-host1 bin]# scp /etc/security/keytab/flink.keytab root@cluster2-host3:/usr/local/flink/

flink.keytab
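A keytab copied by root ends up owned by root, and anyone who can read it can authenticate as the flink principals. The post does not tighten the permissions, so the following is only a suggested sketch: a small helper (secure_keytab is an illustrative name) that optionally changes the owner and then makes the file readable by its owner only.

```shell
# Restrict a keytab to owner-read-only, optionally changing its owner first.
# secure_keytab is a hypothetical helper; the owner argument is optional.
secure_keytab() {
    local keytab="$1" owner="${2:-}"

    if [ ! -f "$keytab" ]; then
        echo "no such keytab: $keytab" >&2
        return 1
    fi

    # Changing the owner normally requires root.
    if [ -n "$owner" ]; then
        chown "$owner" "$keytab" || return 1
    fi

    chmod 400 "$keytab"
}
```

On each node this might be run as secure_keytab /usr/local/flink/flink.keytab flink:flink, assuming the flink user and group from the installation steps exist there.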

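3. Edit the Flink configuration file (flink-conf.yaml)

security.kerberos.login.use-ticket-cache: true

security.kerberos.login.keytab: /usr/local/flink/flink.keytab

security.kerberos.login.principal: flink/cluster2-host3

yarn.log-aggregation-enable: true

The principal shown here is the one for cluster2-host3. The login output in step 4, taken on cluster2-host1, reports flink/cluster2-host1, so each host presumably sets its own principal.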
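A wrong keytab path or an empty principal in this file only shows up later as a failed login. As a minimal sketch, the helpers below read the two settings from a flat key: value file and check that the keytab they point to is readable. The function names are made up for illustration, and the parsing assumes simple one-line entries like the ones above.

```shell
# Print the value of a "key: value" line from a flat Flink YAML config.
# Both helpers are hypothetical; they do not handle nested or quoted YAML.
flink_conf_value() {
    local key="$1" conf="$2"
    awk -v k="$key" -F': *' '$1 == k { print $2; exit }' "$conf"
}

# Verify that a principal is set and that the configured keytab is readable.
check_keytab_setting() {
    local conf="$1" keytab principal
    keytab=$(flink_conf_value security.kerberos.login.keytab "$conf")
    principal=$(flink_conf_value security.kerberos.login.principal "$conf")

    if [ -z "$principal" ]; then
        echo "principal not set"
        return 1
    fi
    if [ ! -r "$keytab" ]; then
        echo "keytab not readable: $keytab"
        return 1
    fi
    echo "keytab $keytab for $principal"
}
```

For the layout used in this post the call would be check_keytab_setting /usr/local/flink/conf/flink-conf.yaml, run as the user that will start the session.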

4. Start the YARN session. Output like the following indicates that the configuration works.

[flink@cluster2-host1 bin]$ ./yarn-session.sh -n 2 -s 6 -jm 1024 -tm 1024 -nm flink5 -d

2020-03-05 02:42:23,706 INFO org.apache.hadoop.security.UserGroupInformation - Login successful for user flink/cluster2-host1 using keytab file /usr/local/flink/flink.keytab

Check the web UI

Check the processes

[root@cluster2-host1 sbin]# jps

6118 ResourceManager

15975 NameNode

22472 -- process information unavailable

6779 NodeManager

23483 YarnSessionClusterEntrypoint

24717 Master

9790 QuorumPeerMain

25534 Jps

20239 Secur

5. The Kerberos configuration for Flink is complete
