Tensorboard Odds and Ends

Posted by fiona-y


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers some practical points about Tensorboard, and we hope it is useful to you.

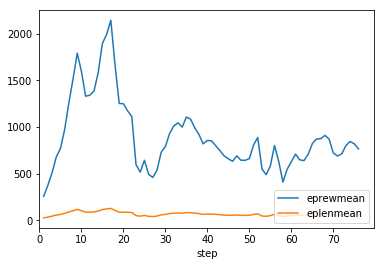

```python
import matplotlib.pyplot as plt
from tensorboard.backend.event_processing import event_accumulator

# Load the log data: path to the event file written during training
ea = event_accumulator.EventAccumulator(
    r'G:\proj_drl\openai-2020-03-02-20-25-20-731960\tb\events.out.tfevents.1583152456.sharmen-System-Product-Name')
ea.Reload()                # read the events from disk
print(ea.scalars.Keys())   # list every scalar tag stored in the file

# Extract the eprewmean curve as (step, value) pairs
eprewmean = ea.scalars.Items('eprewmean')
print(len(eprewmean))
print([(i.step, i.value) for i in eprewmean])

# Plot eprewmean and eplenmean on the same axes
fig = plt.figure(figsize=(6, 4))
ax1 = fig.add_subplot(111)
ax1.plot([i.step for i in eprewmean], [i.value for i in eprewmean], label='eprewmean')
ax1.set_xlim(0)
eplenmean = ea.scalars.Items('eplenmean')
ax1.plot([i.step for i in eplenmean], [i.value for i in eplenmean], label='eplenmean')
ax1.set_xlabel("step")
ax1.set_ylabel("")
plt.legend(loc='lower right')
plt.show()
```

When training finishes, it produces the file events.out.tfevents.1583152456.sharmen-System-Product-Name. The code above extracts the data from this result file and plots it.
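Once loaded, the scalars are ordinary Python objects, so they can also be saved for use in other tools. The following is a minimal sketch that writes (step, value) pairs to CSV with the standard csv module. So that it runs without tensorboard or an event file, it uses a stand-in namedtuple with the same fields (wall_time, step, value) as the ScalarEvent items returned by ea.scalars.Items; the helper name scalars_to_csv is hypothetical, and the sample points are the first three eprewmean values from the printed output.

```python
import csv
import io
from collections import namedtuple

# Stand-in mirroring the fields of tensorboard's ScalarEvent (wall_time, step, value),
# so this sketch runs without tensorboard or a real event file.
ScalarEvent = namedtuple("ScalarEvent", ["wall_time", "step", "value"])


def scalars_to_csv(items, fileobj):
    """Hypothetical helper: write (step, value) pairs of scalar events as CSV rows."""
    writer = csv.writer(fileobj)
    writer.writerow(["step", "value"])
    for event in items:
        writer.writerow([event.step, event.value])


# First three eprewmean points from the printed output.
# wall_time is set to 0.0 because the original output does not show it.
sample = [
    ScalarEvent(0.0, 1, 257.5685119628906),
    ScalarEvent(0.0, 2, 376.15533447265625),
    ScalarEvent(0.0, 3, 510.7492980957031),
]
buffer = io.StringIO()
scalars_to_csv(sample, buffer)
print(buffer.getvalue())
```

With a real EventAccumulator, the same helper could be called as scalars_to_csv(ea.scalars.Items('eprewmean'), f), where f is opened with open('eprewmean.csv', 'w', newline='') as the csv module recommends.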

The output includes:

['eprewmean', 'misc/serial_timesteps', 'misc/nupdates', 'misc/time_elapsed', 'eplenmean', 'misc/total_timesteps', 'loss/approxkl', 'loss/clipfrac', 'loss/policy_entropy', 'misc/explained_variance', 'loss/value_loss', 'loss/policy_loss', 'fps']

76

[(1, 257.5685119628906), (2, 376.15533447265625), (3, 510.7492980957031), (4, 678.4371337890625), (5, 770.0081787109375), (6, 972.59619140625), (7, 1255.8502197265625), (8, 1514.59814453125), (9, 1789.6971435546875), (10, 1595.138427734375), (11, 1329.5426025390625), (12, 1340.0447998046875), (13, 1386.5687255859375), (14, 1583.6361083984375), (15, 1895.1531982421875), (16, 1989.6558837890625), (17, 2142.414306640625), (18, 1665.0595703125), (19, 1251.9947509765625), (20, 1248.9090576171875), (21, 1172.8516845703125), (22, 1111.635498046875), (23, 597.84619140625), (24, 517.642333984375), (25, 644.049560546875), (26, 494.3590393066406), (27, 460.5877990722656), (28, 538.0994262695312), (29, 730.3182983398438), (30, 791.7052001953125), (31, 929.94189453125), (32, 1011.2576904296875), (33, 1045.2503662109375), (34, 998.0652465820312), (35, 1106.904296875), (36, 1084.27099609375), (37, 991.21826171875), (38, 919.396484375), (39, 819.7557983398438), (40, 856.4457397460938), (41, 851.87646484375), (42, 799.0846557617188), (43, 746.997802734375), (44, 689.9527587890625), (45, 660.1163940429688), (46, 633.2286376953125), (47, 691.8467407226562), (48, 644.5111083984375), (49, 643.9899291992188), (50, 661.7527465820312), (51, 807.190185546875), (52, 888.402587890625), (53, 551.6712646484375), (54, 489.34478759765625), (55, 584.1572875976562), (56, 801.443359375), (57, 630.820556640625), (58, 410.12908935546875), (59, 548.2344360351562), (60, 628.5557250976562), (61, 709.9279174804688), (62, 648.9661254882812), (63, 640.58349609375), (64, 707.22265625), (65, 822.0987548828125), (66, 871.9246826171875), (67, 874.5511474609375), (68, 910.3705444335938), (69, 871.1287841796875), (70, 722.210693359375), (71, 689.532958984375), (72, 712.7176513671875), (73, 800.1871337890625), (74, 845.607177734375), (75, 819.856689453125), (76, 766.2372436523438)]
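Because the extracted curve is just a list of (step, value) tuples, it can be analyzed directly in Python. As a small illustration, the sketch below finds the step with the highest eprewmean; it uses the first 20 pairs copied from the output above, and the helper name peak is hypothetical.

```python
# First 20 (step, value) pairs copied from the eprewmean output above.
eprewmean = [
    (1, 257.5685119628906), (2, 376.15533447265625), (3, 510.7492980957031),
    (4, 678.4371337890625), (5, 770.0081787109375), (6, 972.59619140625),
    (7, 1255.8502197265625), (8, 1514.59814453125), (9, 1789.6971435546875),
    (10, 1595.138427734375), (11, 1329.5426025390625), (12, 1340.0447998046875),
    (13, 1386.5687255859375), (14, 1583.6361083984375), (15, 1895.1531982421875),
    (16, 1989.6558837890625), (17, 2142.414306640625), (18, 1665.0595703125),
    (19, 1251.9947509765625), (20, 1248.9090576171875),
]


def peak(pairs):
    """Hypothetical helper: return the (step, value) pair with the largest value."""
    return max(pairs, key=lambda pair: pair[1])


best_step, best_value = peak(eprewmean)
print(f"peak eprewmean {best_value:.1f} at step {best_step}")
# prints: peak eprewmean 2142.4 at step 17
```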


The result looks roughly like this.

Reference: https://blog.csdn.net/nima1994/article/details/82844988

How to open TensorBoard:

To view the TensorBoard log files, run: tensorboard --logdir G:/Python/Python_study/logs --host=127.0.0.1
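If you prefer to start TensorBoard from a Python script, the same command can be assembled as an argument list and handed to subprocess. This is a minimal sketch assuming the tensorboard executable is on PATH; the helper names tensorboard_command and launch are hypothetical, while --logdir, --host and --port are TensorBoard's own flags (6006 is its default port).

```python
import subprocess


def tensorboard_command(logdir, host="127.0.0.1", port=6006):
    """Hypothetical helper: build the argv list for launching TensorBoard."""
    return ["tensorboard", "--logdir", logdir, "--host", host, "--port", str(port)]


def launch(logdir, **kwargs):
    """Start TensorBoard in the background (assumes `tensorboard` is on PATH)."""
    return subprocess.Popen(tensorboard_command(logdir, **kwargs))


if __name__ == "__main__":
    cmd = tensorboard_command("G:/Python/Python_study/logs")
    # Show the equivalent shell command instead of starting the server.
    print(" ".join(cmd))
```

Passing a list rather than a single string avoids shell quoting problems with Windows paths. After launch(...) starts the server, the dashboard is normally reachable at http://127.0.0.1:6006 in a browser.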

Environment: Windows, Anaconda 3.6, TensorFlow 1.12.0

Reference: https://jingyan.baidu.com/article/e9fb46e1c55ac93520f7666b.html
