Deep Residual Network + Adaptively Parametric ReLU Activation Function (Tuning Log 1)

Posted shisuzanian


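This post combines a deep residual network with the adaptively parametric ReLU (APReLU) activation function to build a small network (9 residual blocks, with relatively few convolution kernels per layer: 8 at the fewest and 32 at the most) and reports a first attempt on the CIFAR-10 dataset.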
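The block count and channel widths can be checked directly from the `residual_block` calls in the Keras script in this post. The following is a minimal sketch in plain Python; `STAGES` and `summarize` are illustrative names that do not appear in the original script.

```python
# (number of blocks, output channels, downsample?) for each residual_block
# call in the post's model definition. STAGES/summarize are hypothetical helpers.
STAGES = [
    (3, 8, False),
    (1, 16, True),
    (2, 16, False),
    (1, 32, True),
    (2, 32, False),
]


def summarize(stages, input_size=32):
    """Return (total blocks, min channels, max channels, final feature-map size)."""
    size = input_size
    total_blocks = 0
    widths = []
    for nb_blocks, channels, downsample in stages:
        total_blocks += nb_blocks
        widths.append(channels)
        if downsample:
            # In the script, every block of a downsampling call uses stride 2
            # with 'same' padding, which halves the spatial size.
            for _ in range(nb_blocks):
                size //= 2
    return total_blocks, min(widths), max(widths), size


print(summarize(STAGES))  # (9, 8, 32, 8)
```

So the 32x32 CIFAR-10 input ends up as an 8x8 feature map with 32 channels before global average pooling.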

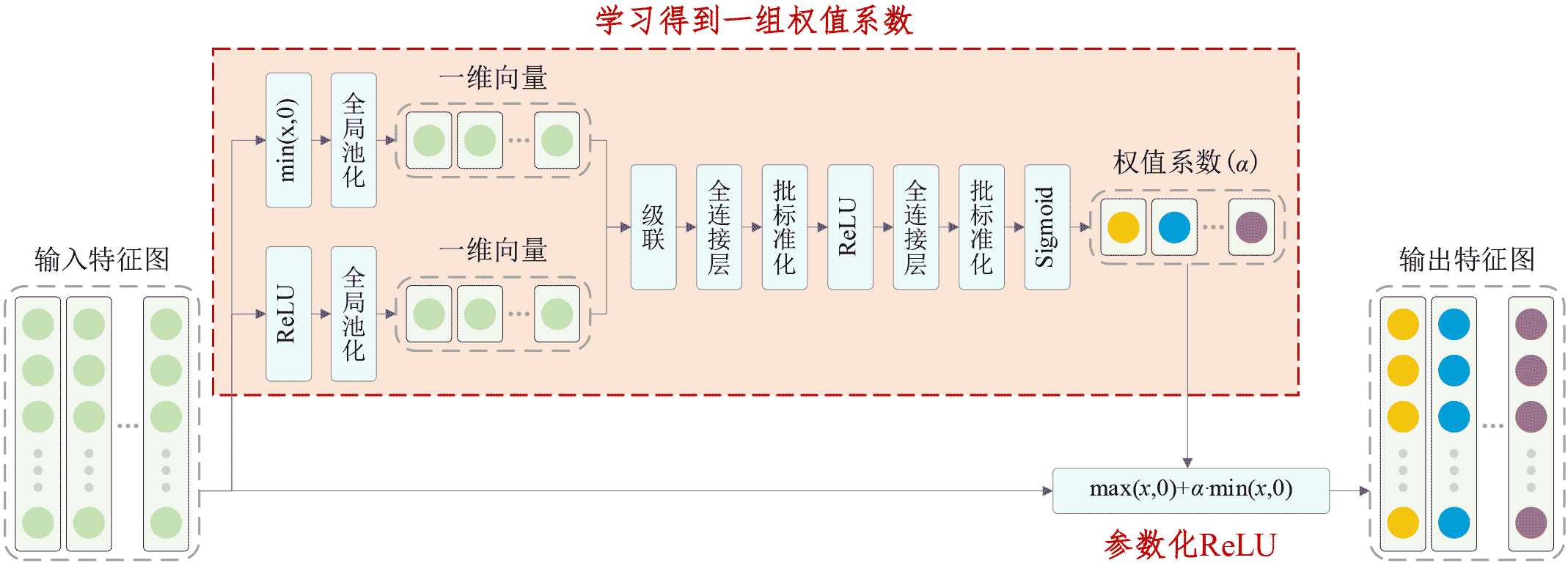

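APReLU was originally proposed for fault diagnosis based on vibration signals and is an improvement on the parametric ReLU (PReLU). Its basic principle is illustrated in the accompanying figure: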
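Read off the `aprelu` function in the Keras script in this post, the activation can be written roughly as follows. The symbols ($x$, $y$, $z$, $\alpha$, $W_1$, $W_2$, $b_1$, $b_2$) are notation chosen here for illustration and may differ from the paper's.

$$
\begin{aligned}
z &= \big[\,\mathrm{GAP}(\min(x, 0)),\ \mathrm{GAP}(\max(x, 0))\,\big] \in \mathbb{R}^{2C} \\
\alpha &= \sigma\Big(\mathrm{BN}\big(W_2\,\mathrm{ReLU}(\mathrm{BN}(W_1 z + b_1)) + b_2\big)\Big) \in (0, 1)^{C} \\
y &= \max(x, 0) + \alpha \odot \min(x, 0)
\end{aligned}
$$

Here $C$ is the number of channels, $\mathrm{GAP}$ is global average pooling over the spatial dimensions, $W_1$ maps $2C$ values to $C/4$, $W_2$ maps $C/4$ values to $C$, and $\alpha$ is broadcast over the spatial dimensions. Unlike PReLU, where the negative-side slope is a fixed learned parameter, here the slope is computed from each input sample.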

The Keras code is as follows:

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
Created on Tue Apr 14 04:17:45 2020
Implemented using TensorFlow 1.0.1 and Keras 2.2.1

Minghang Zhao, Shisheng Zhong, Xuyun Fu, Baoping Tang, Shaojiang Dong, Michael Pecht,
Deep Residual Networks with Adaptively Parametric Rectifier Linear Units for Fault Diagnosis,
IEEE Transactions on Industrial Electronics, 2020, DOI: 10.1109/TIE.2020.2972458

@author: Minghang Zhao
"""

from __future__ import print_function
import keras
import numpy as np
from keras.datasets import cifar10
from keras.layers import Dense, Conv2D, BatchNormalization, Activation, Minimum
from keras.layers import AveragePooling2D, Input, GlobalAveragePooling2D, Concatenate, Reshape
from keras.regularizers import l2
from keras import backend as K
from keras.models import Model
from keras import optimizers
from keras.preprocessing.image import ImageDataGenerator
from keras.callbacks import LearningRateScheduler
K.set_learning_phase(1)

# The data, split between train and test sets
(x_train, y_train), (x_test, y_test) = cifar10.load_data()

# Scale pixels to [0, 1] and subtract the training-set mean
x_train = x_train.astype('float32') / 255.
x_test = x_test.astype('float32') / 255.
x_test = x_test-np.mean(x_train)
x_train = x_train-np.mean(x_train)
print('x_train shape:', x_train.shape)
print(x_train.shape[0], 'train samples')
print(x_test.shape[0], 'test samples')

# convert class vectors to binary class matrices
y_train = keras.utils.to_categorical(y_train, 10)
y_test = keras.utils.to_categorical(y_test, 10)

# Schedule the learning rate: multiply it by 0.1 every 400 epochs
def scheduler(epoch):
    if epoch % 400 == 0 and epoch != 0:
        lr = K.get_value(model.optimizer.lr)
        K.set_value(model.optimizer.lr, lr * 0.1)
        print("lr changed to {}".format(lr * 0.1))
    return K.get_value(model.optimizer.lr)

# An adaptively parametric rectifier linear unit (APReLU)
def aprelu(inputs):
    # get the number of channels
    channels = inputs.get_shape().as_list()[-1]
    # get a zero feature map
    zeros_input = keras.layers.subtract([inputs, inputs])
    # get a feature map with only positive features
    pos_input = Activation('relu')(inputs)
    # get a feature map with only negative features
    neg_input = Minimum()([inputs,zeros_input])
    # define a network to obtain the scaling coefficients
    scales_p = GlobalAveragePooling2D()(pos_input)
    scales_n = GlobalAveragePooling2D()(neg_input)
    scales = Concatenate()([scales_n, scales_p])
    scales = Dense(channels//4, activation='linear', kernel_initializer='he_normal', kernel_regularizer=l2(1e-4))(scales)
    scales = BatchNormalization()(scales)
    scales = Activation('relu')(scales)
    scales = Dense(channels, activation='linear', kernel_initializer='he_normal', kernel_regularizer=l2(1e-4))(scales)
    scales = BatchNormalization()(scales)
    scales = Activation('sigmoid')(scales)
    scales = Reshape((1,1,channels))(scales)
    # apply a parametric relu
    neg_part = keras.layers.multiply([scales, neg_input])
    return keras.layers.add([pos_input, neg_part])

# Residual Block
def residual_block(incoming, nb_blocks, out_channels, downsample=False,
                   downsample_strides=2):

    residual = incoming
    in_channels = incoming.get_shape().as_list()[-1]

    for i in range(nb_blocks):

        identity = residual

        if not downsample:
            downsample_strides = 1

        residual = BatchNormalization()(residual)
        residual = aprelu(residual)
        residual = Conv2D(out_channels, 3, strides=(downsample_strides, downsample_strides),
                          padding='same', kernel_initializer='he_normal',
                          kernel_regularizer=l2(1e-4))(residual)

        residual = BatchNormalization()(residual)
        residual = aprelu(residual)
        residual = Conv2D(out_channels, 3, padding='same', kernel_initializer='he_normal',
                          kernel_regularizer=l2(1e-4))(residual)

        # Downsampling
        if downsample_strides > 1:
            identity = AveragePooling2D(pool_size=(1,1), strides=(2,2))(identity)

        # Zero_padding to match channels
        if in_channels != out_channels:
            zeros_identity = keras.layers.subtract([identity, identity])
            identity = keras.layers.concatenate([identity, zeros_identity])
            in_channels = out_channels

        residual = keras.layers.add([residual, identity])

    return residual


# define and train a model
inputs = Input(shape=(32, 32, 3))
net = Conv2D(8, 3, padding='same', kernel_initializer='he_normal', kernel_regularizer=l2(1e-4))(inputs)
net = residual_block(net, 3, 8, downsample=False)
net = residual_block(net, 1, 16, downsample=True)
net = residual_block(net, 2, 16, downsample=False)
net = residual_block(net, 1, 32, downsample=True)
net = residual_block(net, 2, 32, downsample=False)
net = BatchNormalization()(net)
net = aprelu(net)
net = GlobalAveragePooling2D()(net)
outputs = Dense(10, activation='softmax', kernel_initializer='he_normal', kernel_regularizer=l2(1e-4))(net)
model = Model(inputs=inputs, outputs=outputs)
sgd = optimizers.SGD(lr=0.1, decay=0., momentum=0.9, nesterov=True)
model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

# data augmentation
datagen = ImageDataGenerator(
    # randomly rotate images by up to 30 degrees
    rotation_range=30,
    # randomly flip images
    horizontal_flip=True,
    # randomly shift images horizontally
    width_shift_range=0.125,
    # randomly shift images vertically
    height_shift_range=0.125)

reduce_lr = LearningRateScheduler(scheduler)
# fit the model on the batches generated by datagen.flow().
model.fit_generator(datagen.flow(x_train, y_train, batch_size=100),
                    validation_data=(x_test, y_test), epochs=1000,
                    verbose=1, callbacks=[reduce_lr], workers=4)

# get results
K.set_learning_phase(0)
DRSN_train_score1 = model.evaluate(x_train, y_train, batch_size=100, verbose=0)
print('Train loss:', DRSN_train_score1[0])
print('Train accuracy:', DRSN_train_score1[1])
DRSN_test_score1 = model.evaluate(x_test, y_test, batch_size=100, verbose=0)
print('Test loss:', DRSN_test_score1[0])
print('Test accuracy:', DRSN_test_score1[1])
```
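To make the arithmetic inside `aprelu` concrete, here is a small framework-free sketch for a single channel. It assumes a hand-picked coefficient of 0.25; in the actual layer the coefficient comes out of the Dense/BatchNormalization/sigmoid sub-network. `channel_statistics` and `aprelu_channel` are hypothetical helper names, not part of the script.

```python
def channel_statistics(values):
    """Global averages of the negative and positive parts of one channel.

    These two numbers per channel are what the script feeds into the
    small network that produces the scaling coefficients.
    """
    neg_mean = sum(min(v, 0.0) for v in values) / len(values)
    pos_mean = sum(max(v, 0.0) for v in values) / len(values)
    return neg_mean, pos_mean


def aprelu_channel(values, scale):
    """Compute max(x, 0) + scale * min(x, 0) for every value in one channel."""
    return [max(v, 0.0) + scale * min(v, 0.0) for v in values]


channel = [-2.0, 0.0, 3.0, 1.0]
print(channel_statistics(channel))    # (-0.5, 1.0)
print(aprelu_channel(channel, 0.25))  # [-0.5, 0.0, 3.0, 1.0]
```

Positive values pass through unchanged, while negative values are shrunk by the coefficient, which the sigmoid keeps between 0 and 1.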

Part of the training output is shown below:

```
Epoch 755/1000 19s 39ms/step - loss: 0.3548 - acc: 0.9084 - val_loss: 0.4584 - val_acc: 0.8794
Epoch 756/1000 19s 38ms/step - loss: 0.3526 - acc: 0.9098 - val_loss: 0.4647 - val_acc: 0.8727
Epoch 757/1000 19s 38ms/step - loss: 0.3516 - acc: 0.9083 - val_loss: 0.4516 - val_acc: 0.8815
Epoch 758/1000 19s 38ms/step - loss: 0.3508 - acc: 0.9098 - val_loss: 0.4639 - val_acc: 0.8785
Epoch 759/1000 19s 38ms/step - loss: 0.3565 - acc: 0.9078 - val_loss: 0.4542 - val_acc: 0.8751
Epoch 760/1000 19s 38ms/step - loss: 0.3556 - acc: 0.9077 - val_loss: 0.4681 - val_acc: 0.8729
Epoch 761/1000 19s 38ms/step - loss: 0.3519 - acc: 0.9089 - val_loss: 0.4459 - val_acc: 0.8824
Epoch 762/1000 19s 39ms/step - loss: 0.3523 - acc: 0.9085 - val_loss: 0.4528 - val_acc: 0.8766
Epoch 763/1000 19s 38ms/step - loss: 0.3565 - acc: 0.9071 - val_loss: 0.4621 - val_acc: 0.8773
Epoch 764/1000 19s 38ms/step - loss: 0.3532 - acc: 0.9084 - val_loss: 0.4570 - val_acc: 0.8751
Epoch 765/1000 19s 38ms/step - loss: 0.3561 - acc: 0.9068 - val_loss: 0.4551 - val_acc: 0.8780
Epoch 766/1000 19s 38ms/step - loss: 0.3515 - acc: 0.9093 - val_loss: 0.4583 - val_acc: 0.8796
Epoch 767/1000 19s 38ms/step - loss: 0.3532 - acc: 0.9083 - val_loss: 0.4591 - val_acc: 0.8805
Epoch 768/1000 19s 38ms/step - loss: 0.3531 - acc: 0.9088 - val_loss: 0.4725 - val_acc: 0.8733
Epoch 769/1000 19s 38ms/step - loss: 0.3556 - acc: 0.9082 - val_loss: 0.4599 - val_acc: 0.8796
Epoch 770/1000 19s 38ms/step - loss: 0.3540 - acc: 0.9087 - val_loss: 0.4635 - val_acc: 0.8792
Epoch 771/1000 19s 39ms/step - loss: 0.3549 - acc: 0.9068 - val_loss: 0.4534 - val_acc: 0.8769
Epoch 772/1000 19s 38ms/step - loss: 0.3560 - acc: 0.9080 - val_loss: 0.4550 - val_acc: 0.8790
Epoch 773/1000 19s 38ms/step - loss: 0.3569 - acc: 0.9066 - val_loss: 0.4524 - val_acc: 0.8788
Epoch 774/1000 19s 38ms/step - loss: 0.3542 - acc: 0.9071 - val_loss: 0.4542 - val_acc: 0.8802
Epoch 775/1000 19s 38ms/step - loss: 0.3532 - acc: 0.9085 - val_loss: 0.4764 - val_acc: 0.8734
Epoch 776/1000 19s 38ms/step - loss: 0.3549 - acc: 0.9072 - val_loss: 0.4720 - val_acc: 0.8732
Epoch 777/1000 19s 39ms/step - loss: 0.3537 - acc: 0.9078 - val_loss: 0.4567 - val_acc: 0.8778
Epoch 778/1000 19s 38ms/step - loss: 0.3537 - acc: 0.9073 - val_loss: 0.4579 - val_acc: 0.8759
Epoch 779/1000 19s 38ms/step - loss: 0.3538 - acc: 0.9090 - val_loss: 0.4735 - val_acc: 0.8716
Epoch 780/1000 19s 38ms/step - loss: 0.3584 - acc: 0.9066 - val_loss: 0.4611 - val_acc: 0.8756
Epoch 781/1000 19s 38ms/step - loss: 0.3558 - acc: 0.9077 - val_loss: 0.4480 - val_acc: 0.8815
Epoch 782/1000 19s 38ms/step - loss: 0.3546 - acc: 0.9073 - val_loss: 0.4704 - val_acc: 0.8767
Epoch 783/1000 19s 38ms/step - loss: 0.3547 - acc: 0.9082 - val_loss: 0.4604 - val_acc: 0.8808
Epoch 784/1000 19s 38ms/step - loss: 0.3502 - acc: 0.9099 - val_loss: 0.4570 - val_acc: 0.8805
Epoch 785/1000 19s 39ms/step - loss: 0.3517 - acc: 0.9109 - val_loss: 0.4589 - val_acc: 0.8781
Epoch 786/1000 19s 38ms/step - loss: 0.3530 - acc: 0.9077 - val_loss: 0.4554 - val_acc: 0.8791
Epoch 787/1000 19s 38ms/step - loss: 0.3563 - acc: 0.9073 - val_loss: 0.4650 - val_acc: 0.8742
Epoch 788/1000 19s 38ms/step - loss: 0.3544 - acc: 0.9093 - val_loss: 0.4657 - val_acc: 0.8739
Epoch 789/1000 19s 38ms/step - loss: 0.3521 - acc: 0.9096 - val_loss: 0.4550 - val_acc: 0.8786
Epoch 790/1000 19s 38ms/step - loss: 0.3507 - acc: 0.9092 - val_loss: 0.4748 - val_acc: 0.8742
Epoch 791/1000 19s 38ms/step - loss: 0.3505 - acc: 0.9094 - val_loss: 0.4734 - val_acc: 0.8731
Epoch 792/1000 19s 39ms/step - loss: 0.3565 - acc: 0.9088 - val_loss: 0.4669 - val_acc: 0.8729
Epoch 793/1000 19s 39ms/step - loss: 0.3502 - acc: 0.9100 - val_loss: 0.4652 - val_acc: 0.8771
Epoch 794/1000 19s 38ms/step - loss: 0.3514 - acc: 0.9088 - val_loss: 0.4752 - val_acc: 0.8723
Epoch 795/1000 19s 38ms/step - loss: 0.3538 - acc: 0.9084 - val_loss: 0.4646 - val_acc: 0.8786
Epoch 796/1000 19s 38ms/step - loss: 0.3541 - acc: 0.9080 - val_loss: 0.4740 - val_acc: 0.8780
Epoch 797/1000 19s 38ms/step - loss: 0.3544 - acc: 0.9076 - val_loss: 0.4562 - val_acc: 0.8796
Epoch 798/1000 19s 38ms/step - loss: 0.3551 - acc: 0.9080 - val_loss: 0.4681 - val_acc: 0.8738
Epoch 799/1000 19s 38ms/step - loss: 0.3515 - acc: 0.9091 - val_loss: 0.4545 - val_acc: 0.8801
Epoch 800/1000 19s 38ms/step - loss: 0.3545 - acc: 0.9088 - val_loss: 0.4552 - val_acc: 0.8809
Epoch 801/1000
lr changed to 0.0009999999776482583
19s 38ms/step - loss: 0.3211 - acc: 0.9201 - val_loss: 0.4238 - val_acc: 0.8918
Epoch 802/1000 19s 38ms/step - loss: 0.3056 - acc: 0.9259 - val_loss: 0.4218 - val_acc: 0.8925
Epoch 803/1000 19s 39ms/step - loss: 0.2945 - acc: 0.9303 - val_loss: 0.4213 - val_acc: 0.8942
Epoch 804/1000 19s 38ms/step - loss: 0.2926 - acc: 0.9300 - val_loss: 0.4196 - val_acc: 0.8944
Epoch 805/1000 19s 38ms/step - loss: 0.2877 - acc: 0.9332 - val_loss: 0.4221 - val_acc: 0.8914
Epoch 806/1000 19s 38ms/step - loss: 0.2899 - acc: 0.9309 - val_loss: 0.4191 - val_acc: 0.8930
Epoch 807/1000 19s 38ms/step - loss: 0.2853 - acc: 0.9334 - val_loss: 0.4184 - val_acc: 0.8939
Epoch 808/1000 19s 38ms/step - loss: 0.2868 - acc: 0.9313 - val_loss: 0.4171 - val_acc: 0.8934
Epoch 809/1000 19s 38ms/step - loss: 0.2806 - acc: 0.9335 - val_loss: 0.4172 - val_acc: 0.8920
Epoch 810/1000 19s 38ms/step - loss: 0.2769 - acc: 0.9356 - val_loss: 0.4212 - val_acc: 0.8934
Epoch 811/1000 19s 38ms/step - loss: 0.2816 - acc: 0.9338 - val_loss: 0.4186 - val_acc: 0.8899
Epoch 812/1000 19s 38ms/step - loss: 0.2791 - acc: 0.9347 - val_loss: 0.4183 - val_acc: 0.8924
Epoch 813/1000 19s 39ms/step - loss: 0.2788 - acc: 0.9345 - val_loss: 0.4217 - val_acc: 0.8918
Epoch 814/1000 19s 39ms/step - loss: 0.2765 - acc: 0.9362 - val_loss: 0.4181 - val_acc: 0.8947
Epoch 815/1000 19s 39ms/step - loss: 0.2778 - acc: 0.9345 - val_loss: 0.4234 - val_acc: 0.8934
Epoch 816/1000 19s 38ms/step - loss: 0.2760 - acc: 0.9347 - val_loss: 0.4227 - val_acc: 0.8913
Epoch 817/1000 19s 39ms/step - loss: 0.2740 - acc: 0.9358 - val_loss: 0.4221 - val_acc: 0.8954
Epoch 818/1000 19s 39ms/step - loss: 0.2733 - acc: 0.9369 - val_loss: 0.4209 - val_acc: 0.8929
Epoch 819/1000 19s 39ms/step - loss: 0.2741 - acc: 0.9354 - val_loss: 0.4224 - val_acc: 0.8921
Epoch 820/1000 19s 39ms/step - loss: 0.2732 - acc: 0.9354 - val_loss: 0.4211 - val_acc: 0.8927
Epoch 821/1000 19s 38ms/step - loss: 0.2730 - acc: 0.9356 - val_loss: 0.4227 - val_acc: 0.8937
Epoch 822/1000 19s 39ms/step - loss: 0.2737 - acc: 0.9361 - val_loss: 0.4218 - val_acc: 0.8938
Epoch 823/1000 19s 39ms/step - loss: 0.2691 - acc: 0.9367 - val_loss: 0.4243 - val_acc: 0.8930
Epoch 824/1000 19s 39ms/step - loss: 0.2712 - acc: 0.9372 - val_loss: 0.4230 - val_acc: 0.8934
Epoch 825/1000 19s 39ms/step - loss: 0.2676 - acc: 0.9385 - val_loss: 0.4271 - val_acc: 0.8913
Epoch 826/1000 19s 39ms/step - loss: 0.2703 - acc: 0.9356 - val_loss: 0.4237 - val_acc: 0.8921
Epoch 827/1000 19s 39ms/step - loss: 0.2673 - acc: 0.9379 - val_loss: 0.4238 - val_acc: 0.8922
Epoch 828/1000 19s 39ms/step - loss: 0.2682 - acc: 0.9370 - val_loss: 0.4199 - val_acc: 0.8946
Epoch 829/1000 19s 39ms/step - loss: 0.2678 - acc: 0.9368 - val_loss: 0.4235 - val_acc: 0.8921
Epoch 830/1000 19s 39ms/step - loss: 0.2639 - acc: 0.9380 - val_loss: 0.4213 - val_acc: 0.8934
Epoch 831/1000 19s 38ms/step - loss: 0.2653 - acc: 0.9377 - val_loss: 0.4210 - val_acc: 0.8946
Epoch 832/1000 19s 39ms/step - loss: 0.2662 - acc: 0.9373 - val_loss: 0.4214 - val_acc: 0.8946
Epoch 833/1000 19s 39ms/step - loss: 0.2663 - acc: 0.9383 - val_loss: 0.4239 - val_acc: 0.8908
Epoch 834/1000 19s 39ms/step - loss: 0.2629 - acc: 0.9385 - val_loss: 0.4236 - val_acc: 0.8909
Epoch 835/1000 19s 39ms/step - loss: 0.2654 - acc: 0.9375 - val_loss: 0.4201 - val_acc: 0.8931
Epoch 836/1000 19s 39ms/step - loss: 0.2651 - acc: 0.9382 - val_loss: 0.4227 - val_acc: 0.8921
Epoch 837/1000 19s 38ms/step - loss: 0.2660 - acc: 0.9376 - val_loss: 0.4253 - val_acc: 0.8911
Epoch 838/1000 19s 38ms/step - loss: 0.2627 - acc: 0.9371 - val_loss: 0.4218 - val_acc: 0.8926
Epoch 839/1000 19s 38ms/step - loss: 0.2644 - acc: 0.9380 - val_loss: 0.4216 - val_acc: 0.8921
Epoch 840/1000 19s 39ms/step - loss: 0.2617 - acc: 0.9389 - val_loss: 0.4208 - val_acc: 0.8927
Epoch 841/1000 19s 39ms/step - loss: 0.2622 - acc: 0.9392 - val_loss: 0.4220 - val_acc: 0.8930
Epoch 842/1000 19s 39ms/step - loss: 0.2632 - acc: 0.9381 - val_loss: 0.4228 - val_acc: 0.8927
Epoch 843/1000 19s 39ms/step - loss: 0.2607 - acc: 0.9390 - val_loss: 0.4271 - val_acc: 0.8915
Epoch 844/1000 19s 39ms/step - loss: 0.2608 - acc: 0.9397 - val_loss: 0.4238 - val_acc: 0.8909
Epoch 845/1000 19s 39ms/step - loss: 0.2606 - acc: 0.9392 - val_loss: 0.4214 - val_acc: 0.8920
Epoch 846/1000 19s 38ms/step - loss: 0.2612 - acc: 0.9391 - val_loss: 0.4245 - val_acc: 0.8928
Epoch 847/1000 19s 38ms/step - loss: 0.2559 - acc: 0.9409 - val_loss: 0.4236 - val_acc: 0.8924
Epoch 848/1000 19s 39ms/step - loss: 0.2571 - acc: 0.9405 - val_loss: 0.4235 - val_acc: 0.8920
Epoch 849/1000 19s 39ms/step - loss: 0.2621 - acc: 0.9381 - val_loss: 0.4209 - val_acc: 0.8946
Epoch 850/1000 19s 38ms/step - loss: 0.2567 - acc: 0.9389 - val_loss: 0.4245 - val_acc: 0.8943
Epoch 851/1000 19s 38ms/step - loss: 0.2567 - acc: 0.9392 - val_loss: 0.4223 - val_acc: 0.8917
Epoch 852/1000 19s 38ms/step - loss: 0.2596 - acc: 0.9383 - val_loss: 0.4222 - val_acc: 0.8915
Epoch 853/1000 19s 38ms/step - loss: 0.2542 - acc: 0.9406 - val_loss: 0.4214 - val_acc: 0.8935
Epoch 854/1000 19s 38ms/step - loss: 0.2572 - acc: 0.9403 - val_loss: 0.4232 - val_acc: 0.8905
Epoch 855/1000 19s 38ms/step - loss: 0.2573 - acc: 0.9394 - val_loss: 0.4231 - val_acc: 0.8906
Epoch 856/1000 19s 39ms/step - loss: 0.2558 - acc: 0.9394 - val_loss: 0.4230 - val_acc: 0.8926
Epoch 857/1000 19s 38ms/step - loss: 0.2557 - acc: 0.9400 - val_loss: 0.4244 - val_acc: 0.8921
Epoch 858/1000 19s 39ms/step - loss: 0.2590 - acc: 0.9380 - val_loss: 0.4252 - val_acc: 0.8914
Epoch 859/1000 19s 39ms/step - loss: 0.2598 - acc: 0.9382 - val_loss: 0.4204 - val_acc: 0.8921
Epoch 860/1000 19s 39ms/step - loss: 0.2544 - acc: 0.9415 - val_loss: 0.4240 - val_acc: 0.8900
Epoch 861/1000 19s 39ms/step - loss: 0.2533 - acc: 0.9413 - val_loss: 0.4221 - val_acc: 0.8930
Epoch 862/1000 19s 38ms/step - loss: 0.2524 - acc: 0.9408 - val_loss: 0.4222 - val_acc: 0.8909
Epoch 863/1000 19s 38ms/step - loss: 0.2516 - acc: 0.9407 - val_loss: 0.4189 - val_acc: 0.8934
Epoch 864/1000 19s 38ms/step - loss: 0.2577 - acc: 0.9394 - val_loss: 0.4234 - val_acc: 0.8925
Epoch 865/1000 19s 38ms/step - loss: 0.2585 - acc: 0.9391 - val_loss: 0.4206 - val_acc: 0.8916
Epoch 866/1000 19s 39ms/step - loss: 0.2546 - acc: 0.9403 - val_loss: 0.4188 - val_acc: 0.8926
Epoch 867/1000 19s 39ms/step - loss: 0.2524 - acc: 0.9409 - val_loss: 0.4200 - val_acc: 0.8927
Epoch 868/1000 19s 39ms/step - loss: 0.2511 - acc: 0.9409 - val_loss: 0.4215 - val_acc: 0.8918
Epoch 869/1000 19s 38ms/step - loss: 0.2529 - acc: 0.9404 - val_loss: 0.4174 - val_acc: 0.8924
Epoch 870/1000 19s 39ms/step - loss: 0.2571 - acc: 0.9390 - val_loss: 0.4217 - val_acc: 0.8927
Epoch 871/1000 19s 38ms/step - loss: 0.2521 - acc: 0.9401 - val_loss: 0.4194 - val_acc: 0.8922
Epoch 872/1000 19s 39ms/step - loss: 0.2525 - acc: 0.9403 - val_loss: 0.4219 - val_acc: 0.8916
Epoch 873/1000 19s 38ms/step - loss: 0.2548 - acc: 0.9397 - val_loss: 0.4215 - val_acc: 0.8909
Epoch 874/1000 19s 39ms/step - loss: 0.2507 - acc: 0.9415 - val_loss: 0.4220 - val_acc: 0.8919
Epoch 875/1000 19s 39ms/step - loss: 0.2505 - acc: 0.9406 - val_loss: 0.4207 - val_acc: 0.8925
Epoch 876/1000 19s 39ms/step - loss: 0.2492 - acc: 0.9419 - val_loss: 0.4204 - val_acc: 0.8921
Epoch 877/1000 19s 38ms/step - loss: 0.2540 - acc: 0.9399 - val_loss: 0.4251 - val_acc: 0.8885
Epoch 878/1000 19s 38ms/step - loss: 0.2503 - acc: 0.9411 - val_loss: 0.4234 - val_acc: 0.8917
Epoch 879/1000 19s 38ms/step - loss: 0.2516 - acc: 0.9398 - val_loss: 0.4210 - val_acc: 0.8905
Epoch 880/1000 19s 38ms/step - loss: 0.2526 - acc: 0.9401 - val_loss: 0.4258 - val_acc: 0.8894
Epoch 881/1000 19s 38ms/step - loss: 0.2527 - acc: 0.9397 - val_loss: 0.4214 - val_acc: 0.8919
Epoch 882/1000 19s 39ms/step - loss: 0.2532 - acc: 0.9399 - val_loss: 0.4269 - val_acc: 0.8884
Epoch 883/1000 19s 39ms/step - loss: 0.2512 - acc: 0.9405 - val_loss: 0.4226 - val_acc: 0.8914
Epoch 884/1000 19s 38ms/step - loss: 0.2471 - acc: 0.9415 - val_loss: 0.4224 - val_acc: 0.8920
Epoch 885/1000 19s 39ms/step - loss: 0.2474 - acc: 0.9414 - val_loss: 0.4277 - val_acc: 0.8906
Epoch 886/1000 19s 39ms/step - loss: 0.2466 - acc: 0.9419 - val_loss: 0.4293 - val_acc: 0.8885
Epoch 887/1000 19s 39ms/step - loss: 0.2488 - acc: 0.9408 - val_loss: 0.4277 - val_acc: 0.8907
Epoch 888/1000 19s 39ms/step - loss: 0.2478 - acc: 0.9415 - val_loss: 0.4227 - val_acc: 0.8905
Epoch 889/1000 19s 38ms/step - loss: 0.2467 - acc: 0.9401 - val_loss: 0.4265 - val_acc: 0.8892
Epoch 890/1000 19s 38ms/step - loss: 0.2491 - acc: 0.9400 - val_loss: 0.4250 - val_acc: 0.8904
Epoch 891/1000 19s 39ms/step - loss: 0.2485 - acc: 0.9409 - val_loss: 0.4211 - val_acc: 0.8909
Epoch 892/1000 19s 38ms/step - loss: 0.2454 - acc: 0.9410 - val_loss: 0.4259 - val_acc: 0.8916
Epoch 893/1000 19s 39ms/step - loss: 0.2479 - acc: 0.9390 - val_loss: 0.4224 - val_acc: 0.8914
Epoch 894/1000 19s 38ms/step - loss: 0.2485 - acc: 0.9419 - val_loss: 0.4281 - val_acc: 0.8903
Epoch 895/1000 19s 38ms/step - loss: 0.2449 - acc: 0.9425 - val_loss: 0.4278 - val_acc: 0.8873
Epoch 896/1000 19s 39ms/step - loss: 0.2453 - acc: 0.9427 - val_loss: 0.4281 - val_acc: 0.8903
Epoch 897/1000 19s 38ms/step - loss: 0.2502 - acc: 0.9401 - val_loss: 0.4210 - val_acc: 0.8919
Epoch 898/1000 19s 38ms/step - loss: 0.2494 - acc: 0.9399 - val_loss: 0.4239 - val_acc: 0.8909
Epoch 899/1000 19s 38ms/step - loss: 0.2475 - acc: 0.9422 - val_loss: 0.4238 - val_acc: 0.8929
Epoch 900/1000 19s 38ms/step - loss: 0.2475 - acc: 0.9416 - val_loss: 0.4256 - val_acc: 0.8885
Epoch 901/1000 19s 39ms/step - loss: 0.2439 - acc: 0.9416 - val_loss: 0.4240 - val_acc: 0.8910
Epoch 902/1000 19s 38ms/step - loss: 0.2470 - acc: 0.9407 - val_loss: 0.4253 - val_acc: 0.8905
Epoch 903/1000 19s 38ms/step - loss: 0.2433 - acc: 0.9420 - val_loss: 0.4210 - val_acc: 0.8920
Epoch 904/1000 19s 39ms/step - loss: 0.2434 - acc: 0.9427 - val_loss: 0.4239 - val_acc: 0.8900
Epoch 905/1000 19s 39ms/step - loss: 0.2463 - acc: 0.9408 - val_loss: 0.4223 - val_acc: 0.8914
Epoch 906/1000 19s 38ms/step - loss: 0.2455 - acc: 0.9408 - val_loss: 0.4255 - val_acc: 0.8909
Epoch 907/1000 19s 39ms/step - loss: 0.2421 - acc: 0.9426 - val_loss: 0.4230 - val_acc: 0.8916
Epoch 908/1000 19s 38ms/step - loss: 0.2448 - acc: 0.9412 - val_loss: 0.4216 - val_acc: 0.8891
Epoch 909/1000 19s 38ms/step - loss: 0.2439 - acc: 0.9422 - val_loss: 0.4255 - val_acc: 0.8896
Epoch 910/1000 19s 38ms/step - loss: 0.2439 - acc: 0.9416 - val_loss: 0.4298 - val_acc: 0.8902
Epoch 911/1000 19s 39ms/step - loss: 0.2430 - acc: 0.9419 - val_loss: 0.4245 - val_acc: 0.8892
Epoch 912/1000 19s 39ms/step - loss: 0.2432 - acc: 0.9421 - val_loss: 0.4245 - val_acc: 0.8908
Epoch 913/1000 19s 39ms/step - loss: 0.2444 - acc: 0.9420 - val_loss: 0.4239 - val_acc: 0.8911
Epoch 914/1000 19s 39ms/step - loss: 0.2449 - acc: 0.9418 - val_loss: 0.4221 - val_acc: 0.8918
Epoch 915/1000 19s 39ms/step - loss: 0.2445 - acc: 0.9415 - val_loss: 0.4293 - val_acc: 0.8876
Epoch 916/1000 19s 39ms/step - loss: 0.2445 - acc: 0.9412 - val_loss: 0.4254 - val_acc: 0.8889
Epoch 917/1000 19s 39ms/step - loss: 0.2452 - acc: 0.9405 - val_loss: 0.4275 - val_acc: 0.8877
Epoch 918/1000 19s 39ms/step - loss: 0.2446 - acc: 0.9400 - val_loss: 0.4255 - val_acc: 0.8894
Epoch 919/1000 19s 38ms/step - loss: 0.2456 - acc: 0.9398 - val_loss: 0.4240 - val_acc: 0.8930
Epoch 920/1000 19s 38ms/step - loss: 0.2444 - acc: 0.9412 - val_loss: 0.4228 - val_acc: 0.8909
Epoch 921/1000 19s 39ms/step - loss: 0.2431 - acc: 0.9422 - val_loss: 0.4204 - val_acc: 0.8900
Epoch 922/1000 19s 39ms/step - loss: 0.2441 - acc: 0.9403 - val_loss: 0.4206 - val_acc: 0.8915
Epoch 923/1000 19s 38ms/step - loss: 0.2431 - acc: 0.9415 - val_loss: 0.4196 - val_acc: 0.8918
Epoch 924/1000 19s 38ms/step - loss: 0.2437 - acc: 0.9419 - val_loss: 0.4246 - val_acc: 0.8885
Epoch 925/1000 19s 38ms/step - loss: 0.2414 - acc: 0.9414 - val_loss: 0.4226 - val_acc: 0.8898
Epoch 926/1000 19s 39ms/step - loss: 0.2386 - acc: 0.9429 - val_loss: 0.4181 - val_acc: 0.8909
Epoch 927/1000 19s 38ms/step - loss: 0.2411 - acc: 0.9416 - val_loss: 0.4233 - val_acc: 0.8912
Epoch 928/1000 19s 39ms/step - loss: 0.2432 - acc: 0.9414 - val_loss: 0.4277 - val_acc: 0.8889
Epoch 929/1000 19s 39ms/step - loss: 0.2416 - acc: 0.9415 - val_loss: 0.4297 - val_acc: 0.8875
Epoch 930/1000 19s 39ms/step - loss: 0.2427 - acc: 0.9415 - val_loss: 0.4267 - val_acc: 0.8879
Epoch 931/1000 19s 39ms/step - loss: 0.2408 - acc: 0.9426 - val_loss: 0.4289 - val_acc: 0.8884
Epoch 932/1000 19s 39ms/step - loss: 0.2408 - acc: 0.9415 - val_loss: 0.4275 - val_acc: 0.8882
Epoch 933/1000 19s 39ms/step - loss: 0.2393 - acc: 0.9421 - val_loss: 0.4283 - val_acc: 0.8890
Epoch 934/1000 19s 38ms/step - loss: 0.2392 - acc: 0.9424 - val_loss: 0.4261 - val_acc: 0.8884
Epoch 935/1000 19s 38ms/step - loss: 0.2407 - acc: 0.9421 - val_loss: 0.4295 - val_acc: 0.8863
Epoch 936/1000 19s 39ms/step - loss: 0.2447 - acc: 0.9418 - val_loss: 0.4253 - val_acc: 0.8879
Epoch 937/1000 19s 39ms/step - loss: 0.2371 - acc: 0.9427 - val_loss: 0.4255 - val_acc: 0.8892
Epoch 938/1000 19s 39ms/step - loss: 0.2400 - acc: 0.9422 - val_loss: 0.4239 - val_acc: 0.8894
Epoch 939/1000 19s 39ms/step - loss: 0.2407 - acc: 0.9413 - val_loss: 0.4246 - val_acc: 0.8899
Epoch 940/1000 19s 39ms/step - loss: 0.2413 - acc: 0.9424 - val_loss: 0.4252 - val_acc: 0.8894
Epoch 941/1000 19s 38ms/step - loss: 0.2415 - acc: 0.9415 - val_loss: 0.4256 - val_acc: 0.8911
Epoch 942/1000 19s 38ms/step - loss: 0.2373 - acc: 0.9431 - val_loss: 0.4280 - val_acc: 0.8876
Epoch 943/1000 19s 39ms/step - loss: 0.2385 - acc: 0.9422 - val_loss: 0.4260 - val_acc: 0.8885
Epoch 944/1000 19s 39ms/step - loss: 0.2376 - acc: 0.9424 - val_loss: 0.4200 - val_acc: 0.8904
Epoch 945/1000 19s 39ms/step - loss: 0.2392 - acc: 0.9422 - val_loss: 0.4242 - val_acc: 0.8915
Epoch 946/1000 19s 39ms/step - loss: 0.2407 - acc: 0.9414 - val_loss: 0.4230 - val_acc: 0.8907
Epoch 947/1000 19s 39ms/step - loss: 0.2383 - acc: 0.9432 - val_loss: 0.4200 - val_acc: 0.8893
Epoch 948/1000 19s 39ms/step - loss: 0.2386 - acc: 0.9430 - val_loss: 0.4262 - val_acc: 0.8881
Epoch 949/1000 19s 38ms/step - loss: 0.2386 - acc: 0.9416 - val_loss: 0.4197 - val_acc: 0.8903
Epoch 950/1000 19s 38ms/step - loss: 0.2371 - acc: 0.9431 - val_loss: 0.4196 - val_acc: 0.8883
Epoch 951/1000 19s 38ms/step - loss: 0.2363 - acc: 0.9429 - val_loss: 0.4231 - val_acc: 0.8875
Epoch 952/1000 19s 39ms/step - loss: 0.2369 - acc: 0.9426 - val_loss: 0.4250 - val_acc: 0.8924
Epoch 953/1000 19s 39ms/step - loss: 0.2341 - acc: 0.9428 - val_loss: 0.4218 - val_acc: 0.8898
Epoch 954/1000 19s 39ms/step - loss: 0.2349 - acc: 0.9438 - val_loss: 0.4262 - val_acc: 0.8906
Epoch 955/1000 19s 39ms/step - loss: 0.2365 - acc: 0.9425 - val_loss: 0.4246 - val_acc: 0.8875
Epoch 956/1000 19s 39ms/step - loss: 0.2355 - acc: 0.9432 - val_loss: 0.4250 - val_acc: 0.8886
Epoch 957/1000 19s 38ms/step - loss: 0.2395 - acc: 0.9412 - val_loss: 0.4229 - val_acc: 0.8897
Epoch 958/1000 19s 39ms/step - loss: 0.2374 - acc: 0.9433 - val_loss: 0.4200 - val_acc: 0.8897
Epoch 959/1000 19s 39ms/step - loss: 0.2362 - acc: 0.9428 - val_loss: 0.4183 - val_acc: 0.8929
Epoch 960/1000 19s 39ms/step - loss: 0.2382 - acc: 0.9415 - val_loss: 0.4205 - val_acc: 0.8900
Epoch 961/1000 19s 38ms/step - loss: 0.2365 - acc: 0.9421 - val_loss: 0.4164 - val_acc: 0.8894
Epoch 962/1000 19s 39ms/step - loss: 0.2351 - acc: 0.9429 - val_loss: 0.4170 - val_acc: 0.8911
Epoch 963/1000 19s 39ms/step - loss: 0.2378 - acc: 0.9416 - val_loss: 0.4160 - val_acc: 0.8890
Epoch 964/1000 19s 38ms/step - loss: 0.2349 - acc: 0.9422 - val_loss: 0.4220 - val_acc: 0.8888
Epoch 965/1000 19s 38ms/step - loss: 0.2340 - acc: 0.9426 - val_loss: 0.4218 - val_acc: 0.8891
Epoch 966/1000 19s 39ms/step - loss: 0.2350 - acc: 0.9451 - val_loss: 0.4210 - val_acc: 0.8891
Epoch 967/1000 19s 38ms/step - loss: 0.2354 - acc: 0.9426 - val_loss: 0.4207 - val_acc: 0.8877
Epoch 968/1000 19s 39ms/step - loss: 0.2362 - acc: 0.9425 - val_loss: 0.4201 - val_acc: 0.8899
Epoch 969/1000 19s 38ms/step - loss: 0.2360 - acc: 0.9433 - val_loss: 0.4179 - val_acc: 0.8904
Epoch 970/1000 19s 39ms/step - loss: 0.2344 - acc: 0.9425 - val_loss: 0.4252 - val_acc: 0.8859
Epoch 971/1000 19s 38ms/step - loss: 0.2386 - acc: 0.9420 - val_loss: 0.4170 - val_acc: 0.8902
Epoch 972/1000 19s 39ms/step - loss: 0.2328 - acc: 0.9424 - val_loss: 0.4225 - val_acc: 0.8882
Epoch 973/1000 19s 38ms/step - loss: 0.2360 - acc: 0.9425 - val_loss: 0.4192 - val_acc: 0.8875
Epoch 974/1000 19s 38ms/step - loss: 0.2357 - acc: 0.9421 - val_loss: 0.4181 - val_acc: 0.8891
Epoch 975/1000 19s 38ms/step - loss: 0.2396 - acc: 0.9398 - val_loss: 0.4192 - val_acc: 0.8890
Epoch 976/1000 19s 38ms/step - loss: 0.2340 - acc: 0.9423 - val_loss: 0.4226 - val_acc: 0.8874
Epoch 977/1000 19s 38ms/step - loss: 0.2352 - acc: 0.9417 - val_loss: 0.4165 - val_acc: 0.8914
Epoch 978/1000 19s 39ms/step - loss: 0.2317 - acc: 0.9425 - val_loss: 0.4198 - val_acc: 0.8895
Epoch 979/1000 19s 39ms/step - loss: 0.2323 - acc: 0.9436 - val_loss: 0.4183 - val_acc: 0.8903
Epoch 980/1000 19s 39ms/step - loss: 0.2324 - acc: 0.9423 - val_loss: 0.4203 - val_acc: 0.8888
Epoch 981/1000 19s 38ms/step - loss: 0.2361 - acc: 0.9408 - val_loss: 0.4165 - val_acc: 0.8897
Epoch 982/1000 19s 38ms/step - loss: 0.2305 - acc: 0.9438 - val_loss: 0.4190 - val_acc: 0.8921
Epoch 983/1000 19s 38ms/step - loss: 0.2295 - acc: 0.9445 - val_loss: 0.4186 - val_acc: 0.8916
Epoch 984/1000 19s 38ms/step - loss: 0.2322 - acc: 0.9429 - val_loss: 0.4198 - val_acc: 0.8898
Epoch 985/1000 19s 38ms/step - loss: 0.2323 - acc: 0.9431 - val_loss: 0.4230 - val_acc: 0.8909
Epoch 986/1000 19s 38ms/step - loss: 0.2330 - acc: 0.9431 - val_loss: 0.4193 - val_acc: 0.8891
Epoch 987/1000 19s 39ms/step - loss: 0.2323 - acc: 0.9422 - val_loss: 0.4195 - val_acc: 0.8916
Epoch 988/1000 19s 39ms/step - loss: 0.2318 - acc: 0.9426 - val_loss: 0.4185 - val_acc: 0.8900
Epoch 989/1000 19s 39ms/step - loss: 0.2328 - acc: 0.9412 - val_loss: 0.4197 - val_acc: 0.8923
Epoch 990/1000 19s 39ms/step - loss: 0.2329 - acc: 0.9417 - val_loss: 0.4133 - val_acc: 0.8909
Epoch 991/1000 19s 39ms/step - loss: 0.2303 - acc: 0.9431 - val_loss: 0.4166 - val_acc: 0.8911
Epoch 992/1000 19s 38ms/step - loss: 0.2300 - acc: 0.9434 - val_loss: 0.4202 - val_acc: 0.8914
Epoch 993/1000 19s 39ms/step - loss: 0.2359 - acc: 0.9406 - val_loss: 0.4194 - val_acc: 0.8882
Epoch 994/1000 19s 39ms/step - loss: 0.2295 - acc: 0.9430 - val_loss: 0.4191 - val_acc: 0.8905
Epoch 995/1000 19s 39ms/step - loss: 0.2283 - acc: 0.9442 - val_loss: 0.4219 - val_acc: 0.8877
Epoch 996/1000 19s 38ms/step - loss: 0.2299 - acc: 0.9426 - val_loss: 0.4232 - val_acc: 0.8877
Epoch 997/1000 19s 38ms/step - loss: 0.2315 - acc: 0.9433 - val_loss: 0.4219 - val_acc: 0.8898
Epoch 998/1000 19s 39ms/step - loss: 0.2311 - acc: 0.9419 - val_loss: 0.4189 - val_acc: 0.8889
Epoch 999/1000 19s 39ms/step - loss: 0.2278 - acc: 0.9436 - val_loss: 0.4226 - val_acc: 0.8899
Epoch 1000/1000 19s 38ms/step - loss: 0.2294 - acc: 0.9432 - val_loss: 0.4192 - val_acc: 0.8898
Train loss: 0.2049155475348234
Train accuracy: 0.9527800017595291
Test loss: 0.4191611827909946
Test accuracy: 0.8898000001907349
```

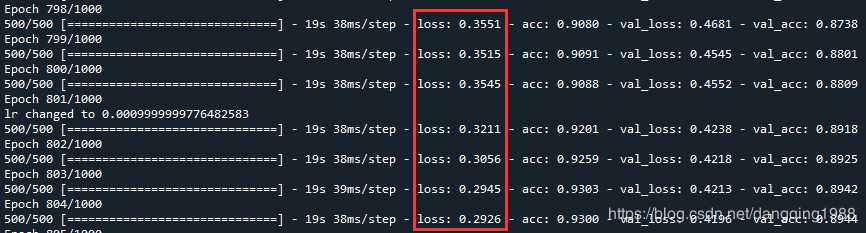

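One more observation, as the accompanying figure shows: while the learning rate was 0.01, the training loss had stopped decreasing and hovered around 0.35. As soon as the learning rate dropped to 0.001, the loss fell to 0.32 and shortly afterwards to 0.29. Lowering the learning rate appears to help the loss keep decreasing.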
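The schedule behind this observation can be reproduced without Keras. The sketch below assumes the settings used in the script (initial rate 0.1, multiplied by 0.1 every 400 epochs, zero-based epoch index as passed to the callback); `step_decay` is a hypothetical closed-form helper, not the `scheduler` function itself.

```python
def step_decay(epoch, base_lr=0.1, drop=0.1, every=400):
    """Learning rate in effect during a zero-based epoch index.

    Closed-form equivalent of multiplying the rate by `drop` each time
    the epoch index reaches a non-zero multiple of `every`.
    """
    return base_lr * drop ** (epoch // every)


# Zero-based indices; Keras prints them as "Epoch index+1/1000".
for epoch in (0, 399, 400, 799, 800, 999):
    print(epoch + 1, "{:.4f}".format(step_decay(epoch)))
```

Under these assumptions the rate is 0.1 for the displayed epochs 1 to 400, 0.01 for 401 to 800, and 0.001 for 801 to 1000, which matches the "lr changed to" message at Epoch 801 in the log.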

Minghang Zhao, Shisheng Zhong, Xuyun Fu, Baoping Tang, Shaojiang Dong, Michael Pecht, Deep Residual Networks with Adaptively Parametric Rectifier Linear Units for Fault Diagnosis, IEEE Transactions on Industrial Electronics, 2020, DOI: 10.1109/TIE.2020.2972458

https://ieeexplore.ieee.org/document/8998530
