004 Ceph Storage Pools

Posted zyxnhr


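Introduction: the previous posts deployed a Ceph cluster with ceph-deploy and Ansible. This post gives a brief introduction to the cluster's pools and walks through some simple operations on them.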

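I. Basic concepts

A pool is a logical partition of a Ceph storage cluster and is used to store objects.

When an object is written to a pool, Ceph assigns it to one of the pool's placement groups (PGs), and the CRUSH algorithm then maps that PG to a set of OSDs according to the pool's configuration.

The number of PGs in a pool has a significant effect on performance. As a rule of thumb, pools should be configured so that each OSD holds 100-200 PGs.

When a pool is created, Ceph checks whether the number of PGs per OSD would exceed 200. If it would, Ceph refuses to create the pool. Ceph 3.0 (apparently Red Hat Ceph Storage 3) does not create any pools at installation time.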
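The per-OSD check described above can be estimated before creating a pool. The sketch below is only an illustration: the helper name pgs_per_osd and the 9-OSD cluster are hypothetical, and the formula (PG count times replica count, divided by the number of OSDs) is the usual back-of-the-envelope estimate, not a reproduction of Ceph's internal check.

```shell
#!/usr/bin/env bash
# Estimate how many PG replicas land on each OSD:
#   (pg_num * replica_count) / osd_count   (integer division)
# All inputs are passed by the caller; nothing is read from a live cluster.
pgs_per_osd() {
    local pg_num=$1 replicas=$2 osds=$3
    echo $(( pg_num * replicas / osds ))
}

# Hypothetical cluster: 9 OSDs, one pool with 128 PGs and 3 replicas.
pgs_per_osd 128 3 9
```

For a cluster with several pools, the estimates for all pools would have to be summed before comparing the result with the 200-PG limit.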

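II. Storage pools (replicated pools)

2.1 Creating a replicated pool

    ceph osd pool create <pool-name> <pg-num> [pgp-num] [replicated] [crush-ruleset-name] [expected-num-objects]

pool-name: the name of the pool

pg-num: the total number of PGs in the pool

pgp-num: the number of PGs used for placement; normally equal to pg-num

replicated: makes the pool a replicated pool; this is the default even when omitted

crush-ruleset-name: the name of the CRUSH rule used for this pool; defaults to the value of osd_pool_default_crush_replicated_ruleset (this option is named osd_pool_default_crush_rule in Luminous and later)

expected-num-objects: the expected number of objects in the pool. If this value is known in advance, Ceph can prepare the directory structure on the OSDs' file systems when the pool is created. Otherwise Ceph reorganizes the directories at run time as the number of objects grows, and that reorganization adds latency.

    # ceph osd pool create testpool 128

Any parameter that is omitted takes its default value.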

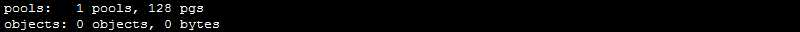

Check the cluster status:

    # ceph -s

List the pools in the cluster:

    # ceph osd pool ls

    # ceph osd lspools

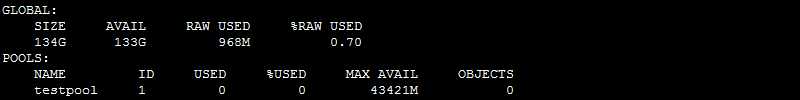

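    # ceph df

Note: in the release used here, the number of PGs in a pool can only be increased after the pool is created, never decreased.

If no replica count is given when a pool is created, it defaults to 3. The default can be changed with the osd_pool_default_size parameter, and the replica count of an existing pool can be changed with:

    ceph osd pool set pool-name size number-of-replicas

The osd_pool_default_min_size parameter sets the minimum number of replicas that must be available for an object to remain usable; for a three-replica pool the default is 2.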

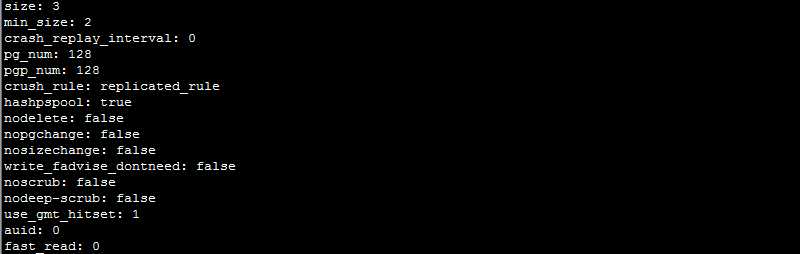

View the pool's properties:

    # ceph osd pool get testpool all

2.2 Enabling a Ceph application on a pool

After a pool is created, the type of Ceph application that may use it must be set explicitly: Ceph Block Device (rbd), Ceph Object Gateway (rgw), or Ceph File System (cephfs).

If no type is set explicitly, the cluster reports HEALTH_WARN (run ceph health detail to see the reason).

Associate an application type with a pool:

    ceph osd pool application enable pool-name app

Mark the pool for block-device use:

    # ceph osd pool application enable testpool rbd
    enabled application 'rbd' on pool 'testpool'
    # ceph osd pool ls detail
    pool 1 'testpool' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 128 pgp_num 128 last_change 33 flags hashpspool stripe_width 0 application rbd

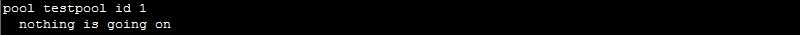

Show I/O statistics for all pools, or for a single pool:

    # ceph osd pool stats

    # ceph osd pool stats testpool

2.3 Setting quotas

Limit the pool to 1048576 bytes (1 MiB), then remove the limit by setting it back to 0:

    # ceph osd pool set-quota testpool max_bytes 1048576
    set-quota max_bytes = 1048576 for pool testpool
    # ceph osd pool set-quota testpool max_bytes 0
    set-quota max_bytes = 0 for pool testpool
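Since max_bytes is given in plain bytes, a small helper can avoid arithmetic slips when writing quotas in larger units. This is a minimal sketch: mib_to_bytes and quota_command are hypothetical helper names, and quota_command only prints the ceph command shown in this section rather than running it.

```shell
#!/usr/bin/env bash
# Convert a size in MiB to bytes, the unit max_bytes expects.
mib_to_bytes() {
    echo $(( $1 * 1024 * 1024 ))
}

# Print (do not execute) the set-quota command for a pool and a size in MiB.
quota_command() {
    local pool=$1 mib=$2
    echo "ceph osd pool set-quota $pool max_bytes $(mib_to_bytes "$mib")"
}

# 1 MiB reproduces the 1048576-byte quota used above.
quota_command testpool 1
```

Printing the command first makes it easy to review the byte value before applying it to a real cluster.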

2.4 Renaming a pool

    # ceph osd pool rename testpool mytestpool
    pool 'testpool' renamed to 'mytestpool'
    # ceph osd pool ls
    mytestpool

2.5 Working with data

Upload a file into the pool as an object named test:

    # rados -p mytestpool put test /root/anaconda-ks.cfg

List the objects in the pool:

    # rados -p mytestpool ls

To inspect an object's contents, download it and compare it with the source file:

    # rados -p mytestpool get test /root/111

    # diff /root/111 /root/anaconda-ks.cfg

Renaming the pool does not affect its data:

    # ceph osd pool rename mytestpool testpool
    pool 'mytestpool' renamed to 'testpool'
    # rados -p testpool ls
    test

Uploading and downloading data:

    # echo "111111111111" >> /root/111
    # rados -p testpool put test /root/111
    # rados -p testpool ls
    test
    # rados -p testpool get test /root/222
    # diff /root/222 /root/anaconda-ks.cfg
    240d239
    < 111111111111

2.6 Pool snapshots

Create a pool snapshot:

    # ceph osd pool mksnap testpool testpool-snap-20190316
    created pool testpool snap testpool-snap-20190316
    # rados lssnap -p testpool
    1 testpool-snap-20190316 2019.03.16 22:27:34
    1 snaps

Upload another object:

    # rados -p testpool put test2 /root/anaconda-ks.cfg
    # rados -p testpool ls
    test2
    test

Typical uses for snapshots: protecting against accidental deletion, accidental modification, and files added by mistake.

Ceph rolls back individual objects. First take a second snapshot:

    # ceph osd pool mksnap testpool testpool-snap-2
    created pool testpool snap testpool-snap-2
    # rados lssnap -p testpool
    1 testpool-snap-20190316 2019.03.16 22:27:34
    2 testpool-snap-2 2019.03.16 22:31:15
    2 snaps

Delete an object and restore it:

    # rados -p testpool rm test
    # rados -p testpool get test /root/333
    error getting testpool/test: (2) No such file or directory
    # rados -p testpool -s testpool-snap-2 get test /root/444
    selected snap 2 'testpool-snap-2'
    # ll /root/444
    -rw-r--r-- 1 root root 7317 Mar 16 22:34 /root/444

The test object could be restored directly from /root/444. Alternatively, roll it back from the snapshot:

    # rados -p testpool rollback test testpool-snap-2
    rolled back pool testpool to snapshot testpool-snap-2
    # rados -p testpool get test /root/555
    # diff /root/444 /root/555

diff reports no differences, so the rollback succeeded.

2.7 Configuring pool properties

    # ceph osd pool get testpool min_size
    min_size: 2
    # ceph osd pool set testpool min_size 1
    set pool 1 min_size to 1
    # ceph osd pool get testpool min_size
    min_size: 1
    # ceph osd pool set testpool min_size 2
    set pool 1 min_size to 2
    # ceph osd pool get testpool min_size
    min_size: 2

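III. Storage pools (erasure-coded pools)

3.1 Characteristics of erasure-coded pools

Erasure-coded pools protect object data with erasure coding instead of replication.

Compared with replicated pools, erasure-coded pools save storage space but need more compute resources.

By default, erasure-coded pools can only be used for object storage.

Erasure-coded pools do not support snapshots.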
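The space saving can be put in numbers. An erasure-coded pool splits each object into k data chunks and adds m coding chunks, so it consumes (k + m) / k units of raw space per unit of data. The sketch below is an illustration only; raw_space_percent is a hypothetical helper, and a three-replica pool is modelled here as k=1, m=2 purely for comparison.

```shell
#!/usr/bin/env bash
# Raw space consumed as a percentage of the data stored,
# rounded to the nearest integer: (k + m) / k * 100.
raw_space_percent() {
    local k=$1 m=$2
    echo $(( ((k + m) * 100 + k / 2) / k ))
}

raw_space_percent 3 2   # erasure coding with k=3, m=2
raw_space_percent 2 1   # erasure coding with k=2, m=1
raw_space_percent 1 2   # three-way replication, modelled as k=1, m=2
```

With k=3 and m=2 the pool needs roughly 167% of the data size in raw space, compared with 300% for three replicas, while still tolerating the loss of two chunks.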

3.2 Creating an erasure-coded pool

3.2.1 Syntax

    ceph osd pool create <pool-name> <pg-num> [pgp-num] erasure [erasure-code-profile] [crush-ruleset-name] [expected_num_objects]

erasure: creates an erasure-coded pool

erasure-code-profile: the name of the profile to use. New profiles are created with the ceph osd erasure-code-profile set command. A profile defines the plugin type and the values of k and m. By default, Ceph uses the profile named default.

View the default profile:

    # ceph osd erasure-code-profile get default
    k=2
    m=1
    plugin=jerasure
    technique=reed_sol_van

3.2.2 Defining a custom profile

    # ceph osd erasure-code-profile set EC-profile k=3 m=2

    # ceph osd erasure-code-profile get EC-profile
    crush-device-class=
    crush-failure-domain=host
    crush-root=default
    jerasure-per-chunk-alignment=false
    k=3
    m=2
    plugin=jerasure
    technique=reed_sol_van
    w=8

3.2.3 Creating an erasure-coded pool

    # ceph osd pool create EC-pool 64 64 erasure EC-profile
    pool 'EC-pool' created
    # ceph osd pool ls
    testpool
    EC-pool
    # ceph osd pool ls detail
    pool 1 'testpool' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 128 pgp_num 128 last_change 42 flags hashpspool stripe_width 0 application rbd
        snap 1 'testpool-snap-20190316' 2019-03-16 22:27:34.150433
        snap 2 'testpool-snap-2' 2019-03-16 22:31:15.430823
    pool 2 'EC-pool' erasure size 5 min_size 4 crush_rule 1 object_hash rjenkins pg_num 64 pgp_num 64 last_change 46 flags hashpspool stripe_width 12288
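The size, min_size and stripe_width reported for EC-pool follow from the profile's k and m. The sketch below reproduces them under two assumptions that are consistent with the output above but not stated in this post: min_size is k + 1, and the stripe unit is 4096 bytes. The helper name ec_pool_values is hypothetical.

```shell
#!/usr/bin/env bash
# Derive the pool values shown by "ceph osd pool ls detail" from k and m.
# Assumptions: min_size = k + 1, stripe unit = 4096 bytes unless overridden.
ec_pool_values() {
    local k=$1 m=$2 stripe_unit=${3:-4096}
    echo "size $(( k + m )) min_size $(( k + 1 )) stripe_width $(( k * stripe_unit ))"
}

# EC-profile from this section: k=3, m=2
ec_pool_values 3 2
```

For k=3 and m=2 this prints size 5 min_size 4 stripe_width 12288, matching the EC-pool line in the listing.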

3.3 Other operations on erasure-coded pools

List the existing profiles: ceph osd erasure-code-profile ls

    # ceph osd erasure-code-profile ls
    EC-profile
    default

Delete a profile: ceph osd erasure-code-profile rm profile-name

Show the state of an erasure-coded pool: ceph osd dump | grep -i EC-pool

    # ceph osd dump | grep -i EC-pool
    pool 2 'EC-pool' erasure size 5 min_size 4 crush_rule 1 object_hash rjenkins pg_num 64 pgp_num 64 last_change 46 flags hashpspool stripe_width 12288

Add an object to the pool and list the pool's contents: rados -p EC-pool put object1 hello.txt, then rados -p EC-pool ls

Show where an object is placed: ceph osd map EC-pool object1

Read an object back: rados -p EC-pool get object1 /tmp/object1
