k8s Cluster Setup

Posted kingle-study


1. Environment

| | master | node1 | node2 |
| --- | --- | --- | --- |
| IP | 192.168.0.164 | 192.168.0.165 | 192.168.0.167 |
| OS | CentOS 7 | CentOS 7 | CentOS 7 |

2. Configuration and installation

Run the following steps on all three nodes:

01. Configure the yum repository

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

02. Install etcd

yum install -y etcd

Joining the nodes to the etcd cluster:

On the master node (IP 192.168.0.164):

    etcd -name infra1 \
        -initial-advertise-peer-urls http://192.168.0.164:2380 \
        -listen-peer-urls http://192.168.0.164:2380 \
        -listen-client-urls http://192.168.0.164:2379,http://127.0.0.1:2379 \
        -advertise-client-urls http://192.168.0.164:2379 \
        -initial-cluster-token etcd-cluster-1 \
        -initial-cluster infra1=http://192.168.0.164:2380,infra2=http://192.168.0.165:2380,infra3=http://192.168.0.167:2380 \
        -initial-cluster-state new

On node1 (IP 192.168.0.165):

    etcd -name infra2 \
        -initial-advertise-peer-urls http://192.168.0.165:2380 \
        -listen-peer-urls http://192.168.0.165:2380 \
        -listen-client-urls http://192.168.0.165:2379,http://127.0.0.1:2379 \
        -advertise-client-urls http://192.168.0.165:2379 \
        -initial-cluster-token etcd-cluster-1 \
        -initial-cluster infra1=http://192.168.0.164:2380,infra2=http://192.168.0.165:2380,infra3=http://192.168.0.167:2380 \
        -initial-cluster-state new

On node2 (IP 192.168.0.167):

    etcd -name infra3 \
        -initial-advertise-peer-urls http://192.168.0.167:2380 \
        -listen-peer-urls http://192.168.0.167:2380 \
        -listen-client-urls http://192.168.0.167:2379,http://127.0.0.1:2379 \
        -advertise-client-urls http://192.168.0.167:2379 \
        -initial-cluster-token etcd-cluster-1 \
        -initial-cluster infra1=http://192.168.0.164:2380,infra2=http://192.168.0.165:2380,infra3=http://192.168.0.167:2380 \
        -initial-cluster-state new

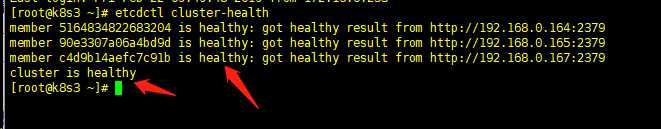
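The three commands differ only in the member name and the node's own IP, while the `-initial-cluster` value is identical everywhere. As a rough sketch, the command line can be generated from one member list. The helper names `initial_cluster` and `etcd_command` and the `MEMBERS` variable are made up for this illustration; the flags and addresses are the ones used in this post.

```shell
# Member list taken from the environment table (name=ip pairs).
# MEMBERS and both helper functions are hypothetical names for this sketch.
MEMBERS="infra1=192.168.0.164 infra2=192.168.0.165 infra3=192.168.0.167"

# Build the value passed to -initial-cluster from MEMBERS.
initial_cluster() {
  local out="" m
  for m in $MEMBERS; do
    # ${m%%=*} is the member name, ${m#*=} is its IP
    out="${out:+$out,}${m%%=*}=http://${m#*=}:2380"
  done
  printf '%s\n' "$out"
}

# Print (not run) the etcd command for one member: etcd_command NAME IP
etcd_command() {
  local name="$1" ip="$2"
  echo "etcd -name ${name}" \
    "-initial-advertise-peer-urls http://${ip}:2380" \
    "-listen-peer-urls http://${ip}:2380" \
    "-listen-client-urls http://${ip}:2379,http://127.0.0.1:2379" \
    "-advertise-client-urls http://${ip}:2379" \
    "-initial-cluster-token etcd-cluster-1" \
    "-initial-cluster $(initial_cluster)" \
    "-initial-cluster-state new"
}
```

For example, `etcd_command infra2 192.168.0.165` prints the node1 command on one line, which can be reviewed before it is pasted into a terminal.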

Checking the etcd nodes:

Once etcd is running, open another terminal window and run:

etcdctl cluster-health

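If every member is reported as healthy, the cluster is up.

Managing etcd as a service (change the member name and IPs to reuse the unit on the other nodes):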
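When the members are started one after another, the first health check can fail simply because a quorum has not formed yet. A minimal sketch of a retry wrapper follows. The function name `wait_healthy` and its arguments are hypothetical, and it relies only on the exit status of `etcdctl cluster-health`, assuming a non-zero status means the cluster is not healthy yet.

```shell
# Retry a health check until it succeeds or the attempts run out.
# Usage: wait_healthy ATTEMPTS DELAY_SECONDS [COMMAND...]
# With no COMMAND it falls back to `etcdctl cluster-health`.
wait_healthy() {
  local attempts="$1" delay="$2" i=1
  shift 2
  # Default check when the caller passes no command
  [ $# -gt 0 ] || set -- etcdctl cluster-health
  while [ "$i" -le "$attempts" ]; do
    if "$@" >/dev/null 2>&1; then
      echo "healthy after $i attempt(s)"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "still unhealthy after $attempts attempt(s)" >&2
  return 1
}
```

Calling `wait_healthy 30 2` would poll every two seconds for up to a minute. Passing a different command as the trailing arguments makes the same loop usable for other services.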

vim /usr/lib/systemd/system/etcd.service

    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target

    [Service]
    Type=notify
    WorkingDirectory=/root
    ExecStart=/usr/bin/etcd -name infra1 \
        -initial-advertise-peer-urls http://192.168.0.164:2380 \
        -listen-peer-urls http://192.168.0.164:2380 \
        -listen-client-urls http://192.168.0.164:2379,http://127.0.0.1:2379 \
        -advertise-client-urls http://192.168.0.164:2379 \
        -initial-cluster-token etcd-cluster-1 \
        -initial-cluster infra1=http://192.168.0.164:2380,infra2=http://192.168.0.165:2380,infra3=http://192.168.0.167:2380 \
        -initial-cluster-state new
    Restart=on-failure
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
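Since only the member name and the node's IP change between machines, the unit file can be rendered from a template instead of edited by hand. The sketch below is one possible way to do that. `render_etcd_unit`, `ETCD_BIN` and `CLUSTER` are names invented here, and `/usr/bin/etcd` is an assumption about where the package puts the binary (the systemd version shipped with CentOS 7 expects an absolute path in `ExecStart`).

```shell
# Hypothetical helper: print an etcd.service unit for one cluster member.
# ETCD_BIN is an assumption about the install path, not stated in the post.
ETCD_BIN="${ETCD_BIN:-/usr/bin/etcd}"
CLUSTER="infra1=http://192.168.0.164:2380,infra2=http://192.168.0.165:2380,infra3=http://192.168.0.167:2380"

# Usage: render_etcd_unit NAME IP   (writes the unit to stdout)
render_etcd_unit() {
  local name="$1" ip="$2"
  # "\\" inside the unquoted heredoc emits a single "\" line continuation
  cat <<EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
WorkingDirectory=/root
ExecStart=${ETCD_BIN} -name ${name} \\
  -initial-advertise-peer-urls http://${ip}:2380 \\
  -listen-peer-urls http://${ip}:2380 \\
  -listen-client-urls http://${ip}:2379,http://127.0.0.1:2379 \\
  -advertise-client-urls http://${ip}:2379 \\
  -initial-cluster-token etcd-cluster-1 \\
  -initial-cluster ${CLUSTER} \\
  -initial-cluster-state new
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
}
```

On node1 this could be used as `render_etcd_unit infra2 192.168.0.165 > /usr/lib/systemd/system/etcd.service`, followed by `systemctl daemon-reload` and `systemctl enable etcd` so that systemd picks up the new unit.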

Master setup script:

    #!/bin/bash
    echo '################ Prerequisites...'
    systemctl stop firewalld
    systemctl disable firewalld
    yum -y install ntp
    systemctl start ntpd
    systemctl enable ntpd

    echo '################ Installing flannel...'
    # Install flannel
    yum install flannel -y

    echo '################ Add subnets for flannel...'
    A_SUBNET=172.17.0.0/16
    B_SUBNET=192.168.0.0/16
    C_SUBNET=10.254.0.0/16
    FLANNEL_SUBNET=$A_SUBNET
    SERVICE_SUBNET=$B_SUBNET
    # CentOS 7 ifconfig prints "inet <ip> netmask ...", so the IP is field 2
    OCCUPIED_IPs=($(ifconfig -a | awk '/inet /{print $2}' | grep -v '^127'))
    # Pick flannel and service subnets that do not overlap a local address
    for ip in "${OCCUPIED_IPs[@]}"; do
        if [ "$(ipcalc -n $ip/${A_SUBNET#*/})" == "$(ipcalc -n ${A_SUBNET})" ]; then
            FLANNEL_SUBNET=$C_SUBNET
            SERVICE_SUBNET=$B_SUBNET
            break
        fi
        if [ "$(ipcalc -n $ip/${B_SUBNET#*/})" == "$(ipcalc -n ${B_SUBNET})" ]; then
            FLANNEL_SUBNET=$A_SUBNET
            SERVICE_SUBNET=$C_SUBNET
            break
        fi
        if [ "$(ipcalc -n $ip/${C_SUBNET#*/})" == "$(ipcalc -n ${C_SUBNET})" ]; then
            FLANNEL_SUBNET=$A_SUBNET
            SERVICE_SUBNET=$B_SUBNET
            break
        fi
    done

    # Wait until etcd is healthy, then store the flannel network config
    while ((1)); do
        sleep 2
        etcdctl cluster-health
        flag=$?
        if [ $flag == 0 ]; then
            etcdctl mk /coreos.com/network/config '{"Network":"'${FLANNEL_SUBNET}'"}'
            break
        fi
    done

    echo '################ Starting flannel...'
    cat <<EOF > /etc/sysconfig/flanneld
    FLANNEL_ETCD="http://192.168.0.164:2379,http://192.168.0.165:2379,http://192.168.0.167:2379"
    FLANNEL_ETCD_KEY="/coreos.com/network"
    EOF
    systemctl enable flanneld
    systemctl start flanneld

    echo '################ Installing K8S...'
    yum -y install kubernetes
    cat <<EOF > /etc/kubernetes/apiserver
    KUBE_API_ADDRESS="--address=0.0.0.0"
    KUBE_API_PORT="--port=8080"
    KUBELET_PORT="--kubelet_port=10250"
    KUBE_ETCD_SERVERS="--etcd_servers=http://192.168.0.164:2379,http://192.168.0.165:2379,http://192.168.0.167:2379"
    KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=${SERVICE_SUBNET}"
    KUBE_ADMISSION_CONTROL="--admission_control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"
    KUBE_API_ARGS=""
    EOF

    echo '################ Start K8S components...'
    for SERVICES in kube-apiserver kube-controller-manager kube-scheduler; do
        systemctl restart $SERVICES
        systemctl enable $SERVICES
        systemctl status $SERVICES
    done

Node setup script:

    #!/bin/bash
    echo '################ Prerequisites...'
    # Stop firewalld and enable NTP time synchronization
    systemctl stop firewalld
    systemctl disable firewalld
    yum -y install ntp
    systemctl start ntpd
    systemctl enable ntpd
    # Install the packages Kubernetes needs on a node
    yum -y install kubernetes docker flannel bridge-utils

    # A VIP is used here (written as "vip" below); replace it with your
    # cluster's VIP in a real deployment. Services that use this IP:
    # kube-master (8080), registry (5000), skydns (53)
    echo '################ Configuring nodes...'
    echo '################ Configuring nodes > Find Kube master...'
    KUBE_REGISTRY_IP="192.168.0.169"
    KUBE_MASTER_IP="192.168.0.170"

    echo '################ Configuring nodes > Configuring Minion...'
    cat <<EOF > /etc/kubernetes/config
    KUBE_LOGTOSTDERR="--logtostderr=true"
    KUBE_LOG_LEVEL="--v=0"
    KUBE_ALLOW_PRIV="--allow_privileged=false"
    KUBE_MASTER="--master=http://${KUBE_MASTER_IP}:8080"
    EOF

    echo '################ Configuring nodes > Configuring kubelet...'
    # Use the IP of each node's eth0 as its identifier
    # (adjust the interface name if the node does not have eth0)
    KUBE_NODE_IP=$(ifconfig eth0 | grep "inet " | awk '{print $2}')
    # api_servers takes the IPs of master1, master2 and master3 as a list
    cat <<EOF > /etc/kubernetes/kubelet
    KUBELET_ADDRESS="--address=0.0.0.0"
    KUBELET_PORT="--port=10250"
    KUBELET_HOSTNAME="--hostname_override=${KUBE_NODE_IP}"
    KUBELET_API_SERVER="--api_servers=http://192.168.0.164:8080,http://192.168.0.165:8080,http://192.168.0.167:8080"
    KUBELET_ARGS="--cluster-dns=vip --cluster-domain=k8s --pod-infra-container-image=${KUBE_REGISTRY_IP}:5000/pause:latest"
    EOF

    # flannel reads its configuration from etcd and assigns a subnet to the
    # local docker0, so that pod subnets on different nodes can reach each other
    echo '################ Configuring flannel...'
    cat <<EOF > /etc/sysconfig/flanneld
    FLANNEL_ETCD="http://192.168.0.164:2379,http://192.168.0.165:2379,http://192.168.0.167:2379"
    FLANNEL_ETCD_KEY="/coreos.com/network"
    EOF

    echo '################ Accept private registry...'
    cat <<EOF > /etc/sysconfig/docker
    OPTIONS='--selinux-enabled --insecure-registry ${KUBE_REGISTRY_IP}:5000'
    DOCKER_CERT_PATH=/etc/docker
    EOF

    echo '################ Start K8S Components...'
    systemctl daemon-reload
    for SERVICES in kube-proxy flanneld; do
        systemctl restart $SERVICES
        systemctl enable $SERVICES
        systemctl status $SERVICES
    done

    echo '################ Resolve interface conflicts...'
    # Remove the old docker0 bridge so docker recreates it inside the flannel subnet
    systemctl stop docker
    ifconfig docker0 down
    brctl delbr docker0

    echo '################ Accept private registry...'
    cat <<EOF > /etc/sysconfig/docker
    OPTIONS='--selinux-enabled --insecure-registry ${KUBE_REGISTRY_IP}:5000'
    DOCKER_CERT_PATH=/etc/docker
    EOF

    for SERVICES in docker kubelet; do
        systemctl restart $SERVICES
        systemctl enable $SERVICES
        systemctl status $SERVICES
    done
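The subnet selection in the master script is easier to follow in isolation. The sketch below reproduces the same branch order without `ipcalc`, using plain shell arithmetic. The function names `ip_to_int`, `ip_in_cidr` and `pick_subnets` are invented for this illustration, and like the script it stops at the first local address that overlaps a candidate range.

```shell
A_SUBNET=172.17.0.0/16
B_SUBNET=192.168.0.0/16
C_SUBNET=10.254.0.0/16

# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<EOF
$1
EOF
  echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# Succeed when IP lies inside CIDR, e.g. ip_in_cidr 172.17.0.1 172.17.0.0/16
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}" mask
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# Print "FLANNEL_SUBNET SERVICE_SUBNET" for the given local IPs,
# following the same branch order as the master script.
pick_subnets() {
  local ip
  for ip in "$@"; do
    if ip_in_cidr "$ip" "$A_SUBNET"; then echo "$C_SUBNET $B_SUBNET"; return; fi
    if ip_in_cidr "$ip" "$B_SUBNET"; then echo "$A_SUBNET $C_SUBNET"; return; fi
    if ip_in_cidr "$ip" "$C_SUBNET"; then echo "$A_SUBNET $B_SUBNET"; return; fi
  done
  echo "$A_SUBNET $B_SUBNET"   # defaults when nothing overlaps
}
```

If the master's only non-loopback address is 192.168.0.164, `pick_subnets 192.168.0.164` selects 172.17.0.0/16 for flannel and 10.254.0.0/16 for services, because the node network already occupies 192.168.0.0/16.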
