Scrapy meta: exporting items with the -o command instead of a pipeline

Posted by cxhzy



1. Spider code:

```python
# -*- coding: utf-8 -*-
import scrapy
from tencent1.items import Tencent1Item


class Mytest1Spider(scrapy.Spider):
    name = 'tc1'
    start_urls = ['https://hr.tencent.com/position.php?lid=&tid=&keywords=python&start=0#a/']

    def parse(self, response):
        tr = response.xpath("//tr[@class='even']|//tr[@class='odd']")
        for i in tr:
            # Create a fresh item for each row. A single item shared by all
            # rows would be overwritten before the detail callbacks run.
            item = Tencent1Item()
            item['job_name'] = i.xpath('./td[1]/a/text()').extract_first()
            item['job_type'] = i.xpath('./td[2]/text()').extract_first()
            item['job_num'] = i.xpath('./td[3]/text()').extract_first()
            item['job_place'] = i.xpath('./td[4]/text()').extract_first()
            item['job_time'] = i.xpath('./td[5]/text()').extract_first()
            # print(item)
            url1 = i.xpath('./td[1]/a/@href').extract_first()
            url1 = 'https://hr.tencent.com/{}'.format(url1)
            # Carry the partially filled item to the detail page via meta
            yield scrapy.Request(url=url1, meta={'job_item': item}, callback=self.parse_detail)

        # # Next page URL
        # url_next = response.xpath('//a[@id = "next"]/@href').extract_first()
        # if '50' in url_next:
        #     return
        # url_next = 'https://hr.tencent.com/{}'.format(url_next)
        # print(url_next)
        # yield scrapy.Request(url_next)

    def parse_detail(self, response):
        # Retrieve the item passed from parse() and add the job description
        item = response.meta['job_item']
        data = response.xpath('//ul[@class="squareli"]/li/text()').extract()
        item['job_detail'] = ' '.join(data)
        return item
```
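Why the item must be created inside the loop: Scrapy typically finishes iterating `parse()` and queues every request before any `parse_detail()` callback runs, so if all requests carry the same item object in `meta`, every row ends up with the last row's values. The sketch below is a Scrapy-free simulation of that behaviour, assuming plain dicts stand in for items and requests; `parse`, `parse_detail` and `run` here are hypothetical helpers, not Scrapy APIs.

```python
# Scrapy-free simulation of passing items through Request meta.
# Assumption: "requests" are plain dicts, and all callbacks run only after
# parse() has finished looping, roughly like Scrapy's asynchronous scheduling.

def parse(rows, share_item):
    """Yield fake requests that carry an item in meta, like the spider's parse()."""
    item = {}  # with share_item=True this one dict is reused for every row
    for row in rows:
        if not share_item:
            item = {}  # fixed pattern: a fresh dict per row
        item['job_name'] = row['name']
        yield {'url': row['url'], 'meta': {'job_item': item}}


def parse_detail(request, detail_text):
    """Fill in the detail field on the item carried by the request."""
    item = request['meta']['job_item']
    item['job_detail'] = detail_text
    return item


def run(share_item):
    rows = [
        {'name': 'Python Engineer', 'url': 'detail-1'},
        {'name': 'Data Engineer', 'url': 'detail-2'},
    ]
    # Collect every request first; the callbacks run afterwards.
    requests = list(parse(rows, share_item))
    # Snapshot each returned item, as an exporter would serialize it then.
    return [dict(parse_detail(r, 'details for ' + r['url'])) for r in requests]


if __name__ == '__main__':
    print(run(share_item=True))   # both items report 'Data Engineer'
    print(run(share_item=False))  # each item keeps its own job_name
```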

2. Items code:

```python
import scrapy


class Tencent1Item(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    job_name = scrapy.Field()
    job_type = scrapy.Field()
    job_num = scrapy.Field()
    job_place = scrapy.Field()
    job_time = scrapy.Field()
    job_detail = scrapy.Field()
```

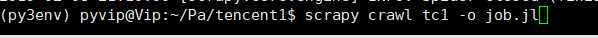

3. Command (job.jl is the output file name): `scrapy crawl tc1 -o job.jl`
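With the `.jl` extension, Scrapy's feed export writes JSON Lines: one JSON object per item, one item per line. A minimal sketch for loading the result back, assuming the field names from `Tencent1Item`; `parse_jsonlines` and `read_jsonlines` are hypothetical helpers, not part of Scrapy.

```python
import json
import os


def parse_jsonlines(lines):
    """Decode an iterable of JSON Lines strings into a list of dicts."""
    items = []
    for line in lines:
        line = line.strip()
        if line:  # skip blank lines
            items.append(json.loads(line))
    return items


def read_jsonlines(path):
    """Load every item from a JSON Lines feed such as job.jl."""
    with open(path, encoding='utf-8') as f:
        return parse_jsonlines(f)


if __name__ == '__main__':
    if os.path.exists('job.jl'):  # only present after the crawl has run
        for item in read_jsonlines('job.jl'):
            print(item.get('job_name'), item.get('job_place'))
```

By default Scrapy's JSON exporters escape non-ASCII text as `\uXXXX`, which `json.loads` decodes transparently; setting `FEED_EXPORT_ENCODING = 'utf-8'` in settings.py keeps Chinese job names readable in the file itself. On Scrapy 2.1 or later, the same export can also be configured without `-o` via `FEEDS = {'job.jl': {'format': 'jsonlines'}}`.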
