Docker logging solutions

Posted charlieroro


By default, docker logs shows a command's standard output (STDOUT) and standard error (STDERR). The following uses echo.sh and a Dockerfile to build an image named echo:v1; echo.sh prints "hello" in an endless loop.

    # cat echo.sh
    #!/bin/sh
    while true;do echo hello;sleep 2;done

    # cat Dockerfile
    FROM busybox:latest
    WORKDIR /home
    COPY echo.sh /home
    CMD [ "sh", "-c", "/home/echo.sh" ]

    # chmod 777 echo.sh
    # docker build -t echo:v1 .

Run the image. In the /proc directory of the corresponding container process you can see that the process has 4 open files; the one with fd 10 is the shell script being run.

    # ps -ef|grep echo
    root 11198 11181 0 09:04 pts/0 00:00:01 /bin/sh /home/echo.sh
    root 24346 21490 0 12:30 pts/5 00:00:00 grep --color=auto echo
    # cd /proc/11198/fd
    # ll
    lrwx------. 1 root root 64 Jan 28 12:30 0 -> /dev/pts/0
    lrwx------. 1 root root 64 Jan 28 12:30 1 -> /dev/pts/0
    lr-x------. 1 root root 64 Jan 28 12:30 10 -> /home/echo.sh
    lrwx------. 1 root root 64 Jan 28 12:30 2 -> /dev/pts/0

Run docker logs -f CONTAINER_ID to follow the container's output. The file with fd 1 is the output that docker logs records, so a custom string can be written into it directly, for example echo "你好" > 1 from inside /proc/11198/fd. The docker logs output then shows:

    hello
    hello
    你好
    hello
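The fd trick above can be reproduced without Docker. The following is only a sketch under assumptions, not a description of what Docker does internally: a named pipe stands in for the container's pseudo-terminal, a sleep process stands in for echo.sh, and the file names are made up for the example. It relies on Linux's /proc/PID/fd.

```shell
#!/bin/sh
# Linux only: relies on /proc/<pid>/fd.
workdir=$(mktemp -d)
mkfifo "$workdir/stdout.pipe"

# Reader: stands in for whatever consumes the process's stdout.
cat "$workdir/stdout.pipe" > "$workdir/captured.log" &
reader=$!

# Writer: a long-running process whose fd 1 is the pipe (stand-in for echo.sh).
sleep 30 > "$workdir/stdout.pipe" &
writer=$!

sleep 1   # give both ends time to open the pipe

# Write into the writer's stdout from outside, like `echo ... > 1` above.
echo "injected from outside" > "/proc/$writer/fd/1"

kill "$writer"    # closes the last write end, so the reader sees EOF
wait "$reader"
cat "$workdir/captured.log"
```

The injected line ends up in the same place as the process's own output, which is why a string written to fd 1 of the container process also appears in docker logs.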

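Docker supports multiple logging driver plugins. A log driver can be passed on the command line when the Docker daemon starts, or set for dockerd in Docker's daemon.json file. Docker uses the json-file log driver by default. The following command shows the log driver currently in use:

    # docker info --format '{{.LoggingDriver}}'
    json-file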
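As a minimal sketch of the daemon.json route mentioned above, the snippet below writes a daemon.json that makes journald the default driver. It writes to a scratch directory so it is safe to run. On a typical Linux install the real file is /etc/docker/daemon.json, which is an assumption about your setup, and dockerd has to be restarted before the setting applies to newly created containers.

```shell
#!/bin/sh
# Sketch only: writes to a scratch directory, not to /etc/docker.
conf_dir=$(mktemp -d)

# "log-driver" sets the daemon-wide default; the command-line equivalent
# is starting the daemon with: dockerd --log-driver=journald
cat > "$conf_dir/daemon.json" <<'EOF'
{
  "log-driver": "journald"
}
EOF

cat "$conf_dir/daemon.json"
```

A driver passed to docker run with --log-driver still overrides this default for that one container.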

The following uses journald as the log driver:

    # docker run -itd --log-driver=journald echo:v1
    8a8c828fa673c0bea8005d3f53e50b2112b4c8682d7e04100affeba25ebd588c

    # docker ps
    CONTAINER ID   IMAGE     COMMAND                 CREATED         STATUS         PORTS   NAMES
    8a8c828fa673   echo:v1   "sh -c /home/echo.sh"   2 minutes ago   Up 2 minutes           vibrant_curie

    # journalctl CONTAINER_NAME=vibrant_curie --all

journalctl shows the following log entries; 8a8c828fa673 is the ID of the container above.

    -- Logs begin at Fri 2019-01-25 10:15:42 CST, end at Mon 2019-01-28 13:12:55 CST. --
    Jan 28 13:08:47 . 8a8c828fa673[9709]: hello
    Jan 28 13:08:49 . 8a8c828fa673[9709]: hello
    ...

Inspecting the container's configuration with docker inspect also shows that the log driver is now journald:

    "LogConfig": {
        "Type": "journald",
        "Config": {}
    },

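In production, a log collection tool is normally used to collect and parse service logs. The following uses fluentd to collect logs. fluentd has many plugins and supports a wide range of log inputs and outputs; see the official site for how to use the plugins. Pull the official image:

    docker pull fluent/fluentd

First create a fluentd configuration file that accepts logs from remote senders and prints them to standard output:

    # cat fluentd.conf
    <source>
      @type forward
    </source>
    <match *>
      @type stdout
    </match>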

Create two Docker images, echo:v1 and echo:v2, with the following echo.sh scripts.

echo.sh for echo:v1:

    #!/bin/sh
    while true;do
      echo "docker1 -> 11111"
      echo "docker1,this is docker1"
      echo "docker1,12132*)("
      sleep 2
    done

echo.sh for echo:v2:

    #!/bin/sh
    while true;do
      echo "docker2 -> 11111"
      echo "docker2,this is docker1"
      echo "docker2,12132*)("
      sleep 2
    done

Start fluentd first, then start echo:v1. fluentd mounts the local file /home/fluentd/fluentd.conf in place of its default configuration file, and fluentd-address specifies the address of fluentd. For more options, see the documentation for the fluentd logging driver.

    # docker run -it --rm -p 24224:24224 -v /home/fluentd/fluentd.conf:/fluentd/etc/fluentd.conf -e FLUENTD_CONF=fluentd.conf fluent/fluentd:latest
    # docker run --rm --name=docker1 --log-driver=fluentd --log-opt tag="{{.Name}}" --log-opt fluentd-address=192.168.80.189:24224 echo:v1

fluentd binds to 0.0.0.0 by default, so it accepts data on every interface IP of the host, and it listens on the specified port 24224. On startup fluentd prints:

    [info]: #0 listening port port=24224 bind="0.0.0.0"

The fluentd console shows the output forwarded from echo:v1. The docker1 that follows each timestamp is the tag set when the container was started. Docker supports tag templates; see Customize log driver output.

    2019-01-29 07:46:24.000000000 +0000 docker1: {"container_name":"/docker1","source":"stdout","log":"docker1 -> 11111","container_id":"74c0af9defd10d33db0e197f0dd3af382a5c06a858f06bdd2f0f49e43bf0a25e"}
    2019-01-29 07:46:24.000000000 +0000 docker1: {"container_id":"74c0af9defd10d33db0e197f0dd3af382a5c06a858f06bdd2f0f49e43bf0a25e","container_name":"/docker1","source":"stdout","log":"docker1,this is docker1"}
    2019-01-29 07:46:24.000000000 +0000 docker1: {"container_id":"74c0af9defd10d33db0e197f0dd3af382a5c06a858f06bdd2f0f49e43bf0a25e","container_name":"/docker1","source":"stdout","log":"docker1,12132*)("}
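Each line printed by the stdout output has the shape "time tag: record". As a rough sketch, the hypothetical helper below (summarize_records is not part of fluentd or Docker) reduces such lines to "tag log" with sed. It assumes the log text contains no escaped double quotes; a real JSON parser would be the safer choice for anything beyond a quick look.

```shell
#!/bin/sh
# Hypothetical helper: print "<tag> <log>" for each fluentd stdout line on stdin.
# Assumes the "log" value contains no escaped double quotes.
summarize_records() {
    sed -n 's/^[^ ]* [^ ]* [^ ]* \([^ ]*\): {.*"log":"\([^"]*\)".*$/\1 \2/p'
}

# Sample line taken from the output above (container_id omitted for brevity).
printf '%s\n' '2019-01-29 07:46:24.000000000 +0000 docker1: {"container_name":"/docker1","source":"stdout","log":"docker1 -> 11111"}' \
    | summarize_records
```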

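In the scenario above, echo:v1 exits when fluentd exits. Passing fluentd-async-connect when starting the container avoids container failures caused by fluentd exiting or not having been started. With the command below, the container starts successfully even when fluentd is not running:

    docker run --rm --name=docker1 --log-driver=fluentd --log-opt tag="docker1.{{.Name}}" --log-opt fluentd-async-connect=true --log-opt fluentd-address=192.168.80.189:24224 echo:v1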

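The scenarios above send the logs straight to standard output. A plugin can also write them to a file. With the configuration below, fluentd writes the logs under /home/fluent. The match pattern selects the output of echo:v1 (tag="docker1.{{.Name}}"), so the output of echo:v2 is filtered out.

    # cat fluentd.conf
    <source>
      @type forward
    </source>
    <match docker1.*>
      @type file
      path /home/fluent/
    </match>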
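The three match patterns used in this article (*, docker1.* and **) behave differently: in fluentd, * matches exactly one dot-separated tag part, while ** matches zero or more parts. The sketch below is a simplified model of that rule written for illustration, not fluentd's own code. tag_matches is a hypothetical helper, it handles only the * and ** wildcards, and the tag docker2.docker2 assumes echo:v2 was started with a matching name and tag.

```shell
#!/bin/sh
# Simplified model of fluentd tag matching. Assumptions: only "*" and "**"
# wildcards are handled, and the pattern does not contain "@".
tag_matches() {
    pattern=$1
    tag=$2
    # Translate the pattern into an extended regular expression:
    #   "."    -> literal dot
    #   ".**"  -> zero or more further tag parts
    #   "**"   -> any tag
    #   "*"    -> exactly one tag part (no dots)
    # "@" is a temporary placeholder for the regex "*" quantifier.
    regex=$(printf '%s\n' "$pattern" | sed \
        -e 's/\./\\./g' \
        -e 's/\\\.\*\*/(\\.[^.]+)@/g' \
        -e 's/\*\*/.@/g' \
        -e 's/\*/[^.]+/g' \
        -e 's/@/*/g')
    printf '%s\n' "$tag" | grep -Eq "^${regex}\$"
}

for tag in docker1.docker1 docker2.docker2; do
    if tag_matches 'docker1.*' "$tag"; then
        echo "$tag: matched by docker1.*"
    else
        echo "$tag: not matched by docker1.*"
    fi
done
```

Under this rule, the earlier `<match *>` only works because the tag was the single-part container name; once the tag becomes docker1.{{.Name}}, a two-part pattern such as docker1.* or the catch-all ** is needed.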

Start fluentd as follows:

    # docker run -it --rm -p 24224:24224 -v /home/fluentd/fluentd.conf:/fluentd/etc/fluentd.conf -v /home/fluent:/home/fluent -e FLUENTD_CONF=fluentd.conf fluent/fluentd:latest

The generated log files appear under /home/fluent:

    # ll
    total 8
    -rw-r--r--. 1 charlie charlie 2404 Jan 29 17:14 buffer.b58095399160f67b3b56a8f76791e3f1a.log
    -rw-r--r--. 1 charlie charlie   68 Jan 29 17:14 buffer.b58095399160f67b3b56a8f76791e3f1a.log.meta

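The above showed how to display docker logs through fluentd's stdout output and how to persist logs with the file output. In production, elasticsearch is generally used as the log storage and search engine, with kibana providing the interface for viewing the logs. Images and documentation for each version of elasticsearch and kibana are published by Elastic (the images used below come from docker.elastic.co); this walkthrough uses version 6.5 of both. Note: before starting elasticsearch, set sysctl -w vm.max_map_count=262144.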
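A small pre-flight check can confirm the kernel setting before starting elasticsearch. This is a sketch: map_count_ok is a hypothetical helper, and it reads /proc/sys/vm/max_map_count, so it only reports a real value on Linux.

```shell
#!/bin/sh
# Value required by the note above.
REQUIRED_MAX_MAP_COUNT=262144

# Hypothetical helper: succeeds when the given value (or, with no argument,
# the live kernel setting) is at least the required value.
map_count_ok() {
    current=${1:-$(cat /proc/sys/vm/max_map_count 2>/dev/null)}
    [ -n "$current" ] && [ "$current" -ge "$REQUIRED_MAX_MAP_COUNT" ]
}

if map_count_ok; then
    echo "vm.max_map_count is high enough"
else
    echo "run as root: sysctl -w vm.max_map_count=$REQUIRED_MAX_MAP_COUNT"
fi
```

sysctl -w only lasts until the next reboot; adding vm.max_map_count=262144 to /etc/sysctl.conf makes the setting permanent.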

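To send logs from fluentd to elasticsearch, the elasticsearch plugin must be installed in the fluentd image. Alternatively, pull a Docker image that already includes the elasticsearch plugin, such as k8s.gcr.io/fluentd-elasticsearch; if you cannot access k8s.gcr.io/fluentd-elasticsearch, pull the image from a mirror instead.

Use docker-compose to start elasticsearch, kibana and fluentd. The files are laid out as follows:

    # ll
    -rw-r--r--. 1 root root 1287 Jan 31 16:51 docker-compose.yml
    -rw-r--r--. 1 root root  196 Jan 30 11:56 elasticsearch.yml
    -rw-r--r--. 1 root root  332 Jan 31 16:48 fluentd.conf
    -rw-r--r--. 1 root root 1408 Jan 30 12:07 kibana.yml

For installing docker-compose on CentOS, see the docker-compose installation documentation. docker-compose.yml and the configuration of each component are shown below. All components are deployed on the same bridge network, esnet. Note that kibana.yml and fluentd.conf use the elasticsearch service name as the host. For the full list of kibana settings, see the kibana.yml documentation.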

    # cat docker-compose.yml
    version: '2'
    services:
      elasticsearch:
        image: docker.elastic.co/elasticsearch/elasticsearch:6.5.4
        container_name: elasticsearch
        environment:
          - http.host=0.0.0.0
          - transport.host=0.0.0.0
          - "ES_JAVA_OPTS=-Xms1g -Xmx1g"
        volumes:
          - esdata:/usr/share/elasticsearch/data
          - ./elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
        ports:
          - 9200:9200
          - 9300:9300
        networks:
          - esnet
        ulimits:
          memlock:
            soft: -1
            hard: -1
          nofile:
            soft: 65536
            hard: 65536
        mem_limit: 2g
        cap_add:
          - IPC_LOCK
      kibana:
        image: docker.elastic.co/kibana/kibana:6.5.4
        depends_on:
          - elasticsearch
        container_name: kibana
        environment:
          - SERVER_HOST=0.0.0.0
        volumes:
          - ./kibana.yml:/usr/share/kibana/config/kibana.yml
        ports:
          - 5601:5601
        networks:
          - esnet
      flunted:
        image: fluentd-elasticsearch:v2.4.0
        depends_on:
          - elasticsearch
        container_name: flunted
        environment:
          - FLUENTD_CONF=fluentd.conf
        volumes:
          - ./fluentd.conf:/etc/fluent/fluent.conf
        ports:
          - 24224:24224
        networks:
          - esnet
    volumes:
      esdata:
        driver: local
    networks:
      esnet:

    # cat elasticsearch.yml
    cluster.name: "chimeo-docker-cluster"
    node.name: "chimeo-docker-single-node"
    network.host: 0.0.0.0

    # cat kibana.yml
    #kibana is served by a back end server. This setting specifies the port to use.
    server.port: 5601
    # Specifies the address to which the Kibana server will bind. IP addresses and host names are both valid values.
    # The default is 'localhost', which usually means remote machines will not be able to connect.
    # To allow connections from remote users, set this parameter to a non-loopback address.
    server.host: "localhost"
    # The Kibana server's name. This is used for display purposes.
    server.name: "charlie"
    # The URLs of the Elasticsearch instances to use for all your queries.
    elasticsearch.url: "http://elasticsearch:9200"
    # Kibana uses an index in Elasticsearch to store saved searches, visualizations and
    # dashboards. Kibana creates a new index if the index doesn't already exist.
    kibana.index: ".kibana"
    # Time in milliseconds to wait for Elasticsearch to respond to pings. Defaults to the value of
    # the elasticsearch.requestTimeout setting.
    elasticsearch.pingTimeout: 5000
    # Time in milliseconds to wait for responses from the back end or Elasticsearch. This value
    # must be a positive integer.
    elasticsearch.requestTimeout: 30000
    # Time in milliseconds to wait for Elasticsearch at Kibana startup before retrying.
    elasticsearch.startupTimeout: 10000
    # Set the interval in milliseconds to sample system and process performance
    # metrics. Minimum is 100ms. Defaults to 5000.
    ops.interval: 5000

    # cat fluentd.conf
    <source>
      @type forward
    </source>
    <match **>
      @type elasticsearch
      log_level info
      include_tag_key true
      host elasticsearch
      port 9200
      logstash_format true
      chunk_limit_size 10M
      flush_interval 5s
      max_retry_wait 30
      disable_retry_limit
      num_threads 8
    </match>

Start everything with the following command:

    # docker-compose up

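Start a container that uses the fluentd log driver. Note: while testing, you can leave out fluentd-async-connect=true, which makes it easy to tell whether the container can actually connect to fluentd.

    docker run -it --rm --name=docker1 --log-driver=fluentd --log-opt tag="fluent.{{.Name}}" --log-opt fluentd-async-connect=true --log-opt fluentd-address=127.0.0.1:24224 echo:v1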

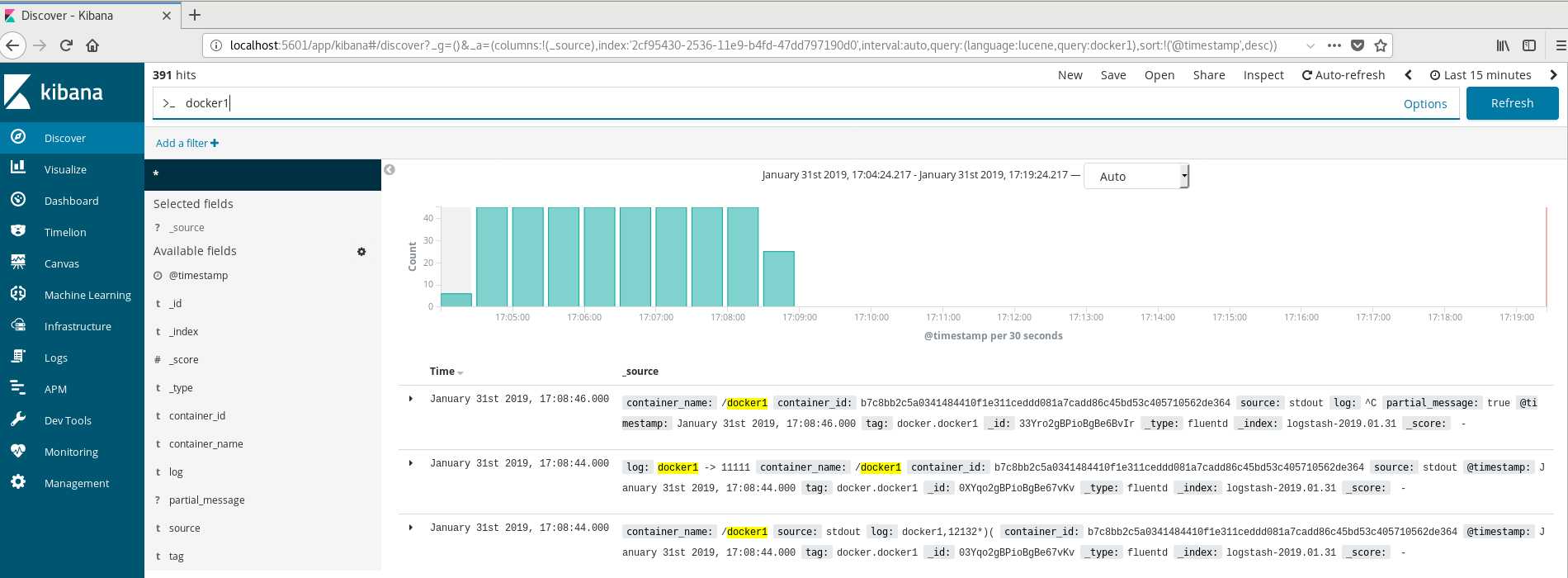

Open a local browser and go to kibana's default URL, http://localhost:5601. After creating an index pattern, the log output of the echo:v1 container is visible.
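Before creating the index pattern it helps to know which index to expect. With logstash_format true, the elasticsearch output plugin writes to daily indices named logstash-YYYY.MM.DD. The sketch below assumes the plugin's default prefix and UTC dates, and that elasticsearch is published on localhost:9200 as in the compose file above; both function names are hypothetical.

```shell
#!/bin/sh
# Name of the index today's records should land in, assuming
# logstash_format true with the default "logstash" prefix and UTC dates.
todays_index() {
    date -u +logstash-%Y.%m.%d
}

# List the matching indices. Not called here because it needs a running
# elasticsearch; assumes it is reachable on localhost:9200.
list_log_indices() {
    curl -s "http://localhost:9200/_cat/indices/logstash-*?v"
}

echo "expect an index named $(todays_index); use logstash-* as the index pattern"
```

If list_log_indices returns nothing, fluentd has probably not flushed its first chunk yet or could not reach elasticsearch.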

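With kubernetes, fluentd is usually deployed as a DaemonSet on every node to collect that node's logs. It can also run as a sidecar to collect the logs of the service containers in the same pod. For more, see Logging Architecture.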

TIPS:

- fluentd pushes data to elasticsearch in units of chunks. If a chunk is too large, elasticsearch may reject it because the payload is too big, so chunk_limit_size needs to be set. See the Fluentd-ElasticSearch configuration.
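The fluentd.conf used earlier sets chunk_limit_size next to older flat buffer parameters. As a hedged sketch, the snippet below writes the same match block in the fluentd v1 style, where buffering options live in a buffer section. The mapping (max_retry_wait to retry_max_interval, disable_retry_limit to retry_forever, num_threads to flush_thread_count) follows the fluentd v1 buffer documentation; the values are copied from the earlier file, and the output goes to a scratch directory rather than the mounted config path.

```shell
#!/bin/sh
# Sketch only: writes an alternative fluentd.conf to a scratch directory.
conf_dir=$(mktemp -d)

cat > "$conf_dir/fluentd.conf" <<'EOF'
<source>
  @type forward
</source>
<match **>
  @type elasticsearch
  include_tag_key true
  host elasticsearch
  port 9200
  logstash_format true
  <buffer>
    # upper bound on the size of each chunk pushed to elasticsearch
    chunk_limit_size 10M
    flush_interval 5s
    retry_max_interval 30
    retry_forever true
    flush_thread_count 8
  </buffer>
</match>
EOF

cat "$conf_dir/fluentd.conf"
```

Whether the image in use accepts this form depends on its fluentd version, so check that before swapping it in.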

References:

https://stackoverflow.com/questions/44002643/how-to-use-the-official-docker-elasticsearch-container
