Crawler -- Using Scrapy to crawl Qiushibaike and persist the data to a txt file

Posted by sunian


Preface: this article was compiled by the editors of 小常识网 (cha138.com) and covers using Scrapy to crawl Qiushibaike and persist the results to a txt file.

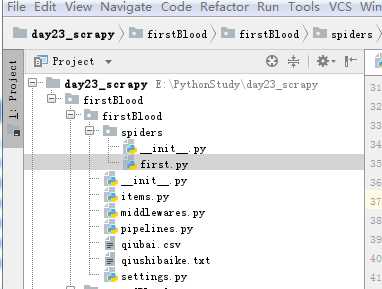

Project directory structure

Source of the `first` spider under `spiders`

```python
# -*- coding: utf-8 -*-
import scrapy

from firstBlood.items import FirstbloodItem


class FirstSpider(scrapy.Spider):
    # Name of the spider; when a project has several spiders,
    # the name selects which one to run
    name = 'first'
    # allowed_domains: domains the spider may request; it conflicts with
    # start_urls if the start URLs are outside these domains
    # allowed_domains = ['www.xxx.com']
    # start_urls: list of URLs that Scrapy requests automatically
    start_urls = ['https://www.qiushibaike.com/text/']

    def parse(self, response):
        '''
        Parse the response to a request.
        Regex or XPath both work; since Scrapy has XPath built in,
        XPath is recommended. xpath() returns Selector objects.
        :param response:
        :return:
        '''
        all_data = []
        div_list = response.xpath('//div[@id="content-left"]/div')
        for div in div_list:
            # author = div.xpath('./div[1]/a[2]/h2/text()')
            # This is not the raw page text but a list of Selector objects;
            # we only need the data held by the Selector:
            # author = author[0].extract()
            # This fails for anonymous users, whose markup differs from
            # that of logged-in users.

            # Improved version: the | operator matches both layouts
            author = div.xpath('./div[1]/a[2]/h2/text() | ./div[1]/span[2]/h2/text()')[0].extract().strip()
            content = div.xpath('.//div[@class="content"]/span//text()').extract()
            content = ''.join(content).strip()
            # print(author + ':' + content)

            # Storage via a terminal command:
            # dic = {
            #     'author': author,
            #     'content': content
            # }
            # all_data.append(dic)
            # return all_data

            # Two ways to persist the data:
            # 1. Terminal command: parse() returns the data, then run
            #    scrapy crawl first -o qiubai.csv --nolog
            #    This only supports formats such as json, csv and xml.
            # 2. Item pipeline:
            item = FirstbloodItem()  # a new item is instantiated on every iteration
            item['author'] = author
            item['content'] = content
            yield item  # hand the item over to the pipeline
```
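The terminal-command approach (`scrapy crawl first -o qiubai.csv`) hands the collected items to Scrapy's feed exporter. As a rough, stdlib-only sketch of what ends up in the CSV file, assuming each item is a dict with `author` and `content` keys, the function below writes a header row and then one row per item. `items_to_csv` is a hypothetical helper and the sample rows are made up; it only approximates Scrapy's CSV exporter and is not its implementation.

```python
import csv
import io


def items_to_csv(items, fieldnames=("author", "content")):
    """Write dict items as CSV text: a header row, then one row per item.

    A simplified stand-in for what `scrapy crawl first -o qiubai.csv`
    produces; Scrapy's own exporter handles more options.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(fieldnames))
    writer.writeheader()
    for item in items:
        writer.writerow(item)
    return buffer.getvalue()


if __name__ == "__main__":
    # Hypothetical sample data shaped like the dicts built in parse()
    sample = [
        {"author": "user_a", "content": "first joke"},
        {"author": "user_b", "content": "second, with a comma"},
    ]
    print(items_to_csv(sample))
```

The csv module quotes fields that contain commas, so joke text with punctuation does not break the column layout.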

Items file

```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class FirstbloodItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    # An Item is a general-purpose container: each field can hold
    # a value of any type (strings, JSON, etc.)
    author = scrapy.Field()
    content = scrapy.Field()
```
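An Item field can hold a value of any type, but `scrapy.Item` only accepts the field names declared with `scrapy.Field()`; assigning an undeclared key raises `KeyError`. The stdlib-only class below is a hypothetical stand-in that mimics just that behaviour so it runs without Scrapy installed. `DeclaredFieldsItem` and `SketchFirstbloodItem` are illustrative names, not part of Scrapy.

```python
class DeclaredFieldsItem(dict):
    """Hypothetical stand-in for scrapy.Item (not Scrapy's implementation).

    Values may be of any type, but only names listed in `fields` can be
    set, mirroring the KeyError scrapy.Item raises for undeclared fields.
    """

    fields = ()

    def __setitem__(self, key, value):
        if key not in self.fields:
            raise KeyError(f"{type(self).__name__} does not support field: {key}")
        super().__setitem__(key, value)


class SketchFirstbloodItem(DeclaredFieldsItem):
    # Same two fields as FirstbloodItem
    fields = ("author", "content")


if __name__ == "__main__":
    item = SketchFirstbloodItem()
    item["author"] = "user_a"               # declared field: accepted
    item["content"] = {"text": "any type"}  # any value type is accepted
    try:
        item["likes"] = 10                  # undeclared field: rejected
    except KeyError as exc:
        print("rejected:", exc)
```

This is why a new key used in the spider, such as a like count, must first be added to `FirstbloodItem` as another `scrapy.Field()`.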

Pipeline file

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

# All code related to persistent storage belongs in this file


class FirstbloodPipeline(object):
    fp = None

    def open_spider(self, spider):
        # Called once when the spider opens, so the file is opened only once
        print('Spider started')
        self.fp = open('./qiushibaike.txt', 'w', encoding='utf-8')

    def process_item(self, item, spider):
        '''
        Process an item; called once for every item the spider yields.
        :param item:
        :param spider:
        :return:
        '''
        # Add a newline so each record sits on its own line
        self.fp.write(item['author'] + ':' + item['content'] + '\n')
        print(item['author'], item['content'])
        return item

    def close_spider(self, spider):
        # Called once when the spider closes
        print('Spider finished')
        self.fp.close()
```
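Scrapy calls `open_spider()` once when the spider opens, `process_item()` once for every item the spider yields, and `close_spider()` once at the end, which is why the pipeline keeps a single file handle open for the whole crawl. The stdlib-only sketch below replays that call order without Scrapy. `TxtPipelineSketch`, its `path` parameter and `run_pipeline` are hypothetical names; Scrapy's real engine does considerably more (asynchronous scheduling, error handling).

```python
import os
import tempfile


class TxtPipelineSketch:
    """Simplified copy of FirstbloodPipeline with the output path as a parameter."""

    def __init__(self, path):
        self.path = path  # hypothetical; the original hard-codes ./qiushibaike.txt
        self.fp = None

    def open_spider(self, spider):
        self.fp = open(self.path, "w", encoding="utf-8")

    def process_item(self, item, spider):
        self.fp.write(item["author"] + ":" + item["content"] + "\n")
        return item

    def close_spider(self, spider):
        self.fp.close()


def run_pipeline(pipeline, items, spider=None):
    """Mimic the order in which Scrapy calls the pipeline hooks (hypothetical helper)."""
    pipeline.open_spider(spider)                 # once, when the spider opens
    try:
        for item in items:
            pipeline.process_item(item, spider)  # once per yielded item
    finally:
        pipeline.close_spider(spider)            # once, when the spider closes


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "qiushibaike.txt")
    run_pipeline(TxtPipelineSketch(path), [
        {"author": "user_a", "content": "first joke"},
        {"author": "user_b", "content": "second joke"},
    ])
    with open(path, encoding="utf-8") as f:
        print(f.read())
```

Opening the file per item in `process_item()` would also work, but with mode `'w'` it would overwrite earlier records, which is why the open and close live in the once-per-crawl hooks.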

Settings file

```python
# -*- coding: utf-8 -*-

# Scrapy settings for firstBlood project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://doc.scrapy.org/en/latest/topics/settings.html
#     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'firstBlood'

SPIDER_MODULES = ['firstBlood.spiders']
NEWSPIDER_MODULE = 'firstBlood.spiders'


# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.77 Safari/537.36'

# Obey robots.txt rules
# The project template sets this to True; setting it to False makes the
# crawler ignore robots.txt (to get past anti-crawling restrictions)
ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
#DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
#DEFAULT_REQUEST_HEADERS = {
#   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#   'Accept-Language': 'en',
#}

# Enable or disable spider middlewares
# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'firstBlood.middlewares.FirstbloodSpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
#    'firstBlood.middlewares.FirstbloodDownloaderMiddleware': 543,
#}

# Enable or disable extensions
# See https://doc.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'firstBlood.pipelines.FirstbloodPipeline': 300,  # 300 is the priority: lower values run first
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
```
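The value 300 in `ITEM_PIPELINES` is an ordering key: when several pipelines are enabled, each item passes through them from the lowest value to the highest, and the Scrapy docs suggest keeping the values in the 0-1000 range. The stdlib sketch below models only that ordering. `pipeline_order` and `run_in_order` are hypothetical helpers, plain functions stand in for each pipeline's `process_item()`, and `StripWhitespacePipeline` is a made-up second pipeline that does not exist in this project.

```python
def pipeline_order(item_pipelines):
    """Return pipeline paths sorted by their ITEM_PIPELINES value, lowest first."""
    return [path for path, _ in sorted(item_pipelines.items(), key=lambda kv: kv[1])]


def run_in_order(item_pipelines, handlers, item):
    """Pass one item through the handler of each pipeline path, in priority order.

    `handlers` maps a pipeline path to a plain function standing in for that
    pipeline's process_item() (hypothetical, for illustration only).
    """
    for path in pipeline_order(item_pipelines):
        item = handlers[path](item)
    return item


if __name__ == "__main__":
    ITEM_PIPELINES = {
        "firstBlood.pipelines.FirstbloodPipeline": 300,
        # Hypothetical extra pipeline, not part of the original project
        "firstBlood.pipelines.StripWhitespacePipeline": 100,
    }
    handlers = {
        # Runs first (100): trims whitespace from every field
        "firstBlood.pipelines.StripWhitespacePipeline":
            lambda item: {k: v.strip() for k, v in item.items()},
        # Runs second (300): adds the line that would be written to the txt file
        "firstBlood.pipelines.FirstbloodPipeline":
            lambda item: dict(item, line=item["author"] + ":" + item["content"]),
    }
    print(pipeline_order(ITEM_PIPELINES))
    print(run_in_order(ITEM_PIPELINES, handlers, {"author": " user_a\n", "content": " a joke "}))
```

Because every pipeline must return the item for the next one to receive it, `FirstbloodPipeline.process_item()` ends with `return item`.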
