关于高并发下kafka producer send异步发送耗时问题的分析

Posted dafanjoy

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了关于高并发下kafka producer send异步发送耗时问题的分析相关的知识,希望对你有一定的参考价值。

最近开发网关服务的过程当中,需要用到kafka转发消息与保存日志,在进行压测的过程中由于是多线程并发操作kafka producer 进行异步send,发现send耗时有时会达到几十毫秒的阻塞,很大程度上上影响了并发的性能,而在后续的测试中发现单线程发送反而比多线程发送效率高出几倍。所以就对kafka API send 的源码进行了一下跟踪和分析,在此总结记录一下。

首先看springboot下 kafka producer 的使用

在config中进行配置,向IOC容器中注入DefaultKafkaProducerFactory生产者工厂的实例

@Bean public ProducerFactory<Object, Object> producerFactory() { return new DefaultKafkaProducerFactory<>(producerConfigs()); }

创建producer

this.producer = producerFactory.createProducer();

大家都知道springboot下IOC容器管理的实例默认都是单例模式;而DefaultKafkaProducerFactory本身也是一个单例工厂

@Override public Producer<K, V> createProducer() { if (this.transactionIdPrefix != null) { return createTransactionalProducer(); } if (this.producer == null) { synchronized (this) { if (this.producer == null) { this.producer = new CloseSafeProducer<K, V>(createKafkaProducer()); } } } return this.producer; }

我们创建的producer也是个单例。

接下来就是具体的发送,用过kafka的小伙伴都知道producer.send是个异步操作,会返回一个Future<RecordMetadata> 类型的结果。那么为什么单线程和多线程send效率会较大的差距呢,我们进入KafkaProducer内部看下producer.send的具体源码实现来找下答案

private Future<RecordMetadata> doSend(ProducerRecord<K, V> record, Callback callback) { TopicPartition tp = null; try { //保证主题的元数据可用 ClusterAndWaitTime clusterAndWaitTime = waitOnMetadata(record.topic(), record.partition(), maxBlockTimeMs); long remainingWaitMs = Math.max(0, maxBlockTimeMs - clusterAndWaitTime.waitedOnMetadataMs); Cluster cluster = clusterAndWaitTime.cluster; byte[] serializedKey; try { //序列化key serializedKey = keySerializer.serialize(record.topic(), record.headers(), record.key()); } catch (ClassCastException cce) { throw new SerializationException("Can‘t convert key of class " + record.key().getClass().getName() + " to class " + producerConfig.getClass(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG).getName() + " specified in key.serializer", cce); } byte[] serializedValue; try { //序列化Value serializedValue = valueSerializer.serialize(record.topic(), record.headers(), record.value()); } catch (ClassCastException cce) { throw new SerializationException("Can‘t convert value of class " + record.value().getClass().getName() + " to class " + producerConfig.getClass(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG).getName() + " specified in value.serializer", cce); } //计算出具体的partition int partition = partition(record, serializedKey, serializedValue, cluster); tp = new TopicPartition(record.topic(), partition); setReadOnly(record.headers()); Header[] headers = record.headers().toArray(); int serializedSize = AbstractRecords.estimateSizeInBytesUpperBound(apiVersions.maxUsableProduceMagic(), compressionType, serializedKey, serializedValue, headers); ensureValidRecordSize(serializedSize); long timestamp = record.timestamp() == null ? time.milliseconds() : record.timestamp(); log.trace("Sending record {} with callback {} to topic {} partition {}", record, callback, record.topic(), partition); // producer callback will make sure to call both ‘callback‘ and interceptor callback Callback interceptCallback = new InterceptorCallback<>(callback, this.interceptors, tp); if (transactionManager != null && transactionManager.isTransactional()) transactionManager.maybeAddPartitionToTransaction(tp); //向队列容器中添加数据 RecordAccumulator.RecordAppendResult result = accumulator.append(tp, timestamp, serializedKey, serializedValue, headers, interceptCallback, remainingWaitMs); if (result.batchIsFull || result.newBatchCreated) { log.trace("Waking up the sender since topic {} partition {} is either full or getting a new batch", record.topic(), partition); this.sender.wakeup(); } return result.future; // handling exceptions and record the errors; // for API exceptions return them in the future, // for other exceptions throw directly } catch (ApiException e) { log.debug("Exception occurred during message send:", e); if (callback != null) callback.onCompletion(null, e); this.errors.record(); this.interceptors.onSendError(record, tp, e); return new FutureFailure(e); } catch (InterruptedException e) { this.errors.record(); this.interceptors.onSendError(record, tp, e); throw new InterruptException(e); } catch (BufferExhaustedException e) { this.errors.record(); this.metrics.sensor("buffer-exhausted-records").record(); this.interceptors.onSendError(record, tp, e); throw e; } catch (KafkaException e) { this.errors.record(); this.interceptors.onSendError(record, tp, e); throw e; } catch (Exception e) { // we notify interceptor about all exceptions, since onSend is called before anything else in this method this.interceptors.onSendError(record, tp, e); throw e; } }

这里除了前面做的一些序列化操作和判断,最关键的就是向队列容器中执行添加数据操作

RecordAccumulator.RecordAppendResult result = accumulator.append(tp, timestamp, serializedKey,

serializedValue, headers, interceptCallback, remainingWaitMs);

accumulator是RecordAccumulator这个类的一个实例,RecordAccumulator类是一个队列容器类;它的内部维护了一个ConcurrentMap,每一个TopicPartition都对应一个专属的消息队列。

private final ConcurrentMap<TopicPartition, Deque<ProducerBatch>> batches;

我们进入accumulator.append内部看下具体的实现

public RecordAppendResult append(TopicPartition tp, long timestamp, byte[] key, byte[] value, Header[] headers, Callback callback, long maxTimeToBlock) throws InterruptedException { // We keep track of the number of appending thread to make sure we do not miss batches in // abortIncompleteBatches(). appendsInProgress.incrementAndGet(); ByteBuffer buffer = null; if (headers == null) headers = Record.EMPTY_HEADERS; try { //根据TopicPartition拿到对应的批处理队列 Deque<ProducerBatch> dq = getOrCreateDeque(tp); //同步队列,保证线程安全 synchronized (dq) { if (closed) throw new IllegalStateException("Cannot send after the producer is closed."); //把序列化后的数据放入队列,并返回结果 RecordAppendResult appendResult = tryAppend(timestamp, key, value, headers, callback, dq); if (appendResult != null) return appendResult; } // we don‘t have an in-progress record batch try to allocate a new batch byte maxUsableMagic = apiVersions.maxUsableProduceMagic(); int size = Math.max(this.batchSize, AbstractRecords.estimateSizeInBytesUpperBound(maxUsableMagic, compression, key, value, headers)); log.trace("Allocating a new {} byte message buffer for topic {} partition {}", size, tp.topic(), tp.partition()); buffer = free.allocate(size, maxTimeToBlock); synchronized (dq) { // Need to check if producer is closed again after grabbing the dequeue lock. if (closed) throw new IllegalStateException("Cannot send after the producer is closed."); RecordAppendResult appendResult = tryAppend(timestamp, key, value, headers, callback, dq); if (appendResult != null) { // Somebody else found us a batch, return the one we waited for! Hopefully this doesn‘t happen often... return appendResult; } MemoryRecordsBuilder recordsBuilder = recordsBuilder(buffer, maxUsableMagic); ProducerBatch batch = new ProducerBatch(tp, recordsBuilder, time.milliseconds()); FutureRecordMetadata future = Utils.notNull(batch.tryAppend(timestamp, key, value, headers, callback, time.milliseconds())); dq.addLast(batch); incomplete.add(batch); // Don‘t deallocate this buffer in the finally block as it‘s being used in the record batch buffer = null; return new RecordAppendResult(future, dq.size() > 1 || batch.isFull(), true); } } finally { if (buffer != null) free.deallocate(buffer); appendsInProgress.decrementAndGet(); } }

在getOrCreateDeque中我们根据TopicPartition从ConcurrentMap获取对应队列,没有的话就初始化一个。

private Deque<ProducerBatch> getOrCreateDeque(TopicPartition tp) { Deque<ProducerBatch> d = this.batches.get(tp); if (d != null) return d; d = new ArrayDeque<>(); Deque<ProducerBatch> previous = this.batches.putIfAbsent(tp, d); if (previous == null) return d; else return previous; }

更关键的是为了保证并发时的线程安全,执行 RecordAppendResult appendResult = tryAppend(timestamp, key, value, headers, callback, dq)时,Deque<ProducerBatch>必然需要同步处理。

synchronized (dq) { if (closed) throw new IllegalStateException("Cannot send after the producer is closed."); RecordAppendResult appendResult = tryAppend(timestamp, key, value, headers, callback, dq); if (appendResult != null) return appendResult; }

在这里我们可以看出,多线程高并发情况下,dq会处在比较大的资源竞争,虽然是基于内存的操作,每个线程持有锁的时间极短,但相比单线程情况,高并发情况下线程开辟较多,锁竞争和cpu上下文切换都比较频繁,会造成一定的性能损耗,产生阻塞耗时。

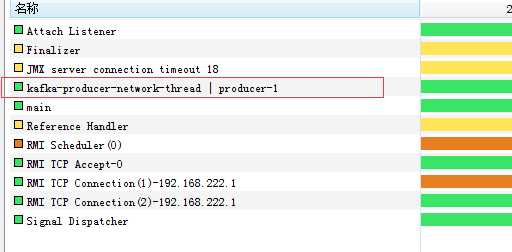

分析到这里你就会发现,其实KafkaProducer这个异步发送是建立在生产者和消费者模式上的,send的真正操作并不是直接异步发送,而是把数据放在一个中间队列中。那么既然有生产者在往内存队列中放入数据,那么必然会有一个专有的线程负责把这些数据真正发送出去。我们通过监控jvm线程信息可以看到,KafkaProducer创建后确实会启动一个守护线程用于消息的发送。

OK,我们再回到 KafkaProducer中,会看到里面有这样两个对象,Sender就是kafka发送数据的后台线程

private final Sender sender; private final Thread ioThread;

在KafkaProducer的构造函数中会启动Sender线程

this.sender = new Sender(logContext, client, this.metadata, this.accumulator, maxInflightRequests == 1, config.getInt(ProducerConfig.MAX_REQUEST_SIZE_CONFIG), acks, retries, metricsRegistry.senderMetrics, Time.SYSTEM, this.requestTimeoutMs, config.getLong(ProducerConfig.RETRY_BACKOFF_MS_CONFIG), this.transactionManager, apiVersions); String ioThreadName = NETWORK_THREAD_PREFIX + " | " + clientId; this.ioThread = new KafkaThread(ioThreadName, this.sender, true); this.ioThread.start();

进入Sender内部可以看到这个线程的作用就是一直轮询发送数据。

public void run() { log.debug("Starting Kafka producer I/O thread."); // main loop, runs until close is called while (running) { try { run(time.milliseconds()); } catch (Exception e) { log.error("Uncaught error in kafka producer I/O thread: ", e); } } log.debug("Beginning shutdown of Kafka producer I/O thread, sending remaining records."); // okay we stopped accepting requests but there may still be // requests in the accumulator or waiting for acknowledgment, // wait until these are completed. while (!forceClose && (this.accumulator.hasUndrained() || this.client.inFlightRequestCount() > 0)) { try { run(time.milliseconds()); } catch (Exception e) { log.error("Uncaught error in kafka producer I/O thread: ", e); } } if (forceClose) { // We need to fail all the incomplete batches and wake up the threads waiting on // the futures. log.debug("Aborting incomplete batches due to forced shutdown"); this.accumulator.abortIncompleteBatches(); } try { this.client.close(); } catch (Exception e) { log.error("Failed to close network client", e); } log.debug("Shutdown of Kafka producer I/O thread has completed."); } /** * Run a single iteration of sending * * @param now The current POSIX time in milliseconds */ void run(long now) { if (transactionManager != null) { try { if (transactionManager.shouldResetProducerStateAfterResolvingSequences()) // Check if the previous run expired batches which requires a reset of the producer state. transactionManager.resetProducerId(); if (!transactionManager.isTransactional()) { // this is an idempotent producer, so make sure we have a producer id maybeWaitForProducerId(); } else if (transactionManager.hasUnresolvedSequences() && !transactionManager.hasFatalError()) { transactionManager.transitionToFatalError(new KafkaException("The client hasn‘t received acknowledgment for " + "some previously sent messages and can no longer retry them. It isn‘t safe to continue.")); } else if (transactionManager.hasInFlightTransactionalRequest() || maybeSendTransactionalRequest(now)) { // as long as there are outstanding transactional requests, we simply wait for them to return client.poll(retryBackoffMs, now); return; } // do not continue sending if the transaction manager is in a failed state or if there // is no producer id (for the idempotent case). if (transactionManager.hasFatalError() || !transactionManager.hasProducerId()) { RuntimeException lastError = transactionManager.lastError(); if (lastError != null) maybeAbortBatches(lastError); client.poll(retryBackoffMs, now); return; } else if (transactionManager.hasAbortableError()) { accumulator.abortUndrainedBatches(transactionManager.lastError()); } } catch (AuthenticationException e) { // This is already logged as error, but propagated here to perform any clean ups. log.trace("Authentication exception while processing transactional request: {}", e); transactionManager.authenticationFailed(e); } } long pollTimeout = sendProducerData(now); client.poll(pollTimeout, now); }

通过上面的分析我们可以看出producer.send操作本身其实是个基于内存的存储操作,耗时几乎可以忽略不计,但由于高并发情况下,线程同步会有一定的性能损耗,当然这个损耗在一般的应用场景下几乎是可以忽略不计的,但如果是数据量比较大,高并发的场景下会比较明显。

针对上面的问题分析,这里说下我个人的一些总结:

1、首先避免多线程操作producer发送数据,你可以采用生产者消费者模式把producer.send从你的多线程操作中解耦出来,维护一个你要发送的消息队列,单独开辟一个线程操作;

2、可能有的小伙伴会问,那么多创建几个producer的实例或者维护一个producer池可以吗,我原本也是这个想法,只是在测试中发现效果也不是很理想,我估计是由于创建producer实例过多,导致线程数量也跟着增加,本身的业务线程再加上kafka的线程,线程上下文切换比较频繁,CPU资源压力比较大,效率也不如单线程操作;

3、这个问题其实真是针对API操作来讲的,send操作并不是真正的数据发送,真正的数据发送由守护线程进行;按照kafka本身的设计思想,如果操作本身就成为了你性能的瓶颈,你应该考虑的是集群部署,负载均衡;

4、无锁才是真正的高性能;

以上是关于关于高并发下kafka producer send异步发送耗时问题的分析的主要内容,如果未能解决你的问题,请参考以下文章