Installing a k8s Cluster

Posted guarderming


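I. Introduction to K8s

1. K8s overview

Website: https://www.kubernetes.org.cn/k8s

Kubernetes is an open-source platform for automating the deployment, scaling, and operation of container clusters.

(1) With Kubernetes you can respond to customer demand quickly and efficiently:

Scale applications dynamically.

Roll out new features seamlessly.

Optimize hardware usage by consuming only the resources you need.

(2) Kubernetes is:

Lean: lightweight, simple, easy to get started with

Portable: public, private, hybrid, multi-cloud

Extensible: modular, pluggable, hookable, composable

Self-healing: auto-placement, auto-restart, auto-replication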

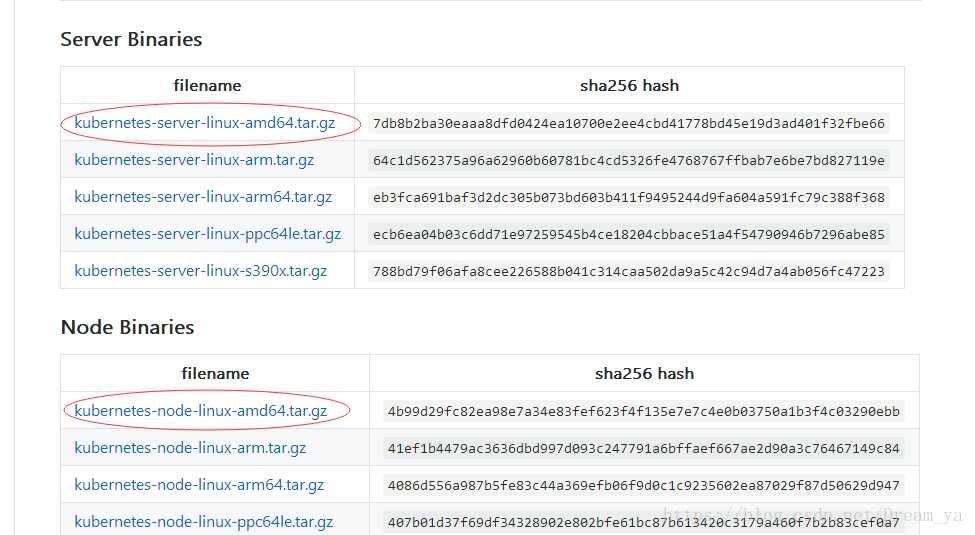

2. Downloads

Download: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.8.md#v1813

Baidu Netdisk mirror: https://pan.baidu.com/s/1rXo9XXi6k8GuwT7-kFKf9w password: 6tmc

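II. Lab Environment

SELinux and iptables are turned off on all hosts.

1. Installation notes

Note: the comments in the configuration files below only explain what each option does. Remove them when installing, otherwise the services report errors. Configuration files are also not compatible across versions.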

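| Hostname | IP | OS version | Installed services | Role |
|---|---|---|---|---|
| server1 | 10.10.10.1 | rhel7.3 | docker, etcd, api-server, scheduler, controller-manager, kubelet, flannel, docker-compose, harbor | Cluster Master: service environment control modules, API gateway control modules, platform management console |
| server2 | 10.10.10.2 | rhel7.3 | docker, etcd, kubelet, proxy, flannel | Service node: deploys and runs containerized services |
| server3 | 10.10.10.3 | rhel7.3 | docker, etcd, kubelet, proxy, flannel | Service node: deploys and runs containerized services |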

[[email protected] mnt]# cat /etc/hosts

10.10.10.1 server1

10.10.10.2 server2

10.10.10.3 server3

2. Turn off swap (all three hosts):

swapoff -a
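swapoff -a only lasts until the next reboot. The sketch below is one way to make the change persistent by commenting out active swap entries in an fstab-style file. The function name disable_swap_entries is hypothetical and not part of the original setup; it assumes a sed that accepts -E and -i with a suffix (GNU sed on RHEL 7 does).

```shell
# Comment out every active (not already commented) swap entry in an
# fstab-style file. A backup copy is kept next to it as <file>.bak.
disable_swap_entries() {
  sed -i.bak -E '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Assumed usage on each host, as root:
#   swapoff -a && disable_swap_entries /etc/fstab
```

Try the function on a copy of /etc/fstab first and review the result before touching the real file.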

The base environment is now ready. Next, install the k8s components.

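III. Install Docker (all three hosts)

k8s runs its containers on Docker. The Docker version used here is docker-ce-18.03.1.ce-1.el7.

1. If Docker was installed previously, remove it first:

yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-selinux \
    docker-engine-selinux \
    docker-engine

Note: if your system is not 7.3 (for example 7.0), switch the yum repository to a 7.3 repository. Otherwise the installation fails because the required dependency versions are newer than what the older release provides.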

2. Install

[[email protected] mnt]# ls /mnt

container-selinux-2.21-1.el7.noarch.rpm

libsemanage-python-2.5-8.el7.x86_64.rpm

docker-ce-18.03.1.ce-1.el7.centos.x86_64.rpm

pigz-2.3.4-1.el7.x86_64.rpm

docker-engine-1.8.2-1.el7.rpm

policycoreutils-2.5-17.1.el7.x86_64.rpm

libsemanage-2.5-8.el7.x86_64.rpm

policycoreutils-python-2.5-17.1.el7.x86_64.rpm

[[email protected] mnt]# yum install -y /mnt/*

[[email protected] mnt]# systemctl enable docker

[[email protected] mnt]# systemctl start docker

[[email protected] mnt]# systemctl status docker ### Docker also creates the docker0 interface with address 172.17.0.1/16

3. Verify

[[email protected] mnt]# docker version

Client:

Version: 18.03.1-ce

API version: 1.37

Go version: go1.9.5

Git commit: 9ee9f40

Built: Thu Apr 26 07:20:16 2018

OS/Arch: linux/amd64

Experimental: false

Orchestrator: swarm

Server:

Engine:

Version: 18.03.1-ce

API version: 1.37 (minimum version 1.12)

Go version: go1.9.5

Git commit: 9ee9f40

Built: Thu Apr 26 07:23:58 2018

OS/Arch: linux/amd64

Experimental: false

4. Changing the docker0 address (optional)

[[email protected] ~]# vim /etc/docker/daemon.json

{

"bip": "172.17.0.1/24"

}

[[email protected] ~]# systemctl restart docker

[[email protected] ~]# ip addr show docker0

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:23:2d:56:4b brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/24 brd 172.17.0.255 scope global docker0

valid_lft forever preferred_lft forever

IV. Install etcd (all three hosts)

1. Create the installation directories

[[email protected] mnt]# mkdir -p /usr/local/kubernetes/{bin,config}

[[email protected] mnt]# vim /etc/profile ### add the line below so the binaries can be run directly

export PATH=$PATH:/usr/local/kubernetes/bin

[[email protected] mnt]# . /etc/profile ### or: source /etc/profile

[[email protected] mnt]# mkdir -p /var/lib/etcd/ ### the WorkingDirectory used by the unit file (all three hosts)

2. Unpack etcd and move the binaries into place

[[email protected] ~]# tar xf /root/etcd-v3.1.7-linux-amd64.tar.gz

[[email protected] ~]# mv /root/etcd-v3.1.7-linux-amd64/etcd* /usr/local/kubernetes/bin/

3. etcd systemd unit file

[[email protected] ~]# vim /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd
EnvironmentFile=-/usr/local/kubernetes/config/etcd.conf
ExecStart=/usr/local/kubernetes/bin/etcd \
  --name=${ETCD_NAME} \
  --data-dir=${ETCD_DATA_DIR} \
  --listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \
  --listen-client-urls=${ETCD_LISTEN_CLIENT_URLS} \
  --advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \
  --initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
  --initial-cluster=${ETCD_INITIAL_CLUSTER} \
  --initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \
  --initial-cluster-state=${ETCD_INITIAL_CLUSTER_STATE}

[Install]

WantedBy=multi-user.target

4. etcd configuration file

[[email protected] ~]# cat /usr/local/kubernetes/config/etcd.conf

#[member]
ETCD_NAME="etcd01" ### set to this host's member name: etcd01, etcd02 or etcd03
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.10.10.1:2380" ### set to this host's IP
ETCD_INITIAL_CLUSTER="etcd01=http://10.10.10.1:2380,etcd02=http://10.10.10.2:2380,etcd03=http://10.10.10.3:2380" ### replace with the IPs of your cluster
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="http://10.10.10.1:2379" ### set to this host's IP

[[email protected] ~]# systemctl enable etcd

[[email protected] ~]# systemctl start etcd

Note: start etcd on all three hosts at about the same time. A member started on its own cannot form a quorum, so its start fails.
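Only the member name and the host IP differ between the three etcd.conf files, so the file can be generated instead of edited by hand. The following is a minimal sketch under that assumption; render_etcd_conf is a hypothetical helper name, and the cluster member list is hard-coded to the three addresses used in this guide.

```shell
# Print an etcd.conf for one member.
#   $1 = member name (etcd01, etcd02, etcd03)
#   $2 = IP address of that host
render_etcd_conf() {
  name=$1
  ip=$2
  cat <<EOF
#[member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://${ip}:2380"
ETCD_INITIAL_CLUSTER="etcd01=http://10.10.10.1:2380,etcd02=http://10.10.10.2:2380,etcd03=http://10.10.10.3:2380"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="http://${ip}:2379"
EOF
}

# Assumed usage on server2:
#   render_etcd_conf etcd02 10.10.10.2 > /usr/local/kubernetes/config/etcd.conf
```

The generated file contains no trailing comments on value lines, which matches the earlier advice to strip explanatory comments before installing.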

5. Once all three hosts are installed, test the cluster:

[[email protected] ~]# etcdctl cluster-health

member 2cc6b104fe5377ef is healthy: got healthy result from http://10.10.10.3:2379

member 74565e08b84745a6 is healthy: got healthy result from http://10.10.10.2:2379

member af08b45e1ab8a099 is healthy: got healthy result from http://10.10.10.1:2379

cluster is healthy

[[email protected] kubernetes]# etcdctl member list ### the cluster elects its leader by itself

2cc6b104fe5377ef: name=etcd03 peerURLs=http://10.10.10.3:2380 clientURLs=http://10.10.10.3:2379 isLeader=true

74565e08b84745a6: name=etcd02 peerURLs=http://10.10.10.2:2380 clientURLs=http://10.10.10.2:2379 isLeader=false

af08b45e1ab8a099: name=etcd01 peerURLs=http://10.10.10.1:2380 clientURLs=http://10.10.10.1:2379 isLeader=false
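For scripted checks, the cluster-health output shown above can be reduced to a count of healthy members. This is a small sketch that assumes the output format printed in this section; healthy_members is a hypothetical helper, not an etcdctl feature.

```shell
# Read `etcdctl cluster-health` output on stdin and print how many
# members are reported healthy. The final "cluster is healthy" line
# is not counted because it does not start with "member".
healthy_members() {
  grep -c '^member .* is healthy' || true
}

# Assumed usage:
#   [ "$(etcdctl cluster-health | healthy_members)" -eq 3 ] && echo "all members healthy"
```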

V. Install the Master Components

1. Move the binaries into the bin directory

[[email protected] ~]# tar xf /root/kubernetes-server-linux-amd64.tar.gz

[[email protected] kubernetes]# mv /root/kubernetes/server/bin/{kube-apiserver,kube-scheduler,kube-controller-manager,kubectl,kubelet} /usr/local/kubernetes/bin

2. Install the apiserver

(1) apiserver configuration file

[[email protected] ~]# vim /usr/local/kubernetes/config/kube-apiserver

# Log to standard error
KUBE_LOGTOSTDERR="--logtostderr=true"

# Log level
KUBE_LOG_LEVEL="--v=4"

# etcd service address
KUBE_ETCD_SERVERS="--etcd-servers=http://10.10.10.1:2379"

# Address the API service listens on
KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

# Port the API service listens on
KUBE_API_PORT="--insecure-port=8080"

# API service address advertised to cluster members
KUBE_ADVERTISE_ADDR="--advertise-address=10.10.10.1"

# Allow containers to request privileged mode (default: false)
KUBE_ALLOW_PRIV="--allow-privileged=false"

# IP range for cluster services. Choose it freely, but the DNS address configured later for kubelet (on the service nodes) must lie inside this range
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.0.0.0/24"
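The constraint in the last comment, that the kubelet DNS address (10.0.0.2 later in this guide) must fall inside --service-cluster-ip-range, can be checked with plain shell arithmetic. The helpers ip_to_int and ip_in_cidr below are hypothetical names introduced for illustration; the sketch handles IPv4 only.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed when address $1 lies inside CIDR block $2 (e.g. 10.0.0.0/24).
ip_in_cidr() {
  net=${2%/*}
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# Assumed usage:
#   ip_in_cidr 10.0.0.2 10.0.0.0/24 && echo "cluster DNS address is inside the service range"
```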

(2) apiserver systemd unit file

[[email protected] ~]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/usr/local/kubernetes/config/kube-apiserver

ExecStart=/usr/local/kubernetes/bin/kube-apiserver \
  ${KUBE_LOGTOSTDERR} \
  ${KUBE_LOG_LEVEL} \
  ${KUBE_ETCD_SERVERS} \
  ${KUBE_API_ADDRESS} \
  ${KUBE_API_PORT} \
  ${KUBE_ADVERTISE_ADDR} \
  ${KUBE_ALLOW_PRIV} \
  ${KUBE_SERVICE_ADDRESSES}

Restart=on-failure

[Install]

WantedBy=multi-user.target

[[email protected] ~]# systemctl enable kube-apiserver

[[email protected] ~]# systemctl start kube-apiserver

3. Install the scheduler

(1) scheduler configuration file

[[email protected] ~]# vim /usr/local/kubernetes/config/kube-scheduler

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=4"

KUBE_MASTER="--master=10.10.10.1:8080"

KUBE_LEADER_ELECT="--leader-elect"

(2) scheduler systemd unit file

[[email protected] ~]# vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/usr/local/kubernetes/config/kube-scheduler

ExecStart=/usr/local/kubernetes/bin/kube-scheduler \
  ${KUBE_LOGTOSTDERR} \
  ${KUBE_LOG_LEVEL} \
  ${KUBE_MASTER} \
  ${KUBE_LEADER_ELECT}

Restart=on-failure

[Install]

WantedBy=multi-user.target

[[email protected] ~]# systemctl enable kube-scheduler

[[email protected] ~]# systemctl start kube-scheduler

4. Install the controller-manager

(1) controller-manager configuration file

[[email protected] ~]# vim /usr/local/kubernetes/config/kube-controller-manager

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=4"

KUBE_MASTER="--master=10.10.10.1:8080"

(2) controller-manager systemd unit file

[[email protected] ~]# vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/usr/local/kubernetes/config/kube-controller-manager

ExecStart=/usr/local/kubernetes/bin/kube-controller-manager \
  ${KUBE_LOGTOSTDERR} \
  ${KUBE_LOG_LEVEL} \
  ${KUBE_MASTER} \
  ${KUBE_LEADER_ELECT}

Restart=on-failure

[Install]

WantedBy=multi-user.target

[[email protected] ~]# systemctl enable kube-controller-manager

[[email protected] ~]# systemctl start kube-controller-manager

5. Install kubelet

With kubelet installed on the Master, kubectl get nodes also lists the Master. This step is optional.

(1) kubeconfig file

[[email protected] ~]# vim /usr/local/kubernetes/config/kubelet.kubeconfig

apiVersion: v1
kind: Config
clusters:
- cluster:
    server: http://10.10.10.1:8080  # Master IP, i.e. this host's own IP
  name: local
contexts:
- context:
    cluster: local
  name: local
current-context: local

(2) kubelet configuration file

[[email protected] ~]# vim /usr/local/kubernetes/config/kubelet

# Log to standard error
KUBE_LOGTOSTDERR="--logtostderr=true"

# Log level
KUBE_LOG_LEVEL="--v=4"

# Address the kubelet listens on
NODE_ADDRESS="--address=10.10.10.1"

# kubelet port
NODE_PORT="--port=10250"

# Custom node name
NODE_HOSTNAME="--hostname-override=10.10.10.1"

# Path to the kubeconfig used to reach the API server
KUBELET_KUBECONFIG="--kubeconfig=/usr/local/kubernetes/config/kubelet.kubeconfig"

# Allow containers to request privileged mode (default: false)
KUBE_ALLOW_PRIV="--allow-privileged=false"

# Cluster DNS address and domain
KUBELET_DNS_IP="--cluster-dns=10.0.0.2"
KUBELET_DNS_DOMAIN="--cluster-domain=cluster.local"

# Do not refuse to start when swap is enabled
KUBELET_SWAP="--fail-swap-on=false"

(3) kubelet systemd unit file

[[email protected] ~]# vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=-/usr/local/kubernetes/config/kubelet

ExecStart=/usr/local/kubernetes/bin/kubelet \
  ${KUBE_LOGTOSTDERR} \
  ${KUBE_LOG_LEVEL} \
  ${NODE_ADDRESS} \
  ${NODE_PORT} \
  ${NODE_HOSTNAME} \
  ${KUBELET_KUBECONFIG} \
  ${KUBE_ALLOW_PRIV} \
  ${KUBELET_DNS_IP} \
  ${KUBELET_DNS_DOMAIN} \
  ${KUBELET_SWAP}

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

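(4) Start the service and enable it at boot:

[[email protected] ~]# swapoff -a ### turn swap off before starting

[[email protected] ~]# systemctl enable kubelet

[[email protected] ~]# systemctl start kubelet

Note: start etcd first, then the apiserver. The remaining services can be started in any order.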
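The start order can be enforced in a script by polling each dependency before starting the next service. The sketch below uses a hypothetical helper, wait_until, that retries an arbitrary command. The probes in the usage comments are assumptions: that etcdctl cluster-health returns non-zero while the cluster is unhealthy, and that the apiserver answers /healthz on the insecure port configured earlier.

```shell
# Retry a command until it succeeds.
#   $1 = maximum number of attempts
#   $2 = seconds to sleep between attempts
#   remaining arguments = the command to run
wait_until() {
  tries=$1
  delay=$2
  shift 2
  i=0
  while [ "$i" -lt "$tries" ]; do
    "$@" >/dev/null 2>&1 && return 0
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Assumed usage on the Master:
#   wait_until 30 2 etcdctl cluster-health && systemctl start kube-apiserver
#   wait_until 30 2 curl -fs http://10.10.10.1:8080/healthz &&
#     systemctl start kube-scheduler kube-controller-manager kubelet
```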

VI. Install the Nodes (server2 and server3)

1. Move the binaries into the bin directory

[[email protected] ~]# tar xf /root/kubernetes-node-linux-amd64.tar.gz

[[email protected] ~]# mv /root/kubernetes/node/bin/{kubelet,kube-proxy} /usr/local/kubernetes/bin/

2. Install kubelet

(1) kubeconfig file

Note: kubelet uses the kubeconfig file to connect to the apiserver on the Master.

[[email protected] ~]# vim /usr/local/kubernetes/config/kubelet.kubeconfig

apiVersion: v1
kind: Config
clusters:
- cluster:
    server: http://10.10.10.1:8080
  name: local
contexts:
- context:
    cluster: local
  name: local
current-context: local

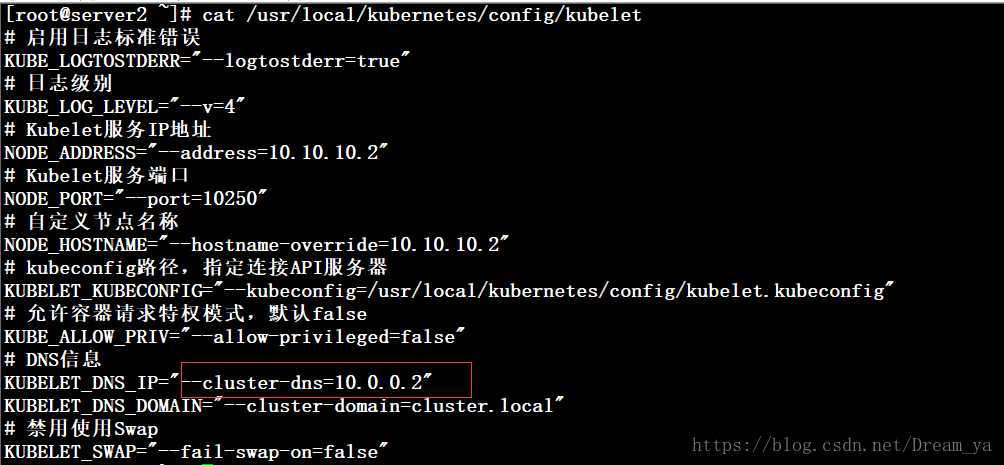

(2) kubelet configuration file

[[email protected] ~]# cat /usr/local/kubernetes/config/kubelet

# Log to standard error
KUBE_LOGTOSTDERR="--logtostderr=true"

# Log level
KUBE_LOG_LEVEL="--v=4"

# Address the kubelet listens on (this host's IP)
NODE_ADDRESS="--address=10.10.10.2"

# kubelet port
NODE_PORT="--port=10250"

# Custom node name (this host's IP)
NODE_HOSTNAME="--hostname-override=10.10.10.2"

# Path to the kubeconfig used to reach the API server
KUBELET_KUBECONFIG="--kubeconfig=/usr/local/kubernetes/config/kubelet.kubeconfig"

# Allow containers to request privileged mode (default: false)
KUBE_ALLOW_PRIV="--allow-privileged=false"

# DNS settings; the address must lie inside the service IP range configured on the apiserver
KUBELET_DNS_IP="--cluster-dns=10.0.0.2"
KUBELET_DNS_DOMAIN="--cluster-domain=cluster.local"

# Do not refuse to start when swap is enabled
KUBELET_SWAP="--fail-swap-on=false"

(3) kubelet systemd unit file

[[email protected] ~]# vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=-/usr/local/kubernetes/config/kubelet

ExecStart=/usr/local/kubernetes/bin/kubelet \
  ${KUBE_LOGTOSTDERR} \
  ${KUBE_LOG_LEVEL} \
  ${NODE_ADDRESS} \
  ${NODE_PORT} \
  ${NODE_HOSTNAME} \
  ${KUBELET_KUBECONFIG} \
  ${KUBE_ALLOW_PRIV} \
  ${KUBELET_DNS_IP} \
  ${KUBELET_DNS_DOMAIN} \
  ${KUBELET_SWAP}

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

(4) Start the service and enable it at boot:

[[email protected] ~]# swapoff -a ### turn swap off before starting

[[email protected] ~]# systemctl enable kubelet

[[email protected] ~]# systemctl start kubelet

3. Install kube-proxy

(1) kube-proxy configuration file

[[email protected] ~]# cat /usr/local/kubernetes/config/kube-proxy

# Log to standard error
KUBE_LOGTOSTDERR="--logtostderr=true"

# Log level
KUBE_LOG_LEVEL="--v=4"

# Custom node name (this host's IP)
NODE_HOSTNAME="--hostname-override=10.10.10.2"

# API service address (Master IP)
KUBE_MASTER="--master=http://10.10.10.1:8080"

(2) kube-proxy systemd unit file

[[email protected] ~]# cat /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=-/usr/local/kubernetes/config/kube-proxy

ExecStart=/usr/local/kubernetes/bin/kube-proxy \
  ${KUBE_LOGTOSTDERR} \
  ${KUBE_LOG_LEVEL} \
  ${NODE_HOSTNAME} \
  ${KUBE_MASTER}

Restart=on-failure

[Install]

WantedBy=multi-user.target

[[email protected] ~]# systemctl enable kube-proxy

[[email protected] ~]# systemctl restart kube-proxy

VII. Install flannel (all three hosts)

1. Move the binaries into the bin directory

[[email protected] ~]# tar xf flannel-v0.7.1-linux-amd64.tar.gz

[[email protected] ~]# mv /root/{flanneld,mk-docker-opts.sh} /usr/local/kubernetes/bin/

2. flannel configuration file

[[email protected] kubernetes]# vim /usr/local/kubernetes/config/flanneld

# Flanneld configuration options

# etcd url location. Point this to the server where etcd runs (this host's IP)
FLANNEL_ETCD="http://10.10.10.1:2379"

# etcd config key. This is the configuration key that flannel queries
# for address range assignment (the etcd key directory)
FLANNEL_ETCD_KEY="/atomic.io/network"

# Any additional options that you want to pass (set --iface to your own interface name)
FLANNEL_OPTIONS="--iface=eth0"

3. flannel systemd unit file

[[email protected] kubernetes]# vim /usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network.target

After=network-online.target

Wants=network-online.target

After=etcd.service

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/usr/local/kubernetes/config/flanneld

EnvironmentFile=-/etc/sysconfig/docker-network

ExecStart=/usr/local/kubernetes/bin/flanneld -etcd-endpoints=${FLANNEL_ETCD} -etcd-prefix=${FLANNEL_ETCD_KEY} $FLANNEL_OPTIONS

ExecStartPost=/usr/local/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker

Restart=on-failure

[Install]

WantedBy=multi-user.target

RequiredBy=docker.service

4. Set the etcd key

Note: set this on one host only; etcd replicates it to the other members.

[[email protected] ~]# etcdctl mkdir /atomic.io/network

### the network below must be in the same range as Docker's own address

[[email protected] ~]# etcdctl mk /atomic.io/network/config '{ "Network": "172.17.0.0/16", "SubnetLen": 24, "Backend": { "Type": "vxlan" } }'

{ "Network": "172.17.0.0/16", "SubnetLen": 24, "Backend": { "Type": "vxlan" } }
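The flannel configuration value is JSON and contains double quotes of its own, so it has to reach etcdctl as a single, correctly quoted argument. One way to avoid quoting mistakes is to build the string with printf. The helper name flannel_config is hypothetical; the three fields are the ones used in this section.

```shell
# Print the flannel network configuration as one line of JSON.
#   $1 = overlay network in CIDR form
#   $2 = per-host subnet length
#   $3 = backend type
flannel_config() {
  printf '{ "Network": "%s", "SubnetLen": %d, "Backend": { "Type": "%s" } }\n' "$1" "$2" "$3"
}

# Assumed usage on one etcd member:
#   etcdctl mk /atomic.io/network/config "$(flannel_config 172.17.0.0/16 24 vxlan)"
```

Wrapping the command substitution in double quotes keeps the JSON intact as a single argument.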

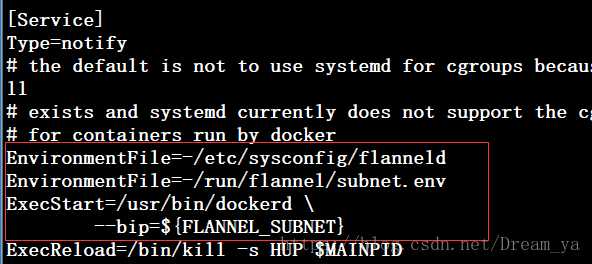

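5. Configure Docker

Docker has to use the subnet that flanneld assigns. In the [Service] section of its unit file, add EnvironmentFile=-/etc/sysconfig/flanneld and EnvironmentFile=-/run/flannel/subnet.env, and append --bip=${FLANNEL_SUBNET} to the dockerd command line in ExecStart.

[[email protected] ~]# vim /usr/lib/systemd/system/docker.service

6. Start flannel and Docker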

[[email protected] ~]# systemctl enable flanneld.service

[[email protected] ~]# systemctl restart flanneld.service

[[email protected] ~]# systemctl daemon-reload

[[email protected] ~]# systemctl restart docker.service

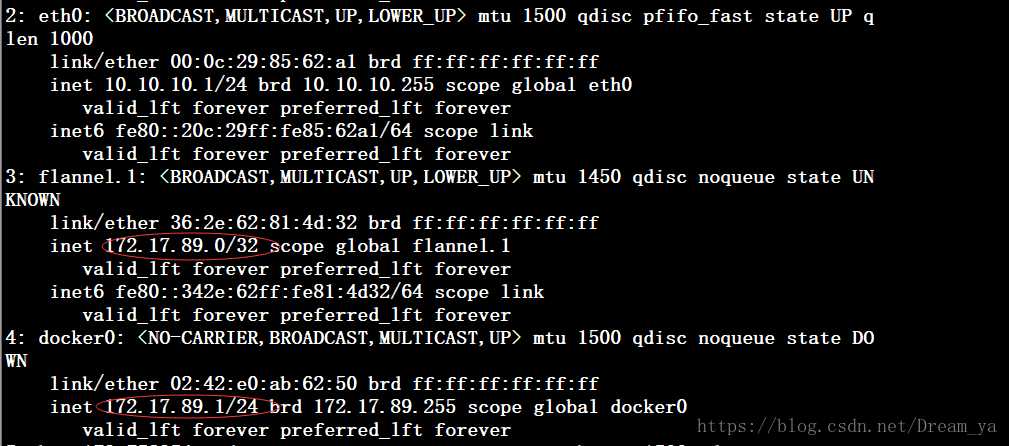

7. Test flannel

ip addr ### flannel is working when the flannel interface address and the docker0 address are in the same subnet
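The visual check with ip addr can also be scripted. The sketch below assumes that flanneld writes a FLANNEL_SUBNET=... line to /run/flannel/subnet.env and that Docker was started with --bip=${FLANNEL_SUBNET}, so docker0 carries exactly that address. Both function names are hypothetical.

```shell
# Print the FLANNEL_SUBNET value from a subnet.env-style file.
flannel_subnet() {
  sed -n 's/^FLANNEL_SUBNET=//p' "$1"
}

# Succeed when the given docker0 address ($2, in CIDR form) equals the
# bridge address flannel assigned in the env file ($1).
docker_uses_flannel_subnet() {
  [ "$(flannel_subnet "$1")" = "$2" ]
}

# Assumed usage on each host:
#   docker_uses_flannel_subnet /run/flannel/subnet.env \
#     "$(ip -o -4 addr show docker0 | awk '{print $4}')" && echo "docker0 follows flannel"
```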

8. Test the cluster

If kubelet is not installed on the Master, kubectl get nodes does not list the Master.

[[email protected] ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.10.10.1 Ready <none> 1d v1.8.13

10.10.10.2 Ready <none> 1d v1.8.13

10.10.10.3 Ready <none> 1d v1.8.13

[[email protected] ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health": "true"}

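A simple K8s cluster is now installed. The following sections cover installing Harbor and the add-ons.

VIII. Install Harbor

Here I use Harbor to manage my image registry; I will not introduce it in detail.

Note: if you want to use local images you can skip Harbor, but you still need the busybox image, and you must set imagePullPolicy: Never or imagePullPolicy: IfNotPresent in the YAML file at the same level as image. For an image tagged latest (or with no tag) the default policy is Always, which pulls the image over the network.

1. Install docker-compose

(1) Download

https://github.com/docker/compose/releases/ ### official download page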

[[email protected] ~]# wget https://github.com/docker/compose/releases/download/1.22.0/docker-compose-Linux-x86_64

(2) Install

[[email protected] ~]# cp docker-compose-Linux-x86_64 /usr/local/kubernetes/bin/docker-compose

[[email protected] ~]# chmod +x /usr/local/kubernetes/bin/docker-compose

(3) Check the version

[[email protected] ~]# docker-compose -version

docker-compose version 1.22.0, build f46880fe

2. Install Harbor

(1) Download

Official installation guide: https://github.com/vmware/harbor/blob/master/docs/installation_guide.md

https://github.com/vmware/harbor/releases#install ### download page

[[email protected] ~]# wget http://harbor.orientsoft.cn/harbor-v1.5.0/harbor-offline-installer-v1.5.0.tgz

(2) Unpack the tarball

[[email protected] ~]# tar xf harbor-offline-installer-v1.5.0.tgz -C /usr/local/kubernetes/

(3) Configure harbor.cfg

[[email protected] ~]# grep -v "^#" /usr/local/kubernetes/harbor/harbor.cfg

_version = 1.5.0

### set hostname to this host's IP

hostname = 10.10.10.1

ui_url_protocol = http

max_job_workers = 50

customize_crt = on

ssl_cert = /data/cert/server.crt

ssl_cert_key = /data/cert/server.key

secretkey_path = /data

admiral_url = NA

log_rotate_count = 50

log_rotate_size = 200M

http_proxy =

https_proxy =

no_proxy = 127.0.0.1,localhost,ui

email_identity =

email_server = smtp.mydomain.com

email_server_port = 25

email_username = [email protected]

email_password = abc

email_from = admin <[email protected]>

email_ssl = false

email_insecure = false

harbor_admin_password = Harbor12345

auth_mode = db_auth

ldap_url = ldaps://ldap.mydomain.com

ldap_basedn = ou=people,dc=mydomain,dc=com

ldap_uid = uid

ldap_scope = 2

ldap_timeout = 5

ldap_verify_cert = true

ldap_group_basedn = ou=group,dc=mydomain,dc=com

ldap_group_filter = objectclass=group

ldap_group_gid = cn

ldap_group_scope = 2

self_registration = on

token_expiration = 30

project_creation_restriction = everyone

db_host = mysql

db_password = root123

db_port = 3306

db_user = root

redis_url =

clair_db_host = postgres

clair_db_password = password

clair_db_port = 5432

clair_db_username = postgres

clair_db = postgres

uaa_endpoint = uaa.mydomain.org

uaa_clientid = id

uaa_clientsecret = secret

uaa_verify_cert = true

uaa_ca_cert = /path/to/ca.pem

registry_storage_provider_name = filesystem

registry_storage_provider_config =

(4) Install

[[email protected] ~]# cd /usr/local/kubernetes/harbor/ ### you must run the installer from this directory; logs go to /var/log/harbor/

[[email protected] harbor]# ./prepare

[[email protected] harbor]# ./install.sh

(5) Check the created containers

[[email protected] harbor]# docker ps ### every container should show status Up

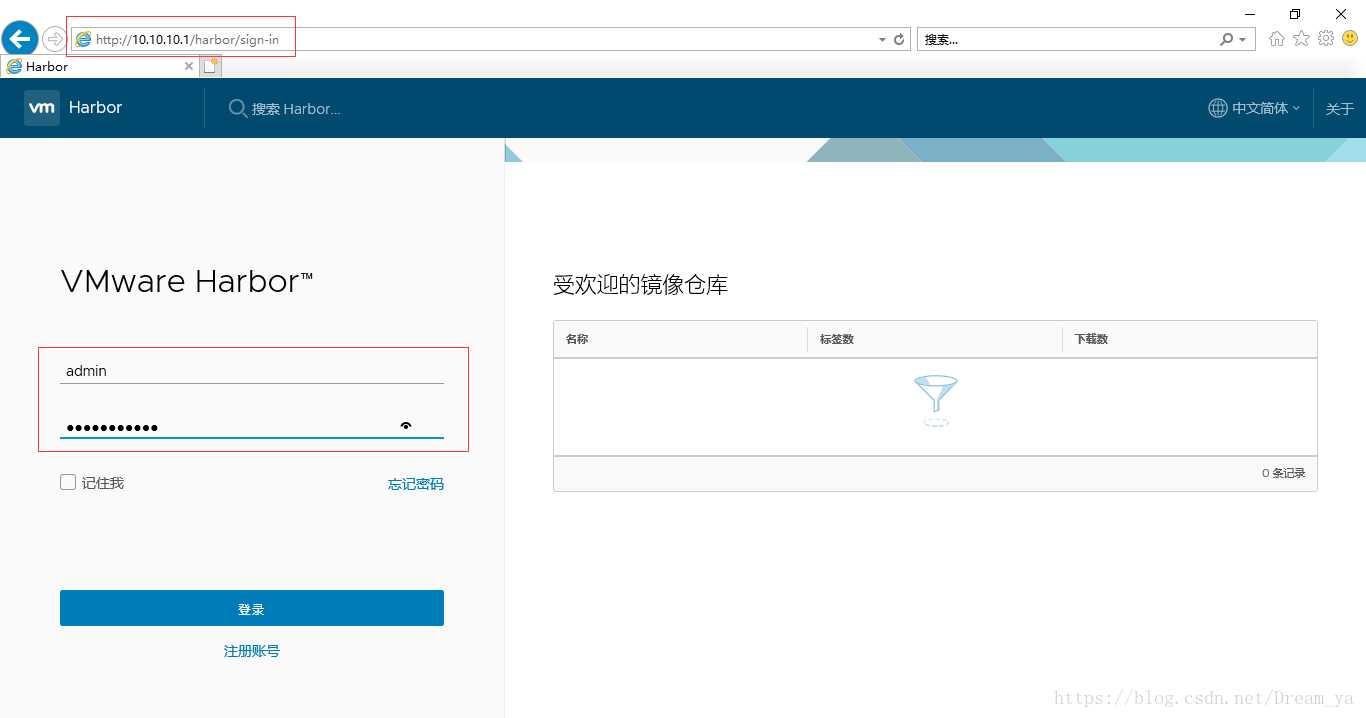

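You can now also log in from a browser at http://10.10.10.1 (the Master IP). Default account: admin, default password: Harbor12345.

(6) Allow the insecure (HTTP) registry

<1> server1 (Master)

[[email protected] harbor]# vim /etc/sysconfig/docker

OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false --insecure-registry=10.10.10.1'

<2> All three hosts (if /etc/docker/daemon.json already exists, for example with the bip setting from section III, merge both keys into the same JSON object)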

[[email protected] ~]# vim /etc/docker/daemon.json

{

"insecure-registries": [

"10.10.10.1"

]

}

(7) Test

<1> Log in from the shell

[[email protected] ~]# docker login 10.10.10.1 ### account: admin, password: Harbor12345

Username: admin

Password:

Login Succeeded

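After a successful login the Harbor registry is ready to use.

<2> Log in from a browser

(8) Login error:

<1> Error:

[[email protected] ~]# docker login 10.10.10.1

Error response from daemon: Get http://10.10.10.1/v2/: unauthorized: authentication required

<2> Fix:

Add the insecure-registry settings described in step (6), or check the password: a mistyped password produces the same error.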

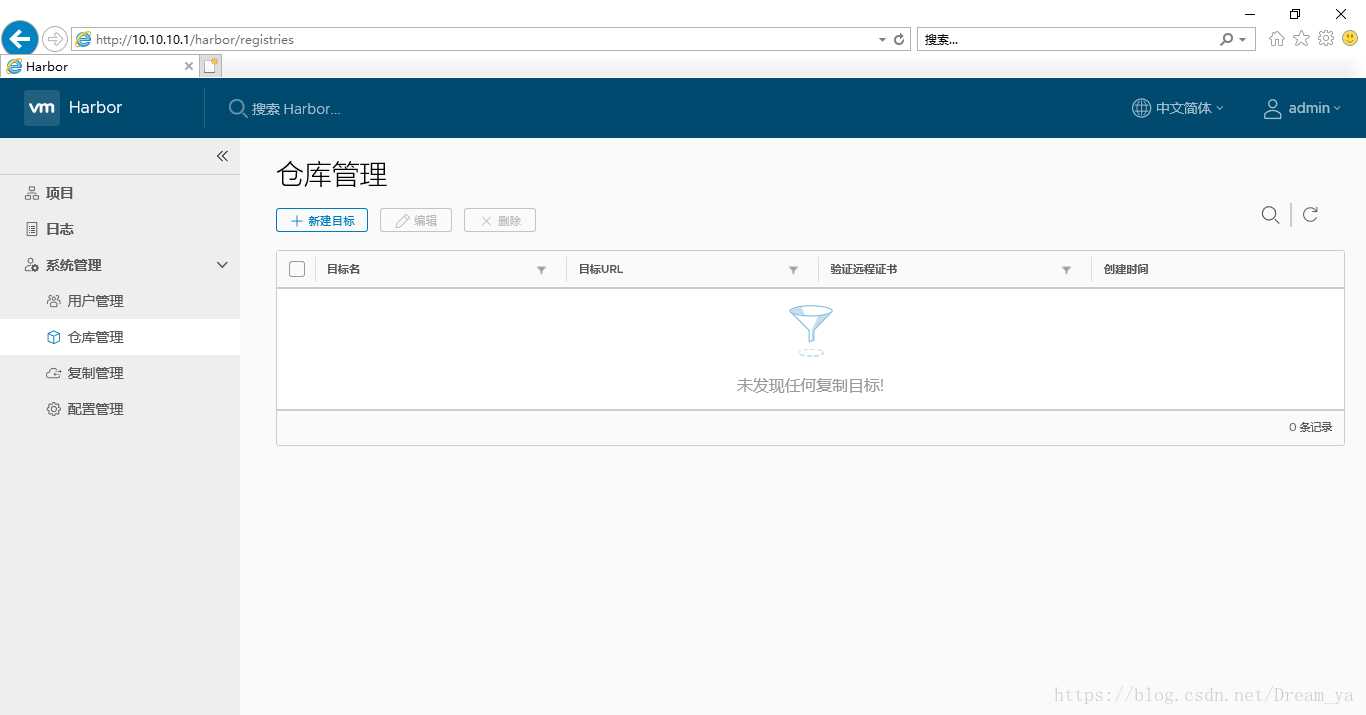

(9)harbor基本操作

<1> 下线

# cd /usr/local/kubernetes/harbor/

# docker-compose down -v 或 docker-compose stop

<2> 修改配置

修改harbor.cfg和docker-compose.yml

<3> 重新部署上线

# cd /usr/local/kubernetes/harbor/

# ./prepare

# docker-compose up -d 或 docker-compose start

3、harbor使用

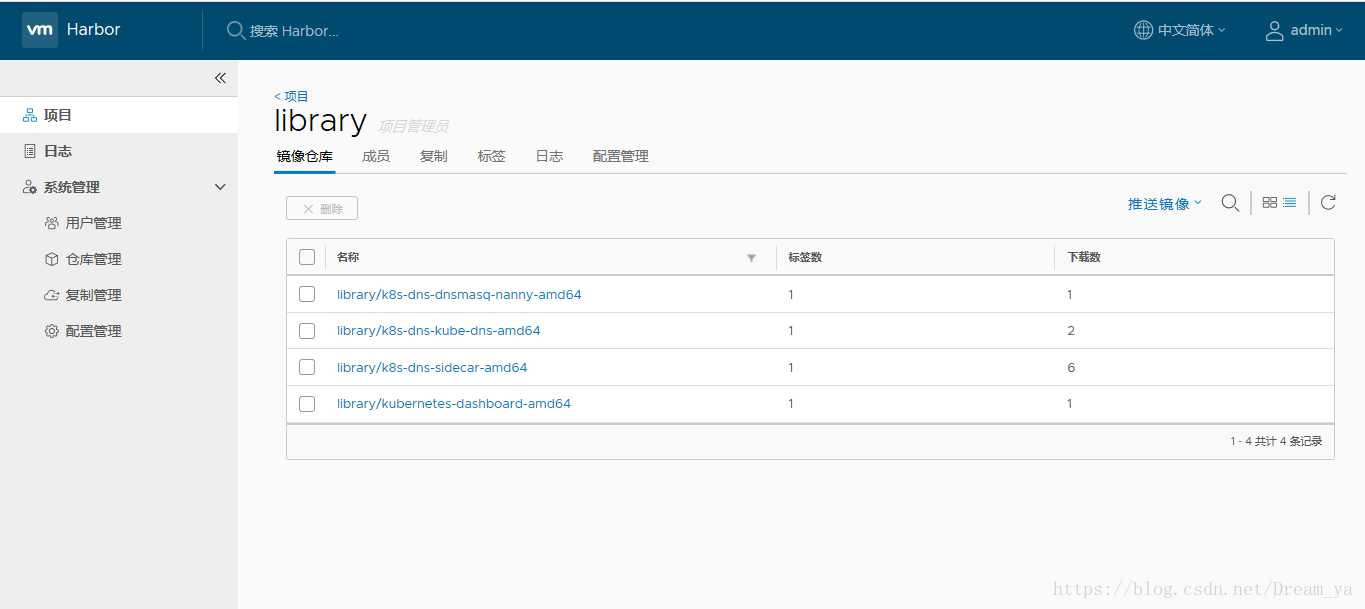

用浏览器登陆你可以发现其默认是有个项目library,也可以自己创建,我们来使用此项目!!!

(1)对镜像进行处理

###打包及删除

docker tag gcr.io/google_containers/kubernetes-dashboard-amd64:v1.8.3 10.10.10.1/library/kubernetes-dashboard-amd64:v1.8.3

docker rmi gcr.io/google_containers/kubernetes-dashboard-amd64:v1.8.3

docker tag gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.7 10.10.10.1/library/k8s-dns-sidecar-amd64:1.14.7

docker rmi gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.7

docker tag gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.7 10.10.10.1/library/k8s-dns-kube-dns-amd64:1.14.7

docker rmi gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.7

docker tag gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.7 10.10.10.1/library/k8s-dns-dnsmasq-nanny-amd64:1.14.7

docker rmi gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.7

### push to Harbor

docker push 10.10.10.1/library/kubernetes-dashboard-amd64:v1.8.3

docker push 10.10.10.1/library/k8s-dns-sidecar-amd64:1.14.7

docker push 10.10.10.1/library/k8s-dns-kube-dns-amd64:1.14.7

docker push 10.10.10.1/library/k8s-dns-dnsmasq-nanny-amd64:1.14.7
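The tag, remove, and push commands above follow one pattern: keep the image name and tag, and swap the registry prefix for 10.10.10.1/library. A minimal sketch of that mapping is shown below. harbor_ref is a hypothetical helper, and the loop only prints the docker commands (a dry run) so they can be reviewed before running them.

```shell
# Map a source image reference to its name under a Harbor project.
#   $1 = registry/project prefix, e.g. 10.10.10.1/library
#   $2 = source image, e.g. gcr.io/google_containers/foo:1.0
harbor_ref() {
  printf '%s/%s\n' "$1" "${2##*/}"   # keep only the final name:tag component
}

for img in \
  gcr.io/google_containers/kubernetes-dashboard-amd64:v1.8.3 \
  gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.7 \
  gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.7 \
  gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.7
do
  target=$(harbor_ref 10.10.10.1/library "$img")
  # Dry run: print the commands instead of executing them.
  echo docker tag "$img" "$target"
  echo docker rmi "$img"
  echo docker push "$target"
done
```

Dropping the echo prefixes would execute the commands; that requires the source images to be present locally and a prior docker login 10.10.10.1.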

(2) Check the result in the browser

IX. Install kube-dns

Download: https://github.com/kubernetes/kubernetes/tree/release-1.8/cluster/addons/dns

(1) We need these files:

kubedns-sa.yaml

kubedns-svc.yaml.base

kubedns-cm.yaml

kubedns-controller.yaml.base

[[email protected] data]# unzip kubernetes-release-1.8.zip

[[email protected] dns]# pwd

/data/kubernetes-release-1.8/cluster/addons/dns

[[email protected] dns]# cp {kubedns-svc.yaml.base,kubedns-cm.yaml,kubedns-controller.yaml.base,kubedns-sa.yaml} /root

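(2) Look up the clusterIP (the --cluster-dns value in the kubelet configuration)

[[email protected] ~]# cat /usr/local/kubernetes/config/kubelet

(3) The four YAML files can be merged into a single kube-dns.yaml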

apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "KubeDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.0.0.2  # must be the DNS address configured for kubelet
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  strategy:
    rollingUpdate:
      maxSurge: 10%
      maxUnavailable: 0
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      volumes:
      - name: kube-dns-config
        configMap:
          name: kube-dns
          optional: true
      #imagePullSecrets:
      #- name: registrykey-aliyun-vpc
      containers:
      - name: kubedns
        image: 10.10.10.1/library/k8s-dns-kube-dns-amd64:1.14.7
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        livenessProbe:
          httpGet:
            path: /healthcheck/kubedns
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /readiness
            port: 8081
            scheme: HTTP
          # we poll on pod startup for the Kubernetes master service and
          # only setup the /readiness HTTP server once that's available.
          initialDelaySeconds: 3
          timeoutSeconds: 5
        args:
        - --domain=cluster.local
        - --dns-port=10053
        - --config-dir=/kube-dns-config
        - --kube-master-url=http://10.10.10.1:8080  # set to your apiserver address
        - --v=2
        env:
        - name: PROMETHEUS_PORT
          value: "10055"
        ports:
        - containerPort: 10053
          name: dns-local
          protocol: UDP
        - containerPort: 10053
          name: dns-tcp-local
          protocol: TCP
        - containerPort: 10055
          name: metrics
          protocol: TCP
        volumeMounts:
        - name: kube-dns-config
          mountPath: /kube-dns-config
      - name: dnsmasq
        image: 10.10.10.1/library/k8s-dns-dnsmasq-nanny-amd64:1.14.7
        livenessProbe:
          httpGet:
            path: /healthcheck/dnsmasq
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - -v=2
        - -logtostderr
        - -configDir=/etc/k8s/dns/dnsmasq-nanny
        - -restartDnsmasq=true
        - --
        - -k
        - --cache-size=1000
        - --no-negcache
        - --log-facility=-
        - --server=/cluster.local/127.0.0.1#10053
        - --server=/in-addr.arpa/127.0.0.1#10053
        - --server=/ip6.arpa/127.0.0.1#10053
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        # see: https://github.com/kubernetes/kubernetes/issues/29055 for details
        resources:
          requests:
            cpu: 150m
            memory: 20Mi
        volumeMounts:
        - name: kube-dns-config
          mountPath: /etc/k8s/dns/dnsmasq-nanny
      - name: sidecar
        image: 10.10.10.1/library/k8s-dns-sidecar-amd64:1.14.7
        livenessProbe:
          httpGet:
            path: /metrics
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - --v=2
        - --logtostderr
        - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local,5,SRV
        - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local,5,SRV
        ports:
        - containerPort: 10054
          name: metrics
          protocol: TCP
        resources:
          requests:
            memory: 20Mi
            cpu: 10m
      dnsPolicy: Default  # Don't use cluster DNS.

(4) Create and delete

# kubectl create -f kube-dns.yaml ### create

# kubectl delete -f kube-dns.yaml ### delete; not needed during installation

(5) Check

[[email protected] ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kube-dns-5855d8c4f7-sbb7x 3/3 Running 0

[[email protected] ~]# kubectl describe pod -n kube-system kube-dns-5855d8c4f7-sbb7x ### if the pod fails, the error details are shown here
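To check the add-on pods from a script rather than by eye, the READY and STATUS columns of the kubectl get pods listing can be parsed. The sketch below assumes the column layout shown above (NAME, READY, STATUS, ...); all_pods_ready is a hypothetical helper name.

```shell
# Read `kubectl get pods` output on stdin. Succeed only if every pod
# row shows STATUS "Running" and READY as n/n. The header row is skipped.
all_pods_ready() {
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2] || $3 != "Running") bad = 1 }
       END { exit bad }'
}

# Assumed usage:
#   kubectl get pods -n kube-system | all_pods_ready && echo "kube-system pods are ready"
```

An empty listing also passes this check, so combine it with a row count if at least one pod is expected.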

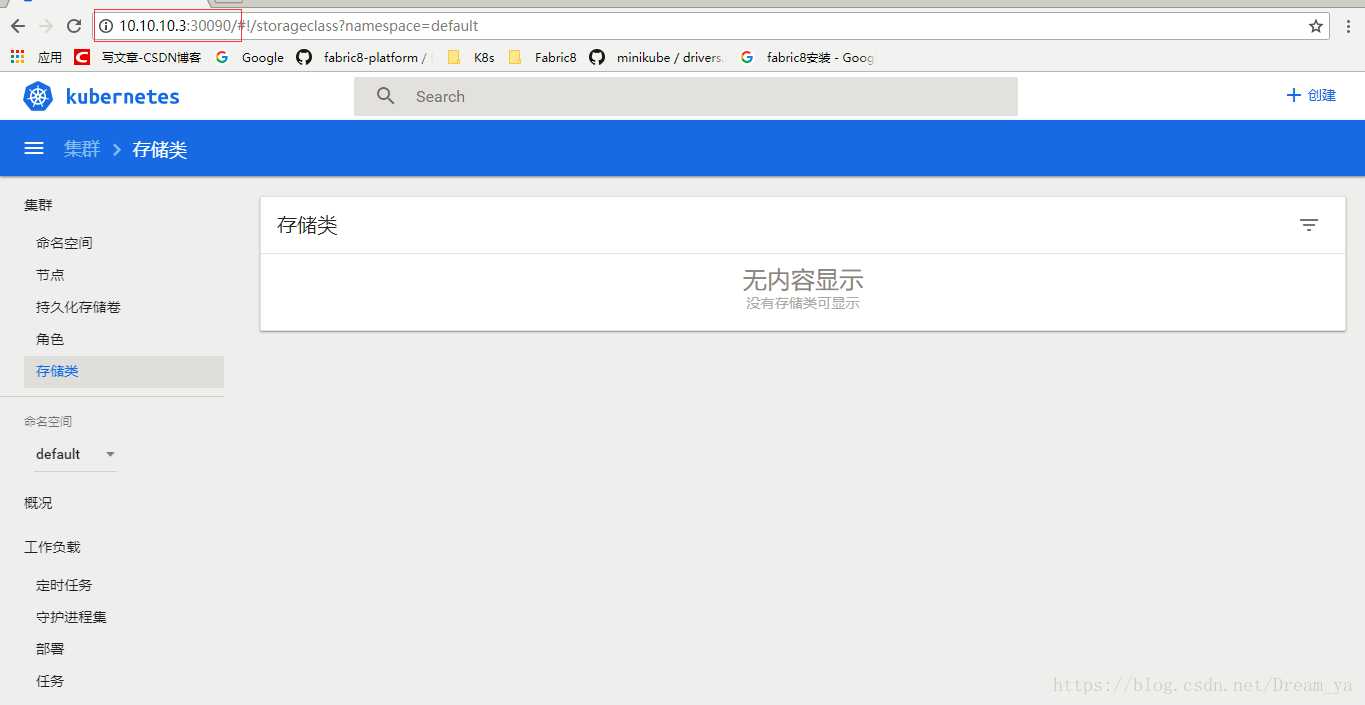

X. Install the Dashboard

1. Write kube-dashboard.yaml

kind: Deployment
apiVersion: apps/v1beta2
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: 10.10.10.1/library/kubernetes-dashboard-amd64:v1.8.3
        ports:
        - containerPort: 9090
          protocol: TCP
        args:
        - --apiserver-host=http://10.10.10.1:8080  # set to the Master IP
        volumeMounts:
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 9090
    nodePort: 30090
  selector:
    k8s-app: kubernetes-dashboard

2. Create the dashboard

# kubectl create -f kube-dashboard.yaml

3. Find the IP of the node running the dashboard

[[email protected] dashboard]# kubectl get pods -o wide --namespace kube-system

NAME READY STATUS RESTARTS AGE IP NODE

kube-dns-5855d8c4f7-sbb7x 3/3 Running 0 1h 172.17.89.3 10.10.10.1

kubernetes-dashboard-7f8b5f54f9-gqjsh 1/1 Running 0 1h 172.17.39.2 10.10.10.3


4. Test

(1) Browser

http://10.10.10.3:30090

(2) Result
