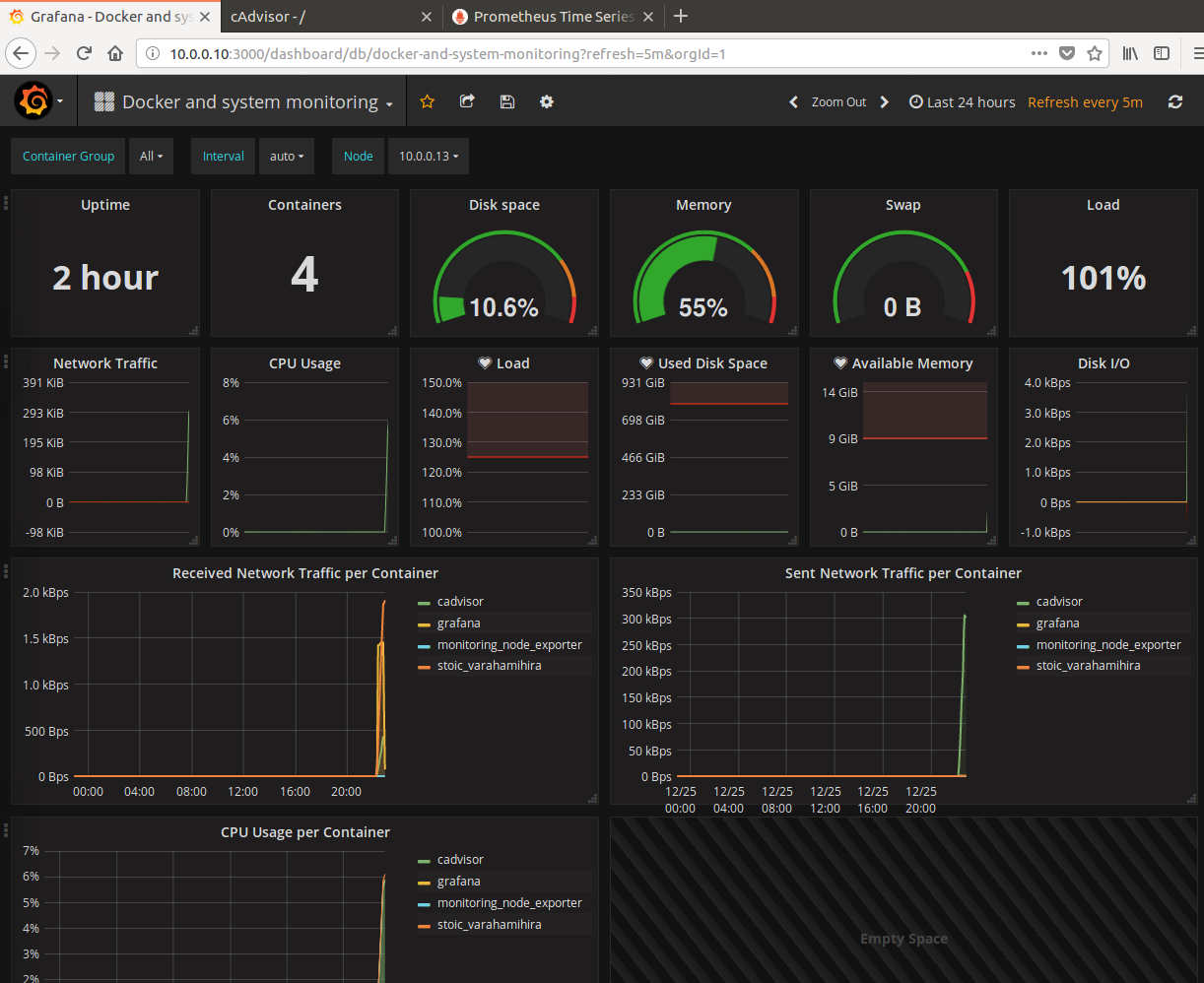

Docker: Docker Monitoring Platform

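Monitoring dimensions

- Host dimension
  - Host CPU
  - Host memory
  - Local images on the host
  - Containers running on the host
- Image dimension
  - Basic image information
  - Basic information about images and their containers
  - Image build history (layer dependency information)
- Container dimension
  - Basic container information
  - Container runtime state
  - Container resource usage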

Docker monitoring commands

docker ps

docker images

docker stats

# Note: docker stats only works when libcontainer is selected as the execution driver

# docker stats has some limitations; the stats API returns more information

echo -e "GET /containers/tools/stats HTTP/1.0\r\n" | nc -U /var/run/docker.sock

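The same stats API can also be queried with curl instead of nc. The following is a minimal sketch, not a definitive recipe: it assumes curl 7.40 or later (for `--unix-socket`), the default socket path, and a running container named `tools` as in the command above. The helper names `stats_path` and `docker_stats_once` are made up for illustration. The `stream=false` query parameter asks the Engine API for a single sample instead of a continuous stream.

```shell
#!/bin/sh
# Path to the Docker daemon socket (default location; override if yours differs).
DOCKER_SOCK="${DOCKER_SOCK:-/var/run/docker.sock}"

# Build the Engine API path for a one-shot stats sample of a container.
# (Hypothetical helper name, used only in this sketch.)
stats_path() {
    printf '/containers/%s/stats?stream=false' "$1"
}

# Fetch one stats sample as JSON over the Unix socket.
docker_stats_once() {
    curl --silent --unix-socket "$DOCKER_SOCK" "http://localhost$(stats_path "$1")"
}

# Usage (needs a running container named "tools"):
# docker_stats_once tools
```

Keeping the path construction in its own function makes it easy to point the same call at another container name or ID.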
docker inspect

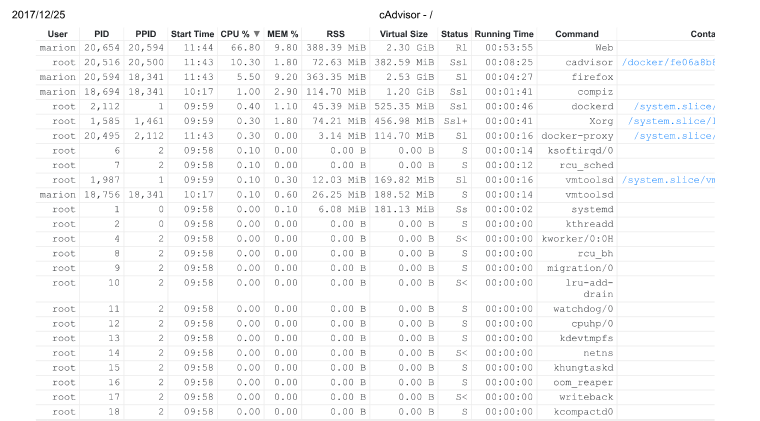

docker top

docker port

cAdvisor

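Some features of Google cAdvisor:

- A rich API for remote management (see the API docs)

- A web UI for management

- Another Google project alongside Kubernetes

- Monitoring data can be written to InfluxDB for storage and retrieval; many other storage plugins are supported

- Container statistics are exposed as Prometheus-format metrics at the /metrics HTTP endpoint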
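The /metrics endpoint from the last bullet can be inspected from a shell. This is a minimal sketch, assuming cAdvisor is published on port 8080 of the local host (as in the run command in this section). The helper name `filter_metric` is hypothetical; `container_memory_usage_bytes` is one of the metrics cAdvisor exports.

```shell
#!/bin/sh
# Print the samples of one metric from Prometheus text-format input on stdin.
# Matches "name{...} value" and "name value", skipping comments and
# longer metric names that merely share the same prefix.
filter_metric() {
    grep -E "^$1(\{| )"
}

# Usage (needs a running cAdvisor on localhost:8080):
# curl -s http://localhost:8080/metrics | filter_metric container_memory_usage_bytes
```

Anchoring the pattern at the start of the line is what keeps the `# HELP` and `# TYPE` comment lines out of the result.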

sudo docker run --volume=/:/rootfs:ro --volume=/var/run:/var/run:rw --volume=/sys:/sys:ro --volume=/var/lib/docker/:/var/lib/docker:ro --volume=/dev/disk/:/dev/disk:ro --publish=8080:8080 --detach=true --name=cadvisor google/cadvisor:latest

DataDog

SoundCloud's Prometheus

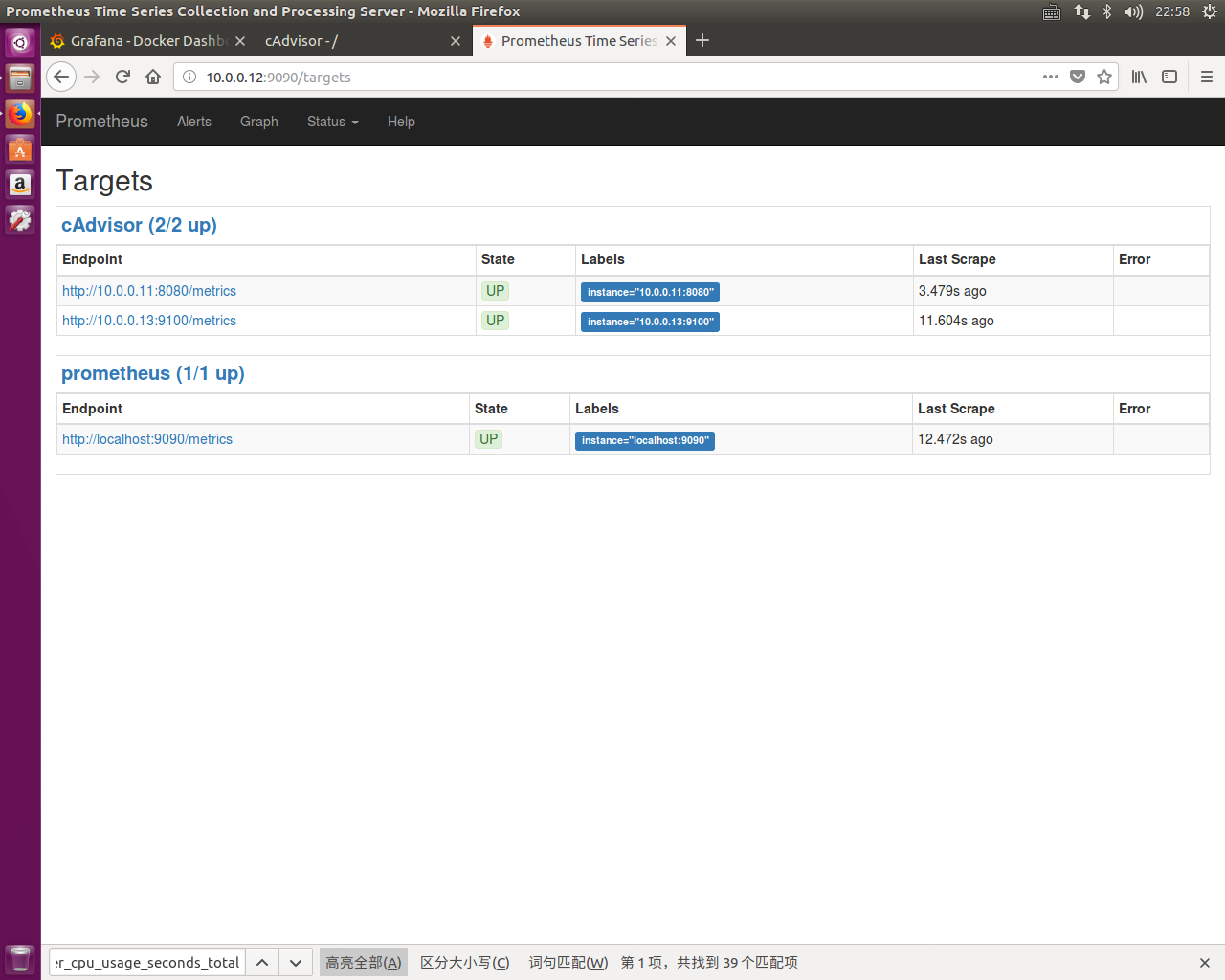

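Prometheus is an open-source service monitoring system and time-series database. It is used together with exporters: an exporter is a component built against the HTTP interface that Prometheus defines, and it collects metric data from an application so that Prometheus can scrape it.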
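To make the exporter idea concrete: what an exporter serves over HTTP is plain text in the Prometheus exposition format. The sketch below only prints one such sample and does not serve it over HTTP. The function name `emit_gauge` and the metric name `demo_queue_length` are hypothetical, chosen purely for illustration.

```shell
#!/bin/sh
# Emit one gauge sample in the Prometheus text exposition format:
# a "# TYPE" line declaring the metric type, then "name value".
emit_gauge() {
    printf '# TYPE %s gauge\n%s %s\n' "$1" "$1" "$2"
}

# Usage:
# emit_gauge demo_queue_length 7
```

A real exporter would return text like this from its /metrics endpoint each time Prometheus scrapes it.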

Global settings

Network configuration

docker network create --driver bridge --subnet 10.0.0.0/24 --gateway 10.0.0.1 monitor

Grafana configuration

# Pull the Grafana image

docker pull grafana/grafana

Grafana directories

- Configuration files: /etc/grafana/

- sqlite3 database file: /var/lib/grafana

Grafana environment variables in Docker

- GF_SERVER_ROOT_URL=http://grafana.server.name sets the URL at which Grafana is accessed

- GF_SECURITY_ADMIN_PASSWORD=secret sets the Grafana admin login password

- GF_INSTALL_PLUGINS=grafana-clock-panel,grafana-simple-json-datasource lists the plugins to install

Create persistent storage

docker run -d -v /var/lib/grafana --name grafana-storage busybox:latest

Start the container

# Start the Grafana container

docker run -d -p 3000:3000 --name grafana --volumes-from grafana-storage -e "GF_INSTALL_PLUGINS=grafana-clock-panel,grafana-simple-json-datasource" -e "GF_SERVER_ROOT_URL=http://10.0.0.10:3000" -e "GF_SECURITY_ADMIN_PASSWORD=marion" --network monitor --ip 10.0.0.10 --restart always grafana/grafana

# Check where the configuration files and data directory are mounted

docker inspect grafana
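Grafana may take a few seconds to start, especially when plugins are being installed, so a readiness check can be useful before opening the UI. This is a minimal sketch, assuming port 3000 is published on the local host as in the run command above. It polls Grafana's /api/health endpoint, and the helper name `retry` is hypothetical.

```shell
#!/bin/sh
# Run a command up to N times, sleeping S seconds between attempts.
# Returns 0 as soon as the command succeeds, 1 if every attempt fails.
retry() {
    attempts=$1
    delay=$2
    shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        "$@" && return 0
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Usage (needs the grafana container started as above):
# retry 30 2 curl -fs http://localhost:3000/api/health
```

The `-f` flag makes curl exit non-zero on HTTP error responses, so the loop keeps polling until Grafana answers successfully.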

cAdvisor

sudo docker run --volume=/:/rootfs:ro --volume=/var/run:/var/run:rw --volume=/sys:/sys:ro --volume=/var/lib/docker/:/var/lib/docker:ro --volume=/dev/disk/:/dev/disk:ro --detach=true --name=cadvisor --network monitor --ip 10.0.0.11 google/cadvisor:latest

Deploy with a docker-compose YAML file

docker-compose.yml

prometheus:
  image: prom/prometheus:latest
  container_name: monitoring_prometheus
  restart: unless-stopped
  volumes:
    - ./data/prometheus/config:/etc/prometheus/
    - ./data/prometheus/data:/prometheus
  # Note: single-dash flags are Prometheus 1.x syntax. Prometheus 2.x and later
  # expect --config.file and --storage.tsdb.path, and configure Alertmanager
  # in prometheus.yml instead of on the command line.
  command:
    - '-config.file=/etc/prometheus/prometheus.yml'
    - '-storage.local.path=/prometheus'
    - '-alertmanager.url=http://alertmanager:9093'
  expose:
    - 9090
  ports:
    - "9090:9090"
  links:
    - cadvisor:cadvisor
    - node-exporter:node-exporter

node-exporter:
  image: prom/node-exporter:latest
  container_name: monitoring_node_exporter
  restart: unless-stopped
  expose:
    - 9100

cadvisor:
  image: google/cadvisor:latest
  container_name: monitoring_cadvisor
  restart: unless-stopped
  volumes:
    - /:/rootfs:ro
    - /var/run:/var/run:rw
    - /sys:/sys:ro
    - /var/lib/docker/:/var/lib/docker:ro
  expose:
    - 8080

grafana:
  image: grafana/grafana:latest
  container_name: monitoring_grafana
  restart: unless-stopped
  links:
    - prometheus:prometheus
  volumes:
    - ./data/grafana:/var/lib/grafana
  environment:
    - GF_SECURITY_ADMIN_PASSWORD=MYPASSWORT
    - GF_USERS_ALLOW_SIGN_UP=false
    - GF_SERVER_DOMAIN=myrul.com
    - GF_SMTP_ENABLED=true
    - GF_SMTP_HOST=smtp.gmail.com:587
    # The next entry was obscured by the source page's email protection.
    - [email protected]
    - GF_SMTP_PASSWORD=mypassword
    # The next entry was obscured by the source page's email protection.
    - [email protected]

prometheus.yml

# my global config
global:
  scrape_interval: 120s     # Scrape targets every 120 seconds.
  evaluation_interval: 120s # Evaluate rules every 120 seconds.
  # scrape_timeout is set to the global default (10s).

  # Attach these labels to any time series or alerts when communicating with
  # external systems (federation, remote storage, Alertmanager).
  external_labels:
    monitor: 'my-project'

# Load and evaluate rules in these files every 'evaluation_interval' seconds.
rule_files:
  # - "alert.rules"
  # - "first.rules"
  # - "second.rules"

# A scrape configuration with a single job that covers Prometheus itself,
# cAdvisor, node-exporter, and nginx-exporter.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: 'prometheus'
    # Per-job scrape interval; overrides the global value (here both are 120s).
    scrape_interval: 120s
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ['localhost:9090', 'cadvisor:8080', 'node-exporter:9100', 'nginx-exporter:9113']

Command

docker-compose up -d

sysdig

docker pull sysdig/sysdig

docker run -i -t --name sysdig --privileged -v /var/run/docker.sock:/host/var/run/docker.sock -v /dev:/host/dev -v /proc:/host/proc:ro -v /boot:/host/boot:ro -v /lib/modules:/host/lib/modules:ro -v /usr:/host/usr:ro sysdig/sysdig

docker container exec -it sysdig bash

csysdig

Weave Scope

Commonly used container monitoring tools
