Scrapy框架学习爬取360摄影美图

Posted wdl1078390625

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Scrapy框架学习爬取360摄影美图相关的知识,希望对你有一定的参考价值。

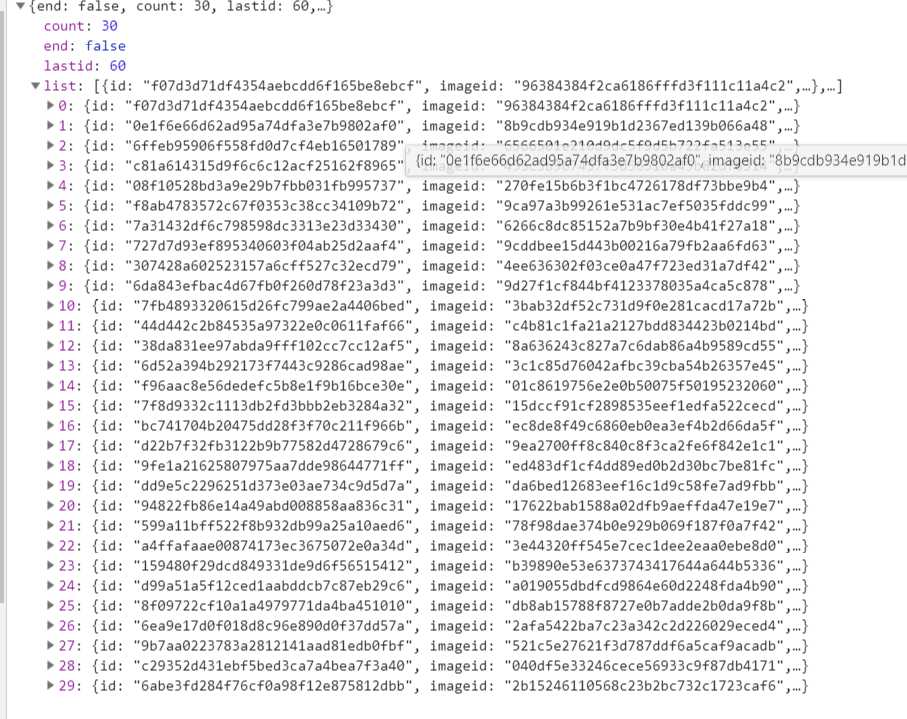

我们要爬取的网站为http://image.so.com/z?ch=photography,打开开发者工具,页面往下拉,观察到出现了如图所示Ajax请求,

其中list就是图片的详细信息,接着观察到每个Ajax请求的sn值会递增30,当sn为30时,返回前30张图片,当sn为60时,返回第31到60张图片,所以我们每次抓取时需要改变sn的值。接下来实现这个项目。

首先新建一个项目:scrapy startproject images360

新建一个Spider:scrapy genspider images images.so.com

在settings.py中定义爬取的最大量:MAX_PAGE=10

定义一个Item以接收Spider返回的Item:

# -*- coding: utf-8 -*- # Define here the models for your scraped items # # See documentation in: # https://doc.scrapy.org/en/latest/topics/items.html import scrapy class ImageItem(scrapy.Item): collection = table = ‘images‘ id = scrapy.Field() url = scrapy.Field() title = scrapy.Field() thumb = scrapy.Field()

修改images.py:

# -*- coding: utf-8 -*- import scrapy from scrapy import Spider,Request from urllib.parse import urlencode import json from images360.items import ImageItem class ImagesSpider(scrapy.Spider): name = ‘images‘ allowed_domains = [‘images.so.com‘] start_urls = [‘http://images.so.com/‘] def start_requests(self): data = {‘ch‘:‘photography‘,‘listtype‘:‘new‘} base_url = ‘https://image.so.com/zj?‘ for page in range(1,self.settings.get(‘MAX_PAGE‘)+1): data[‘sn‘] = page * 30 params = urlencode(data) url = base_url + params yield Request(url,self.parse) def parse(self, response): result = json.loads(response.text) for image in result.get(‘list‘): item = ImageItem() item[‘id‘] = image.get(‘imageid‘) item[‘url‘] = image.get(‘qhimg_url‘) item[‘title‘] = image.get(‘group_title‘) item[‘thumb‘] = image.get(‘qhimg_thumb_url‘) yield item

利用urlencode()方法将data转化为URL的get参数,每次爬取30张图片直到爬取完成。

修改settings.py中ROBOTSTXT_OBEY变量为False,这个变量代表是否遵守网站的爬取规则,若不修改则无法爬取。

接下来我们要把爬取到的数据存入数据库,新建数据库以及表的操作在此不再赘述。创建好数据库及表后,我们需实现一个Item Pipeline以实现存入数据库的操作:

# -*- coding: utf-8 -*- # Define your item pipelines here # # Don‘t forget to add your pipeline to the ITEM_PIPELINES setting # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html from scrapy import Request from scrapy.exceptions import DropItem import pymysql class MysqlPipeline(): def __init__(self,host,database,user,password,port): self.host = host self.database = database self.user = user self.password = password self.port = port @classmethod def from_crawler(cls,crawler): return cls( host=crawler.settings.get(‘MYSQL_HOST‘), database=crawler.settings.get(‘MYSQL_DATABASE‘), user=crawler.settings.get(‘MYSQL_USER‘), password=crawler.settings.get(‘MYSQL_PASSWORD‘), port=crawler.settings.get(‘MYSQL_PORT‘), ) def open_spider(self,spider): self.db = pymysql.connect(self.host,self.user,self.password, self.database,charset=‘utf8‘,port=self.port) self.cursor = self.db.cursor() def close_spider(self,spider): self.db.close() def process_item(self,item,spider): data = dict(item) keys = ‘, ‘.join(data.keys()) values = ‘, ‘.join([‘%s‘] * len(data)) sql = ‘insert into %s (%s) value (%s)‘ %(item.table,keys,values) self.cursor.execute(sql,tuple(data.values())) self.db.commit() return item

这里需要在settings.py中添加几个关于MySQL配置的变量,如下所示:

MYSQL_HOST = ‘localhost‘

MYSQL_DATABASE = ‘images360‘

MYSQL_PORT = 3306

MYSQL_USER = ‘root‘

MYSQL_PASSWORD = ‘123456‘

scrapy提供了专门处理下载的Pipeline。首先定义存储文件的路径,在settings.py中添加:IMAGES_STORE = ‘./images‘

定义ImagePipeline:

# -*- coding: utf-8 -*- # Define your item pipelines here # # Don‘t forget to add your pipeline to the ITEM_PIPELINES setting # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html from scrapy import Request from scrapy.exceptions import DropItem from scrapy.pipelines.images import ImagesPipeline import pymysql class ImagePipeline(ImagesPipeline): def file_path(self,request,response=None,info=None): url = request.url file_name = url.split(‘/‘)[-1] return file_name def item_completed(self,results,item,info): image_paths = [x[‘path‘] for ok,x in results if ok] if not image_paths: raise DropItem(‘Image Downloaded Failed‘) return item def get_media_requests(self,item,info): yield Request(item[‘url‘]) class MysqlPipeline(): def __init__(self,host,database,user,password,port): self.host = host self.database = database self.user = user self.password = password self.port = port @classmethod def from_crawler(cls,crawler): return cls( host=crawler.settings.get(‘MYSQL_HOST‘), database=crawler.settings.get(‘MYSQL_DATABASE‘), user=crawler.settings.get(‘MYSQL_USER‘), password=crawler.settings.get(‘MYSQL_PASSWORD‘), port=crawler.settings.get(‘MYSQL_PORT‘), ) def open_spider(self,spider): self.db = pymysql.connect(self.host,self.user,self.password, self.database,charset=‘utf8‘,port=self.port) self.cursor = self.db.cursor() def close_spider(self,spider): self.db.close() def process_item(self,item,spider): data = dict(item) keys = ‘, ‘.join(data.keys()) values = ‘, ‘.join([‘%s‘] * len(data)) sql = ‘insert into %s (%s) value (%s)‘ %(item.table,keys,values) self.cursor.execute(sql,tuple(data.values())) self.db.commit() return item

get_media_requests()方法取出Item对象的URL字段,生成Request对象发送给Scheduler,等待执行下载。

file_path()方法返回图片保存的文件名。

item_complete()方法当图片下载成功时返回Item说明下载成功,否则抛出DropItem异常,忽略这张图片。

最后需在settings.py文件中设置ITEM_PIPELINES以启动item管道:

ITEM_PIPELINES = { ‘images360.pipelines.ImagePipeline‘: 300, ‘images360.pipelines.MysqlPipeline‘: 301 }

大功告成,现在可以进行爬取了~输入scrapy crawl images即可完成爬取。

以上是关于Scrapy框架学习爬取360摄影美图的主要内容,如果未能解决你的问题,请参考以下文章