hive安装教程本地模式

Posted pu20065226

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了hive安装教程本地模式相关的知识,希望对你有一定的参考价值。

1.安装模式介绍:

Hive官网上介绍了Hive的3种安装方式,分别对应不同的应用场景。

a、内嵌模式(元数据保村在内嵌的derby种,允许一个会话链接,尝试多个会话链接时会报错)

b、本地模式(本地安装mysql 替代derby存储元数据)

c、远程模式(远程安装mysql 替代derby存储元数据)

2.安装准备:

前提:已经安装java JDK1.7以上,hadoop可用,mysql可用。

下载:

http://mirror.bit.edu.cn/apache/hive/hive-1.2.2/

解压

[[email protected] Hive]$ pwd

/usr/hadoop/Hive

[[email protected] Hive]$tar -xvf apache-hive-1.2.2-bin.tar.gz

3.mysql建用户授权,建库

[[email protected]]$ mysql -uroot -p MariaDB [(none)]> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | mysql | | performance_schema | | test | +--------------------+ 4 rows in set (0.00 sec) MariaDB [(none)]> create user hive identified by ‘hive‘ -> ; Query OK, 0 rows affected (0.11 sec) MariaDB [(none)]> select user from mysql.user; +--------------+ | user | +--------------+ | hive | | replicate | | root | | sample | | sample_col | | sample_table | | root | | replicate | | root | | | | root | | | | root | +--------------+ 13 rows in set (0.00 sec) MariaDB [(none)]> grant all privileges on *.* to [email protected]‘%‘ identified by ‘hive‘; Query OK, 0 rows affected (0.03 sec) MariaDB [(none)]> flush privileges; Query OK, 0 rows affected (0.01 sec)

然后用新用户登陆库

mysql -uhive -phive MariaDB [(none)]> create database hive; Query OK, 1 row affected (0.00 sec)

MariaDB [hive]> use hive Database changed MariaDB [hive]> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | hive | | mysql | | performance_schema | | test | +--------------------+ 5 rows in set (0.00 sec) MariaDB [hive]>

4.主机root添加环境变量

su - root [[email protected]-master bin]# vi /etc/profile export JAVA_HOME=/usr/java/jdk1.7.0_79 export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar export HIVE_HOME=/usr/hadoop/Hive/apache-hive-1.2.2-bin export HADOOP_HOME=/usr/hadoop/hadoop-2.7.5 export PATH=$HIVE_HOME/bin:/usr/hadoop/Hbase/hbase-1.3.1/bin:$HADOOP_HOME/bin:$JAVA_HOME/bin:$PATH [[email protected]-master bin]# source /etc/profile

5.配置Hive

前提:将jdbc拷贝到lib下:/usr/hadoop/Hive/apache-hive-1.2.2-bin/lib/mariadb-java-client-1.7.4.jar 自己先测试jdbc能连库能用

配置hive-site.xml

[[email protected] conf]$ su - hadoop [[email protected]-master conf]$ pwd /usr/hadoop/Hive/apache-hive-1.2.2-bin/conf

[[email protected] conf]$ cp hive-default.xml.template hive-site.xml

[[email protected] conf]echo ‘‘>hive-site.xml [[email protected]-master conf]$ vi hive-site.xml <configuration> <property> <name>hive.metastore.warehouse.dir</name> <value>/user/hive/warehouse</value> </property> <property> <name>hive.metastore.local</name> <value>true</value> </property> <property> <name>javax.jdo.option.ConnectionURL</name> <value>jdbc:mariadb://192.168.48.129:3306/hive</value> </property> <property> <name>javax.jdo.option.ConnectionUserName</name> <value>hive</value> </property> <property> <name>javax.jdo.option.ConnectionPassword</name> <value>hive</value> </property> <property> <name>javax.jdo.option.ConnectionDriverName</name> <value>org.mariadb.jdbc.Driver</value> </property> <property> <name>datanucleus.schema.autoCreateAll</name> <value>true</value> </property> <property> <name>hive.metastore.schema.verification</name> <value>false</value> </property> </configuration>

配置hive-env.sh

[[email protected] conf]$ cp hive-env.sh.template hive-env.sh [[email protected]-master conf]$ vi hive-env.sh # Set HADOOP_HOME to point to a specific hadoop install directory export HADOOP_HOME=/usr/hadoop/hadoop-2.7.5 # Hive Configuration Directory can be controlled by: export HIVE_CONF_DIR=/usr/hadoop/Hive/apache-hive-1.2.2-bin/conf # Folder containing extra ibraries required for hive compilation/execution can be controlled by: # export HIVE_AUX_JARS_PATH=

6.在HDFS上建立/tmp和/user/hive/warehouse目录,并赋予组用户写权限。

hadoop dfs -mkdir /tmp hadoop dfs -mkdir -p /user/hive/warehouse hadoop dfs -chmod g+w /tmp hadoop dfs -chmod g+w /user/hive/warehouse

7.启动

[[email protected] ~]$ hive 18/10/22 19:10:42 WARN conf.HiveConf: HiveConf of name hive.metastore.local does not exist Logging initialized using configuration in jar:file:/usr/hadoop/Hive/apache-hive-1.2.2-bin/lib/hive-common-1.2.2.jar!/hive-log4j.properties hive>

成功安装

8.查看mysql,hive库下多了很多表

[[email protected] ~]$ mysql -uhive -phive Welcome to the MariaDB monitor. Commands end with ; or g. Your MariaDB connection id is 373 Server version: 5.5.41-MariaDB-log MariaDB Server Copyright (c) 2000, 2014, Oracle, MariaDB Corporation Ab and others. Type ‘help;‘ or ‘h‘ for help. Type ‘c‘ to clear the current input statement. MariaDB [(none)]> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | hive | | mysql | | performance_schema | | test | +--------------------+ 5 rows in set (0.01 sec) MariaDB [(none)]> use hive Reading table information for completion of table and column names You can turn off this feature to get a quicker startup with -A Database changed MariaDB [hive]> show tables; +---------------------------+ | Tables_in_hive | +---------------------------+ | BUCKETING_COLS | | CDS | | COLUMNS_V2 | | DATABASE_PARAMS | | DBS | | FUNCS | | FUNC_RU | | GLOBAL_PRIVS | | PARTITIONS | | PARTITION_KEYS | | PARTITION_KEY_VALS | | PARTITION_PARAMS | | PART_COL_STATS | | ROLES | | SDS | | SD_PARAMS | | SEQUENCE_TABLE | | SERDES | | SERDE_PARAMS | | SKEWED_COL_NAMES | | SKEWED_COL_VALUE_LOC_MAP | | SKEWED_STRING_LIST | | SKEWED_STRING_LIST_VALUES | | SKEWED_VALUES | | SORT_COLS | | TABLE_PARAMS | | TAB_COL_STATS | | TBLS | | VERSION | +---------------------------+ 29 rows in set (0.00 sec) MariaDB [hive]>

9.查看hdfs变化

[hado[email protected] ~]$ hadoop dfs -lsr / DEPRECATED: Use of this script to execute hdfs command is deprecated. Instead use the hdfs command for it. lsr: DEPRECATED: Please use ‘ls -R‘ instead. drwxr-xr-x - hadoop supergroup 0 2018-03-19 19:54 /system drwx-w---- - hadoop supergroup 0 2018-10-22 19:10 /tmp drwx------ - hadoop supergroup 0 2018-02-22 23:34 /tmp/hadoop-yarn drwx------ - hadoop supergroup 0 2018-02-22 23:34 /tmp/hadoop-yarn/staging drwx------ - hadoop supergroup 0 2018-02-22 23:34 /tmp/hadoop-yarn/staging/hadoop drwx------ - hadoop supergroup 0 2018-02-22 23:41 /tmp/hadoop-yarn/staging/hadoop/.staging drwxr-xr-x - hadoop supergroup 0 2018-02-22 23:34 /tmp/hadoop-yarn/staging/history drwxrwxrwt - hadoop supergroup 0 2018-02-22 23:34 /tmp/hadoop-yarn/staging/history/done_intermediate drwxrwx--- - hadoop supergroup 0 2018-02-22 23:41 /tmp/hadoop-yarn/staging/history/done_intermediate/hadoop -rwxrwx--- 3 hadoop supergroup 60426 2018-02-22 23:41 /tmp/hadoop-yarn/staging/history/done_intermediate/hadoop/job_1519369589927_0001-1519371280230-hadoop-word+count-1519371687036-5-1-SUCCEEDED-default-1519371298933.jhist -rwxrwx--- 3 hadoop supergroup 353 2018-02-22 23:41 /tmp/hadoop-yarn/staging/history/done_intermediate/hadoop/job_1519369589927_0001.summary -rwxrwx--- 3 hadoop supergroup 120116 2018-02-22 23:41 /tmp/hadoop-yarn/staging/history/done_intermediate/hadoop/job_1519369589927_0001_conf.xml drwx-wx-wx - hadoop supergroup 0 2018-10-22 19:10 /tmp/hive drwx------ - hadoop supergroup 0 2018-10-22 19:10 /tmp/hive/hadoop drwx------ - hadoop supergroup 0 2018-10-22 19:10 /tmp/hive/hadoop/f0cbabab-0d18-4b1a-8d77-f7efb35ca986 drwx------ - hadoop supergroup 0 2018-10-22 19:10 /tmp/hive/hadoop/f0cbabab-0d18-4b1a-8d77-f7efb35ca986/_tmp_space.db -rw-r--r-- 3 hadoop supergroup 18 2018-02-23 22:39 /upload drwxr-xr-x - hadoop supergroup 0 2018-10-22 03:11 /user drwxr-xr-x - hadoop supergroup 0 2018-02-23 22:38 /user/hadoop drwxr-xr-x - hadoop supergroup 0 2018-02-22 23:41 /user/hadoop/output -rw-r--r-- 3 hadoop supergroup 0 2018-02-22 23:41 /user/hadoop/output/_SUCCESS -rw-r--r-- 3 hadoop supergroup 6757 2018-02-22 23:41 /user/hadoop/output/part-r-00000 drwxr-xr-x - hadoop supergroup 0 2018-02-23 22:45 /user/hadoop/upload -rw-r--r-- 3 hadoop supergroup 18 2018-02-23 22:45 /user/hadoop/upload/my-local.txt drwxr-xr-x - hadoop supergroup 0 2018-10-22 03:11 /user/hive drwxrwxr-x - hadoop supergroup 0 2018-10-22 03:11 /user/hive/warehouse drwxr-xr-x - hadoop supergroup 0 2018-03-16 01:32 /user/test22 drwxr-xr-x - hadoop supergroup 0 2018-03-16 02:02 /user/test22/input -rw-r--r-- 3 hadoop supergroup 4277 2018-03-16 02:02 /user/test22/input/hadoop-env.sh -rw-r--r-- 3 hadoop supergroup 1449 2018-03-16 02:02 /user/test22/input/httpfs-env.sh -rw-r--r-- 3 hadoop supergroup 1527 2018-03-16 02:02 /user/test22/input/kms-env.sh -rw-r--r-- 3 hadoop supergroup 1383 2018-03-16 02:02 /user/test22/input/mapred-env.sh -rw-r--r-- 3 hadoop supergroup 4567 2018-03-16 02:02 /user/test22/input/yarn-env.sh drwxr-xr-x - hadoop supergroup 0 2018-03-16 01:32 /user/test22/output drwxr-xr-x - hadoop supergroup 0 2018-03-16 01:32 /user/test22/output/count -rw-r--r-- 3 hadoop supergroup 0 2018-03-16 01:32 /user/test22/output/count/_SUCCESS -rw-r--r-- 3 hadoop supergroup 6757 2018-03-16 01:32 /user/test22/output/count/part-r-00000

10.测试

hive中建库建表

进入hive 创建一个测试库,一个测试表

[[email protected] ~]$ hive 18/10/22 19:10:42 WARN conf.HiveConf: HiveConf of name hive.metastore.local does not exist Logging initialized using configuration in jar:file:/usr/hadoop/Hive/apache-hive-1.2.2-bin/lib/hive-common-1.2.2.jar!/hive-log4j.properties hive> > ; hive> create database hive_test; OK Time taken: 2.558 seconds hive> show databases; OK default hive_test Time taken: 0.774 seconds, Fetched: 2 row(s) hive>

准备数据

[[email protected] pendingLoadData]$ pwd /usr/hadoop/Hive/pendingLoadData [[email protected]-master pendingLoadData]$ cat pepole.txt 1001 aaaa 1002 bbbb 1003 cccc 1004 dddd

创建测试表

[[email protected] pendingLoadData]$ hive

hive> use hive_test; OK Time taken: 0.263 seconds hive> create table dep(id int,name string) row format delimited fields terminated by ‘ ‘; OK Time taken: 0.843 seconds hive> load data local inpath ‘/usr/hadoop/Hive/pendingLoadData/pepole.txt‘ into table hive_test.dep; Loading data to table hive_test.dep Table hive_test.dep stats: [numFiles=1, totalSize=3901] OK Time taken: 2.898 seconds hive> load data local inpath ‘/usr/hadoop/Hive/pendingLoadData/pepole.txt‘ into table hive_test.dep; Loading data to table hive_test.dep Table hive_test.dep stats: [numFiles=1, numRows=0, totalSize=40, rawDataSize=0] OK Time taken: 0.355 seconds hive> select * from dep; OK 1001 aaaa 1002 bbbb 1003 cccc 1004 dddd Time taken: 0.119 seconds, Fetched: 4 row(s) hive>

查看mysql

[[email protected] ~]$ mysql -uhive -phive MariaDB [(none)]> use hive MariaDB [hive]> select * from DBS; +-------+-----------------------+------------------------------------------------------------+-----------+------------+------------+ | DB_ID | DESC | DB_LOCATION_URI | NAME | OWNER_NAME | OWNER_TYPE | +-------+-----------------------+------------------------------------------------------------+-----------+------------+------------+ | 1 | Default Hive database | hdfs://hadoop-master:9000/user/hive/warehouse | default | public | ROLE | | 2 | NULL | hdfs://hadoop-master:9000/user/hive/warehouse/hive_test.db | hive_test | hadoop | USER | +-------+-----------------------+------------------------------------------------------------+-----------+------------+------------+ 2 rows in set (0.04 sec) MariaDB [hive]> select * from TBLS; +--------+-------------+-------+------------------+--------+-----------+-------+----------+---------------+--------------------+--------------------+ | TBL_ID | CREATE_TIME | DB_ID | LAST_ACCESS_TIME | OWNER | RETENTION | SD_ID | TBL_NAME | TBL_TYPE | VIEW_EXPANDED_TEXT | VIEW_ORIGINAL_TEXT | +--------+-------------+-------+------------------+--------+-----------+-------+----------+---------------+--------------------+--------------------+ | 1 | 1540262810 | 2 | 0 | hadoop | 0 | 1 | dep | MANAGED_TABLE | NULL | NULL | +--------+-------------+-------+------------------+--------+-----------+-------+----------+---------------+--------------------+--------------------+ 1 row in set (0.00 sec)

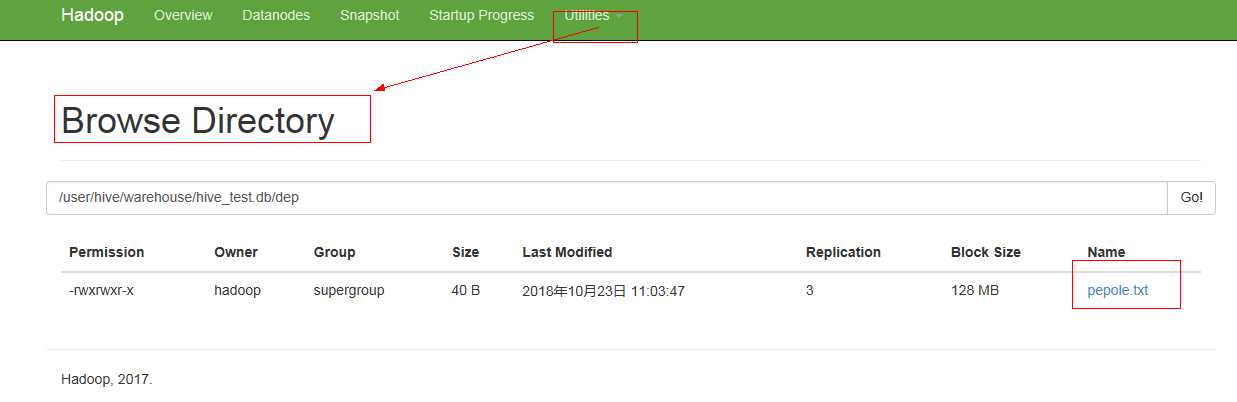

查看hdfs

[[email protected] pendingLoadData]$ hadoop dfs -lsr / DEPRECATED: Use of this script to execute hdfs command is deprecated. Instead use the hdfs command for it. lsr: DEPRECATED: Please use ‘ls -R‘ instead. drwxr-xr-x - hadoop supergroup 0 2018-03-19 19:54 /system drwx-w---- - hadoop supergroup 0 2018-10-22 19:10 /tmp drwx------ - hadoop supergroup 0 2018-02-22 23:34 /tmp/hadoop-yarn drwx------ - hadoop supergroup 0 2018-02-22 23:34 /tmp/hadoop-yarn/staging drwx------ - hadoop supergroup 0 2018-02-22 23:34 /tmp/hadoop-yarn/staging/hadoop drwx------ - hadoop supergroup 0 2018-02-22 23:41 /tmp/hadoop-yarn/staging/hadoop/.staging drwxr-xr-x - hadoop supergroup 0 2018-02-22 23:34 /tmp/hadoop-yarn/staging/history drwxrwxrwt - hadoop supergroup 0 2018-02-22 23:34 /tmp/hadoop-yarn/staging/history/done_intermediate drwxrwx--- - hadoop supergroup 0 2018-02-22 23:41 /tmp/hadoop-yarn/staging/history/done_intermediate/hadoop -rwxrwx--- 3 hadoop supergroup 60426 2018-02-22 23:41 /tmp/hadoop-yarn/staging/history/done_intermediate/hadoop/job_1519369589927_0001-1519371280230-hadoop-word+count-1519371687036-5-1-SUCCEEDED-default-1519371298933.jhist -rwxrwx--- 3 hadoop supergroup 353 2018-02-22 23:41 /tmp/hadoop-yarn/staging/history/done_intermediate/hadoop/job_1519369589927_0001.summary -rwxrwx--- 3 hadoop supergroup 120116 2018-02-22 23:41 /tmp/hadoop-yarn/staging/history/done_intermediate/hadoop/job_1519369589927_0001_conf.xml drwx-wx-wx - hadoop supergroup 0 2018-10-22 19:10 /tmp/hive drwx------ - hadoop supergroup 0 2018-10-22 19:10 /tmp/hive/hadoop drwx------ - hadoop supergroup 0 2018-10-22 20:03 /tmp/hive/hadoop/f0cbabab-0d18-4b1a-8d77-f7efb35ca986 drwx------ - hadoop supergroup 0 2018-10-22 19:10 /tmp/hive/hadoop/f0cbabab-0d18-4b1a-8d77-f7efb35ca986/_tmp_space.db -rw-r--r-- 3 hadoop supergroup 18 2018-02-23 22:39 /upload drwxr-xr-x - hadoop supergroup 0 2018-10-22 03:11 /user drwxr-xr-x - hadoop supergroup 0 2018-02-23 22:38 /user/hadoop drwxr-xr-x - hadoop supergroup 0 2018-02-22 23:41 /user/hadoop/output -rw-r--r-- 3 hadoop supergroup 0 2018-02-22 23:41 /user/hadoop/output/_SUCCESS -rw-r--r-- 3 hadoop supergroup 6757 2018-02-22 23:41 /user/hadoop/output/part-r-00000 drwxr-xr-x - hadoop supergroup 0 2018-02-23 22:45 /user/hadoop/upload -rw-r--r-- 3 hadoop supergroup 18 2018-02-23 22:45 /user/hadoop/upload/my-local.txt drwxr-xr-x - hadoop supergroup 0 2018-10-22 03:11 /user/hive drwxrwxr-x - hadoop supergroup 0 2018-10-22 19:30 /user/hive/warehouse drwxrwxr-x - hadoop supergroup 0 2018-10-22 20:03 /user/hive/warehouse/hive_test.db drwxrwxr-x - hadoop supergroup 0 2018-10-22 20:03 /user/hive/warehouse/hive_test.db/dep -rwxrwxr-x 3 hadoop supergroup 40 2018-10-22 20:03 /user/hive/warehouse/hive_test.db/dep/pepole.txt drwxr-xr-x - hadoop supergroup 0 2018-03-16 01:32 /user/test22

通过hadoop web查看 http://xx:xx:xx:xx:50070

问题及方案

问题1.

java.sql.SQLException: Unable to open a test connection to the given database. JDBC url = jdbc:mariadb://192.168.48.129:3306/hive, username = hive. Terminating connection pool (set lazyInit to true if you expect to start your database after your app). Original Exception: ------ java.sql.SQLSyntaxErrorException: Access denied for user ‘hive‘@‘%‘ to database ‘hive‘ 解决:mysql -uhive -phive 看有没有权限访问hive库(没有的话,可能能hive库是在别的用户下建的,先删除后,在用hive用户登陆,然后建库)

问题2.

Caused by: javax.jdo.JDOException: Couldnt obtain a new sequence (unique id) : (conn=362) Cannot execute statement: impossible to write to binary log since BINLOG_FORMAT = STATEMENT and at least one table uses a storage engine limited to row-based logging. InnoDB is limited to row-logging when transaction isolation level is READ COMMITTED or READ UNCOMMITTED. 解决:mysql>set global binlog_format=‘ROW‘ 说明: 这是因为,mysql默认的binlog_format是STATEMENT。 从 MySQL 5.1.12 开始,可以用以下三种模式来实现:基于SQL语句的复制(statement-based replication, SBR),基于行的复制(row-based replication, RBR),混合模式复制(mixed-based replication, MBR)。相应地,binlog的格式也有三种:STATEMENT,ROW,MIXED。 如果你采用默认隔离级别REPEATABLE-READ,那么建议binlog_format=ROW。如果你是READ-COMMITTED隔离级别,binlog_format=MIXED和binlog_format=ROW效果是一样的,binlog记录的格式都是ROW,对主从复制来说是很安全的参数。 Query OK, 0 rows affected (0.02 sec) mysql> SET GLOBAL binlog_format=ROW; Query OK, 0 rows affected (0.00 sec) 但是这样只是一次性的,重启后失效。 方案二 永久生效,需要修改my.ini # binary logging format - ROW binlog_format=ROW

以上是关于hive安装教程本地模式的主要内容,如果未能解决你的问题,请参考以下文章