A Simple FFmpeg Transcoding Program: Video Clipping

Posted by hustlijian


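I have learned a great deal from the articles of 雷神 (Lei Shen). I admire his spirit of sharing and am saddened that he died so young. One of the programs he shared, 《最简单的基于FFMPEG的转码程序》 ("The simplest FFmpeg-based transcoding program"), uses a filter and follows the flow in ffmpeg.c. He once said he wanted to write a version that does not need a filter, but never had the chance. My own work happens to involve FFmpeg processing, so I offer this article as a modest follow-up.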

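The overall flow:

1. Open the input file

2. Open the output file

3. Set up the decoding context

4. Set the output stream information

5. Set up the encoding context

6. Read the input in a loop; decode each packet, then re-encode and write it

7. Flush the decoder and encoder contexts

8. Close the files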
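Steps 6 and 7 rely on a "send input, then receive until nothing is ready" pattern, and on a final flush so that buffered data is not lost. The sketch below is a toy model of that pattern, not the FFmpeg API: `ToyCodec`, `Status`, `drain`, and `run` are hypothetical names, and the fixed `delay` stands in for whatever internal latency a real codec has.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

// Toy stand-in for a codec with internal latency. It is NOT the FFmpeg API;
// it only mimics the "send input, then receive until nothing is ready" shape.
enum class Status { Ok, Again, Eof };

class ToyCodec {
public:
    explicit ToyCodec(std::size_t delay) : delay_(delay) {}

    // A null pointer signals end of stream (the flush request).
    void send(const int *input) {
        if (input) {
            assert(!flushing_);  // sending data after a flush is a misuse
            queue_.push_back(*input);
        } else {
            flushing_ = true;
        }
    }

    // Hands out one item only when more than `delay_` items are buffered,
    // or when flushing; otherwise reports Again (needs input) or Eof.
    Status receive(int &out) {
        if (queue_.size() > delay_ || (flushing_ && !queue_.empty())) {
            out = queue_.front();
            queue_.pop_front();
            return Status::Ok;
        }
        return flushing_ ? Status::Eof : Status::Again;
    }

private:
    std::size_t delay_;
    bool flushing_ = false;
    std::deque<int> queue_;
};

// Drain everything the codec is currently willing to emit.
void drain(ToyCodec &codec, std::vector<int> &sink) {
    int item;
    while (codec.receive(item) == Status::Ok) sink.push_back(item);
}

// Feed all inputs (step 6) and optionally flush at the end (step 7).
std::vector<int> run(const std::vector<int> &inputs, std::size_t delay, bool flush) {
    ToyCodec codec(delay);
    std::vector<int> out;
    for (int v : inputs) {
        codec.send(&v);
        drain(codec, out);
    }
    if (flush) {
        codec.send(nullptr);
        drain(codec, out);
    }
    return out;
}
```

With five inputs and a delay of 2, `run` without the flush yields only the first three items; with the flush, all five come out. In this model, skipping step 7 silently truncates the end of the output.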

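The code in this article supports frame-accurate clipping. Because of GOP keyframe constraints, a cut at an arbitrary time cannot simply copy packets, so the program decodes and re-encodes, checking each frame's timestamp on the way to the encoder.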
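To make the keyframe constraint concrete, here is a small sketch of where a cut that only copies packets would have to begin. It assumes such a cut must start on a keyframe; `copy_cut_start_ms` and `copy_cut_error_ms` are hypothetical helpers written for illustration, and the keyframe positions are made up.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: given sorted keyframe timestamps (ms) and a requested
// start (ms), return the last keyframe at or before the request. A cut that
// copies packets without re-encoding would have to begin there.
int64_t copy_cut_start_ms(const std::vector<int64_t> &keyframes_ms, int64_t start_ms) {
    assert(!keyframes_ms.empty());
    assert(std::is_sorted(keyframes_ms.begin(), keyframes_ms.end()));
    // First keyframe strictly after the requested start.
    auto it = std::upper_bound(keyframes_ms.begin(), keyframes_ms.end(), start_ms);
    if (it == keyframes_ms.begin()) {
        return keyframes_ms.front();  // request precedes the first keyframe
    }
    return *(it - 1);
}

// How far the copy-based cut begins before the requested start.
int64_t copy_cut_error_ms(const std::vector<int64_t> &keyframes_ms, int64_t start_ms) {
    return start_ms - copy_cut_start_ms(keyframes_ms, start_ms);
}
```

For instance, with keyframes assumed every 4.8 s (a 120-frame GOP at 25 fps) at 0, 4800, and 9600 ms, a requested start of 1000 ms maps back to 0 ms, a full second early. Decoding and re-encoding removes this error because the encoder can begin a new GOP at any frame.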

Usage:

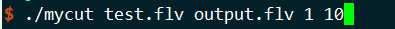

./mycut input output start end

For example, to cut out seconds 1 through 10 of a video, pass 1 as start and 10 as end.

The code is as follows:

// mycut.cpp

extern "C"

{

#include <libavutil/time.h>

#include <libavutil/timestamp.h>

#include <libavformat/avformat.h>

#include <libavformat/avio.h>

#include <libavfilter/avfiltergraph.h>

#include <libavfilter/buffersink.h>

#include <libavfilter/buffersrc.h>

#include <libavutil/opt.h>

#include <libavutil/imgutils.h>

#include <sys/types.h>

#include <unistd.h>

#include <sys/socket.h>

#include <arpa/inet.h>

#include <strings.h>

#include <stdio.h>

#include <stdlib.h> // atoi

#include <string.h> // strrchr

#include <sys/stat.h>

#include <fcntl.h>

#include <errno.h>

#include <sys/file.h>

#include <signal.h>

#include <sys/wait.h>

#include <time.h> // time_t, tm, time, localtime, strftime

#include <sys/time.h> // gettimeofday, struct timeval

}

#define TIME_DEN 1000

// Returns the local date/time formatted as 2014-03-19 11:11:52

char* getFormattedTime(void);

// Remove path from filename

#define __SHORT_FILE__ (strrchr(__FILE__, '/') ? strrchr(__FILE__, '/') + 1 : __FILE__)

// Main log macro

//#define __LOG__(format, loglevel, ...) printf("%s %-5s [%s] [%s:%d] " format "\n", getFormattedTime(), loglevel, __func__, __SHORT_FILE__, __LINE__, ## __VA_ARGS__)

#define __LOG__(format, loglevel, ...) printf("%lld %-5s [%s] [%s:%d] " format "\n", current_timestamp(), loglevel, __func__, __SHORT_FILE__, __LINE__, ## __VA_ARGS__)

// Specific log macros with

#define LOGDEBUG(format, ...) __LOG__(format, "DEBUG", ## __VA_ARGS__)

#define LOGWARN(format, ...) __LOG__(format, "WARN", ## __VA_ARGS__)

#define LOGERROR(format, ...) __LOG__(format, "ERROR", ## __VA_ARGS__)

#define LOGINFO(format, ...) __LOG__(format, "INFO", ## __VA_ARGS__)

// Returns the local date/time formatted as 2014-03-19 11:11:52

char* getFormattedTime(void) {

time_t rawtime;

struct tm* timeinfo;

time(&rawtime);

timeinfo = localtime(&rawtime);

// Must be static, otherwise won't work

static char _retval[26];

strftime(_retval, sizeof(_retval), "%Y-%m-%d %H:%M:%S", timeinfo);

return _retval;

}

long long current_timestamp() {

struct timeval te;

gettimeofday(&te, NULL); // get current time

long long milliseconds = te.tv_sec*1000LL + te.tv_usec/1000; // calculate milliseconds

// printf("milliseconds: %lld\n", milliseconds);

return milliseconds;

}

static int encode_and_save_pkt(AVCodecContext *enc_ctx, AVFormatContext *ofmt_ctx, AVStream *out_stream)

{

AVPacket enc_pkt;

av_init_packet(&enc_pkt);

enc_pkt.data = NULL;

enc_pkt.size = 0;

int ret = 0;

while (ret >= 0)

{

ret = avcodec_receive_packet(enc_ctx, &enc_pkt);

if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)

{

ret = 0;

break;

}

else if (ret < 0)

{

printf("[avcodec_receive_packet]Error during encoding, ret:%d\n", ret);

break;

}

LOGDEBUG("encode type:%d pts:%lld dts:%lld\n", enc_ctx->codec_type, (long long)enc_pkt.pts, (long long)enc_pkt.dts);

/* rescale output packet timestamp values from codec to stream timebase */

av_packet_rescale_ts(&enc_pkt, enc_ctx->time_base, out_stream->time_base);

enc_pkt.stream_index = out_stream->index;

ret = av_interleaved_write_frame(ofmt_ctx, &enc_pkt);

if (ret < 0)

{

printf("write frame error, ret:%d\n", ret);

break;

}

av_packet_unref(&enc_pkt);

}

return ret;

}

static int decode_and_send_frame(AVCodecContext *dec_ctx, AVCodecContext *enc_ctx, int start, int end)

{

AVFrame *frame = av_frame_alloc();

int ret = 0;

while (ret >= 0)

{

ret = avcodec_receive_frame(dec_ctx, frame);

if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)

{

ret = 0;

break;

}

else if (ret < 0)

{

printf("Error while receiving a frame from the decoder");

break;

}

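// Keep the full 64-bit timestamp; AVFrame::pts is an int64_t

int64_t pts = frame->pts;

LOGDEBUG("decode type:%d pts:%lld\n", dec_ctx->codec_type, (long long)pts);

// Trim: drop frames outside [start, end] seconds. The comparison assumes pts

// is in units of 1/TIME_DEN second (milliseconds), as with FLV input.

if ((pts < (int64_t)start*TIME_DEN) || (pts > (int64_t)end*TIME_DEN)) {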

continue;

}

frame->pict_type = AV_PICTURE_TYPE_NONE;

// Shift pts so that the clip starts at 0

frame->pts = pts - start*TIME_DEN;

//printf("pts:%lld\n", (long long)frame->pts);

ret = avcodec_send_frame(enc_ctx, frame);

if (ret < 0)

{

printf("Error sending a frame for encoding\n");

break;

}

}

av_frame_free(&frame);

return ret;

}

static int open_decodec_context(int *stream_idx,

AVCodecContext **dec_ctx, AVFormatContext *fmt_ctx, enum AVMediaType type)

{

int ret, stream_index;

AVStream *st;

AVCodec *dec = NULL;

AVDictionary *opts = NULL;

ret = av_find_best_stream(fmt_ctx, type, -1, -1, &dec, 0);

if (ret < 0)

{

fprintf(stderr, "Could not find %s stream in input file\n",

av_get_media_type_string(type));

return ret;

}

else

{

stream_index = ret;

st = fmt_ctx->streams[stream_index];

/* Allocate a codec context for the decoder */

*dec_ctx = avcodec_alloc_context3(dec);

if (!*dec_ctx)

{

fprintf(stderr, "Failed to allocate the %s codec context\n",

av_get_media_type_string(type));

return AVERROR(ENOMEM);

}

/* Copy codec parameters from input stream to output codec context */

if ((ret = avcodec_parameters_to_context(*dec_ctx, st->codecpar)) < 0)

{

fprintf(stderr, "Failed to copy %s codec parameters to decoder context\n",

av_get_media_type_string(type));

return ret;

}

if ((*dec_ctx)->codec_type == AVMEDIA_TYPE_VIDEO)

(*dec_ctx)->framerate = av_guess_frame_rate(fmt_ctx, st, NULL);

/* Init the decoders, with or without reference counting */

av_dict_set_int(&opts, "refcounted_frames", 1, 0);

if ((ret = avcodec_open2(*dec_ctx, dec, &opts)) < 0)

{

fprintf(stderr, "Failed to open %s codec\n",

av_get_media_type_string(type));

return ret;

}

*stream_idx = stream_index;

}

return 0;

}

static int set_encode_option(AVCodecContext *dec_ctx, AVDictionary **opt)

{

const char *profile = avcodec_profile_name(dec_ctx->codec_id, dec_ctx->profile);

if (profile)

{

if (!strcasecmp(profile, "high"))

{

av_dict_set(opt, "profile", "high", 0);

}

}

else

{

av_dict_set(opt, "profile", "main", 0);

}

av_dict_set(opt, "threads", "16", 0);

av_dict_set(opt, "preset", "slow", 0);

av_dict_set(opt, "level", "4.0", 0);

return 0;

}

static int open_encodec_context(int stream_index, AVCodecContext **oenc_ctx, AVFormatContext *fmt_ctx, enum AVMediaType type)

{

int ret;

AVStream *st;

AVCodec *encoder = NULL;

AVDictionary *opts = NULL;

AVCodecContext *enc_ctx;

st = fmt_ctx->streams[stream_index];

/* find encoder for the stream */

encoder = avcodec_find_encoder(st->codecpar->codec_id);

if (!encoder)

{

fprintf(stderr, "Failed to find %s codec\n",

av_get_media_type_string(type));

return AVERROR(EINVAL);

}

enc_ctx = avcodec_alloc_context3(encoder);

if (!enc_ctx)

{

printf("Failed to allocate the encoder context\n");

return AVERROR(ENOMEM);

}

AVCodecContext *dec_ctx = st->codec;

if (type == AVMEDIA_TYPE_VIDEO)

{

enc_ctx->height = dec_ctx->height;

enc_ctx->width = dec_ctx->width;

enc_ctx->sample_aspect_ratio = dec_ctx->sample_aspect_ratio;

enc_ctx->bit_rate = dec_ctx->bit_rate;

enc_ctx->rc_max_rate = dec_ctx->bit_rate;

enc_ctx->rc_buffer_size = dec_ctx->bit_rate;

enc_ctx->bit_rate_tolerance = 0;

// use yuv420P

enc_ctx->pix_fmt = AV_PIX_FMT_YUV420P;

// set frame rate

enc_ctx->time_base.num = 1;

enc_ctx->time_base.den = TIME_DEN;

enc_ctx->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;

enc_ctx->has_b_frames = false;

enc_ctx->max_b_frames = 0;

enc_ctx->gop_size = 120;

set_encode_option(dec_ctx, &opts);

}

else if (type == AVMEDIA_TYPE_AUDIO)

{

enc_ctx->sample_rate = dec_ctx->sample_rate;

enc_ctx->channel_layout = dec_ctx->channel_layout;

enc_ctx->channels = av_get_channel_layout_nb_channels(enc_ctx->channel_layout);

/* take first format from list of supported formats */

enc_ctx->sample_fmt = encoder->sample_fmts[0];

enc_ctx->time_base = (AVRational){1, TIME_DEN};

enc_ctx->bit_rate = dec_ctx->bit_rate;

enc_ctx->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;

}

else

{

ret = avcodec_copy_context(enc_ctx, st->codec);

if (ret < 0)

{

fprintf(stderr, "Failed to copy context from input to output stream codec context\n");

return ret;

}

}

if ((ret = avcodec_open2(enc_ctx, encoder, &opts)) < 0)

{

fprintf(stderr, "Failed to open %s codec\n",

av_get_media_type_string(type));

return ret;

}

*oenc_ctx = enc_ctx;

return 0;

}

int test_cut(char *input_file, char *output_file, int start, int end)

{

int ret = 0;

av_register_all();

// input streams

AVFormatContext *ifmt_ctx = NULL;

AVCodecContext *video_dec_ctx = NULL;

AVCodecContext *audio_dec_ctx = NULL;

char *flv_name = input_file;

int video_stream_idx = 0;

int audio_stream_idx = 1;

// output streams

AVFormatContext *ofmt_ctx = NULL;

AVCodecContext *audio_enc_ctx = NULL;

AVCodecContext *video_enc_ctx = NULL;

if ((ret = avformat_open_input(&ifmt_ctx, flv_name, 0, 0)) < 0)

{

printf("Could not open input file '%s' ret:%d\n", flv_name, ret);

goto end;

}

if ((ret = avformat_find_stream_info(ifmt_ctx, 0)) < 0)

{

printf("Failed to retrieve input stream information");

goto end;

}

if (open_decodec_context(&video_stream_idx, &video_dec_ctx, ifmt_ctx, AVMEDIA_TYPE_VIDEO) < 0)

{

printf("fail to open video decode context, ret:%d\n", ret);

goto end;

}

if (open_decodec_context(&audio_stream_idx, &audio_dec_ctx, ifmt_ctx, AVMEDIA_TYPE_AUDIO) < 0)

{

printf("fail to open audio decode context, ret:%d\n", ret);

goto end;

}

av_dump_format(ifmt_ctx, 0, input_file, 0);

// set up the output

avformat_alloc_output_context2(&ofmt_ctx, NULL, NULL, output_file);

if (!ofmt_ctx)

{

printf("cannot open output context\n");

ret = AVERROR_UNKNOWN;

goto end;

}

// video stream

AVStream *out_stream;

out_stream = avformat_new_stream(ofmt_ctx, NULL);

if (!out_stream)

{

printf("Failed allocating output stream\n");

ret = AVERROR_UNKNOWN;

goto end;

}

if ((ret = open_encodec_context(video_stream_idx, &video_enc_ctx, ifmt_ctx, AVMEDIA_TYPE_VIDEO)) < 0)

{

printf("video enc ctx init err\n");

goto end;

}

ret = avcodec_parameters_from_context(out_stream->codecpar, video_enc_ctx);

if (ret < 0)

{

printf("Failed to copy codec parameters\n");

goto end;

}

video_enc_ctx->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;

out_stream->time_base = video_enc_ctx->time_base;

out_stream->codecpar->codec_tag = 0;

// audio stream

out_stream = avformat_new_stream(ofmt_ctx, NULL);

if (!out_stream)

{

printf("Failed allocating output stream\n");

ret = AVERROR_UNKNOWN;

goto end;

}

if ((ret = open_encodec_context(audio_stream_idx, &audio_enc_ctx, ifmt_ctx, AVMEDIA_TYPE_AUDIO)) < 0)

{

printf("audio enc ctx init err\n");

goto end;

}

ret = avcodec_parameters_from_context(out_stream->codecpar, audio_enc_ctx);

if (ret < 0)

{

printf("Failed to copy codec parameters\n");

goto end;

}

audio_enc_ctx->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;

out_stream->time_base = audio_enc_ctx->time_base;

out_stream->codecpar->codec_tag = 0;

av_dump_format(ofmt_ctx, 0, output_file, 1);

// open the output file

if (!(ofmt_ctx->oformat->flags & AVFMT_NOFILE))

{

ret = avio_open(&ofmt_ctx->pb, output_file, AVIO_FLAG_WRITE);

if (ret < 0)

{

printf("Could not open output file '%s'\n", output_file);

goto end;

}

}

ret = avformat_write_header(ofmt_ctx, NULL);

if (ret < 0)

{

printf("Error occurred when opening output file\n");

goto end;

}

AVPacket flv_pkt;

int stream_index;

while (1)

{

AVPacket *pkt = &flv_pkt;

ret = av_read_frame(ifmt_ctx, pkt);

if (ret < 0)

{

break;

}

if (pkt->stream_index == video_stream_idx && (pkt->flags & AV_PKT_FLAG_KEY))

{

printf("pkt.dts = %lld pkt.pts = %lld pkt.stream_index = %d is key frame\n", (long long)pkt->dts, (long long)pkt->pts, pkt->stream_index);

}

stream_index = pkt->stream_index;

if (pkt->stream_index == video_stream_idx)

{

ret = avcodec_send_packet(video_dec_ctx, pkt);

LOGDEBUG("read type:%d pts:%lld dts:%lld\n", video_dec_ctx->codec_type, (long long)pkt->pts, (long long)pkt->dts);

ret = decode_and_send_frame(video_dec_ctx, video_enc_ctx, start, end);

ret = encode_and_save_pkt(video_enc_ctx, ofmt_ctx, ofmt_ctx->streams[0]);

if (ret < 0)

{

printf("re encode video error, ret:%d\n", ret);

}

}

else if (pkt->stream_index == audio_stream_idx)

{

ret = avcodec_send_packet(audio_dec_ctx, pkt);

LOGDEBUG("read type:%d pts:%lld dts:%lld\n", audio_dec_ctx->codec_type, (long long)pkt->pts, (long long)pkt->dts);

ret = decode_and_send_frame(audio_dec_ctx, audio_enc_ctx, start, end);

ret = encode_and_save_pkt(audio_enc_ctx, ofmt_ctx, ofmt_ctx->streams[1]);

if (ret < 0)

{

printf("re encode audio error, ret:%d\n", ret);

}

}

av_packet_unref(pkt);

}

// flush

// flush the decoders: send NULL to signal end of stream, then pass the remaining frames to the encoders

avcodec_send_packet(video_dec_ctx, NULL);

decode_and_send_frame(video_dec_ctx, video_enc_ctx, start, end);

avcodec_send_packet(audio_dec_ctx, NULL);

decode_and_send_frame(audio_dec_ctx, audio_enc_ctx, start, end);

// flush the encoders and write out the remaining packets

avcodec_send_frame(video_enc_ctx, NULL);

encode_and_save_pkt(video_enc_ctx, ofmt_ctx, ofmt_ctx->streams[0]);

avcodec_send_frame(audio_enc_ctx, NULL);

encode_and_save_pkt(audio_enc_ctx, ofmt_ctx, ofmt_ctx->streams[1]);

LOGDEBUG("stream end\n");

av_write_trailer(ofmt_ctx);

end:

avformat_close_input(&ifmt_ctx);

if (ofmt_ctx && !(ofmt_ctx->oformat->flags & AVFMT_NOFILE))

avio_closep(&ofmt_ctx->pb);

avformat_free_context(ofmt_ctx);

avcodec_free_context(&video_dec_ctx);

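avcodec_free_context(&audio_dec_ctx);

// the encoder contexts are allocated in open_encodec_context and must be freed too

avcodec_free_context(&video_enc_ctx);

avcodec_free_context(&audio_enc_ctx);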

return ret;

}

int main(int argc, char **argv)

{

int ret = 0;

if (argc < 5)

{

printf("Usage: %s input output start end\n", argv[0]);

return 1;

}

char *input_file = argv[1];

char *output_file = argv[2];

int start = atoi(argv[3]);

int end = atoi(argv[4]);

ret = test_cut(input_file, output_file, start, end);

return ret;

}
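The time check in `decode_and_send_frame` compares `frame->pts` against `start*TIME_DEN`, which fits input whose timestamps are in milliseconds. The sketch below shows how the same window test and shift might look for an arbitrary time base. It is an illustration only: `Rational`, `seconds_to_ticks`, and `clip_pts` are hypothetical names rather than FFmpeg types or functions, and the rounding is a simple round-to-nearest for non-negative values.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for a time base: one tick lasts num/den seconds.
struct Rational {
    int64_t num;
    int64_t den;
};

// Convert whole seconds into ticks of `tb`, rounding to the nearest tick.
// Assumes small, non-negative values so the multiplication cannot overflow.
int64_t seconds_to_ticks(int64_t seconds, Rational tb) {
    assert(seconds >= 0 && tb.num > 0 && tb.den > 0);
    return (seconds * tb.den + tb.num / 2) / tb.num;
}

// Window test plus shift, mirroring the trimming logic in the listing:
// returns false when pts lies outside [start_s, end_s]; otherwise stores
// the timestamp relative to the start of the clip (still in `tb` ticks).
bool clip_pts(int64_t pts, Rational tb, int64_t start_s, int64_t end_s, int64_t &out_pts) {
    const int64_t first = seconds_to_ticks(start_s, tb);
    const int64_t last = seconds_to_ticks(end_s, tb);
    if (pts < first || pts > last) {
        return false;  // frame is dropped
    }
    out_pts = pts - first;  // the clip starts at 0
    return true;
}
```

With a time base of 1/1000 this reduces to the comparison in the listing: for a 1 to 10 second cut, a frame at 1500 ms is kept and shifted to 500 ms. Note that the shifted value is still in the input time base, so it would need rescaling if the encoder used a different one.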
