[Istio] rabbitmq goes into CrashLoopBackOff when deploying sock-shop with Istio

Posted by yuxiaoba


The bookinfo sample that ships with the Istio website has only a few service dependencies, so I wanted to deploy sock-shop for further experiments.

    kubectl apply -f <(istioctl kube-inject -f sockshop-demo.yaml)

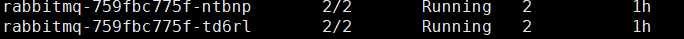

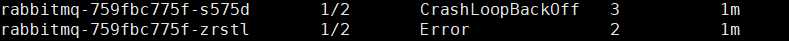

After the deployment finished, rabbitmq kept cycling between the CrashLoopBackOff and Error states.

Describing the pod did not show any particularly obvious error:

    kubectl describe pod -n sock-shop rabbitmq-759fbc775f-s575d
    Name:           rabbitmq-759fbc775f-s575d
    Namespace:      sock-shop
    Node:           192.168.199.191/192.168.199.191
    Start Time:     Sun, 23 Sep 2018 08:48:14 +0800
    Labels:         name=rabbitmq
                    pod-template-hash=3159673319
    Annotations:    sidecar.istio.io/status={"version":"55ca84b79cb5036ec3e54a3aed83cd77973cdcbed6bf653ff7b4f82659d68b1e","initContainers":["istio-init"],"containers":["istio-proxy"],"volumes":["istio-envoy","istio-certs...
    Status:         Running
    IP:             172.20.5.51
    Controlled By:  ReplicaSet/rabbitmq-759fbc775f
    Init Containers:
      istio-init:
        Container ID:  docker://b180f64c1589c3b8aae32fbcc3dcfbcb75bc758a78c4b22b538d0fda447aee9b
        Image:         docker.io/istio/proxy_init:1.0.0
        Image ID:      docker-pullable://istio/proxy_init@sha256:345c40053b53b7cc70d12fb94379e5aa0befd979a99db80833cde671bd1f9fad
        Port:          <none>
        Args:
          -p
          15001
          -u
          1337
          -m
          REDIRECT
          -i
          *
          -x
          -b
          5672,
          -d
        State:          Terminated
          Reason:       Completed
          Exit Code:    0
          Started:      Sun, 23 Sep 2018 08:48:15 +0800
          Finished:     Sun, 23 Sep 2018 08:48:15 +0800
        Ready:          True
        Restart Count:  0
        Environment:    <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-9lxtp (ro)
    Containers:
      rabbitmq:
        Container ID:   docker://9f9062c0457bfb23d3cf8c5bbc62bff198a68dd2bbae9ef2738920650abfbe3d
        Image:          rabbitmq:3.6.8
        Image ID:       docker-pullable://rabbitmq@sha256:a9f4923559bbcd00b93b02e61615aef5eb6f1d1c98db51053bab0fa6b680db26
        Port:           5672/TCP
        State:          Waiting
          Reason:       CrashLoopBackOff
        Last State:     Terminated
          Reason:       Error
          Exit Code:    1
          Started:      Sun, 23 Sep 2018 09:25:50 +0800
          Finished:     Sun, 23 Sep 2018 09:25:55 +0800
        Ready:          False
        Restart Count:  12
        Limits:
          cpu:     1024m
          memory:  1Gi
        Requests:
          cpu:        0
          memory:     0
        Environment:  <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-9lxtp (ro)
      istio-proxy:
        Container ID:  docker://d96548f938c41552450cd026eba5b7ff7915feb0cc058b8ecff3959896afed90
        Image:         docker.io/istio/proxyv2:1.0.0
        Image ID:      docker-pullable://istio/proxyv2@sha256:77915a0b8c88cce11f04caf88c9ee30300d5ba1fe13146ad5ece9abf8826204c
        Port:          <none>
        Args:
          proxy
          sidecar
          --configPath
          /etc/istio/proxy
          --binaryPath
          /usr/local/bin/envoy
          --serviceCluster
          istio-proxy
          --drainDuration
          45s
          --parentShutdownDuration
          1m0s
          --discoveryAddress
          istio-pilot.istio-system:15007
          --discoveryRefreshDelay
          1s
          --zipkinAddress
          zipkin.istio-system:9411
          --connectTimeout
          10s
          --statsdUdpAddress
          istio-statsd-prom-bridge.istio-system:9125
          --proxyAdminPort
          15000
          --controlPlaneAuthPolicy
          NONE
        State:          Running
          Started:      Sun, 23 Sep 2018 08:48:17 +0800
        Ready:          True
        Restart Count:  0
        Requests:
          cpu:  10m
        Environment:
          POD_NAME:                      rabbitmq-759fbc775f-s575d (v1:metadata.name)
          POD_NAMESPACE:                 sock-shop (v1:metadata.namespace)
          INSTANCE_IP:                    (v1:status.podIP)
          ISTIO_META_POD_NAME:           rabbitmq-759fbc775f-s575d (v1:metadata.name)
          ISTIO_META_INTERCEPTION_MODE:  REDIRECT
        Mounts:
          /etc/certs/ from istio-certs (ro)
          /etc/istio/proxy from istio-envoy (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-9lxtp (ro)
    Conditions:
      Type           Status
      Initialized    True
      Ready          False
      PodScheduled   True
    Volumes:
      istio-envoy:
        Type:    EmptyDir (a temporary directory that shares a pod's lifetime)
        Medium:  Memory
      istio-certs:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  istio.default
        Optional:    true
      default-token-9lxtp:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-9lxtp
        Optional:    false
    QoS Class:       Burstable
    Node-Selectors:  beta.kubernetes.io/os=linux
    Tolerations:     <none>
    Events:
      Type     Reason                 Age                 From                      Message
      ----     ------                 ----                ----                      -------
      Normal   SuccessfulMountVolume  38m                 kubelet, 192.168.199.191  MountVolume.SetUp succeeded for volume "istio-envoy"
      Normal   SuccessfulMountVolume  38m                 kubelet, 192.168.199.191  MountVolume.SetUp succeeded for volume "istio-certs"
      Normal   SuccessfulMountVolume  38m                 kubelet, 192.168.199.191  MountVolume.SetUp succeeded for volume "default-token-9lxtp"
      Normal   Scheduled              38m                 default-scheduler         Successfully assigned rabbitmq-759fbc775f-s575d to 192.168.199.191
      Normal   Started                38m                 kubelet, 192.168.199.191  Started container
      Normal   Pulled                 38m                 kubelet, 192.168.199.191  Container image "docker.io/istio/proxy_init:1.0.0" already present on machine
      Normal   Created                38m                 kubelet, 192.168.199.191  Created container
      Normal   Pulled                 38m                 kubelet, 192.168.199.191  Container image "docker.io/istio/proxyv2:1.0.0" already present on machine
      Normal   Started                38m                 kubelet, 192.168.199.191  Started container
      Normal   Created                38m                 kubelet, 192.168.199.191  Created container
      Normal   Pulled                 37m (x4 over 38m)   kubelet, 192.168.199.191  Container image "rabbitmq:3.6.8" already present on machine
      Normal   Started                37m (x4 over 38m)   kubelet, 192.168.199.191  Started container
      Normal   Created                37m (x4 over 38m)   kubelet, 192.168.199.191  Created container
      Warning  BackOff                2m (x160 over 38m)  kubelet, 192.168.199.191  Back-off restarting failed container
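When `kubectl describe` only reports a generic `Exit Code: 1`, the logs of the crashed container instance are usually the next place to look. The original post does not show this step, so the helper below is only a sketch: the function name `previous_rabbitmq_logs` is made up for illustration, and the namespace default simply mirrors the one used above.

```shell
# Hypothetical helper (not from the original post): print the logs of the
# previous, crashed instance of the rabbitmq container in the given pod.
# NAMESPACE defaults to sock-shop, the namespace used in this walkthrough.
previous_rabbitmq_logs() {
  pod="$1"
  # -c selects the application container rather than the istio-proxy sidecar;
  # --previous asks for the logs of the last terminated instance.
  kubectl logs -n "${NAMESPACE:-sock-shop}" "$pod" -c rabbitmq --previous
}
```

It would be called with the pod name from the output above, for example `previous_rabbitmq_logs rabbitmq-759fbc775f-s575d`.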

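Later, while going through the issues on Istio's GitHub, I found that other people had run into the same problem. The cause is that the rabbitmq Service does not declare the epmd port (epmd is the Erlang Port Mapper Daemon that RabbitMQ relies on). Adding it to the Service looks like this: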

    apiVersion: v1
    kind: Service
    metadata:
      name: rabbitmq
      labels:
        name: rabbitmq
      namespace: sock-shop
    spec:
      ports:
        # the port that this service should serve on
      - port: 5672
        targetPort: 5672
        name: amqp
        # 4369 is the default epmd port used by rabbitmq in sock-shop
      - port: 4369
        name: epmd
      selector:
        name: rabbitmq

After applying the manifest once more, the problem was resolved.
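To confirm that the change took effect, one option is to check the ports declared on the Service and the state of the rabbitmq pod. This is a sketch rather than part of the original write-up: the two function names are hypothetical, while the Service name, namespace, and `name=rabbitmq` label are taken from the manifest above.

```shell
# Hypothetical helpers (not from the original post).
# NAMESPACE defaults to sock-shop, the namespace used in this walkthrough.

# Print the names of the ports declared on the rabbitmq Service.
rabbitmq_service_port_names() {
  kubectl get service rabbitmq -n "${NAMESPACE:-sock-shop}" \
    -o jsonpath='{.spec.ports[*].name}'
}

# List the rabbitmq pods, selected by the label from the manifest.
rabbitmq_pods() {
  kubectl get pods -n "${NAMESPACE:-sock-shop}" -l name=rabbitmq
}
```

After the fix, `rabbitmq_service_port_names` should list both `amqp` and `epmd`, and `rabbitmq_pods` should eventually show the pod as Running with all containers ready.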