k8s glusterfs heketi

Posted fengjian2016


Preface: this article was compiled by the editors of 小常识网 (cha138.com). It mainly covers k8s glusterfs heketi, and we hope it is a useful reference.

Install a GlusterFS server cluster on the Kubernetes nodes (as a DaemonSet) and deploy heketi into the Kubernetes cluster as a Deployment. The main goal is to provide a StorageClass.

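Heketi is a GlusterFS management service with a RESTful interface: it provides a RESTful management API that can be used to manage the lifecycle of GlusterFS volumes. With Heketi, Kubernetes can dynamically provision GlusterFS volumes with any of the supported durability types. Heketi automatically determines the location of bricks across the cluster, making sure to place bricks and their replicas in different failure domains. Heketi also supports any number of GlusterFS clusters, allowing cloud services to provide network file storage without being limited to a single GlusterFS cluster.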

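Notes:

1. Install the GlusterFS client on every node: yum -y install glusterfs-client

2. Load the kernel module: modprobe dm_thin_pool

3. At least 3 nodes are required, and each node must have a raw disk (no partitions, no filesystem).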
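A quick way to confirm the second prerequisite on each node is to look for dm_thin_pool in /proc/modules. The sketch below is a hypothetical helper, module_loaded, written for this article (it is not part of heketi or GlusterFS); it takes the modules file as an optional argument, which also makes it easy to test against a sample file.

```shell
# Hypothetical preflight helper (not part of heketi or GlusterFS).
# Usage: module_loaded MODULE [MODULES_FILE]
# Succeeds if MODULE appears in the first column of MODULES_FILE,
# which defaults to /proc/modules.
module_loaded() {
  local mod="$1" modules_file="${2:-/proc/modules}"
  # Only the first column is the module name; later columns list users.
  awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file"
}
```

For example: module_loaded dm_thin_pool || modprobe dm_thin_pool. On systemd-based hosts, one way to have the module loaded again after a reboot is a file such as /etc/modules-load.d/dm_thin_pool.conf containing the single line dm_thin_pool. To check that /dev/sdb really is a bare disk, wipefs /dev/sdb (without -a) only lists existing signatures and does not erase anything.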

Deploying glusterfs and heketi on Kubernetes

1. Download heketi and heketi-cli

git clone https://github.com/heketi/heketi.git

wget https://github.com/heketi/heketi/releases/download/v7.0.0/heketi-client-v7.0.0.linux.amd64.tar.gz
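If you need the client for another release, the download URL can be assembled from the version string. The helpers below, heketi_client_url and install_heketi_client, are hypothetical; they assume other releases follow the same asset naming as v7.0.0, and that the tarball unpacks into a heketi-client/ directory (which matches the /root/heketi/heketi-client/bin/heketi-cli path used later in this article).

```shell
# Hypothetical helper: build the heketi-client release URL for a version,
# assuming the same naming as the v7.0.0 linux/amd64 asset.
heketi_client_url() {
  local version="${1:-v7.0.0}"
  printf 'https://github.com/heketi/heketi/releases/download/%s/heketi-client-%s.linux.amd64.tar.gz\n' \
    "$version" "$version"
}

# Hypothetical helper: download and unpack the client into the current
# directory (assumed to produce heketi-client/bin/heketi-cli).
install_heketi_client() {
  local url
  url=$(heketi_client_url "$1") || return 1
  wget -q -O heketi-client.tar.gz "$url" && tar -xzf heketi-client.tar.gz
}
```

Running install_heketi_client v7.0.0 inside /root/heketi should then leave the client at heketi-client/bin/heketi-cli.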

2. Change into heketi's Kubernetes deployment directory

cd /root/heketi/heketi/extras/kubernetes

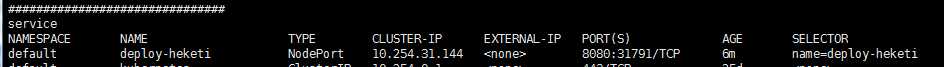

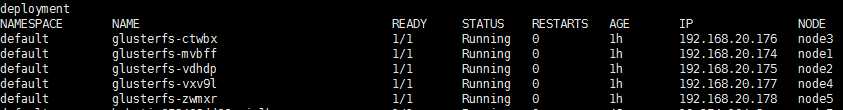

3. Deploy GlusterFS as a DaemonSet

kubectl create -f glusterfs-daemonset.json

4. Label the nodes

View labels: kubectl get nodes --show-labels

Set labels:

kubectl label node node1 storagenode=glusterfs
kubectl label node node2 storagenode=glusterfs
kubectl label node node3 storagenode=glusterfs
kubectl label node node4 storagenode=glusterfs
kubectl label node node5 storagenode=glusterfs

Remove a label (note the trailing "-"):

kubectl label node node5 storagenode-
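Labeling five nodes one at a time is repetitive, so a loop does the same thing. The function below is a hypothetical helper; the key/value storagenode=glusterfs is, as far as we know, what the nodeSelector in glusterfs-daemonset.json expects (check your copy of the file). KUBECTL is a hypothetical override so the loop can be dry-run with KUBECTL=echo.

```shell
# Hypothetical helper: label each node given as an argument so the GlusterFS
# DaemonSet (assumed nodeSelector storagenode=glusterfs) schedules a pod on it.
# KUBECTL is a hypothetical override, e.g. KUBECTL=echo for a dry run.
label_storage_nodes() {
  local kubectl="${KUBECTL:-kubectl}" node
  for node in "$@"; do
    # --overwrite keeps re-runs harmless if the label is already set.
    "$kubectl" label node "$node" storagenode=glusterfs --overwrite || return 1
  done
}
```

Usage: label_storage_nodes node1 node2 node3 node4 node5, then kubectl get nodes -l storagenode=glusterfs to confirm all five carry the label.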

5. Create the heketi service account (ServiceAccount)

kubectl create -f heketi-service-account.json

6. Bind the heketi service account to a role that lets it control the gluster pods

kubectl create clusterrolebinding heketi-gluster-admin --clusterrole=edit --serviceaccount=default:heketi-service-account
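To check that the binding works, kubectl auth can-i can impersonate the service account. The function below is a hypothetical wrapper; it checks the two permissions we assume heketi's kubernetes executor relies on (listing pods to find the GlusterFS pods, and exec into them to run gluster commands). KUBECTL is again a hypothetical override for dry runs.

```shell
# Hypothetical check: can heketi-service-account list pods and exec into them?
# Usage: check_heketi_rbac [NAMESPACE]   (defaults to "default")
# kubectl auth can-i prints yes/no and exits non-zero on "no".
check_heketi_rbac() {
  local kubectl="${KUBECTL:-kubectl}" ns="${1:-default}"
  local sa="system:serviceaccount:${ns}:heketi-service-account"
  "$kubectl" auth can-i list pods --as="$sa" -n "$ns" &&
    "$kubectl" auth can-i create pods --subresource=exec --as="$sa" -n "$ns"
}
```

With the binding from step 6 in place, check_heketi_rbac should print yes twice.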

7. Create a Kubernetes secret to hold the configuration of the Heketi instance

kubectl create secret generic heketi-config-secret --from-file=./heketi.json

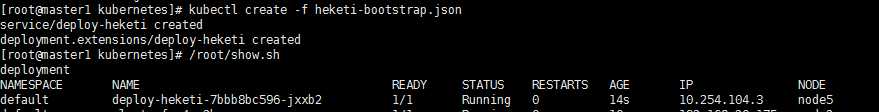
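Before creating the secret, it may be worth confirming that heketi.json selects the kubernetes executor, since that is what lets heketi run gluster commands inside the GlusterFS pods instead of over SSH. The check below is a hypothetical helper that simply greps for the setting; it does not validate the rest of the file.

```shell
# Hypothetical sanity check before creating heketi-config-secret:
# does the config file set "executor": "kubernetes"?
check_heketi_executor() {
  local cfg="${1:-./heketi.json}"
  if grep -Eq '"executor"[[:space:]]*:[[:space:]]*"kubernetes"' "$cfg"; then
    return 0
  fi
  echo "$cfg: executor is not set to \"kubernetes\"" >&2
  return 1
}
```

For example: check_heketi_executor && kubectl create secret generic heketi-config-secret --from-file=./heketi.json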

8. Deploy an initial (bootstrap) Pod and a Service to access that Pod

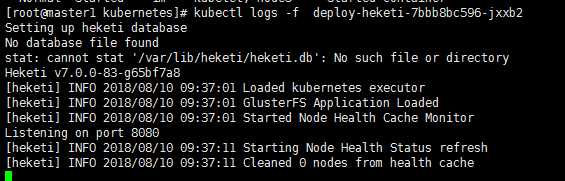

The bootstrap heketi service exists to place the heketi database on a GlusterFS volume.

Once the deploy-heketi server is running, we can talk to it with heketi-cli. First, test whether the heketi service is working:

curl 192.168.20.178:31791/hello
Hello from Heketi
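Because the bootstrap pod can take a while to start, a small polling loop around the same /hello request saves retrying by hand. wait_for_heketi and the HEKETI_CURL override (used only to test the function without a live server) are hypothetical names.

```shell
# Hypothetical helper: poll heketi's /hello endpoint until it answers.
# Usage: wait_for_heketi URL [TRIES]   (TRIES defaults to 30, 2 s apart)
# HEKETI_CURL is a hypothetical override for curl, used for testing.
wait_for_heketi() {
  local url="$1" tries="${2:-30}" curl_cmd="${HEKETI_CURL:-curl}" i=1
  while :; do
    # -f makes HTTP errors count as failures; -s keeps curl quiet.
    if "$curl_cmd" -fs "$url/hello" 2>/dev/null | grep -q 'Hello from Heketi'; then
      return 0
    fi
    [ "$i" -ge "$tries" ] && break
    i=$((i + 1))
    sleep 2
  done
  echo "heketi at $url did not answer /hello after $tries tries" >&2
  return 1
}
```

For example: wait_for_heketi http://192.168.20.178:31791 && export HEKETI_CLI_SERVER=http://192.168.20.178:31791. heketi-cli reads the server address from HEKETI_CLI_SERVER, so the -s option can then be left out of later commands.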

Give Heketi information about the GlusterFS cluster it should manage through a topology file:

cat topology.json
{
  "clusters": [
    {
      "nodes": [
        { "node": { "hostnames": { "manage": [ "node1" ], "storage": [ "192.168.20.174" ] }, "zone": 1 },
          "devices": [ { "name": "/dev/sdb", "destroydata": false } ] },
        { "node": { "hostnames": { "manage": [ "node2" ], "storage": [ "192.168.20.175" ] }, "zone": 1 },
          "devices": [ { "name": "/dev/sdb", "destroydata": false } ] },
        { "node": { "hostnames": { "manage": [ "node3" ], "storage": [ "192.168.20.176" ] }, "zone": 1 },
          "devices": [ { "name": "/dev/sdb", "destroydata": false } ] },
        { "node": { "hostnames": { "manage": [ "node4" ], "storage": [ "192.168.20.177" ] }, "zone": 1 },
          "devices": [ { "name": "/dev/sdb", "destroydata": false } ] },
        { "node": { "hostnames": { "manage": [ "node5" ], "storage": [ "192.168.20.178" ] }, "zone": 1 },
          "devices": [ { "name": "/dev/sdb", "destroydata": false } ] }
      ]
    }
  ]
}
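With more nodes, writing topology.json by hand is error-prone. The hypothetical gen_topology function below prints a file with the same shape as the one above from a device name and a list of name:storage-IP pairs; like the original, it puts every node in zone 1 and sets destroydata to false.

```shell
# Hypothetical generator for a single-cluster heketi topology.json.
# Usage: gen_topology DEVICE NAME:STORAGE_IP [NAME:STORAGE_IP ...]
gen_topology() {
  local device="$1" first=1 pair name ip
  shift
  printf '{\n  "clusters": [\n    {\n      "nodes": [\n'
  for pair in "$@"; do
    name=${pair%%:*}   # text before the first ":" is the manage hostname
    ip=${pair#*:}      # text after it is the storage IP
    # JSON needs a comma between node objects, but not before the first one.
    [ "$first" -eq 1 ] || printf ',\n'
    first=0
    printf '        {\n'
    printf '          "node": {\n'
    printf '            "hostnames": { "manage": [ "%s" ], "storage": [ "%s" ] },\n' "$name" "$ip"
    printf '            "zone": 1\n'
    printf '          },\n'
    printf '          "devices": [ { "name": "%s", "destroydata": false } ]\n' "$device"
    printf '        }'
  done
  printf '\n      ]\n    }\n  ]\n}\n'
}
```

Example: gen_topology /dev/sdb node1:192.168.20.174 node2:192.168.20.175 node3:192.168.20.176 node4:192.168.20.177 node5:192.168.20.178 > topology.json. If Python is available, python3 -m json.tool topology.json is a quick syntax check.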

In effect this is the same as adding GlusterFS peers (the result is what gluster peer status shows).

/root/heketi/heketi-client/bin/heketi-cli -s http://192.168.20.178:31791 topology load --json=topology.json
Creating cluster ... ID: 6a73c32f28af53fc4eda6d5b4c9769bf
    Allowing file volumes on cluster.
    Allowing block volumes on cluster.
    Creating node node1 ... ID: 46bd3a9e2ac7fe3bd80bf5d418cdcf08
        Adding device /dev/sdb ... OK
    Creating node node2 ... ID: e50ab42920c804afd010b8f739f81d1a
        Adding device /dev/sdb ... OK
    Creating node node3 ... ID: 3b5dd4c09c8216be7c5a2d919581891b
        Adding device /dev/sdb ... OK
    Creating node node4 ... ID: b42de16ea1863e7133493876dd93d00e
        Adding device /dev/sdb ... OK
    Creating node node5 ... ID: 47d1de245a14091473cda2626e605f16
        Adding device /dev/sdb ... OK
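To confirm the load from the GlusterFS side, the peer list can be read inside one of the GlusterFS pods. The hypothetical peer_count helper below extracts the number from the "Number of Peers: N" line of gluster peer status output.

```shell
# Hypothetical helper: read `gluster peer status` output on stdin and print N
# from its "Number of Peers: N" line; fails if that line is missing.
peer_count() {
  awk -F': *' '/^Number of Peers:/ { print $2; found = 1 } END { exit !found }'
}
```

For example, kubectl exec GLUSTERFS_POD -- gluster peer status | peer_count, where GLUSTERFS_POD stands for any pod of the GlusterFS DaemonSet; with the five nodes above, each node should report 4 peers (every node except itself). heketi-cli topology info shows the same cluster from heketi's point of view.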

Have heketi provision a volume to store its own database:

/root/heketi/heketi-client/bin/heketi-cli -s http://192.168.20.178:31791 setup-openshift-heketi-storage --image 192.168.200.10/source/heketi/heketi:dev

This generates the file heketi-storage.json.

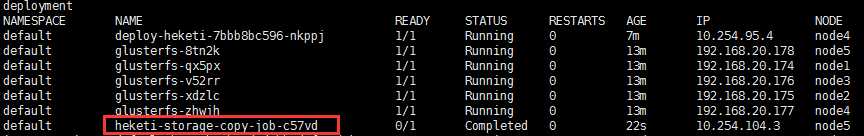

kubectl create -f heketi-storage.json
secret/heketi-storage-secret created
endpoints/heketi-storage-endpoints created
service/heketi-storage-endpoints created
job.batch/heketi-storage-copy-job created

The status of heketi-storage-copy-job-c57vd is abnormal here; in this setup that did not matter, and the deployment simply continued.

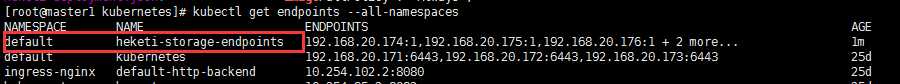
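If you would rather confirm whether the copy job actually finished before moving on, kubectl wait can block on the job's Complete condition. The wrapper below is a hypothetical helper; on failure it prints the last lines of the job's logs to help judge whether the problem matters. KUBECTL is a hypothetical override for dry runs.

```shell
# Hypothetical check: wait for the copy job created from heketi-storage.json.
# Usage: wait_copy_job [TIMEOUT]   (defaults to 300s)
wait_copy_job() {
  local kubectl="${KUBECTL:-kubectl}" timeout="${1:-300s}"
  if "$kubectl" wait --for=condition=complete job/heketi-storage-copy-job --timeout="$timeout"; then
    return 0
  fi
  # Show recent log lines from one of the job's pods before failing.
  echo "heketi-storage-copy-job did not complete within $timeout; last log lines:" >&2
  "$kubectl" logs job/heketi-storage-copy-job --tail=20 >&2
  return 1
}
```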

The heketi-storage-endpoints object must exist under endpoints; otherwise creating heketi-deployment.json will fail with an error.

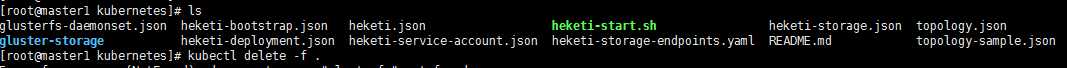
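A small guard can stop the process before heketi-deployment.json is created without those endpoints (as far as we can tell, the upstream heketi-deployment.json mounts the heketi database volume through them). The function is a hypothetical helper, and KUBECTL is again a hypothetical override for testing.

```shell
# Hypothetical guard: fail unless endpoints/heketi-storage-endpoints exists.
require_storage_endpoints() {
  local kubectl="${KUBECTL:-kubectl}"
  if ! "$kubectl" get endpoints heketi-storage-endpoints >/dev/null 2>&1; then
    echo "endpoints/heketi-storage-endpoints is missing (it is defined in heketi-storage.json)" >&2
    return 1
  fi
}
```

For example: require_storage_endpoints && kubectl create -f heketi-deployment.json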

Delete the components belonging to the bootstrap Heketi instance:

kubectl delete all,service,jobs,deployment,secret --selector="deploy-heketi"
pod "deploy-heketi-7bbb8bc596-nkppj" deleted
service "deploy-heketi" deleted
deployment.apps "deploy-heketi" deleted
replicaset.apps "deploy-heketi-7bbb8bc596" deleted
job.batch "heketi-storage-copy-job" deleted
secret "heketi-storage-secret" deleted

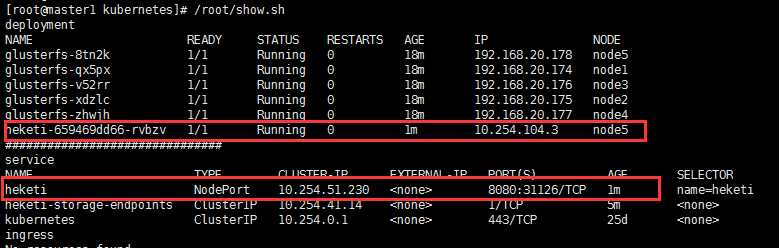

Create the long-lived Heketi instance (with persistent storage):

kubectl create -f heketi-deployment.json
secret/heketi-db-backup created
service/heketi created
deployment.extensions/heketi created
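Before pointing a StorageClass at heketi, it helps to know the new instance is up and which port its Service exposes. The hypothetical heketi_ready function waits for the heketi Deployment to roll out and then prints the Service's first nodePort, which is only set if the Service is of type NodePort (the resturl in the StorageClass below uses a node IP and a NodePort).

```shell
# Hypothetical check for the long-lived instance: wait for the rollout, then
# print the heketi Service's first nodePort (empty if it is not a NodePort).
heketi_ready() {
  local kubectl="${KUBECTL:-kubectl}"
  "$kubectl" rollout status deployment/heketi --timeout=300s || return 1
  "$kubectl" get service heketi -o jsonpath='{.spec.ports[0].nodePort}'
}
```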

cat storage-class-slow.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: slow                               # name of the StorageClass
provisioner: kubernetes.io/glusterfs
parameters:
  resturl: "http://192.168.20.177:31791"   # address and port of the heketi service (here a node IP and NodePort)
  restuser: "admin"                        # any value works, because authentication is not enabled
  gidMin: "40000"
  gidMax: "50000"
  volumetype: "replicate:3"                # requested volumes default to 3-way replication
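After creating the StorageClass (for example with kubectl create -f storage-class-slow.yaml), a PersistentVolumeClaim that names it is a simple end-to-end test of dynamic provisioning. make_pvc is a hypothetical helper that prints such a manifest; ReadWriteMany is chosen because GlusterFS volumes can be shared by several pods.

```shell
# Hypothetical helper: print a PVC manifest that requests a volume from the
# given StorageClass ("slow" by default).
# Usage: make_pvc NAME SIZE [STORAGE_CLASS]
make_pvc() {
  local name="$1" size="$2" class="${3:-slow}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  storageClassName: ${class}
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: ${size}
EOF
}
```

For example, make_pvc gluster-test 1Gi | kubectl apply -f - and then kubectl get pvc gluster-test; the claim should reach Bound once heketi has created the volume.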

Cleaning up the cluster

1. Clean up the stateful services and the GlusterFS cluster deployed by the DaemonSet

kubectl delete secret heketi-config-secret

kubectl delete clusterrolebinding heketi-gluster-admin
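The two commands above only remove the secret and the role binding. A fuller teardown of the objects created in this article might look like the hypothetical cleanup_k8s function below. It assumes all the JSON files are in the current directory (heketi-storage.json may have been generated elsewhere), and it is destructive; --ignore-not-found lets it skip objects that are already gone.

```shell
# Hypothetical teardown of the Kubernetes objects created in this article.
# Destructive: removes heketi, the GlusterFS DaemonSet and the "slow" StorageClass.
cleanup_k8s() {
  local kubectl="${KUBECTL:-kubectl}" f
  "$kubectl" delete storageclass slow --ignore-not-found
  for f in heketi-deployment.json heketi-storage.json glusterfs-daemonset.json heketi-service-account.json; do
    "$kubectl" delete -f "$f" --ignore-not-found
  done
  "$kubectl" delete secret heketi-config-secret --ignore-not-found
  "$kubectl" delete clusterrolebinding heketi-gluster-admin --ignore-not-found
}
```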

2. Clean up GlusterFS files on the nodes

(1) Remove stopped Docker containers, including the glusterfs ones:

docker ps -a | grep Exited | awk '{print $1}' | xargs docker rm

(2) Delete the /var/lib/glusterd directory:

pkill -9 gluster; rm -rf /var/lib/glusterd/

(3) Remove the VG and PV

vgremove vg_ebaa3e7189cabac78a48426a90a14bd9

pvremove /dev/sdb

(4) Remove the leftover VG entries under /dev on the node

rm -rf /dev/vg_*
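To find the heketi-created volume groups on a node without typing each name, the VG list can be filtered by the naming seen above (vg_ followed by 32 hex characters, as in vg_ebaa3e7189cabac78a48426a90a14bd9). heketi_vgs is a hypothetical filter, and the naming pattern is an assumption based on that one example.

```shell
# Hypothetical filter: read VG names (one per line, e.g. from
# `vgs --noheadings -o vg_name`) on stdin and print only those that look
# heketi-created: "vg_" followed by 32 lowercase hex characters.
heketi_vgs() {
  # vgs pads names with spaces, so trim them before matching.
  sed 's/^[[:space:]]*//; s/[[:space:]]*$//' | grep -E '^vg_[0-9a-f]{32}$'
}
```

On each storage node (destructive): vgs --noheadings -o vg_name | heketi_vgs | xargs -r -n1 vgremove -f, then pvremove /dev/sdb.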
