Case 2: Building a Dual-Master High-Availability HAProxy Load-Balancing System

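In Case 1, the HAProxy high-availability load-balancing cluster used Keepalived to make HAProxy highly available, but it wasted server resources. In a one-master, one-backup Keepalived setup, only the master node does any work; the backup node sits idle and takes over only when the master fails. For a web application with relatively high concurrency, the master can be very busy while the backup does nothing. This uneven use of server resources has to be considered when designing the application architecture. It can be addressed by splitting the load across both nodes in a dual-master, mutual-backup arrangement. The rest of this case describes how to build such a high-availability cluster.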

1. System architecture and how it works

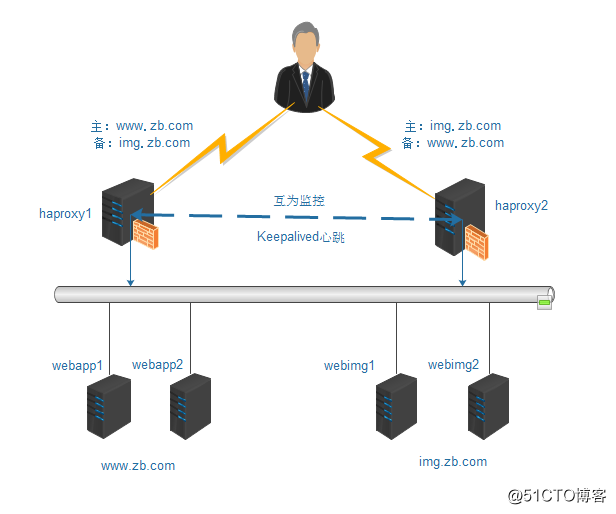

为了能充分利用服务器资源并将负载进行分流,可以在一主一备的基础上构建双主互备的高可用HAProxy负载均衡集群系统。双主互备的集群架构如图:

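The goals of this architecture are as follows. haproxy1 sends requests for www.zb.com to the two hosts webapp1 and webapp2, load balancing www.zb.com. haproxy2 sends requests for img.zb.com to the two hosts webimg1 and webimg2, load balancing img.zb.com. If either haproxy1 or haproxy2 fails, user requests are sent to the other, healthy load balancer, so both sites remain load balanced.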

Operating system:

CentOS release 6.7

Address plan:

| Hostname | Physical IP address | Virtual IP address | Cluster role |
| --- | --- | --- | --- |
| haproxy1 | 10.0.0.35 | 10.0.0.40 | Master: www.zb.com; Backup: img.zb.com |
| haproxy2 | 10.0.0.36 | 10.0.0.50 | Master: img.zb.com; Backup: www.zb.com |
| webapp1 | 10.0.0.150 | None | Backend Server |
| webapp2 | 10.0.0.151 | None | Backend Server |
| webimg1 | 10.0.0.152 | None | Backend Server |
| webimg2 | 10.0.0.8 | None | Backend Server |

Note: so that both haproxy1 and haproxy2 are fully used, the traffic is split between them. The domain www.zb.com must resolve to 10.0.0.40, and img.zb.com must resolve to 10.0.0.50.

2. Install and configure the HAProxy cluster

Install and configure HAProxy on the nodes named haproxy1 and haproxy2 in turn. The finished haproxy.cfg file is as follows:

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

#

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

#

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

pidfile /var/run/haproxy.pid

maxconn 4096

user haproxy

group haproxy

daemon

nbproc 1

# turn on stats unix socket

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

retries 3

timeout connect 5s

timeout client 30s

timeout server 30s

timeout check 2s

listen admin_stats

bind 0.0.0.0:19088

mode http

log 127.0.0.1 local0 err

stats refresh 30s

stats uri /haproxy-status

stats realm welcome login Haproxy

stats auth admin:admin

stats hide-version

stats admin if TRUE

#---------------------------------------------------------------------

# main frontend which proxys to the backends

#---------------------------------------------------------------------

frontend www

bind *:80

mode http

option httplog

option forwardfor

log global

acl host_www hdr_dom(host) -i www.zb.com

acl host_img hdr_dom(host) -i img.zb.com

use_backend server_www if host_www

use_backend server_img if host_img

#---------------------------------------------------------------------

# backends: server_www for www.zb.com, server_img for img.zb.com

#---------------------------------------------------------------------

backend server_www

mode http

option redispatch

option abortonclose

balance roundrobin

option httpchk GET /index.html

server web01 10.0.0.150:80 weight 6 check inter 2000 rise 2 fall 3

server web02 10.0.0.151:80 weight 6 check inter 2000 rise 2 fall 3

backend server_img

mode http

option redispatch

option abortonclose

balance roundrobin

option httpchk GET /index.html

server webimg1 10.0.0.152:80 weight 6 check inter 2000 rise 2 fall 3

server webimg2 10.0.0.8:80 weight 6 check inter 2000 rise 2 fall 3

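In this HAProxy configuration, ACL rules route the www.zb.com site to the two backend nodes webapp1 and webapp2, and route the img.zb.com site to the two backend nodes webimg1 and webimg2, so each site is load balanced separately.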
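To make the routing concrete, here is a minimal Python sketch that mimics what the `www` frontend does with the two ACLs and `balance roundrobin`. It is a model for illustration, not HAProxy code: the function name `pick_server` and the dictionaries are hypothetical, `hdr_dom(host)` is simplified to an exact hostname match after removing any port, and the equal weights are treated as plain rotation.

```python
from itertools import cycle

# Backend pools copied from the haproxy.cfg above. Both servers in each
# pool have weight 6, so this sketch models them as a simple rotation.
BACKENDS = {
    "server_www": ["10.0.0.150:80", "10.0.0.151:80"],
    "server_img": ["10.0.0.152:80", "10.0.0.8:80"],
}

# Simplified stand-in for the host_www / host_img ACLs.
HOST_TO_BACKEND = {
    "www.zb.com": "server_www",
    "img.zb.com": "server_img",
}

# One independent rotation per backend.
_rotations = {name: cycle(servers) for name, servers in BACKENDS.items()}


def pick_server(host_header):
    """Return (backend, server) for a Host header, or None if no ACL matches."""
    host = host_header.split(":")[0].strip().lower()  # drop port, ignore case
    backend = HOST_TO_BACKEND.get(host)
    if backend is None:
        # The configuration defines no default_backend, so an unmatched
        # host has nowhere to go.
        return None
    return backend, next(_rotations[backend])
```

Successive calls with `www.zb.com` alternate between 10.0.0.150 and 10.0.0.151, while calls with `img.zb.com` rotate independently through 10.0.0.152 and 10.0.0.8.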

Finally, copy the haproxy.cfg file to both haproxy1 and haproxy2, then start the HAProxy service on each load balancer in turn.

3. Install and configure the dual-master Keepalived high-availability system

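Install Keepalived on both nodes in turn. The keepalived.conf file on haproxy1 is as follows (the comments mark the values that differ on haproxy2):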

! Configuration File for keepalived

global_defs {

notification_email {

}

notification_email_from [email protected]

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script check_haproxy {

script "killall -0 haproxy"

interval 2

}

vrrp_instance HAProxy_HA {

state MASTER #on haproxy2, this is BACKUP

interface eth0

virtual_router_id 80 #must be the same on every node of one instance, so on haproxy2 virtual_router_id is also 80

priority 100 #on haproxy2, priority is 80

advert_int 2

authentication {

auth_type PASS

auth_pass aaaa

}

notify_master "/etc/keepalived/mail_notify.sh master"

notify_backup "/etc/keepalived/mail_notify.sh backup"

notify_fault "/etc/keepalived/mail_notify.sh fault"

track_script {

check_haproxy

}

virtual_ipaddress {

10.0.0.40/24 dev eth0

}

}

vrrp_instance HAProxy_HA2 {

state BACKUP #on haproxy2, this is MASTER

interface eth0

virtual_router_id 81 #on haproxy2, this virtual_router_id must also be 81

priority 80 #on haproxy2, priority is 100

advert_int 2

authentication {

auth_type PASS

auth_pass aaaa

}

notify_master "/etc/keepalived/mail_notify.sh master"

notify_backup "/etc/keepalived/mail_notify.sh backup"

notify_fault "/etc/keepalived/mail_notify.sh fault"

track_script {

check_haproxy

}

virtual_ipaddress {

10.0.0.50/24 dev eth0

}

}

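The dual-master, mutual-backup configuration has two VIP addresses. Under normal conditions, 10.0.0.40 is brought up automatically on haproxy1 and 10.0.0.50 is brought up automatically on haproxy2. Pay particular attention to two points. First, the two VRRP instances (HAProxy_HA and HAProxy_HA2) must use different virtual_router_id values, while each instance must use the same virtual_router_id on both haproxy1 and haproxy2. Second, within each instance the priority of the MASTER role must be higher than the priority of the BACKUP role.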
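The effect of these priorities can be sketched with a small Python model. This is an illustration under simplifying assumptions, not Keepalived code: the names `INSTANCES` and `vip_owners` are hypothetical, and a node counts as healthy only while its `check_haproxy` script succeeds, since in the configuration above a failed script puts the tracking instances into FAULT state. For each instance, the healthy node with the highest priority holds the VIP.

```python
# Values copied from the keepalived.conf shown above and its comments.
INSTANCES = {
    "HAProxy_HA": {
        "virtual_router_id": 80,
        "vip": "10.0.0.40",
        "priority": {"haproxy1": 100, "haproxy2": 80},
    },
    "HAProxy_HA2": {
        "virtual_router_id": 81,
        "vip": "10.0.0.50",
        "priority": {"haproxy1": 80, "haproxy2": 100},
    },
}


def vip_owners(healthy_nodes):
    """Map each VIP to the healthy node with the highest priority (or None)."""
    owners = {}
    for instance in INSTANCES.values():
        candidates = {
            node: prio
            for node, prio in instance["priority"].items()
            if node in healthy_nodes
        }
        owners[instance["vip"]] = (
            max(candidates, key=candidates.get) if candidates else None
        )
    return owners


# The two instances must not share a virtual_router_id.
assert len({i["virtual_router_id"] for i in INSTANCES.values()}) == len(INSTANCES)
```

With both nodes healthy, `vip_owners({"haproxy1", "haproxy2"})` assigns 10.0.0.40 to haproxy1 and 10.0.0.50 to haproxy2; with only haproxy2 healthy, both VIPs land on haproxy2.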

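After all configuration changes are complete, start the Keepalived service on haproxy1 and haproxy2 in turn, and check that each VIP address is brought up on the expected node.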

4. Testing

After starting the HAProxy and Keepalived services on haproxy1 and haproxy2 in turn, first look at the Keepalived startup log on the haproxy1 node. It shows the following:

Jul 25 10:06:34 data-1-1 Keepalived_vrrp[33167]: VRRP_Instance(HAProxy_HA) Transition to MASTER STATE

Jul 25 10:06:36 data-1-1 Keepalived_vrrp[33167]: VRRP_Instance(HAProxy_HA) Entering MASTER STATE

Jul 25 10:06:36 data-1-1 Keepalived_vrrp[33167]: VRRP_Instance(HAProxy_HA) setting protocol VIPs.

Jul 25 10:06:36 data-1-1 Keepalived_vrrp[33167]: VRRP_Instance(HAProxy_HA) Sending gratuitous ARPs on eth0 for 10.0.0.40

Jul 25 10:06:36 data-1-1 Keepalived_healthcheckers[33166]: Netlink reflector reports IP 10.0.0.40 added

Jul 25 10:06:53 data-1-1 Keepalived_vrrp[33167]: VRRP_Instance(HAProxy_HA2) Received higher prio advert

Jul 25 10:06:53 data-1-1 Keepalived_vrrp[33167]: VRRP_Instance(HAProxy_HA2) Entering BACKUP STATE

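Next, test the failover behavior of the dual-master setup. To simulate a failure, stop the HAProxy service on the haproxy1 node, then look at the Keepalived log on haproxy1. It shows the following: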

Jul 25 14:42:09 data-1-1 Keepalived_vrrp[33167]: VRRP_Script(check_haproxy) failed

Jul 25 14:42:10 data-1-1 Keepalived_vrrp[33167]: VRRP_Instance(HAProxy_HA) Entering FAULT STATE

Jul 25 14:42:10 data-1-1 Keepalived_vrrp[33167]: VRRP_Instance(HAProxy_HA) removing protocol VIPs.

Jul 25 14:42:10 data-1-1 Keepalived_vrrp[33167]: VRRP_Instance(HAProxy_HA) Now in FAULT state

Jul 25 14:42:10 data-1-1 Keepalived_healthcheckers[33166]: Netlink reflector reports IP 10.0.0.40 removed

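The switchover log shows Keepalived behaving as expected: once check_haproxy failed, the HAProxy_HA instance on haproxy1 entered FAULT state and released the VIP 10.0.0.40.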
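The logs only show the Keepalived side. To confirm that both sites still answer through their VIPs, a small client-side check can help. The following is a minimal sketch using Python's standard `http.client`; the function name `check_site` is hypothetical, and the path `/index.html` is assumed to exist on the backends because the `option httpchk` lines above request it.

```python
import http.client


def check_site(vip, host, port=80, path="/index.html", timeout=3):
    """Request `path` through `vip` with the given Host header.

    Returns the HTTP status code, or None if the connection or the
    request fails.
    """
    conn = http.client.HTTPConnection(vip, port, timeout=timeout)
    try:
        # Send the site name explicitly so the frontend ACLs can match it
        # even though we connect to the VIP by address.
        conn.request("GET", path, headers={"Host": host})
        return conn.getresponse().status
    except (OSError, http.client.HTTPException):
        return None
    finally:
        conn.close()
```

Calling `check_site("10.0.0.40", "www.zb.com")` and `check_site("10.0.0.50", "img.zb.com")` before and after stopping HAProxy on haproxy1 gives a quick indication of whether each VIP is still served by a working load balancer.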
