datax的安装与使用

Posted biehongli

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了datax的安装与使用相关的知识,希望对你有一定的参考价值。

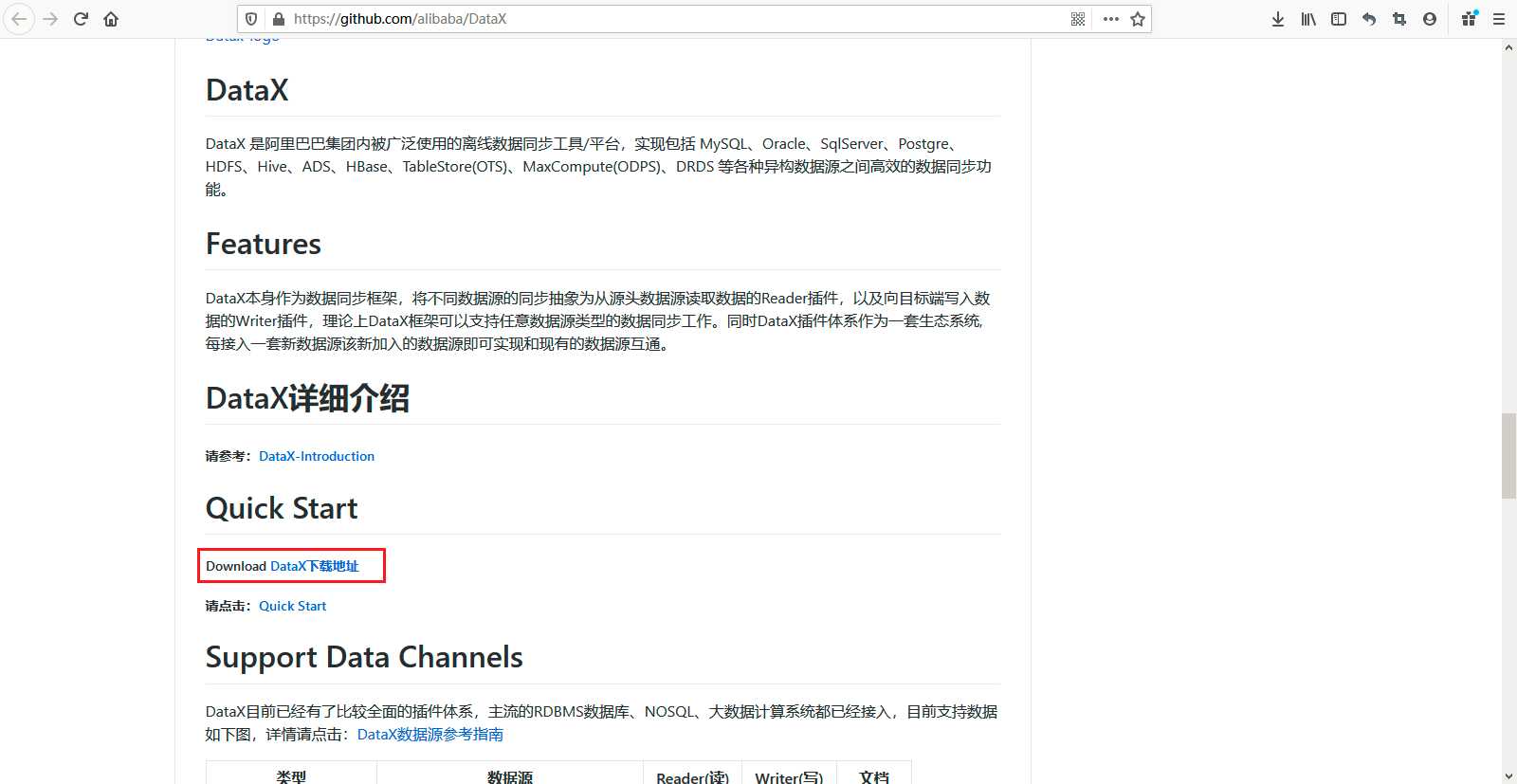

1、官网下载地址:https://github.com/alibaba/DataX

DataX 是阿里巴巴集团内被广泛使用的离线数据同步工具/平台,实现包括 mysql、Oracle、SqlServer、Postgre、HDFS、Hive、ADS、HBase、TableStore(OTS)、MaxCompute(ODPS)、DRDS 等各种异构数据源之间高效的数据同步功能。

DataX本身作为数据同步框架,将不同数据源的同步抽象为从源头数据源读取数据的Reader插件,以及向目标端写入数据的Writer插件,理论上DataX框架可以支持任意数据源类型的数据同步工作。同时DataX插件体系作为一套生态系统, 每接入一套新数据源该新加入的数据源即可实现和现有的数据源互通。

2、在页面中【Quick Start】===》【Download DataX下载地址】进行下载。下载后的包名:datax.tar.gz。这个压缩包还是有点大的,我下载的有790.95MB大小。

3、DataX目前已经有了比较全面的插件体系,主流的RDBMS数据库、NOSQL、大数据计算系统都已经接入,目前支持数据如下图:

| 类型 | 数据源 | Reader(读) | Writer(写) | 文档 |

|---|---|---|---|---|

| RDBMS 关系型数据库 | MySQL | √ | √ | 读 、写 |

| Oracle | √ | √ | 读 、写 | |

| SQLServer | √ | √ | 读 、写 | |

| PostgreSQL | √ | √ | 读 、写 | |

| DRDS | √ | √ | 读 、写 | |

| 通用RDBMS(支持所有关系型数据库) | √ | √ | 读 、写 | |

| 阿里云数仓数据存储 | ODPS | √ | √ | 读 、写 |

| ADS | √ | 写 | ||

| OSS | √ | √ | 读 、写 | |

| OCS | √ | √ | 读 、写 | |

| NoSQL数据存储 | OTS | √ | √ | 读 、写 |

| Hbase0.94 | √ | √ | 读 、写 | |

| Hbase1.1 | √ | √ | 读 、写 | |

| Phoenix4.x | √ | √ | 读 、写 | |

| Phoenix5.x | √ | √ | 读 、写 | |

| MongoDB | √ | √ | 读 、写 | |

| Hive | √ | √ | 读 、写 | |

| Cassandra | √ | √ | 读 、写 | |

| 无结构化数据存储 | TxtFile | √ | √ | 读 、写 |

| FTP | √ | √ | 读 、写 | |

| HDFS | √ | √ | 读 、写 | |

| Elasticsearch | √ | 写 | ||

| 时间序列数据库 | OpenTSDB | √ | 读 | |

| TSDB | √ | √ | 读 、写 |

4、将下载好的datax.tar.gz包上传到服务器,并进行解压缩操作,如下所示:

官网安装地址:https://github.com/alibaba/DataX/blob/master/userGuid.md

1 [root@slaver1 package]# ll datax.tar.gz 2 -rw-r--r--. 1 root root 829372407 7月 17 14:50 datax.tar.gz 3 [root@slaver1 package]# tar -zxvf datax.tar.gz -C /home/hadoop/soft/ 4 5 [root@slaver1 package]# cd ../soft/ 6 [root@slaver1 soft]# ls 7 cerebro-0.7.2 datax elasticsearch-6.7.0 filebeat-6.7.0-linux-x86_64 kibana-6.7.0-linux-x86_64 logstash-6.7.0 8 [root@slaver1 soft]# cd datax/ 9 [root@slaver1 datax]# ls 10 bin conf job lib plugin script tmp 11 [root@slaver1 datax]# ll 12 总用量 4 13 drwxr-xr-x. 2 62265 users 59 10月 12 2019 bin 14 drwxr-xr-x. 2 62265 users 68 10月 12 2019 conf 15 drwxr-xr-x. 2 62265 users 22 10月 12 2019 job 16 drwxr-xr-x. 2 62265 users 4096 10月 12 2019 lib 17 drwxr-xr-x. 4 62265 users 34 10月 12 2019 plugin 18 drwxr-xr-x. 2 62265 users 23 10月 12 2019 script 19 drwxr-xr-x. 2 62265 users 24 10月 12 2019 tmp 20 [root@slaver1 datax]#

5、将下载后的datax.tar.gz压缩包直接解压后就可以使用了,但是前提是要安装好java、python的环境。

1)、由于此服务器之前已经安装过Jdk1.8了,所以这里就忽略了,jdk通常需要自己安装的哦,如下所示:

1 [root@slaver1 datax]# java -version 2 openjdk version "1.8.0_232" 3 OpenJDK Runtime Environment (build 1.8.0_232-b09) 4 OpenJDK 64-Bit Server VM (build 25.232-b09, mixed mode) 5 [root@slaver1 datax]#

2)、Python(推荐Python2.7.X)一定要为Python2,因为后面执行datax.py的时候,里面的Python的print会执行不了,导致运行不成功,会提示你print语法要加括号,Python2中加不加都行,Python3中必须要加,否则报语法错。python版本查看(通常系统自带2.x版本)。

1 [root@slaver1 datax]# python -V 2 Python 2.7.5 3 [root@slaver1 datax]#

3)、Apache Maven 3.x (Compile DataX),通常需要自己安装的哦。

下载Maven安装包,然后进行解压缩操作,可以自己改下名称方便操作。

1 [root@slaver1 package]# wget http://mirrors.tuna.tsinghua.edu.cn/apache/maven/maven-3/3.3.9/binaries/apache-maven-3.3.9-bin.tar.gz 2 --2020-07-17 15:10:29-- http://mirrors.tuna.tsinghua.edu.cn/apache/maven/maven-3/3.3.9/binaries/apache-maven-3.3.9-bin.tar.gz 3 正在解析主机 mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)... 101.6.8.193, 2402:f000:1:408:8100::1 4 正在连接 mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)|101.6.8.193|:80... 已连接。 5 已发出 HTTP 请求,正在等待回应... 200 OK 6 长度:8491533 (8.1M) [application/x-gzip] 7 正在保存至: “apache-maven-3.3.9-bin.tar.gz” 8 9 100%[======================================================================================================================================================================================>] 8,491,533 6.32MB/s 用时 1.3s 10 11 2020-07-17 15:10:31 (6.32 MB/s) - 已保存 “apache-maven-3.3.9-bin.tar.gz” [8491533/8491533]) 12 13 [root@slaver1 package]# tar -zxvf apache-maven-3.3.9-bin.tar.gz -C /home/hadoop/soft/

配置Maven的环境变量[root@slaver1 soft]# vim /etc/profile,如下所示:

1 M2_HOME=/home/hadoop/soft/apache-maven-3.3.9 2 export PATH=${M2_HOME}/bin:${PATH}

重新加载此配置文件,如下所示:

1 [root@slaver1 soft]# source /etc/profile 2 [root@slaver1 soft]#

最后检查Maven安装是否成功,如下所示:

1 [root@slaver1 soft]# mvn -v 2 which: no javac in (/home/hadoop/soft/apache-maven-3.3.9/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin) 3 Warning: JAVA_HOME environment variable is not set. 4 Apache Maven 3.3.9 (bb52d8502b132ec0a5a3f4c09453c07478323dc5; 2015-11-11T00:41:47+08:00) 5 Maven home: /home/hadoop/soft/apache-maven-3.3.9 6 Java version: 1.8.0_232, vendor: Oracle Corporation 7 Java home: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64/jre 8 Default locale: zh_CN, platform encoding: UTF-8 9 OS name: "linux", version: "3.10.0-957.el7.x86_64", arch: "amd64", family: "unix" 10 [root@slaver1 soft]#

6、Jdk、Python、Maven都安装成功了,datax解压缩成功了,开始自检,进入bin目录,开始自检,如下所示:

1 [root@slaver1 bin]# python datax.py ../job/job.json 2 3 DataX (DATAX-OPENSOURCE-3.0), From Alibaba ! 4 Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved. 5 6 7 2020-07-17 15:33:06.296 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl 8 2020-07-17 15:33:06.322 [main] INFO Engine - the machine info => 9 10 osInfo: Oracle Corporation 1.8 25.232-b09 11 jvmInfo: Linux amd64 3.10.0-957.el7.x86_64 12 cpu num: 2 13 14 totalPhysicalMemory: -0.00G 15 freePhysicalMemory: -0.00G 16 maxFileDescriptorCount: -1 17 currentOpenFileDescriptorCount: -1 18 19 GC Names [PS MarkSweep, PS Scavenge] 20 21 MEMORY_NAME | allocation_size | init_size 22 PS Eden Space | 256.00MB | 256.00MB 23 Code Cache | 240.00MB | 2.44MB 24 Compressed Class Space | 1,024.00MB | 0.00MB 25 PS Survivor Space | 42.50MB | 42.50MB 26 PS Old Gen | 683.00MB | 683.00MB 27 Metaspace | -0.00MB | 0.00MB 28 29 30 2020-07-17 15:33:06.379 [main] INFO Engine - 31 { 32 "content":[ 33 { 34 "reader":{ 35 "name":"streamreader", 36 "parameter":{ 37 "column":[ 38 { 39 "type":"string", 40 "value":"DataX" 41 }, 42 { 43 "type":"long", 44 "value":19890604 45 }, 46 { 47 "type":"date", 48 "value":"1989-06-04 00:00:00" 49 }, 50 { 51 "type":"bool", 52 "value":true 53 }, 54 { 55 "type":"bytes", 56 "value":"test" 57 } 58 ], 59 "sliceRecordCount":100000 60 } 61 }, 62 "writer":{ 63 "name":"streamwriter", 64 "parameter":{ 65 "encoding":"UTF-8", 66 "print":false 67 } 68 } 69 } 70 ], 71 "setting":{ 72 "errorLimit":{ 73 "percentage":0.02, 74 "record":0 75 }, 76 "speed":{ 77 "byte":10485760 78 } 79 } 80 } 81 82 2020-07-17 15:33:06.475 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null 83 2020-07-17 15:33:06.480 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0 84 2020-07-17 15:33:06.481 [main] INFO JobContainer - DataX jobContainer starts job. 85 2020-07-17 15:33:06.495 [main] INFO JobContainer - Set jobId = 0 86 2020-07-17 15:33:06.591 [job-0] INFO JobContainer - jobContainer starts to do prepare ... 87 2020-07-17 15:33:06.593 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do prepare work . 88 2020-07-17 15:33:06.594 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do prepare work . 89 2020-07-17 15:33:06.594 [job-0] INFO JobContainer - jobContainer starts to do split ... 90 2020-07-17 15:33:06.598 [job-0] INFO JobContainer - Job set Max-Byte-Speed to 10485760 bytes. 91 2020-07-17 15:33:06.604 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] splits to [1] tasks. 92 2020-07-17 15:33:06.606 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] splits to [1] tasks. 93 2020-07-17 15:33:06.678 [job-0] INFO JobContainer - jobContainer starts to do schedule ... 94 2020-07-17 15:33:06.709 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups. 95 2020-07-17 15:33:06.720 [job-0] INFO JobContainer - Running by standalone Mode. 96 2020-07-17 15:33:06.806 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks. 97 2020-07-17 15:33:06.830 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated. 98 2020-07-17 15:33:06.831 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated. 99 2020-07-17 15:33:06.882 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started 100 2020-07-17 15:33:07.087 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[215]ms 101 2020-07-17 15:33:07.088 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it‘s tasks. 102 2020-07-17 15:33:16.841 [job-0] INFO StandAloneJobContainerCommunicator - Total 100000 records, 2600000 bytes | Speed 253.91KB/s, 10000 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.148s | All Task WaitReaderTime 0.161s | Percentage 100.00% 103 2020-07-17 15:33:16.842 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks. 104 2020-07-17 15:33:16.843 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do post work. 105 2020-07-17 15:33:16.844 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do post work. 106 2020-07-17 15:33:16.845 [job-0] INFO JobContainer - DataX jobId [0] completed successfully. 107 2020-07-17 15:33:16.846 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /home/hadoop/soft/datax/hook 108 2020-07-17 15:33:16.851 [job-0] INFO JobContainer - 109 [total cpu info] => 110 averageCpu | maxDeltaCpu | minDeltaCpu 111 -1.00% | -1.00% | -1.00% 112 113 114 [total gc info] => 115 NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime 116 PS MarkSweep | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s 117 PS Scavenge | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s 118 119 2020-07-17 15:33:16.852 [job-0] INFO JobContainer - PerfTrace not enable! 120 2020-07-17 15:33:16.854 [job-0] INFO StandAloneJobContainerCommunicator - Total 100000 records, 2600000 bytes | Speed 253.91KB/s, 10000 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.148s | All Task WaitReaderTime 0.161s | Percentage 100.00% 121 2020-07-17 15:33:16.861 [job-0] INFO JobContainer - 122 任务启动时刻 : 2020-07-17 15:33:06 123 任务结束时刻 : 2020-07-17 15:33:16 124 任务总计耗时 : 10s 125 任务平均流量 : 253.91KB/s 126 记录写入速度 : 10000rec/s 127 读出记录总数 : 100000 128 读写失败总数 : 0 129 130 [root@slaver1 bin]#

7、如何实现mysql数据库的数据表向mysql的数据库的数据表传输数据呢,如下所示:

1 [root@slaver1 job]# cat mysql2mysql.json 2 { 3 "job": { 4 "setting": { 5 "speed": { 6 "byte":1048576, 7 "channel":"4" 8 } 9 }, 10 "content": [ 11 { 12 "reader": { 13 "name": "mysqlreader", 14 "parameter": { 15 "username": "root", 16 "password": "123456", 17 "connection": [ 18 { 19 "querySql": [ 20 "SELECT id,table_name,part,source,start_time,next_time,target,start_batch,next_batch FROM data_exchange_table_time " 21 ], 22 "jdbcUrl": ["jdbc:mysql://192.168.110.133:3306/book"] 23 } 24 ] 25 } 26 }, 27 "writer": { 28 "name": "mysqlwriter", 29 "parameter": { 30 "writeMode": "insert", 31 "column": [ 32 "id", 33 "table_name", 34 "part", 35 "source", 36 "start_time", 37 "next_time", 38 "target", 39 "start_batch", 40 "next_batch" 41 ], 42 "connection": [ 43 { 44 45 "jdbcUrl": "jdbc:mysql://192.168.110.133:3306/biehl", 46 "table": ["data_exchange_table_time"] 47 } 48 ], 49 "username": "root", 50 "password": "123456", 51 "postSql": [], 52 "preSql": [], 53 } 54 } 55 } 56 ], 57 } 58 } 59 [root@slaver1 job]#

执行命令,切记首先数据源数据表必须存在,然后目标数据库的数据表结构一定要提前创建好,如下所示:

1 [root@slaver1 job]# python /home/hadoop/soft/datax/bin/datax.py /home/hadoop/soft/datax/job/mysql2mysql.json 2 3 DataX (DATAX-OPENSOURCE-3.0), From Alibaba ! 4 Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved. 5 6 7 2020-07-17 17:16:50.886 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl 8 2020-07-17 17:16:50.903 [main] INFO Engine - the machine info => 9 10 osInfo: Oracle Corporation 1.8 25.232-b09 11 jvmInfo: Linux amd64 3.10.0-957.el7.x86_64 12 cpu num: 2 13 14 totalPhysicalMemory: -0.00G 15 freePhysicalMemory: -0.00G 16 maxFileDescriptorCount: -1 17 currentOpenFileDescriptorCount: -1 18 19 GC Names [PS MarkSweep, PS Scavenge] 20 21 MEMORY_NAME | allocation_size | init_size 22 PS Eden Space | 256.00MB | 256.00MB 23 Code Cache | 240.00MB | 2.44MB 24 Compressed Class Space | 1,024.00MB | 0.00MB 25 PS Survivor Space | 42.50MB | 42.50MB 26 PS Old Gen | 683.00MB | 683.00MB 27 Metaspace | -0.00MB | 0.00MB 28 29 30 2020-07-17 17:16:50.954 [main] INFO Engine - 31 { 32 "content":[ 33 { 34 "reader":{ 35 "name":"mysqlreader", 36 "parameter":{ 37 "connection":[ 38 { 39 "jdbcUrl":[ 40 "jdbc:mysql://192.168.110.133:3306/book" 41 ], 42 "querySql":[ 43 "SELECT id,table_name,part,source,start_time,next_time,target,start_batch,next_batch FROM data_exchange_table_time " 44 ] 45 } 46 ], 47 "password":"******", 48 "username":"root" 49 } 50 }, 51 "writer":{ 52 "name":"mysqlwriter", 53 "parameter":{ 54 "column":[ 55 "id", 56 "table_name", 57 "part", 58 "source", 59 "start_time", 60 "next_time", 61 "target", 62 "start_batch", 63 "next_batch" 64 ], 65 "connection":[ 66 { 67 "jdbcUrl":"jdbc:mysql://192.168.110.133:3306/biehl", 68 "table":[ 69 "data_exchange_table_time" 70 ] 71 } 72 ], 73 "password":"******", 74 "postSql":[], 75 "preSql":[], 76 "username":"root", 77 "writeMode":"insert" 78 } 79 } 80 } 81 ], 82 "setting":{ 83 "speed":{ 84 "byte":1048576, 85 "channel":"4" 86 } 87 } 88 } 89 90 2020-07-17 17:16:51.011 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null 91 2020-07-17 17:16:51.014 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0 92 2020-07-17 17:16:51.015 [main] INFO JobContainer - DataX jobContainer starts job. 93 2020-07-17 17:16:51.018 [main] INFO JobContainer - Set jobId = 0 94 2020-07-17 17:16:51.678 [job-0] INFO OriginalConfPretreatmentUtil - Available jdbcUrl:jdbc:mysql://192.168.110.133:3306/book?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true. 95 2020-07-17 17:16:52.118 [job-0] INFO OriginalConfPretreatmentUtil - table:[data_exchange_table_time] all columns:[ 96 id,table_name,part,source,start_time,next_time,target,start_batch,next_batch 97 ]. 98 2020-07-17 17:16:52.142 [job-0] INFO OriginalConfPretreatmentUtil - Write data [ 99 insert INTO %s (id,table_name,part,source,start_time,next_time,target,start_batch,next_batch) VALUES(?,?,?,?,?,?,?,?,?) 100 ], which jdbcUrl like:[jdbc:mysql://192.168.110.133:3306/biehl?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true] 101 2020-07-17 17:16:52.144 [job-0] INFO JobContainer - jobContainer starts to do prepare ... 102 2020-07-17 17:16:52.146 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] do prepare work . 103 2020-07-17 17:16:52.150 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] do prepare work . 104 2020-07-17 17:16:52.152 [job-0] INFO JobContainer - jobContainer starts to do split ... 105 2020-07-17 17:16:52.157 [job-0] INFO JobContainer - Job set Max-Byte-Speed to 1048576 bytes. 106 2020-07-17 17:16:52.169 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] splits to [1] tasks. 107 2020-07-17 17:16:52.171 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] splits to [1] tasks. 108 2020-07-17 17:16:52.221 [job-0] INFO JobContainer - jobContainer starts to do schedule ... 109 2020-07-17 17:16:52.232 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups. 110 2020-07-17 17:16:52.238 [job-0] INFO JobContainer - Running by standalone Mode. 111 2020-07-17 17:16:52.270 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks. 112 2020-07-17 17:16:52.294 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated. 113 2020-07-17 17:16:52.297 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated. 114 2020-07-17 17:16:52.332 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started 115 2020-07-17 17:16:52.340 [0-0-0-reader] INFO CommonRdbmsReader$Task - Begin to read record by Sql: [SELECT id,table_name,part,source,start_time,next_time,target,start_batch,next_batch FROM data_exchange_table_time 116 ] jdbcUrl:[jdbc:mysql://192.168.110.133:3306/book?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true]. 117 2020-07-17 17:16:56.814 [0-0-0-reader] INFO CommonRdbmsReader$Task - Finished read record by Sql: [SELECT id,table_name,part,source,start_time,next_time,target,start_batch,next_batch FROM data_exchange_table_time 118 ] jdbcUrl:[jdbc:mysql://192.168.110.133:3306/book?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true]. 119 2020-07-17 17:16:56.971 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[4651]ms 120 2020-07-17 17:16:56.972 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it‘s tasks. 121 2020-07-17 17:17:02.330 [job-0] INFO StandAloneJobContainerCommunicator - Total 61086 records, 4112902 bytes | Speed 401.65KB/s, 6108 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 3.495s | All Task WaitReaderTime 0.792s | Percentage 100.00% 122 2020-07-17 17:17:02.332 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks. 123 2020-07-17 17:17:02.333 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] do post work. 124 2020-07-17 17:17:02.343 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] do post work. 125 2020-07-17 17:17:02.345 [job-0] INFO JobContainer - DataX jobId [0] completed successfully. 126 2020-07-17 17:17:02.348 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /home/hadoop/soft/datax/hook 127 2020-07-17 17:17:02.379 [job-0] INFO JobContainer - 128 [total cpu info] => 129 averageCpu | maxDeltaCpu | minDeltaCpu 130 -1.00% | -1.00% | -1.00% 131 132 133 [total gc info] => 134 NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime 135 PS MarkSweep | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s 136 PS Scavenge | 1 | 1 | 1 | 0.044s | 0.044s | 0.044s 137 138 2020-07-17 17:17:02.379 [job-0] INFO JobContainer - PerfTrace not enable! 139 2020-07-17 17:17:02.408 [job-0] INFO StandAloneJobContainerCommunicator - Total 61086 records, 4112902 bytes | Speed 401.65KB/s, 6108 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 3.495s | All Task WaitReaderTime 0.792s | Percentage 100.00% 140 2020-07-17 17:17:02.454 [job-0] INFO JobContainer - 141 任务启动时刻 : 2020-07-17 17:16:51 142 任务结束时刻 : 2020-07-17 17:17:02 143 任务总计耗时 : 11s 144 任务平均流量 : 401.65KB/s 145 记录写入速度 : 6108rec/s 146 读出记录总数 : 61086 147 读写失败总数 : 0 148 149 [root@slaver1 job]#

以上是关于datax的安装与使用的主要内容,如果未能解决你的问题,请参考以下文章