Deploying a Highly Available k8s Cluster with kubeadm

Posted by abner123


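I. In production, a k8s cluster needs multiple masters to be highly available. This article shows how to deploy a highly available k8s cluster with kubeadm (at least 3 masters are recommended in production).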
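The three-master recommendation follows from majority voting in etcd, which runs on the masters in this setup. A minimal sketch of the arithmetic, assuming the usual majority rule (quorum = floor(n/2) + 1); the function names are illustrative and not part of any tool:

```shell
# Quorum arithmetic for an n-member etcd cluster (illustrative helper names).

# Votes needed for a majority: floor(n/2) + 1
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Members that can fail while a majority is still reachable
fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 5; do
  echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

With two members the cluster tolerates no failures, which is why three is the smallest size that survives the loss of one master.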

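II. Master deployment:

1. Deploy the etcd cluster on the three master nodes

2. Initialize the masters with kubeadm through a VIP

Note: this deployment uses physical servers. Alibaba Cloud servers do not support a VIP, so if you deploy there, use SLB for load balancing instead.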

III. Environment preparation:

| Node host | IP address | Operating system | Virtual IP (VIP) |
| --- | --- | --- | --- |
| test-k8s-master-1 | 172.18.178.236 | CentOS Linux release 7.7.1908 (Core) | 172.18.178.240 |
| test-k8s-master-2 | 172.18.178.237 | CentOS Linux release 7.7.1908 (Core) | |
| test-k8s-master-3 | 172.18.178.238 | CentOS Linux release 7.7.1908 (Core) | |
| test-k8s-node-01 | 172.18.178.239 | CentOS Linux release 7.7.1908 (Core) | |

1. Set the hostname and edit the hosts file (on every node):

hostnamectl set-hostname test-k8s-master-1    # run on 172.18.178.236
hostnamectl set-hostname test-k8s-master-2    # run on 172.18.178.237
hostnamectl set-hostname test-k8s-master-3    # run on 172.18.178.238
hostnamectl set-hostname test-k8s-node-01     # run on 172.18.178.239

vim /etc/hosts

172.18.178.236 test-k8s-master-1
172.18.178.237 test-k8s-master-2
172.18.178.238 test-k8s-master-3
172.18.178.239 test-k8s-node-01
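Editing /etc/hosts by hand on four machines is easy to get wrong, so the same entries can be appended by a script. The sketch below is one possible approach, not part of the original procedure: `add_host_entry` is a hypothetical helper that appends an entry only when the hostname is not already mapped, which makes it safe to run more than once. The demonstration writes to a temporary file; on a real node you would pass /etc/hosts.

```shell
# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" to FILE unless some line already maps NAME.
add_host_entry() {
  ah_file=$1
  ah_ip=$2
  ah_name=$3
  # Compare every hostname field exactly, so "test-k8s-master-1"
  # is not confused with a longer name that merely starts the same way.
  if awk -v n="$ah_name" \
       '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' \
       "$ah_file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ah_ip" "$ah_name" >> "$ah_file"
}

# Demonstration against a scratch file instead of /etc/hosts
hosts_file=$(mktemp)
add_host_entry "$hosts_file" 172.18.178.236 test-k8s-master-1
add_host_entry "$hosts_file" 172.18.178.237 test-k8s-master-2
add_host_entry "$hosts_file" 172.18.178.238 test-k8s-master-3
add_host_entry "$hosts_file" 172.18.178.239 test-k8s-node-01
cat "$hosts_file"
```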

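2. Configure the yum repositories, install the dependencies and k8s components, and tune kernel parameters. I use a single script for this (run it on every node):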

cat init.sh

# yum repositories
yum install -y yum-utils device-mapper-persistent-data lvm2
wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
wget http://mirrors.aliyun.com/repo/epel-7.repo -O /etc/yum.repos.d/epel.repo
# Overwrite rather than append, so re-running the script does not duplicate the section
cat > /etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

# Install the K8S components
yum install -y kubelet kubeadm kubectl docker-ce
systemctl restart docker && systemctl enable docker
systemctl enable kubelet

# Disable SELinux (now, and permanently after the next reboot)
setenforce 0
sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
sed -i 's/SELINUX=permissive/SELINUX=disabled/g' /etc/selinux/config

yum install -y epel-release vim screen bash-completion mtr lrzsz wget telnet zip unzip sysstat ntpdate libcurl openssl bridge-utils nethogs dos2unix iptables-services htop nfs-utils ceph-common git mysql

# Stop and disable services that are not needed
service firewalld stop
systemctl disable firewalld.service
service iptables stop
systemctl disable iptables.service
service postfix stop
systemctl disable postfix.service
wget http://mirrors.aliyun.com/repo/epel-7.repo -O /etc/yum.repos.d/epel.repo

# Time sync via cron; each line is added only if it is not already present.
# grep -Fx compares the whole line literally, so the "*" fields are not read as a regex.
note='#Ansible: nptdate-time'
task='*/10 * * * * /usr/sbin/ntpdate -u ntp.sjtu.edu.cn &> /dev/null'
echo "$(crontab -l)" | grep -Fx -- "${note}" &>/dev/null || echo -e "$(crontab -l)\n${note}" | crontab -
echo "$(crontab -l)" | grep -Fx -- "${task}" &>/dev/null || echo -e "$(crontab -l)\n${task}" | crontab -

echo 'Tuning /etc/security/limits.conf (takes effect after a reboot)'
cp -rf /etc/security/limits.conf /etc/security/limits.conf.back
cat > /etc/security/limits.conf << EOF
* soft nofile 655350
* hard nofile 655350
* soft nproc unlimited
* hard nproc unlimited
* soft core unlimited
* hard core unlimited
root soft nofile 655350
root hard nofile 655350
root soft nproc unlimited
root hard nproc unlimited
root soft core unlimited
root hard core unlimited
EOF

echo 'Tuning /etc/sysctl.conf'
cp -rf /etc/sysctl.conf /etc/sysctl.conf.back
cat > /etc/sysctl.conf << EOF
vm.swappiness = 0
net.ipv4.neigh.default.gc_stale_time = 120
# see details in https://help.aliyun.com/knowledge_detail/39428.html
net.ipv4.conf.all.rp_filter = 0
net.ipv4.conf.default.rp_filter = 0
net.ipv4.conf.default.arp_announce = 2
net.ipv4.conf.lo.arp_announce = 2
net.ipv4.conf.all.arp_announce = 2
# see details in https://help.aliyun.com/knowledge_detail/41334.html
net.ipv4.tcp_max_tw_buckets = 5000
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 1024
net.ipv4.tcp_synack_retries = 2
net.ipv6.conf.all.disable_ipv6 = 1
net.ipv6.conf.default.disable_ipv6 = 1
net.ipv6.conf.lo.disable_ipv6 = 1
kernel.sysrq = 1
kernel.pid_max=1000000
EOF
sysctl -p

Note: place this script on every node and run sh init.sh.

3. Load the ipvs modules:

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules

bash /etc/sysconfig/modules/ipvs.modules

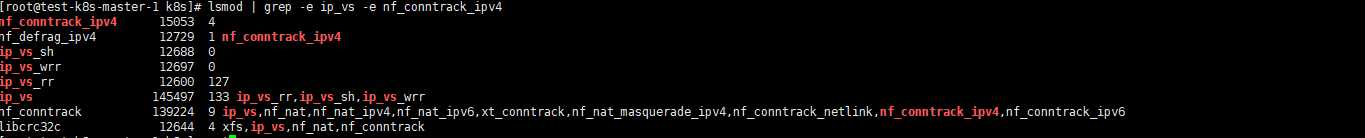

Check that the ipvs modules are loaded:

lsmod | grep -e ip_vs -e nf_conntrack_ipv4
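The `lsmod | grep` check above lists what is loaded but leaves it to the reader to spot anything missing. As a rough sketch, the hypothetical helper `missing_modules` below reads `lsmod` output on standard input and prints each required module that is absent, so empty output means all five are present. The sample input is made up for illustration only.

```shell
# missing_modules MODULE...  (hypothetical helper)
# Reads `lsmod` output on stdin and prints every requested module that is not loaded.
missing_modules() {
  # First column of lsmod is the module name; skip the header row.
  mm_loaded=$(awk 'NR > 1 { print $1 }')
  for mm_name in "$@"; do
    printf '%s\n' "$mm_loaded" | grep -qx -- "$mm_name" || echo "$mm_name"
  done
}

required="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"

# On a real node:
#   lsmod | missing_modules $required

# Illustration with made-up lsmod output in which two modules are absent
printf '%s\n' \
  'Module                  Size  Used by' \
  'ip_vs_sh               12688  0' \
  'ip_vs                 145497  2 ip_vs_sh' \
  'nf_conntrack_ipv4      15053  2' \
  | missing_modules $required
```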

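4. High availability (reverse proxy configuration). The steps below are shown on test-k8s-master-1; if you have spare server resources, the reverse proxy can also run on a dedicated server. Note: it must be deployed on every master node.

Use nginx (upstream) or HAProxy. This article uses Nginx + keepalived.

1. Install Nginx and keepalived:

yum -y install nginx keepalived
systemctl start keepalived && systemctl enable keepalived
systemctl start nginx && systemctl enable nginx

2. Configure the Nginx upstream reverse proxy: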

[root@test-k8s-master-1 ~]# cd /etc/nginx

mv nginx.conf nginx.conf.default

vim nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

stream {

log_format proxy '$remote_addr $remote_port - [$time_local] $status $protocol '

'"$upstream_addr" "$upstream_bytes_sent" "$upstream_connect_time"' ;

access_log /var/log/nginx/nginx-proxy.log proxy;

upstream kubernetes_lb{

server 172.18.178.236:6443 weight=5 max_fails=3 fail_timeout=30s;

server 172.18.178.237:6443 weight=5 max_fails=3 fail_timeout=30s;

server 172.18.178.238:6443 weight=5 max_fails=3 fail_timeout=30s;

}

server {

listen 7443;

proxy_connect_timeout 30s;

proxy_timeout 30s;

proxy_pass kubernetes_lb;

}

}
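If the list of masters changes, the `upstream` block is the only part of this configuration that needs regenerating. The sketch below shows one way to produce it from a list of IPs. `render_upstream` is a hypothetical helper, and the weight and failure settings are simply copied from the configuration above.

```shell
# render_upstream PORT IP...  (hypothetical helper)
# Prints an nginx stream upstream block with one server line per IP.
render_upstream() {
  ru_port=$1
  shift
  echo "upstream kubernetes_lb {"
  for ru_ip in "$@"; do
    echo "    server ${ru_ip}:${ru_port} weight=5 max_fails=3 fail_timeout=30s;"
  done
  echo "}"
}

# The three API servers from the environment table
render_upstream 6443 172.18.178.236 172.18.178.237 172.18.178.238
```

The output can be pasted into the `stream` section, followed by `nginx -t` before reloading.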

Check the Nginx configuration syntax, then reload Nginx:

[root@test-k8s-master-1 nginx]# nginx -t
nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@test-k8s-master-1 nginx]# nginx -s reload

3. keepalived configuration:

[root@test-k8s-master-1 ~]# cd /etc/keepalived

mv keepalived.conf keepalived.conf.default

vim keepalived.conf

global_defs {
    notification_email {
        test@gmail.com
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_1
}

vrrp_instance VI_1 {
    state MASTER
    interface ens192    # NIC device name; change it to match your own interface
    lvs_sync_daemon_inteface ens192
    virtual_router_id 88
    advert_int 1
    priority 110
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.18.178.240/24    # this is the virtual IP address
    }
}
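The configuration above is the one for test-k8s-master-1. Since keepalived has to run on every master, the other two need their own `vrrp_instance`. A common arrangement, assumed here rather than taken from the original article, is `state BACKUP` with a lower `priority` on the remaining masters so that the VIP moves only when the current holder fails. `render_vrrp_instance` is a hypothetical helper, and the priorities 100 and 90 are illustrative.

```shell
# render_vrrp_instance STATE PRIORITY INTERFACE VIP  (hypothetical helper)
# Prints a vrrp_instance block; the fixed values mirror the configuration above.
render_vrrp_instance() {
  rv_state=$1
  rv_priority=$2
  rv_iface=$3
  rv_vip=$4
  cat <<EOF
vrrp_instance VI_1 {
    state ${rv_state}
    interface ${rv_iface}
    virtual_router_id 88
    advert_int 1
    priority ${rv_priority}
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${rv_vip}
    }
}
EOF
}

# Assumed roles: master-1 holds the VIP, master-2 and master-3 stand by.
echo "# test-k8s-master-2"
render_vrrp_instance BACKUP 100 ens192 172.18.178.240/24
echo "# test-k8s-master-3"
render_vrrp_instance BACKUP 90 ens192 172.18.178.240/24
```

The `virtual_router_id` and `auth_pass` must be identical on all three nodes for them to form one VRRP group.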

Restart keepalived:

systemctl restart keepalived

5. Initialize the node:

kubeadm config print init-defaults > kubeadm-init.yaml

Pull the images:

kubeadm config images pull --config kubeadm-init.yaml

Edit kubeadm-init.yaml:

[root@test-k8s-master-1 ~]# vim kubeadm-init.yaml
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.18.178.236
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: test-k8s-master-1
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
controlPlaneEndpoint: "172.18.178.240:7443"
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.gcr.io
kind: ClusterConfiguration
kubernetesVersion: v1.16.3
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
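The key setting for high availability is `controlPlaneEndpoint`, which has to point at the VIP and the Nginx listen port (172.18.178.240:7443 here) rather than at a single master. A small pre-flight check can catch a mismatch before running `kubeadm init`. This is only a sketch: `control_plane_endpoint` and `check_endpoint` are hypothetical helpers, and they assume the key sits at the top level of the file on a single line.

```shell
# control_plane_endpoint FILE  (hypothetical helper)
# Prints the value of the top-level controlPlaneEndpoint key, without quotes.
control_plane_endpoint() {
  sed -n 's/^controlPlaneEndpoint:[[:space:]]*"\{0,1\}\([^"]*\)"\{0,1\}[[:space:]]*$/\1/p' "$1"
}

# check_endpoint FILE EXPECTED  (hypothetical helper)
# Succeeds only if the file's controlPlaneEndpoint equals EXPECTED.
check_endpoint() {
  ce_actual=$(control_plane_endpoint "$1")
  if [ "$ce_actual" = "$2" ]; then
    echo "ok: controlPlaneEndpoint is $ce_actual"
  else
    echo "mismatch: expected $2, found ${ce_actual:-<unset>}" >&2
    return 1
  fi
}

# Usage on the master, before kubeadm init:
#   check_endpoint kubeadm-init.yaml 172.18.178.240:7443
```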

Initialize:

[root@test-k8s-master-1 ~]# kubeadm init --config kubeadm-init.yaml
......................
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[addons] Applied essential addon: CoreDNS
[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities
and service account keys on each node and then running the following as root:

  kubeadm join 172.18.178.240:7443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:274a3c078548240887903b51e75e2cc9548343e06dcd2a3ca0c3087c3fdd3175 --control-plane

Then you can join any number of worker nodes by running the following on each as root:

  kubeadm join 172.18.178.240:7443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:274a3c078548240887903b51e75e2cc9548343e06dcd2a3ca0c3087c3fdd3175

6. Copy the certificates and admin config to the other two masters:

USER=root
CONTROL_PLANE_IPS="test-k8s-master-2 test-k8s-master-3"
for host in ${CONTROL_PLANE_IPS}; do
    ssh "${USER}"@$host "mkdir -p /etc/kubernetes/pki/etcd"
    scp /etc/kubernetes/pki/ca.* "${USER}"@$host:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.* "${USER}"@$host:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.* "${USER}"@$host:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/etcd/ca.* "${USER}"@$host:/etc/kubernetes/pki/etcd/
    scp /etc/kubernetes/admin.conf "${USER}"@$host:/etc/kubernetes/
done

7. Join the remaining master nodes (run on test-k8s-master-2 and test-k8s-master-3):

kubeadm join 172.18.178.240:7443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:274a3c078548240887903b51e75e2cc9548343e06dcd2a3ca0c3087c3fdd3175 --control-plane

8. Join the worker node (run on test-k8s-node-01):

kubeadm join 172.18.178.240:7443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:274a3c078548240887903b51e75e2cc9548343e06dcd2a3ca0c3087c3fdd3175
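The two join commands differ only in the trailing `--control-plane` flag, and a mistyped token or hash is a common cause of a failed join. The sketch below assembles the command from its parts and rejects values that do not match the expected shapes: a bootstrap token of the form 6 characters, a dot, 16 characters, and a hash of `sha256:` followed by 64 hex digits. `build_join_cmd` is a hypothetical helper; it only prints the command and does not run it.

```shell
# build_join_cmd ENDPOINT TOKEN HASH [worker|control-plane]  (hypothetical helper)
# Validates the token and CA cert hash formats, then prints the kubeadm join command.
build_join_cmd() {
  bj_endpoint=$1
  bj_token=$2
  bj_hash=$3
  bj_role=${4:-worker}
  if ! printf '%s\n' "$bj_token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "invalid token format: $bj_token" >&2
    return 1
  fi
  if ! printf '%s\n' "$bj_hash" | grep -Eq '^sha256:[0-9a-f]{64}$'; then
    echo "invalid CA cert hash: $bj_hash" >&2
    return 1
  fi
  bj_cmd="kubeadm join $bj_endpoint --token $bj_token --discovery-token-ca-cert-hash $bj_hash"
  if [ "$bj_role" = "control-plane" ]; then
    bj_cmd="$bj_cmd --control-plane"
  fi
  echo "$bj_cmd"
}

# Values taken from the kubeadm init output in this article
endpoint=172.18.178.240:7443
token=abcdef.0123456789abcdef
hash=sha256:274a3c078548240887903b51e75e2cc9548343e06dcd2a3ca0c3087c3fdd3175

build_join_cmd "$endpoint" "$token" "$hash" control-plane
build_join_cmd "$endpoint" "$token" "$hash"
```

The token in kubeadm-init.yaml has `ttl: 24h0m0s`, so nodes added after a day need a fresh one; running `kubeadm token create --print-join-command` on an existing master prints a new worker join command.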

9. Install the network plugin:

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
kubectl apply -f kube-flannel.yml

10. Once the master and worker nodes have joined, check the node status (output like the following means the deployment succeeded):

[root@test-k8s-master-1 ~]# kubectl get node -A | grep master
test-k8s-master-1 Ready master 233d v1.16.3
test-k8s-master-2 Ready master 233d v1.16.3
test-k8s-master-3 Ready master 233d v1.16.3
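Reading the STATUS column by eye works for four nodes, but the same check can be scripted. As a sketch, the hypothetical helper `not_ready_nodes` reads `kubectl get nodes --no-headers` output on standard input and prints the name of every node whose status is not exactly `Ready`, so empty output means every node is healthy. A cordoned node (`Ready,SchedulingDisabled`) would also be listed, which may or may not be what you want.

```shell
# not_ready_nodes  (hypothetical helper)
# Reads `kubectl get nodes --no-headers` output on stdin and prints
# the name of each node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# On a master node (requires a working kubeconfig):
#   kubectl get nodes --no-headers | not_ready_nodes

# Demonstration with the three master lines shown in this article
bad=$(printf '%s\n' \
  'test-k8s-master-1 Ready master 233d v1.16.3' \
  'test-k8s-master-2 Ready master 233d v1.16.3' \
  'test-k8s-master-3 Ready master 233d v1.16.3' \
  | not_ready_nodes)
if [ -z "$bad" ]; then
  echo "all nodes Ready"
else
  echo "not ready: $bad"
fi
```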
