Deploying a Highly Available k8s Cluster with RKE

Posted by yxh168


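Preparing the RKE deployment environment

RKE is a CNCF-certified Kubernetes distribution, and all of its components run entirely inside Docker containers.

Rancher Server can only run on a Kubernetes cluster installed with RKE or K3s.

Preparing the nodes

1. Open the required ports on every node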

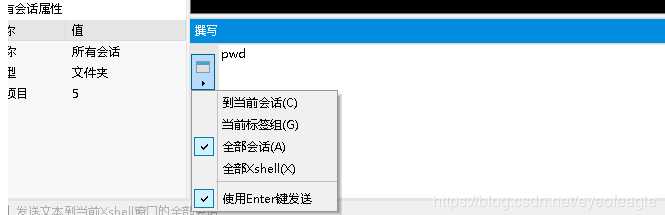

Tip: to send the same command to several terminal windows at once, use:

"View" -> "Compose" -> "Compose Bar / Compose Pane"

firewall-cmd --permanent --add-port=22/tcp

firewall-cmd --permanent --add-port=80/tcp

firewall-cmd --permanent --add-port=443/tcp

firewall-cmd --permanent --add-port=30000-32767/tcp

firewall-cmd --permanent --add-port=30000-32767/udp

firewall-cmd --reload
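The commands above open only SSH, HTTP/HTTPS and the NodePort range. RKE nodes also talk to each other on further ports, so a sketch that prints the extra firewall-cmd calls may be useful. The default port list here (6443/tcp for kube-apiserver, 2379-2380/tcp for etcd, 10250/tcp for the kubelet, 8472/udp for flannel VXLAN) is an assumption based on the upstream port requirements, not on the screenshot, so check it against the table for your RKE version and network plugin. The function name `extra_port_rules` is hypothetical.

```shell
#!/bin/sh
# Print the firewall-cmd calls for a list of ports so they can be reviewed
# before being applied. The default list is an assumption; adjust it to the
# port table for your RKE version and network plugin.
extra_port_rules() {
  # Use the ports passed as arguments, or fall back to the assumed defaults.
  [ "$#" -gt 0 ] || set -- 6443/tcp 2379-2380/tcp 10250/tcp 8472/udp
  for port in "$@"; do
    echo "firewall-cmd --permanent --add-port=${port}"
  done
  echo "firewall-cmd --reload"
}

extra_port_rules
# After reviewing the output, apply it with: extra_port_rules | sudo sh
```

Printing the commands first keeps the step safe to try on any machine; nothing changes until the output is piped to a shell.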

2. Synchronize the time on every node

ntpdate time1.aliyun.com

3. Install Docker

Docker must be installed on every node that runs Rancher Server.

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

sudo yum install docker-ce-18.09.3-3.el7
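After the package is installed, Docker still has to be started, and the SSH user that RKE connects as needs access to the Docker socket. A minimal sketch, assuming a systemd-based host and the `admin` user that appears in cluster.yml later in this article; `docker_post_install` is a hypothetical helper that prints the commands rather than running them.

```shell
#!/bin/sh
# Print the post-install commands for one node. The user name defaults to
# "admin" (the user configured in cluster.yml); pass another name if needed.
docker_post_install() {
  user="${1:-admin}"
  echo "systemctl enable docker"        # start Docker on every boot
  echo "systemctl start docker"         # start Docker now
  echo "usermod -aG docker ${user}"     # let the SSH user use the Docker socket
}

docker_post_install admin
# After reviewing the output, apply it with: docker_post_install admin | sudo sh
```

The user has to log in again before the new group membership takes effect.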

4. Install kubectl

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

sudo yum install kubectl

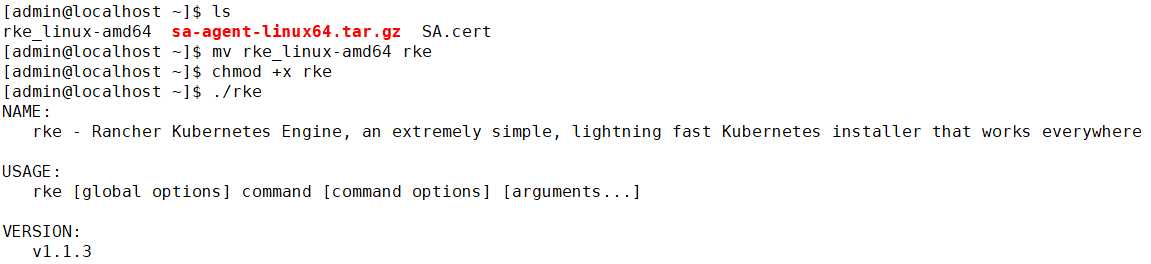

5. Install RKE

Rancher Kubernetes Engine (RKE) is a CLI for building Kubernetes clusters.

https://github.com/rancher/rke/releases/tag/v1.1.3

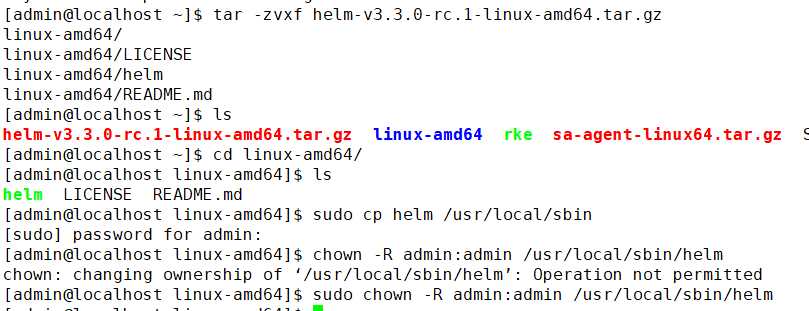

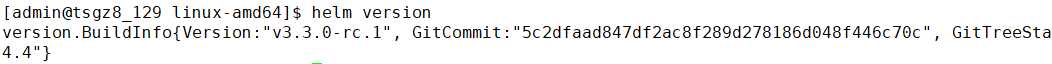
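The release page linked above offers one binary per platform. As a sketch of fetching it from the command line, the helper below builds the download URL for a given version. It assumes a 64-bit x86 Linux host (asset name `rke_linux-amd64`); `rke_url` and the install path in the comments are hypothetical choices, not something the article specifies.

```shell
#!/bin/sh
# Build the download URL for an RKE release binary. The asset name
# "rke_linux-amd64" assumes a 64-bit x86 Linux host.
rke_url() {
  version="${1:-v1.1.3}"
  echo "https://github.com/rancher/rke/releases/download/${version}/rke_linux-amd64"
}

rke_url v1.1.3
# To install (the target path is an assumption):
#   curl -fL -o /usr/local/bin/rke "$(rke_url v1.1.3)"
#   chmod +x /usr/local/bin/rke
#   rke --version
```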

6. Install Helm

Helm is the package manager for Kubernetes.
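The article gives no commands for this step. One possible approach is to download a release tarball from get.helm.sh and copy the binary into the PATH. The version `v3.2.4`, the amd64 architecture and the install path are assumptions chosen for illustration; `helm_install_cmds` is a hypothetical helper that only prints the commands.

```shell
#!/bin/sh
# Print the commands that download and install a Helm release binary.
# The default version is an assumption; pick the one you actually need.
helm_install_cmds() {
  version="${1:-v3.2.4}"
  tarball="helm-${version}-linux-amd64.tar.gz"
  echo "curl -fLO https://get.helm.sh/${tarball}"
  echo "tar -zxf ${tarball}"                       # unpacks into linux-amd64/
  echo "mv linux-amd64/helm /usr/local/bin/helm"
}

helm_install_cmds
# After reviewing the output, apply it with: helm_install_cmds | sudo sh
```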

7. Configure passwordless SSH

On the host that runs the rke up command, generate an SSH key pair and distribute the public key to every node.

ssh-keygen -t rsa

ssh-copy-id 192.168.30.129

ssh-copy-id 192.168.30.133
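RKE connects over SSH to every address listed in cluster.yml, as the user configured there. The sketch below therefore prints one ssh-copy-id call per node with an explicit user. It assumes the `admin` account exists on all three nodes and that 192.168.30.110 (the first node in cluster.yml) also needs the key; `copy_key_cmds` is a hypothetical helper.

```shell
#!/bin/sh
# Print one ssh-copy-id command per host for the given user.
# Usage: copy_key_cmds USER HOST...
copy_key_cmds() {
  user="$1"
  shift
  for host in "$@"; do
    echo "ssh-copy-id ${user}@${host}"
  done
}

copy_key_cmds admin 192.168.30.110 192.168.30.129 192.168.30.133
# Run the printed commands one by one; each prompts for the user's password.
```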

8. Configure the operating system parameters required by the k8s cluster (run on all nodes)

sudo swapoff -a

sudo vi /etc/sysctl.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

sudo sysctl -p
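The same three parameters can also be written without an interactive editor. This is a sketch under stated assumptions: the drop-in path under /etc/sysctl.d is my choice (the article edits /etc/sysctl.conf directly), and `write_k8s_sysctl` is a hypothetical helper. The net.bridge.* keys exist only while the br_netfilter kernel module is loaded, so the module is loaded first in the commented usage.

```shell
#!/bin/sh
# Write the kernel parameters to a file. The default path under /etc/sysctl.d
# is an assumption; the article edits /etc/sysctl.conf directly instead.
write_k8s_sysctl() {
  target="${1:-/etc/sysctl.d/90-k8s.conf}"
  cat > "$target" <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Demonstrate against a temporary file so nothing on the system changes.
tmp=$(mktemp)
write_k8s_sysctl "$tmp"
cat "$tmp"
rm -f "$tmp"

# On a real node (as root):
#   modprobe br_netfilter      # makes the net.bridge.* keys available
#   write_k8s_sysctl && sysctl --system
```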

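9. Use rke to generate the initial cluster configuration file

rke config --name cluster.yml

RKE uses a configuration file named cluster.yml to determine how Kubernetes is deployed on the nodes of the cluster.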

# If you intened to deploy Kubernetes in an air-gapped environment,
# please consult the documentation on how to configure custom RKE images.
nodes:
- address: "192.168.30.110"
  port: "22"
  internal_address: ""
  role: [controlplane,etcd,worker]
  hostname_override: "node1"
  user: admin
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: "192.168.30.129"
  port: "22"
  internal_address: ""
  role: [controlplane,etcd,worker]
  hostname_override: "node2"
  user: admin
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: "192.168.30.133"
  port: "22"
  internal_address: ""
  role: [controlplane,etcd,worker]
  hostname_override: "node3"
  user: admin
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
services:
  etcd:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    external_urls: []
    ca_cert: ""
    cert: ""
    key: ""
    path: ""
    uid: 0
    gid: 0
    snapshot: null
    retention: ""
    creation: ""
    backup_config: null
  kube-api:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    service_cluster_ip_range: 10.43.0.0/16
    service_node_port_range: ""
    pod_security_policy: false
    always_pull_images: false
    secrets_encryption_config: null
    audit_log: null
    admission_configuration: null
    event_rate_limit: null
  kube-controller:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    cluster_cidr: 10.42.0.0/16
    service_cluster_ip_range: 10.43.0.0/16
  scheduler:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
  kubelet:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    cluster_domain: cluster.local
    infra_container_image: ""
    cluster_dns_server: 10.43.0.10
    fail_swap_on: false
    generate_serving_certificate: false
  kubeproxy:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
network:
  plugin: flannel
  options: {}
  mtu: 0
  node_selector: {}
  update_strategy: null
authentication:
  strategy: x509
  sans: []
  webhook: null
addons: ""
addons_include: []
system_images:
  etcd: rancher/coreos-etcd:v3.4.3-rancher1
  alpine: rancher/rke-tools:v0.1.58
  nginx_proxy: rancher/rke-tools:v0.1.58
  cert_downloader: rancher/rke-tools:v0.1.58
  kubernetes_services_sidecar: rancher/rke-tools:v0.1.58
  kubedns: rancher/k8s-dns-kube-dns:1.15.2
  dnsmasq: rancher/k8s-dns-dnsmasq-nanny:1.15.2
  kubedns_sidecar: rancher/k8s-dns-sidecar:1.15.2
  kubedns_autoscaler: rancher/cluster-proportional-autoscaler:1.7.1
  coredns: rancher/coredns-coredns:1.6.9
  coredns_autoscaler: rancher/cluster-proportional-autoscaler:1.7.1
  nodelocal: rancher/k8s-dns-node-cache:1.15.7
  kubernetes: rancher/hyperkube:v1.18.3-rancher2
  flannel: rancher/coreos-flannel:v0.12.0
  flannel_cni: rancher/flannel-cni:v0.3.0-rancher6
  calico_node: rancher/calico-node:v3.13.4
  calico_cni: rancher/calico-cni:v3.13.4
  calico_controllers: rancher/calico-kube-controllers:v3.13.4
  calico_ctl: rancher/calico-ctl:v3.13.4
  calico_flexvol: rancher/calico-pod2daemon-flexvol:v3.13.4
  canal_node: rancher/calico-node:v3.13.4
  canal_cni: rancher/calico-cni:v3.13.4
  canal_flannel: rancher/coreos-flannel:v0.12.0
  canal_flexvol: rancher/calico-pod2daemon-flexvol:v3.13.4
  weave_node: weaveworks/weave-kube:2.6.4
  weave_cni: weaveworks/weave-npc:2.6.4
  pod_infra_container: rancher/pause:3.1
  ingress: rancher/nginx-ingress-controller:nginx-0.32.0-rancher1
  ingress_backend: rancher/nginx-ingress-controller-defaultbackend:1.5-rancher1
  metrics_server: rancher/metrics-server:v0.3.6
  windows_pod_infra_container: rancher/kubelet-pause:v0.1.4
ssh_key_path: ~/.ssh/id_rsa
ssh_cert_path: ""
ssh_agent_auth: false
authorization:
  mode: rbac
  options: {}
ignore_docker_version: null
kubernetes_version: ""
private_registries: []
ingress:
  provider: ""
  options: {}
  node_selector: {}
  extra_args: {}
  dns_policy: ""
  extra_envs: []
  extra_volumes: []
  extra_volume_mounts: []
  update_strategy: null
cluster_name: ""
cloud_provider:
  name: ""
prefix_path: ""
addon_job_timeout: 0
bastion_host:
  address: ""
  port: ""
  user: ""
  ssh_key: ""
  ssh_key_path: ""
  ssh_cert: ""
  ssh_cert_path: ""
monitoring:
  provider: ""
  options: {}
  node_selector: {}
  update_strategy: null
  replicas: null
restore:
  restore: false
  snapshot_name: ""
dns: null

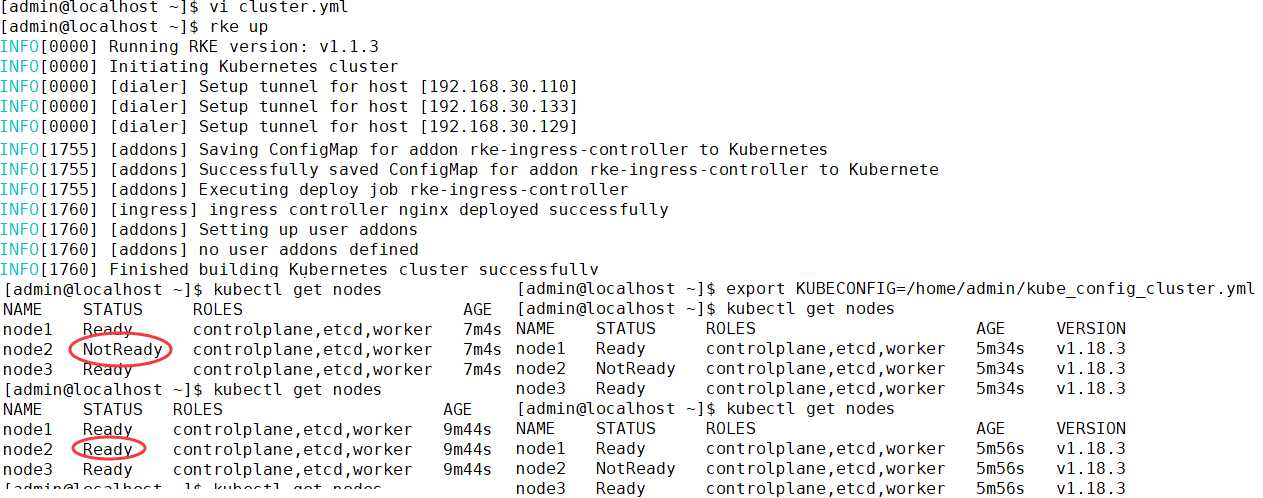
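When rke up finishes, it writes the kubeconfig next to the cluster configuration file and names it kube_config_ followed by the configuration file name. So cluster.yml yields kube_config_cluster.yml, while a file called rancher-cluster.yml yields kube_config_rancher-cluster.yml. A small sketch of that naming rule follows; `kubeconfig_for` is a hypothetical helper, not part of rke.

```shell
#!/bin/sh
# Derive the kubeconfig path that rke writes for a given cluster config file:
# same directory, file name prefixed with "kube_config_".
kubeconfig_for() {
  dir=$(dirname "$1")
  base=$(basename "$1")
  printf '%s/kube_config_%s\n' "$dir" "$base"
}

kubeconfig_for ./cluster.yml
# Typical use, from the directory that holds cluster.yml:
#   rke up --config ./cluster.yml
#   export KUBECONFIG="$(kubeconfig_for ./cluster.yml)"
#   kubectl get nodes
```

Deriving the name from the configuration file avoids mixing up kubeconfig files when several cluster configurations live side by side.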

export KUBECONFIG=/home/admin/kube_config_cluster.yml

mkdir -p ~/.kube

cp kube_config_cluster.yml ~/.kube/config

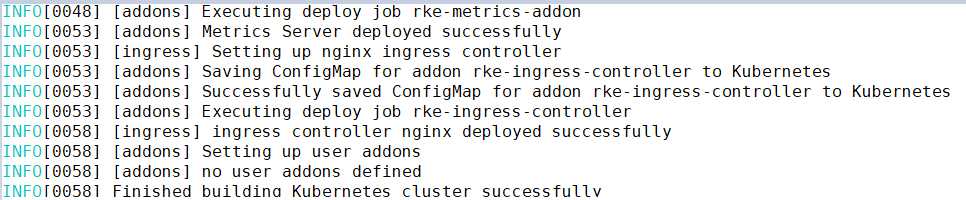

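The k8s cluster was installed successfully with RKE. Some nodes start more slowly than others, so wait a little while for all of them to become ready.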
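One way to see which nodes are still starting is to filter the node list by its STATUS column. The sketch below reads the output of `kubectl get nodes --no-headers` on standard input; `not_ready_nodes` is a hypothetical helper, and the sample text is made up for illustration rather than captured from the cluster in this article.

```shell
#!/bin/sh
# Read `kubectl get nodes --no-headers` output on stdin and print the names
# of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Hypothetical sample output, for illustration only.
sample='node1 Ready controlplane,etcd,worker 5m v1.18.3
node2 NotReady controlplane,etcd,worker 5m v1.18.3
node3 Ready controlplane,etcd,worker 5m v1.18.3'

printf '%s\n' "$sample" | not_ready_nodes   # prints node2
# Against a live cluster:
#   kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means every node reports Ready.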

Installing Rancher on the newly created k8s cluster

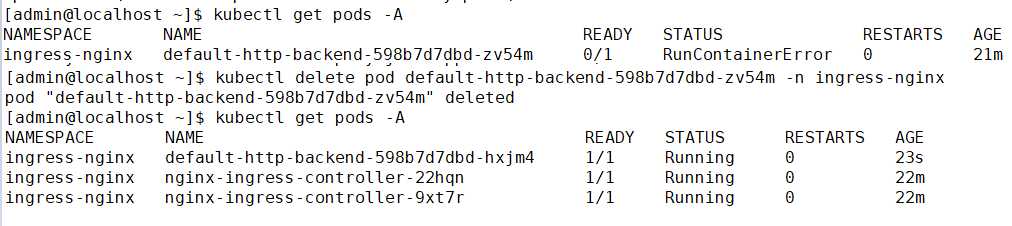
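The screenshot is the only record of the exact commands used here. As a rough sketch of what a Helm-based Rancher installation usually looks like, the helper below prints the commands for the stable chart repository. The hostname `rancher.example.com` is a placeholder, `rancher_install_cmds` is a hypothetical helper, and with the chart's default certificate settings cert-manager has to be installed in the cluster first, which is not shown.

```shell
#!/bin/sh
# Print the Helm commands for installing Rancher. The argument is the DNS
# name users will reach Rancher at; rancher.example.com is a placeholder.
rancher_install_cmds() {
  host="$1"
  echo "helm repo add rancher-stable https://releases.rancher.com/server-charts/stable"
  echo "helm repo update"
  echo "kubectl create namespace cattle-system"
  echo "helm install rancher rancher-stable/rancher --namespace cattle-system --set hostname=${host}"
}

rancher_install_cmds rancher.example.com
# After reviewing the output, run the printed commands on the host that
# holds the kubeconfig.
```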

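To restart a Pod, simply delete it; the controller that owns it creates a replacement.

Cleaning up the RKE environment

Run the following commands on each of rancher-node-1, 2 and 3.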

mkdir -p rancher
cat > rancher/clear.sh << 'EOF'
# The quoted 'EOF' keeps $1, $2 and $(...) literal while the script is written.
# Unmount kubelet mounts, then remove Kubernetes, Rancher, etcd and CNI data.
df -h | grep kubelet | awk -F % '{print $2}' | xargs -r umount
rm -rf /var/lib/kubelet/*
rm -rf /etc/kubernetes/*
rm -rf /var/lib/rancher/*
rm -rf /var/lib/etcd/*
rm -rf /var/lib/cni/*
rm -rf /var/run/calico
iptables -F && iptables -t nat -F
ip link del flannel.1
rm -rf /var/etcd/
rm -rf /run/kubernetes/
# Remove all containers (with their anonymous volumes) and all named volumes.
docker ps -aq | xargs -r docker rm -fv
docker volume ls -q | xargs -r docker volume rm
rm -rf /etc/cni
rm -rf /opt/cni
systemctl restart docker
EOF
sh rancher/clear.sh

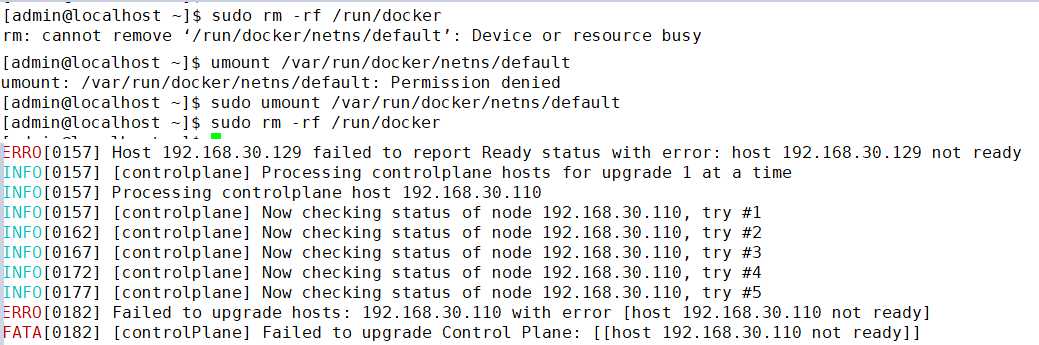

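That completes the cleanup of leftover directories. If problems persist, you may need to uninstall Docker on all nodes.

First check the installed Docker version:

# yum list installed | grep docker
docker-ce.x86_64 18.05.0.ce-3.el7.centos @docker-ce-edge

Uninstall it:

# yum -y remove docker-ce.x86_64

Delete the storage directories:

# rm -rf /etc/docker
# rm -rf /run/docker
# rm -rf /var/lib/dockershim
# rm -rf /var/lib/docker

If a directory cannot be deleted, unmount it first, for example:

# umount /var/lib/docker/devicemapper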

rke up --config=./cluster.yml

rke up is idempotent, so it is safe to rerun. Sometimes it has to be run several times before it succeeds.
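Because rerunning is safe, the repeated attempts can be wrapped in a small loop. This is a generic sketch rather than anything rke provides: `retry` is a hypothetical helper, and the attempt count and delay in the example are arbitrary values.

```shell
#!/bin/sh
# Run a command up to MAX times, pausing DELAY seconds between attempts.
# Returns 0 on the first success, 1 if every attempt fails.
# Usage: retry MAX DELAY COMMAND [ARGS...]
retry() {
  max="$1"
  delay="$2"
  shift 2
  attempt=1
  while [ "$attempt" -le "$max" ]; do
    if "$@"; then
      return 0
    fi
    echo "attempt ${attempt}/${max} failed" >&2
    # Pause only if another attempt will follow.
    [ "$attempt" -lt "$max" ] && sleep "$delay"
    attempt=$((attempt + 1))
  done
  return 1
}

# Example (values are arbitrary):
#   retry 3 30 rke up --config ./cluster.yml
```

If every attempt fails, the log output of the last run is usually the place to look before cleaning the nodes and starting over.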

Problems when repeatedly installing and uninstalling a k8s cluster with rke

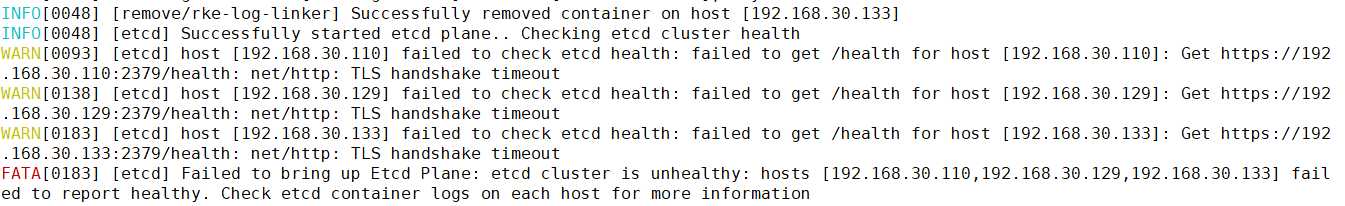

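1. On startup, rke reports that the etcd cluster health check failed

Clear all k8s-related directories on the nodes, uninstall Docker and delete all Docker-related directories, then reinstall Docker.

Finally, run the rke up command again.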
