ORBSLAM2代码阅读-system.cpp

Posted polipolu

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了ORBSLAM2代码阅读-system.cpp相关的知识,希望对你有一定的参考价值。

代码:

/** * This file is part of ORB-SLAM2. * * Copyright (C) 2014-2016 Raúl Mur-Artal <raulmur at unizar dot es> (University of Zaragoza) * For more information see <https://github.com/raulmur/ORB_SLAM2> * * ORB-SLAM2 is free software: you can redistribute it and/or modify * it under the terms of the GNU General Public License as published by * the Free Software Foundation, either version 3 of the License, or * (at your option) any later version. * * ORB-SLAM2 is distributed in the hope that it will be useful, * but WITHOUT ANY WARRANTY; without even the implied warranty of * MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the * GNU General Public License for more details. * * You should have received a copy of the GNU General Public License * along with ORB-SLAM2. If not, see <http://www.gnu.org/licenses/>. */ #include "System.h" #include "Converter.h" #include <thread> #include <pangolin/pangolin.h> #include <iostream> // std::cout, std::fixed #include <iomanip> // std::setprecision bool has_suffix(const std::string &str, const std::string &suffix) { std::size_t index = str.find(suffix, str.size() - suffix.size()); return (index != std::string::npos); } namespace ORB_SLAM2 { System::System(const string &strVocFile, const string &strSettingsFile, const eSensor sensor, const bool bUseViewer):mSensor(sensor),mbReset(false),mbActivateLocalizationMode(false), mbDeactivateLocalizationMode(false) { // Output welcome message cout << endl << "ORB-SLAM2 Copyright (C) 2014-2016 Raul Mur-Artal, University of Zaragoza." << endl << "This program comes with ABSOLUTELY NO WARRANTY;" << endl << "This is free software, and you are welcome to redistribute it" << endl << "under certain conditions. See LICENSE.txt." << endl << endl; cout << "Input sensor was set to: "; if(mSensor==MONOCULAR) cout << "Monocular" << endl; else if(mSensor==STEREO) cout << "Stereo" << endl; else if(mSensor==RGBD) cout << "RGB-D" << endl; //Check settings file cv::FileStorage fsSettings(strSettingsFile.c_str(), cv::FileStorage::READ); if(!fsSettings.isOpened()) { cerr << "Failed to open settings file at: " << strSettingsFile << endl; exit(-1); } //Load ORB Vocabulary cout << endl << "Loading ORB Vocabulary. This could take a while..." << endl; mpVocabulary = new ORBVocabulary(); bool bVocLoad = false; // chose loading method based on file extension if (has_suffix(strVocFile, ".txt")) bVocLoad = mpVocabulary->loadFromTextFile(strVocFile); else if(has_suffix(strVocFile, ".bin")) bVocLoad = mpVocabulary->loadFromBinaryFile(strVocFile); else bVocLoad = false; if(!bVocLoad) { cerr << "Wrong path to vocabulary. " << endl; cerr << "Failed to open at: " << strVocFile << endl; exit(-1); } cout << "Vocabulary loaded!" << endl << endl; //Create KeyFrame Database mpKeyFrameDatabase = new KeyFrameDatabase(*mpVocabulary); //Create the Map mpMap = new Map(); //Create Drawers. These are used by the Viewer mpFrameDrawer = new FrameDrawer(mpMap); mpMapDrawer = new MapDrawer(mpMap, strSettingsFile); //Initialize the Tracking thread //(it will live in the main thread of execution, the one that called this constructor) mpTracker = new Tracking(this, mpVocabulary, mpFrameDrawer, mpMapDrawer, mpMap, mpKeyFrameDatabase, strSettingsFile, mSensor); //Initialize the Local Mapping thread and launch mpLocalMapper = new LocalMapping(mpMap, mSensor==MONOCULAR); mptLocalMapping = new thread(&ORB_SLAM2::LocalMapping::Run,mpLocalMapper); //Initialize the Loop Closing thread and launch mpLoopCloser = new LoopClosing(mpMap, mpKeyFrameDatabase, mpVocabulary, mSensor!=MONOCULAR); mptLoopClosing = new thread(&ORB_SLAM2::LoopClosing::Run, mpLoopCloser); //Initialize the Viewer thread and launch mpViewer = new Viewer(this, mpFrameDrawer,mpMapDrawer,mpTracker,strSettingsFile); if(bUseViewer) mptViewer = new thread(&Viewer::Run, mpViewer); mpTracker->SetViewer(mpViewer); //Set pointers between threads mpTracker->SetLocalMapper(mpLocalMapper); mpTracker->SetLoopClosing(mpLoopCloser); mpLocalMapper->SetTracker(mpTracker); mpLocalMapper->SetLoopCloser(mpLoopCloser); mpLoopCloser->SetTracker(mpTracker); mpLoopCloser->SetLocalMapper(mpLocalMapper); } cv::Mat System::TrackStereo(const cv::Mat &imLeft, const cv::Mat &imRight, const double ×tamp) { if(mSensor!=STEREO) { cerr << "ERROR: you called TrackStereo but input sensor was not set to STEREO." << endl; exit(-1); } // Check mode change { unique_lock<mutex> lock(mMutexMode); if(mbActivateLocalizationMode) { mpLocalMapper->RequestStop(); // Wait until Local Mapping has effectively stopped while(!mpLocalMapper->isStopped()) { //usleep(1000); std::this_thread::sleep_for(std::chrono::milliseconds(1)); } mpTracker->InformOnlyTracking(true);// 定位时,只跟踪 mbActivateLocalizationMode = false; } if(mbDeactivateLocalizationMode) { mpTracker->InformOnlyTracking(false); mpLocalMapper->Release(); mbDeactivateLocalizationMode = false; } } // Check reset { unique_lock<mutex> lock(mMutexReset); if(mbReset) { mpTracker->Reset(); mbReset = false; } } return mpTracker->GrabImageStereo(imLeft,imRight,timestamp); } cv::Mat System::TrackRGBD(const cv::Mat &im, const cv::Mat &depthmap, const double ×tamp) { if(mSensor!=RGBD) { cerr << "ERROR: you called TrackRGBD but input sensor was not set to RGBD." << endl; exit(-1); } // Check mode change { unique_lock<mutex> lock(mMutexMode); if(mbActivateLocalizationMode) { mpLocalMapper->RequestStop(); // Wait until Local Mapping has effectively stopped while(!mpLocalMapper->isStopped()) { //usleep(1000); std::this_thread::sleep_for(std::chrono::milliseconds(1)); } mpTracker->InformOnlyTracking(true);// 定位时,只跟踪 mbActivateLocalizationMode = false; } if(mbDeactivateLocalizationMode) { mpTracker->InformOnlyTracking(false); mpLocalMapper->Release(); mbDeactivateLocalizationMode = false; } } // Check reset { unique_lock<mutex> lock(mMutexReset); if(mbReset) { mpTracker->Reset(); mbReset = false; } } return mpTracker->GrabImageRGBD(im,depthmap,timestamp); } cv::Mat System::TrackMonocular(const cv::Mat &im, const double ×tamp) { if(mSensor!=MONOCULAR) { cerr << "ERROR: you called TrackMonocular but input sensor was not set to Monocular." << endl; exit(-1); } // Check mode change { unique_lock<mutex> lock(mMutexMode); if(mbActivateLocalizationMode) { mpLocalMapper->RequestStop(); // Wait until Local Mapping has effectively stopped while(!mpLocalMapper->isStopped()) { //usleep(1000); std::this_thread::sleep_for(std::chrono::milliseconds(1)); } mpTracker->InformOnlyTracking(true);// 定位时,只跟踪 mbActivateLocalizationMode = false;// 防止重复执行 } if(mbDeactivateLocalizationMode) { mpTracker->InformOnlyTracking(false); mpLocalMapper->Release(); mbDeactivateLocalizationMode = false;// 防止重复执行 } } // Check reset { unique_lock<mutex> lock(mMutexReset); if(mbReset) { mpTracker->Reset(); mbReset = false; } } return mpTracker->GrabImageMonocular(im,timestamp); } void System::ActivateLocalizationMode() { unique_lock<mutex> lock(mMutexMode); mbActivateLocalizationMode = true; } void System::DeactivateLocalizationMode() { unique_lock<mutex> lock(mMutexMode); mbDeactivateLocalizationMode = true; } void System::Reset() { unique_lock<mutex> lock(mMutexReset); mbReset = true; } void System::Shutdown() { mpLocalMapper->RequestFinish(); mpLoopCloser->RequestFinish(); if(mpViewer) { mpViewer->RequestFinish(); while(!mpViewer->isFinished()) std::this_thread::sleep_for(std::chrono::milliseconds(5)); } // Wait until all thread have effectively stopped while(!mpLocalMapper->isFinished() || !mpLoopCloser->isFinished() || mpLoopCloser->isRunningGBA()) { //usleep(5000); std::this_thread::sleep_for(std::chrono::milliseconds(5)); } if(mpViewer) pangolin::BindToContext("ORB-SLAM2: Map Viewer"); } void System::SaveTrajectoryTUM(const string &filename) { cout << endl << "Saving camera trajectory to " << filename << " ..." << endl; if(mSensor==MONOCULAR) { cerr << "ERROR: SaveTrajectoryTUM cannot be used for monocular." << endl; return; } vector<KeyFrame*> vpKFs = mpMap->GetAllKeyFrames(); sort(vpKFs.begin(),vpKFs.end(),KeyFrame::lId); // Transform all keyframes so that the first keyframe is at the origin. // After a loop closure the first keyframe might not be at the origin. cv::Mat Two = vpKFs[0]->GetPoseInverse(); ofstream f; f.open(filename.c_str()); f << fixed; // Frame pose is stored relative to its reference keyframe (which is optimized by BA and pose graph). // We need to get first the keyframe pose and then concatenate the relative transformation. // Frames not localized (tracking failure) are not saved. // For each frame we have a reference keyframe (lRit), the timestamp (lT) and a flag // which is true when tracking failed (lbL). list<ORB_SLAM2::KeyFrame*>::iterator lRit = mpTracker->mlpReferences.begin(); list<double>::iterator lT = mpTracker->mlFrameTimes.begin(); list<bool>::iterator lbL = mpTracker->mlbLost.begin(); for(list<cv::Mat>::iterator lit=mpTracker->mlRelativeFramePoses.begin(), lend=mpTracker->mlRelativeFramePoses.end();lit!=lend;lit++, lRit++, lT++, lbL++) { if(*lbL) continue; KeyFrame* pKF = *lRit; cv::Mat Trw = cv::Mat::eye(4,4,CV_32F); // If the reference keyframe was culled, traverse the spanning tree to get a suitable keyframe. while(pKF->isBad()) { Trw = Trw*pKF->mTcp; pKF = pKF->GetParent(); } Trw = Trw*pKF->GetPose()*Two; cv::Mat Tcw = (*lit)*Trw; cv::Mat Rwc = Tcw.rowRange(0,3).colRange(0,3).t(); cv::Mat twc = -Rwc*Tcw.rowRange(0,3).col(3); vector<float> q = Converter::toQuaternion(Rwc); f << setprecision(6) << *lT << " " << setprecision(9) << twc.at<float>(0) << " " << twc.at<float>(1) << " " << twc.at<float>(2) << " " << q[0] << " " << q[1] << " " << q[2] << " " << q[3] << endl; } f.close(); cout << endl << "trajectory saved!" << endl; } void System::SaveKeyFrameTrajectoryTUM(const string &filename) { cout << endl << "Saving keyframe trajectory to " << filename << " ..." << endl; vector<KeyFrame*> vpKFs = mpMap->GetAllKeyFrames(); sort(vpKFs.begin(),vpKFs.end(),KeyFrame::lId); // Transform all keyframes so that the first keyframe is at the origin. // After a loop closure the first keyframe might not be at the origin. //cv::Mat Two = vpKFs[0]->GetPoseInverse(); ofstream f; f.open(filename.c_str()); f << fixed; for(size_t i=0; i<vpKFs.size(); i++) { KeyFrame* pKF = vpKFs[i]; // pKF->SetPose(pKF->GetPose()*Two); if(pKF->isBad()) continue; cv::Mat R = pKF->GetRotation().t(); vector<float> q = Converter::toQuaternion(R); cv::Mat t = pKF->GetCameraCenter(); f << setprecision(6) << pKF->mTimeStamp << setprecision(7) << " " << t.at<float>(0) << " " << t.at<float>(1) << " " << t.at<float>(2) << " " << q[0] << " " << q[1] << " " << q[2] << " " << q[3] << endl; } f.close(); cout << endl << "trajectory saved!" << endl; } void System::SaveTrajectoryKITTI(const string &filename) { cout << endl << "Saving camera trajectory to " << filename << " ..." << endl; if(mSensor==MONOCULAR) { cerr << "ERROR: SaveTrajectoryKITTI cannot be used for monocular." << endl; return; } vector<KeyFrame*> vpKFs = mpMap->GetAllKeyFrames(); sort(vpKFs.begin(),vpKFs.end(),KeyFrame::lId); // Transform all keyframes so that the first keyframe is at the origin. // After a loop closure the first keyframe might not be at the origin. cv::Mat Two = vpKFs[0]->GetPoseInverse(); ofstream f; f.open(filename.c_str()); f << fixed; // Frame pose is stored relative to its reference keyframe (which is optimized by BA and pose graph). // We need to get first the keyframe pose and then concatenate the relative transformation. // Frames not localized (tracking failure) are not saved. // For each frame we have a reference keyframe (lRit), the timestamp (lT) and a flag // which is true when tracking failed (lbL). list<ORB_SLAM2::KeyFrame*>::iterator lRit = mpTracker->mlpReferences.begin(); list<double>::iterator lT = mpTracker->mlFrameTimes.begin(); for(list<cv::Mat>::iterator lit=mpTracker->mlRelativeFramePoses.begin(), lend=mpTracker->mlRelativeFramePoses.end();lit!=lend;lit++, lRit++, lT++) { ORB_SLAM2::KeyFrame* pKF = *lRit; cv::Mat Trw = cv::Mat::eye(4,4,CV_32F); while(pKF->isBad()) { // cout << "bad parent" << endl; Trw = Trw*pKF->mTcp; pKF = pKF->GetParent(); } Trw = Trw*pKF->GetPose()*Two; cv::Mat Tcw = (*lit)*Trw; cv::Mat Rwc = Tcw.rowRange(0,3).colRange(0,3).t(); cv::Mat twc = -Rwc*Tcw.rowRange(0,3).col(3); f << setprecision(9) << Rwc.at<float>(0,0) << " " << Rwc.at<float>(0,1) << " " << Rwc.at<float>(0,2) << " " << twc.at<float>(0) << " " << Rwc.at<float>(1,0) << " " << Rwc.at<float>(1,1) << " " << Rwc.at<float>(1,2) << " " << twc.at<float>(1) << " " << Rwc.at<float>(2,0) << " " << Rwc.at<float>(2,1) << " " << Rwc.at<float>(2,2) << " " << twc.at<float>(2) << endl; } f.close(); cout << endl << "trajectory saved!" << endl; } } //namespace ORB_SLAM

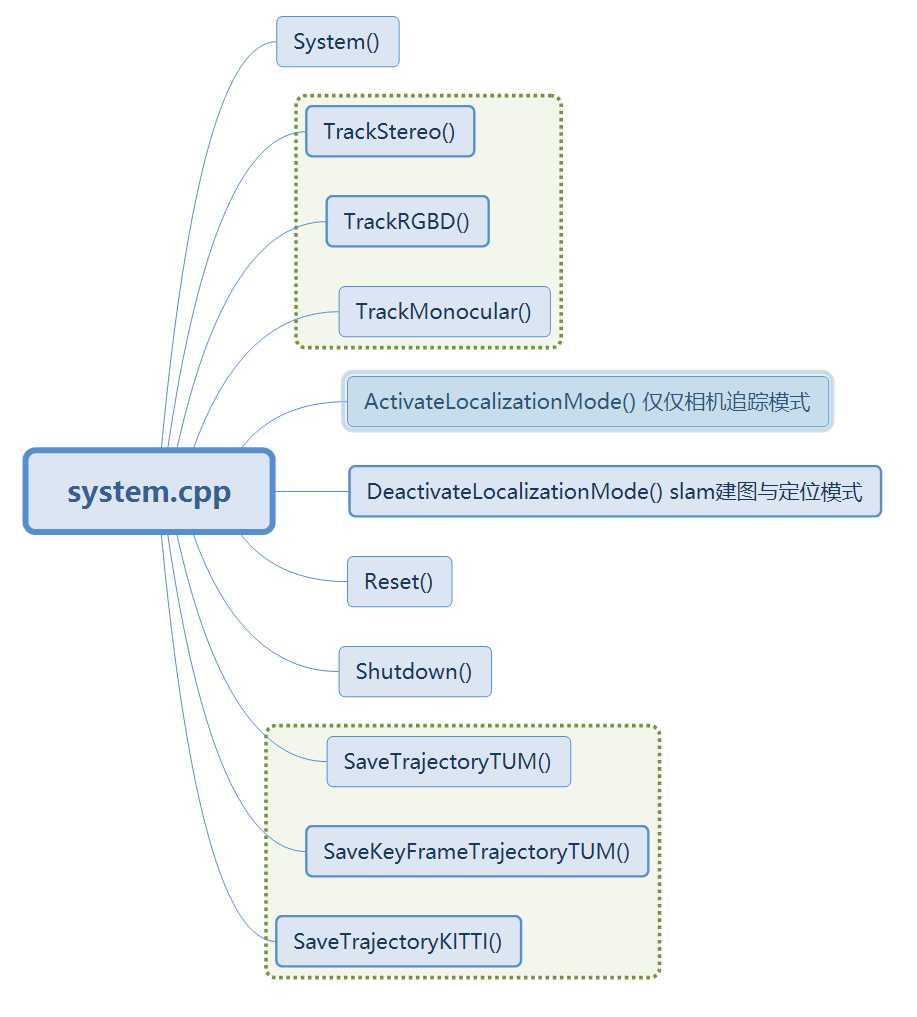

结构:

□System()

1.判断单目/双目/RGBD

2.判断setting文件是否打开(.yaml文件)

3.加载词库

1、mpVocabulary = new ORBVocabulary();

(mpVocabulary在system.h文件中有定义:ORBVocabulary* mpVocabulary;)

2、判断vocabulary文件是二进制还是txt文件,存不存在

4. 创建 KeyFrame Database

mpKeyFrameDatabase = new KeyFrameDatabase(*mpVocabulary);

5.创建Map

mpMap = new Map();

6.创建viewer用到的 Drawers

mpFrameDrawer = new FrameDrawer(mpMap); mpMapDrawer = new MapDrawer(mpMap, strSettingsFile);

7.初始化线程

1.初始化tracking线程

2.初始化Local Mapping线程

3.初始化Loop Closing 线程

4.初始化Viewer线程

8.数据流进入tracking线程

把前面初始化好的数据都塞进去

mpTracker->SetViewer(mpViewer); mpTracker->SetLocalMapper(mpLocalMapper); mpTracker->SetLoopClosing(mpLoopCloser); mpLocalMapper->SetTracker(mpTracker); mpLocalMapper->SetLoopCloser(mpLoopCloser); mpLoopCloser->SetTracker(mpTracker); mpLoopCloser->SetLocalMapper(mpLocalMapper);

□TrackStereo/ TrackMonocular/ TrackRGBD

1.确定一下是不是双目/单目/RGBD

2.确定什么模式:只跟踪/定位与建图模式

3.确认是否复位

4.图像处理和跟踪,具体在tracking.cpp找GrabImageMonocular/ GrabImageStereo/ GrabImageRGBD

C++知识:

1.new

“new”是C++的一个关键字,同时也是操作符。

当我们使用关键字new在堆上动态创建一个对象时,它实际上做了三件事:获得一块内存空间、调用构造函数、返回正确的指针。

定义一个类:

class A

{

int i;

public:

A(int _i) :i(_i*_i) {}

void Say() { printf("i=%d/n", i); }

};

使用new:

A* pa = new A(3);

参考:https://blog.csdn.net/nishisiyuetian/article/details/81702180

以上是关于ORBSLAM2代码阅读-system.cpp的主要内容,如果未能解决你的问题,请参考以下文章