05-ResNet18 Image Classification

Posted by 赵家小伙儿

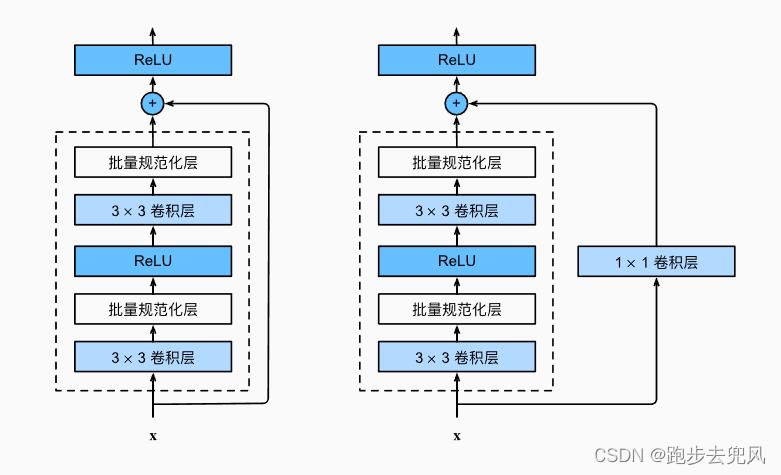

Figure 1: The ResNet residual block
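
As a brief recap of what the residual block computes, here is the standard formulation from the ResNet paper (general background, not something specific to this post). With $\mathcal{F}$ denoting the stacked convolution layers and $x$ the block input, the block output before the final ReLU is:

$$
y = \mathcal{F}(x, \{W_i\}) + x
$$

When the block changes the channel count or the spatial size, $x$ and $\mathcal{F}(x)$ no longer have the same shape, so the shortcut applies a projection $W_s$ (a 1×1 convolution in practice):

$$
y = \mathcal{F}(x, \{W_i\}) + W_s x
$$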

Figure 2: ResNet18 network architecture
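
To make the architecture more concrete, the sketch below traces the feature-map size through the stem and the four stages for a 32×32 CIFAR-10 image, using the usual convolution output-size formula `(n + 2p - k) // s + 1`. The stage channels (64, 128, 256, 512) and strides (1, 2, 2, 2) are taken from the implementation in this post. The function names `conv_out` and `trace_resnet18` are made up for illustration, and the stem stride is left as a parameter so that different choices can be compared.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_resnet18(size=32, stem_stride=3):
    """Return a list of (name, channels, spatial_size) for the stem and four stages."""
    shapes = []
    # Stem: a single 3x3 convolution with padding 1.
    size = conv_out(size, kernel=3, stride=stem_stride, padding=1)
    shapes.append(("stem", 64, size))
    stages = [("stage1", 64, 1), ("stage2", 128, 2), ("stage3", 256, 2), ("stage4", 512, 2)]
    for name, channels, stride in stages:
        # Only the first 3x3 convolution of a stage applies the stride;
        # every other 3x3 convolution (stride 1, padding 1) preserves the size.
        size = conv_out(size, kernel=3, stride=stride, padding=1)
        shapes.append((name, channels, size))
    return shapes


if __name__ == "__main__":
    for stem_stride in (3, 1):
        print("stem stride", stem_stride)
        for name, channels, size in trace_resnet18(32, stem_stride):
            print("  %-6s %3d channels, %2d x %-2d" % (name, channels, size, size))
```

In either case the adaptive average pooling reduces the last feature map to 1×1, so the fully connected layer always receives 512 features.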

ResNet18 implementation for the CIFAR-10 dataset (PyTorch):

```python
import torch
from torch import nn


# ResNet18_BasicBlock: the residual unit
class ResNet18_BasicBlock(nn.Module):
    def __init__(self, input_channel, output_channel, stride, use_conv1_1):
        super(ResNet18_BasicBlock, self).__init__()
        # First 3x3 convolution (applies the stride)
        self.conv1 = nn.Conv2d(input_channel, output_channel, kernel_size=3, stride=stride, padding=1)
        self.bn1 = nn.BatchNorm2d(output_channel)
        # Second 3x3 convolution
        self.conv2 = nn.Conv2d(output_channel, output_channel, kernel_size=3, stride=1, padding=1)
        self.bn2 = nn.BatchNorm2d(output_channel)
        # 1x1 convolution on the shortcut: matches the channel count
        # (and the spatial size when stride > 1) so the two branches can be added
        self.extra = nn.Sequential(
            nn.Conv2d(input_channel, output_channel, kernel_size=1, stride=stride, padding=0),
            nn.BatchNorm2d(output_channel)
        )
        self.use_conv1_1 = use_conv1_1
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        # Each convolution gets its own BatchNorm layer
        out = self.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        # Residual connection: the (B, C, H, W) shapes must match before adding.
        # Use the 1x1 projection when the shape changes, the identity otherwise.
        shortcut = self.extra(x) if self.use_conv1_1 else x
        out = self.relu(out + shortcut)
        return out


# Build the ResNet18 model
class ResNet18(nn.Module):
    def __init__(self):
        super(ResNet18, self).__init__()
        # Stem. Note: stride=3 shrinks a 32x32 input to 11x11;
        # CIFAR-style ResNets commonly use stride=1 here.
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, stride=3, padding=1),
            nn.BatchNorm2d(64)
        )
        self.block1_1 = ResNet18_BasicBlock(input_channel=64, output_channel=64, stride=1, use_conv1_1=False)
        self.block1_2 = ResNet18_BasicBlock(input_channel=64, output_channel=64, stride=1, use_conv1_1=False)
        self.block2_1 = ResNet18_BasicBlock(input_channel=64, output_channel=128, stride=2, use_conv1_1=True)
        self.block2_2 = ResNet18_BasicBlock(input_channel=128, output_channel=128, stride=1, use_conv1_1=False)
        self.block3_1 = ResNet18_BasicBlock(input_channel=128, output_channel=256, stride=2, use_conv1_1=True)
        self.block3_2 = ResNet18_BasicBlock(input_channel=256, output_channel=256, stride=1, use_conv1_1=False)
        self.block4_1 = ResNet18_BasicBlock(input_channel=256, output_channel=512, stride=2, use_conv1_1=True)
        self.block4_2 = ResNet18_BasicBlock(input_channel=512, output_channel=512, stride=1, use_conv1_1=False)
        self.FC_layer = nn.Linear(512 * 1 * 1, 10)
        self.adaptive_avg_pool2d = nn.AdaptiveAvgPool2d((1, 1))
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x = self.relu(self.conv1(x))
        # The four ResNet18 stages, two residual blocks each
        x = self.block1_1(x)
        x = self.block1_2(x)
        x = self.block2_1(x)
        x = self.block2_2(x)
        x = self.block3_1(x)
        x = self.block3_2(x)
        x = self.block4_1(x)
        x = self.block4_2(x)
        # Global average pooling down to 1x1
        x = self.adaptive_avg_pool2d(x)
        # Flatten to (B, 512) for the fully connected layer
        x = x.view(x.size(0), -1)
        x = self.FC_layer(x)
        return x
```

classfyNet_main.py

```python
import time

import torch
from matplotlib import pyplot as plt
from torch import nn, optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

from Lenet5 import Lenet5_new  # Lenet5.py is a separate file not shown in this post
from Resnet18 import ResNet18


def main():
    print("Load datasets...")
    # transforms.RandomHorizontalFlip(p=0.5): flip the image horizontally with probability 0.5
    # transforms.ToTensor(): reorder (H, W, C) -> (C, H, W) and scale pixels from 0-255 to 0-1 (divide by 255)
    # transforms.Normalize: apply (x - mean) / std per channel to the already-scaled tensor;
    # the values below are the ImageNet channel statistics, so the result is
    # roughly standardized rather than confined to (-1, 1)
    transform_train = transforms.Compose([
        transforms.RandomHorizontalFlip(p=0.5),
        transforms.ToTensor(),
        transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))
    ])
    transform_test = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))
    ])

    # Download the dataset with the built-in helper
    train_dataset = datasets.CIFAR10(root="./data/Cifar10/", train=True, transform=transform_train, download=True)
    test_dataset = datasets.CIFAR10(root="./data/Cifar10/", train=False, transform=transform_test, download=True)
    print(len(train_dataset), len(test_dataset))

    Batch_size = 64
    train_loader = DataLoader(train_dataset, batch_size=Batch_size, shuffle=True)
    test_loader = DataLoader(test_dataset, batch_size=Batch_size, shuffle=False)

    # Select the device (GPU if available)
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

    # Initialise the model; to switch models, change only this line
    # model = Lenet5_new().to(device)
    model = ResNet18().to(device)

    # Loss function and optimizer
    criterion = nn.CrossEntropyLoss()  # softmax cross-entropy for multi-class classification
    opt = optim.SGD(model.parameters(), lr=0.001, momentum=0.8, weight_decay=0.001)

    # Learning-rate schedule: every step_size epochs, lr = lr * gamma
    schedule = optim.lr_scheduler.StepLR(opt, step_size=10, gamma=0.6, last_epoch=-1)

    # Start training
    print("Start Train...")
    epochs = 100

    train_acc_list = []
    test_acc_list = []
    epochs_list = []

    for epoch in range(0, epochs):
        start = time.time()
        model.train()
        running_loss = 0.0
        batch_num = 0

        for i, (inputs, labels) in enumerate(train_loader):
            inputs, labels = inputs.to(device), labels.to(device)
            # Forward pass
            outputs = model(inputs)
            # Compute the loss
            loss = criterion(outputs, labels)
            # Reset the gradients
            opt.zero_grad()
            # Backward pass: compute the gradients
            loss.backward()
            # Update the parameters from the gradients
            opt.step()
            # Accumulate the loss over all batches in this epoch
            running_loss += loss.item()
            batch_num += 1

        epochs_list.append(epoch)

        # Print the current learning rate at the end of each epoch
        lr_1 = opt.param_groups[0]['lr']
        print("learn_rate:%.15f" % lr_1)
        schedule.step()

        end = time.time()
        print('epoch = %d/%d, batch_num = %d, loss = %.6f, time = %.3f'
              % (epoch + 1, epochs, batch_num, running_loss / batch_num, end - start))

        # Evaluate on the training and test sets after every epoch
        model.eval()
        train_correct = 0.0
        train_total = 0
        test_correct = 0.0
        test_total = 0

        # Evaluation needs no gradients or backward pass
        with torch.no_grad():
            for inputs, labels in train_loader:
                inputs, labels = inputs.to(device), labels.to(device)
                outputs = model(inputs)
                pred = outputs.argmax(dim=1)  # index of the largest logit in each row
                train_total += inputs.size(0)
                train_correct += torch.eq(pred, labels).sum().item()

            for inputs, labels in test_loader:
                inputs, labels = inputs.to(device), labels.to(device)
                outputs = model(inputs)
                pred = outputs.argmax(dim=1)  # index of the largest logit in each row
                test_total += inputs.size(0)
                test_correct += torch.eq(pred, labels).sum().item()

        print("train_total = %d, Accuracy = %.5f %%, test_total= %d, Accuracy = %.5f %%"
              % (train_total, 100 * train_correct / train_total, test_total, 100 * test_correct / test_total))
        train_acc_list.append(100 * train_correct / train_total)
        test_acc_list.append(100 * test_correct / test_total)

    # Plot training and test accuracy against the epoch index
    fig = plt.figure(figsize=(4, 4))
    plt.plot(epochs_list, train_acc_list, label='train_acc_list')
    plt.plot(epochs_list, test_acc_list, label='test_acc_list')
    plt.legend()
    plt.title("train_test_acc")
    plt.savefig('resnet18_cc_epoch_{:04d}.png'.format(epochs))
    plt.close()


if __name__ == "__main__":
    main()
```
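
The training script uses `StepLR` with `step_size=10` and `gamma=0.6`, starting from `lr=0.001`, which multiplies the learning rate by `gamma` once every `step_size` epochs. As a rough check of what that means over 100 epochs, the pure-Python sketch below evaluates the closed form `base_lr * gamma ** (epoch // step_size)`. The helper name `step_lr` is hypothetical and is not part of PyTorch.

```python
def step_lr(epoch, base_lr=0.001, step_size=10, gamma=0.6):
    """Learning rate in effect during `epoch` (0-based) under step decay."""
    return base_lr * gamma ** (epoch // step_size)


if __name__ == "__main__":
    for epoch in (0, 9, 10, 50, 99):
        print("epoch %3d: lr = %.8f" % (epoch, step_lr(epoch)))
```

With these settings the rate during the last ten epochs is about 1% of its starting value, so most of the learning happens early in the run.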
