Crawling the Beijing Municipal Government "Capital Window" (首都之窗) Letter List with Python [Follow-up]

Posted 初等变换不改变矩阵的秩


Date: 2020.01.23

Blog post no.: 131

Thursday

[If you want to use the code in this post, please leave a comment below first and then go ahead (just let me know).]

// Series overview

1. Preparation

2. Crawling (this post)

3. Data processing

4. Presenting the results

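I reworked the crawler script and ran it. The first run got stuck on page 27, because that page contains a letter of type "投诉" (complaint), which I had not anticipated. To solve this I rewrote the code. The new class differs from the previous overall class in the following ways: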

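1. Fetching the next page requires calling JavaScript on the page, so I downloaded the Firefox driver from its website.

Installation guide: https://www.cnblogs.com/nuomin/p/8486963.html

This is Selenium + WebDriver in action: it drives the browser the way a person would (and it can of course execute JavaScript).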

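    # Imports
    from selenium import webdriver

    # Launch the browser through the driver and open the page
    profile = webdriver.Firefox()
    profile.get('http://www.beijing.gov.cn/hudong/hdjl/com.web.search.mailList.flow')

    # Run JavaScript in the browser
    # ----[ pageTurning(3) is the page's own "next page" function (no JS file or function needs to be imported) ]
    profile.execute_script("pageTurning(3);")

    # Close the browser (a WebDriver has no __close__ method; quit() ends the session)
    profile.quit()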

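2. Because the crawler has to turn pages, it must execute that JavaScript every time it finishes collecting the links on a page.

3. A 咨询 (consultation) letter may not have received a reply, so the corresponding fields are filled with a blank instead (when the error is thrown).

4. When the first page is written to the file, the file must be opened in "w+" mode; after that, writes use the append mode "a+".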
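Points 3 and 4 can be illustrated with a small sketch that runs without a browser. The helper names `safe_text` and `write_records` and the sample rows are hypothetical and are not part of the original crawler. The sketch also uses the built-in `open` rather than `codecs.open`. It only shows the two ideas: fall back to a blank when a field cannot be extracted, and truncate the file for the first record but append afterwards.

```python
import os
import tempfile


def safe_text(extract):
    """Return extract(), or a single blank if the field is missing (hypothetical helper)."""
    try:
        value = extract()
    except (IndexError, AttributeError):
        # For example, the reply block is absent on an unanswered letter.
        return " "
    return value if value else " "


def write_records(path, rows):
    """Write tab-separated rows: "w+" for the first row, "a+" for the rest."""
    for index, row in enumerate(rows):
        mode = "w+" if index == 0 else "a+"
        with open(path, mode, encoding="utf-8") as f:
            f.write("\t".join(row) + "\n")


if __name__ == "__main__":
    demo_path = os.path.join(tempfile.mkdtemp(), "emails.txt")
    reply = safe_text(lambda: [][0])  # simulates a missing reply
    write_records(demo_path, [["咨询", "question text", reply]])
    with open(demo_path, encoding="utf-8") as f:
        print(repr(f.read()))
```

Opening with "w+" on the first record means a rerun starts from an empty file instead of appending to the output of an earlier run.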

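First, the modified Bean class. (New: I need to record whether each letter is a "投诉" (complaint), "建议" (suggestion) or "咨询" (consultation), so I added a method that takes this string as a parameter and writes it to the file at the start of each line.)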

    import codecs


    # [ Format of the saved data ]
    class Bean:

        # Constructor
        def __init__(self, asker, responser, askTime, responseTime, title,
                     questionSupport, responseSupport, responseUnsupport,
                     questionText, responseText):
            self.asker = asker
            self.responser = responser
            self.askTime = askTime
            self.responseTime = responseTime
            self.title = title
            self.questionSupport = questionSupport
            self.responseSupport = responseSupport
            self.responseUnsupport = responseUnsupport
            self.questionText = questionText
            self.responseText = responseText

        # Print the record to the console (for testing)
        def display(self):
            print("Asker: " + self.asker)
            print("Responder: " + self.responser)
            print("Asked at: " + self.askTime)
            print("Answered at: " + self.responseTime)
            print("Title: " + self.title)
            print("Question support count: " + self.questionSupport)
            print("Reply likes: " + self.responseSupport)
            print("Reply dislikes: " + self.responseUnsupport)
            print("Question text: " + self.questionText)
            print("Reply text: " + self.responseText)

        # Join all fields into one tab-separated line
        def toString(self):
            return "\t".join([
                self.asker,
                self.responser,
                self.askTime,
                self.responseTime,
                self.title,
                self.questionSupport,
                self.responseSupport,
                self.responseUnsupport,
                self.questionText,
                self.responseText,
            ])

        # Write the record to a file
        def addToFile(self, fpath, model):
            with codecs.open(fpath, model, 'utf-8') as f:
                f.write(self.toString() + "\n")

        # Write the record to a file, prefixed with the letter type
        def addToFile_s(self, fpath, model, kind):
            with codecs.open(fpath, model, 'utf-8') as f:
                f.write(kind + "\t" + self.toString() + "\n")

        # --------------------[ Basic data ]
        # Asker
        asker = ""
        # Responder
        responser = ""
        # Time the question was asked
        askTime = ""
        # Time the reply was given
        responseTime = ""
        # Question title
        title = ""
        # Question support count
        questionSupport = ""
        # Reply likes
        responseSupport = ""
        # Reply dislikes
        responseUnsupport = ""
        # Question text
        questionText = ""
        # Reply text
        responseText = ""

Next is the overall processing class.

    import time

    import parsel
    from selenium import webdriver

    # DetailConnector (used below) is not defined in this listing.


    # [ Object that crawls consecutive pages ]
    class WebPerConnector:
        profile = ""

        isAccess = True

        # ---[ Constructor ]
        def __init__(self):
            self.profile = webdriver.Firefox()
            self.profile.get('http://www.beijing.gov.cn/hudong/hdjl/com.web.search.mailList.flow')

        # ---[ Release method ]
        def __close__(self):
            self.profile.quit()

        # Return the HTML source of the current page
        def getHTMLText(self):
            a = self.profile.page_source
            return a

        # Collect the links on the first page
        def getFirstChanel(self):
            index_html = self.getHTMLText()
            index_sel = parsel.Selector(index_html)
            links = index_sel.css('div #mailul').css("a[onclick]").extract()
            inNum = len(links)
            for seat in range(0, inNum):
                # The <a> tag for this letter
                pe = links[seat]
                # Position of the first > character
                seat_turol = str(pe).find('>')
                # Position of the first " character
                seat_stnvs = str(pe).find('"')
                # Remove spaces
                pe = str(pe)[seat_stnvs:seat_turol].replace(" ", "")
                # Keep only the arguments of the onclick call
                pe = pe[14:len(pe) - 2]
                pe = pe.replace("'", "")
                # Split into [letter type, original id]
                mor = pe.split(",")
                # ---[ Build the detail URL ]
                url_get_item = ""
                # Choose the endpoint by the first value (the letter type)
                if mor[0] == "咨询":
                    url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId="
                elif mor[0] == "建议":
                    url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.suggest.suggesDetail.flow?originalId="
                elif mor[0] == "投诉":
                    url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.complain.complainDetail.flow?originalId="
                url_get_item = url_get_item + mor[1]

                model = "a+"

                if seat == 0:
                    model = "w+"

                dc = DetailConnector(url_get_item)
                dc.getBean().addToFile_s("../testFile/emails.txt", model, mor[0])
            self.getChannel()

        # Collect the links on every page after the first
        def getNoFirstChannel(self):
            index_html = self.getHTMLText()
            index_sel = parsel.Selector(index_html)
            links = index_sel.css('div #mailul').css("a[onclick]").extract()
            inNum = len(links)
            for seat in range(0, inNum):
                # The <a> tag for this letter
                pe = links[seat]
                # Position of the first > character
                seat_turol = str(pe).find('>')
                # Position of the first " character
                seat_stnvs = str(pe).find('"')
                # Remove spaces
                pe = str(pe)[seat_stnvs:seat_turol].replace(" ", "")
                # Keep only the arguments of the onclick call
                pe = pe[14:len(pe) - 2]
                pe = pe.replace("'", "")
                # Split into [letter type, original id]
                mor = pe.split(",")
                # ---[ Build the detail URL ]
                url_get_item = ""
                # Choose the endpoint by the first value (the letter type)
                if mor[0] == "咨询":
                    url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId="
                elif mor[0] == "建议":
                    url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.suggest.suggesDetail.flow?originalId="
                elif mor[0] == "投诉":
                    url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.complain.complainDetail.flow?originalId="
                url_get_item = url_get_item + mor[1]

                dc = DetailConnector(url_get_item)
                dc.getBean().addToFile_s("../testFile/emails.txt", "a+", mor[0])
            self.getChannel()

        # Turn to the next page, then crawl it (or close the browser on failure)
        def getChannel(self):
            try:
                self.profile.execute_script("pageTurning(3);")
                time.sleep(1)
            except Exception:
                print("-# END #-")
                # Set the flag on the instance; a bare "isAccess = False" only creates a local variable
                self.isAccess = False

            if self.isAccess:
                self.getNoFirstChannel()
            else:
                self.__close__()
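The fixed slice offsets in the link parsing (`pe[14:len(pe) - 2]`) only work while the `onclick` attribute keeps exactly the same shape. As a hedged alternative, here is a minimal sketch that pulls the two quoted arguments out with a regular expression and looks the endpoint up in a dictionary. The names `DETAIL_URLS`, `ONCLICK_ARGS` and `parse_link` are hypothetical, and the function name `letterdetail` and the id in the sample tag are made up for illustration; the real attribute on the site may look different.

```python
import re

# Detail endpoints copied from the crawler above, keyed by letter type.
DETAIL_URLS = {
    "咨询": "http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId=",
    "建议": "http://www.beijing.gov.cn/hudong/hdjl/com.web.suggest.suggesDetail.flow?originalId=",
    "投诉": "http://www.beijing.gov.cn/hudong/hdjl/com.web.complain.complainDetail.flow?originalId=",
}

# Matches onclick="someFunction('type','id')" whatever the function is called.
ONCLICK_ARGS = re.compile(
    r"""onclick\s*=\s*"[^"(]*\(\s*'([^']*)'\s*,\s*'([^']*)'\s*\)"""
)


def parse_link(a_tag_html):
    """Return (letter type, detail URL), or None if the tag cannot be parsed."""
    match = ONCLICK_ARGS.search(a_tag_html)
    if match is None:
        return None
    kind, original_id = match.group(1), match.group(2)
    base = DETAIL_URLS.get(kind)
    if base is None:
        # Unknown letter type: skip it instead of building a broken URL.
        return None
    return kind, base + original_id


if __name__ == "__main__":
    # Assumed sample markup, not copied from the real page.
    sample = '<a onclick="letterdetail(\'投诉\',\'AH20012300001\')" href="#">title</a>'
    print(parse_link(sample))
```

Returning `None` for an unknown type is a design choice of this sketch: a new letter type (like the unexpected "投诉" on page 27) is skipped visibly rather than producing a request to a URL with an empty base.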

The corresponding run code (this time it is not a test):

    wpc = WebPerConnector()
    wpc.getFirstChanel()

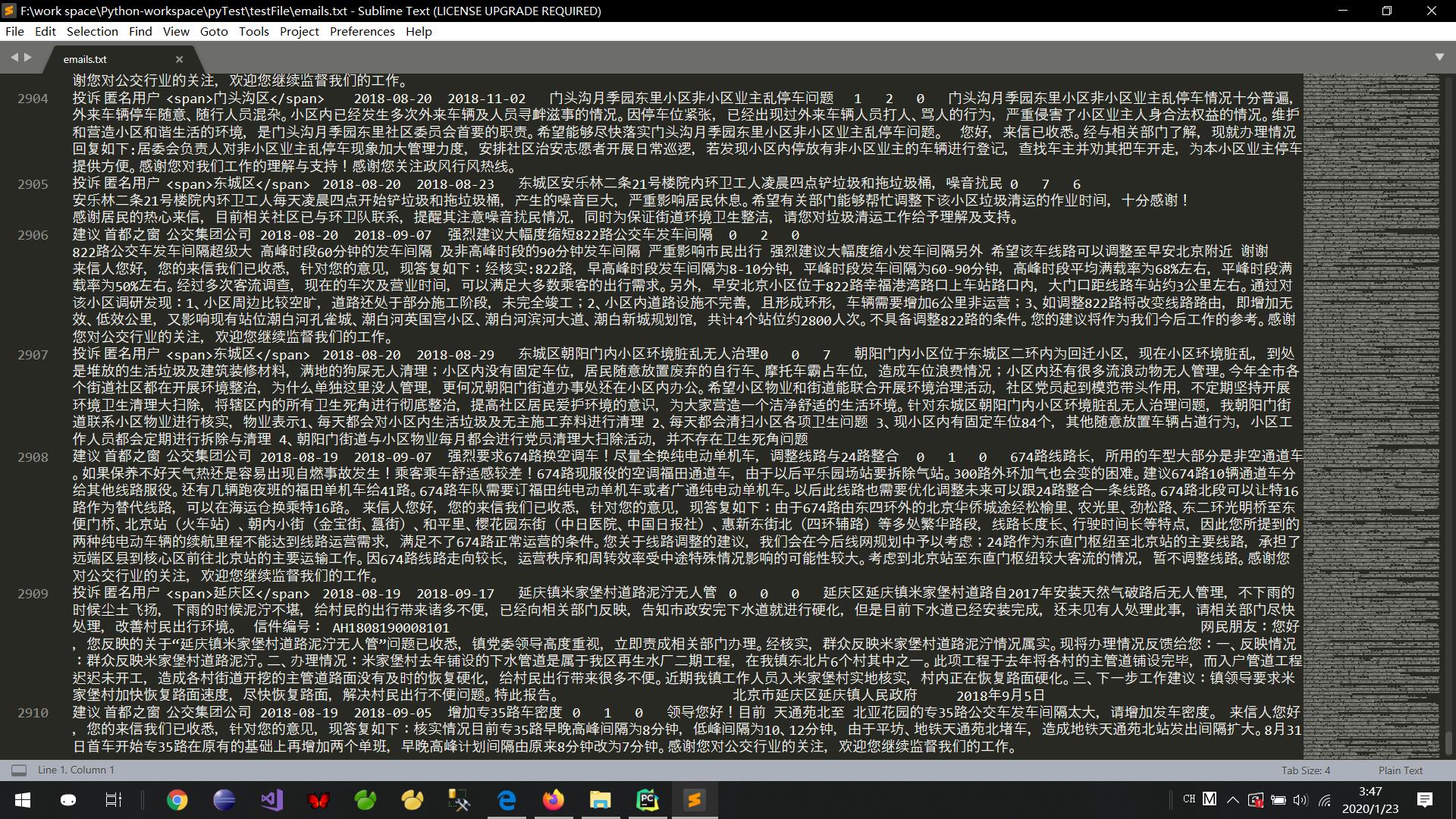

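In my own run it got as far as page 486 and then the page crashed; judging from the error message it was probably an overflow... At that point there were 2,910 records and the file size was 3,553 KB.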
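The post only says the error looked like an overflow. One plausible cause (an assumption, not confirmed by the source) is Python's recursion limit: `getNoFirstChannel` and `getChannel` call each other once per page, so the call stack grows by two frames per page, and 486 pages comes close to the default limit of 1000 frames. Under that assumption, a loop avoids the problem. The sketch below uses hypothetical names (`crawl_pages`, `FakeDriver`) and a stand-in driver so it can be tried without a browser; with Selenium, a real Firefox driver object would be passed instead.

```python
import time


def crawl_pages(driver, handle_page, next_page_js="pageTurning(3);",
                pause=1.0, max_pages=None):
    """Handle pages in a loop until the next-page script fails; return the page count."""
    pages = 0
    while max_pages is None or pages < max_pages:
        handle_page(driver.page_source)
        pages += 1
        try:
            driver.execute_script(next_page_js)
        except Exception:
            print("-# END #-")
            break
        time.sleep(pause)
    return pages


class FakeDriver:
    """Stand-in for a Selenium driver (hypothetical, for trying the loop offline)."""

    def __init__(self, total_pages):
        self.total_pages = total_pages
        self.current = 1

    @property
    def page_source(self):
        return "<html>page %d</html>" % self.current

    def execute_script(self, script):
        # Simulate a failure when there is no next page.
        if self.current >= self.total_pages:
            raise RuntimeError("no next page")
        self.current += 1


if __name__ == "__main__":
    handled = crawl_pages(FakeDriver(3000), lambda html: None, pause=0)
    print(handled)
```

Because each page is handled inside the same stack frame, the number of pages no longer affects the call depth. The optional `max_pages` argument is a guard for the case where the next-page script never raises an error.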

I will keep improving the code...

Improvement finished: (the complete set of Python code that implements the crawler)

    import parsel
    from urllib import request
    import codecs
    from selenium import webdriver
    import time


    # [ Collection of special string-handling methods ]
    class StrSpecialDealer:
        # Return the text between the first > and the last <, with whitespace removed
        @staticmethod
        def getReaction(stri):
            strs = str(stri).replace(" ", "")
            strs = strs[strs.find('>') + 1:strs.rfind('<')]
            strs = strs.replace("\t", "")
            strs = strs.replace("\r", "")
            strs = strs.replace("\n", "")
            return strs


    # [ Format of the saved data ]
    class Bean:

        # Constructor
        def __init__(self, asker, responser, askTime, responseTime, title,
                     questionSupport, responseSupport, responseUnsupport,
                     questionText, responseText):
            self.asker = asker
            self.responser = responser
            self.askTime = askTime
            self.responseTime = responseTime
            self.title = title
            self.questionSupport = questionSupport
            self.responseSupport = responseSupport
            self.responseUnsupport = responseUnsupport
            self.questionText = questionText
            self.responseText = responseText

        # Print the record to the console (for testing)
        def display(self):
            print("Asker: " + self.asker)
            print("Responder: " + self.responser)
            print("Asked at: " + self.askTime)
            print("Answered at: " + self.responseTime)
            print("Title: " + self.title)
            print("Question support count: " + self.questionSupport)
            print("Reply likes: " + self.responseSupport)
            print("Reply dislikes: " + self.responseUnsupport)
            print("Question text: " + self.questionText)
            print("Reply text: " + self.responseText)

        # Join all fields into one tab-separated line
        def toString(self):
            return "\t".join([
                self.asker,
                self.responser,
                self.askTime,
                self.responseTime,
                self.title,
                self.questionSupport,
                self.responseSupport,
                self.responseUnsupport,
                self.questionText,
                self.responseText,
            ])

        # Write the record to a file
        def addToFile(self, fpath, model):
            with codecs.open(fpath, model, 'utf-8') as f:
                f.write(self.toString() + "\n")

        # Write the record to a file, prefixed with the letter type
        def addToFile_s(self, fpath, model, kind):
            with codecs.open(fpath, model, 'utf-8') as f:
                f.write(kind + "\t" + self.toString() + "\n")

        # --------------------[ Basic data ]
        # Asker
        asker = ""
        # Responder
        responser = ""
        # Time the question was asked
        askTime = ""
        # Time the reply was given
        responseTime = ""
        # Question title
        title = ""
        # Question support count
        questionSupport = ""
        # Reply likes
        responseSupport = ""
        # Reply dislikes
        responseUnsupport = ""
        # Question text
        questionText = ""
        # Reply text
        responseText = ""


    # [ Object that crawls consecutive pages ]
    class WebPerConnector:
        profile = ""

        isAccess = True

        # ---[ Constructor ]
        def __init__(self):
            self.profile = webdriver.Firefox()
            self.profile.get('http://www.beijing.gov.cn/hudong/hdjl/com.web.search.mailList.flow')

        # ---[ Release method ]
        def __close__(self):
            self.profile.quit()

        # Return the HTML source of the current page
        def getHTMLText(self):
            a = self.profile.page_source
            return a

        # Collect the links on the first page
        def getFirstChanel(self):
            index_html = self.getHTMLText()
            index_sel = parsel.Selector(index_html)
            links = index_sel.css('div #mailul').css("a[onclick]").extract()
            inNum = len(links)
            for seat in range(0, inNum):