高可用Hive

Posted 祝灵

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了高可用Hive相关的知识,希望对你有一定的参考价值。

上传hive,mysql安装包

下载安装包请参考这篇文章里面的网址:https://www.cnblogs.com/skyrainmom/p/17438858.html

解压包设置权限

[root@master ~]# tar xf apache-hive-2.0.0-bin.tar.gz -C /usr/local/src/

[root@master ~]# cd /usr/local/src/

[root@master src]# ls

apache-hive-2.0.0-bin hadoop hbase hive jdk sqoop zookeeper

[root@master src]# mv apache-hive-2.0.0-bin/ hive

[root@master src]# chown -R hadoop.hadoop /usr/local/src/

配置环境变量

[root@master ~]# vim /etc/profile.d/hive.sh

export HIVE_HOME=/usr/local/src/hive

export PATH=$HIVE_HOME/bin:$PATH

export HIVE_CONF_DIR=$HIVE_HOME/conf

卸载mairadb,安装mysql

[root@master ~]# rpm -qa | grep mariadb

[root@master ~]# rpm -e mariadb --nodeps

#解压安装mysql

[root@master ~]# yum -y install unzip

[root@master ~]# unzip mysql-5.7.18.zip

Archive: mysql-5.7.18.zip

creating: mysql-5.7.18/

inflating: mysql-5.7.18/mysql-community-client-5.7.18-1.el7.x86_64.rpm

inflating: mysql-5.7.18/mysql-community-common-5.7.18-1.el7.x86_64.rpm

inflating: mysql-5.7.18/mysql-community-devel-5.7.18-1.el7.x86_64.rpm

inflating: mysql-5.7.18/mysql-community-libs-5.7.18-1.el7.x86_64.rpm

inflating: mysql-5.7.18/mysql-community-server-5.7.18-1.el7.x86_64.rpm

[root@master ~]# cd mysql-5.7.18

[root@master mysql-5.7.18]# yum -y install *

.......(略)

[root@master mysql-5.7.18]# rpm -qa | grep mysql

mysql-community-client-5.7.18-1.el7.x86_64

mysql-community-server-5.7.18-1.el7.x86_64

mysql-community-libs-5.7.18-1.el7.x86_64

mysql-community-devel-5.7.18-1.el7.x86_64

mysql-community-common-5.7.18-1.el7.x86_64

修改配置文件,启动mysql

[root@master ~]# vim /etc/my.cnf

default-storage-engine=innodb

innodb_file_per_table

collation-server=utf8_general_ci

init-connect=\'SET NAMES utf8\'

character-set-server=utf8

[root@master ~]# systemctl enable --now mysqld

[root@master ~]# systemctl status mysqld

● mysqld.service - MySQL Server

Loaded: loaded (/usr/lib/systemd/system/mysqld.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2023-06-07 11:21:10 CST; 8s ago

Docs: man:mysqld(8)

http://dev.mysql.com/doc/refman/en/using-systemd.html

Process: 158985 ExecStart=/usr/sbin/mysqld --daemonize --pid-file=/var/run/mysqld/mysqld.pid $MYSQLD_OPTS>

Process: 158947 ExecStartPre=/usr/bin/mysqld_pre_systemd (code=exited, status=0/SUCCESS)

Main PID: 158987 (mysqld)

Tasks: 27 (limit: 12221)

Memory: 204.3M

CGroup: /system.slice/mysqld.service

└─158987 /usr/sbin/mysqld --daemonize --pid-file=/var/run/mysqld/mysqld.pid

Jun 07 11:21:08 master systemd[1]: Starting MySQL Server...

Jun 07 11:21:10 master systemd[1]: Started MySQL Server.

设置密码,初始化

[root@master ~]# cat /var/log/mysqld.log | grep password

2023-06-07T14:23:57.171891Z 1 [Note] A temporary password is generated for root@localhost: tl9vdn_buhWq

[root@master ~]# mysql -uroot -p\'tl9vdn_buhWq\'

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \\g.

Your MySQL connection id is 3

Server version: 5.7.18

Copyright (c) 2000, 2017, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type \'help;\' or \'\\h\' for help. Type \'\\c\' to clear the current input statement.

mysql> set password = password(\'Password@123\');

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> quit

Bye

[root@master ~]# mysql -uroot -p\'Password@123\'

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \\g.

Your MySQL connection id is 4

Server version: 5.7.18 MySQL Community Server (GPL)

Copyright (c) 2000, 2017, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type \'help;\' or \'\\h\' for help. Type \'\\c\' to clear the current input statement.

mysql>

#初始化,没有输入\'y\'就敲回车

[root@master ~]# mysql_secure_installation

Securing the MySQL server deployment.

Enter password for user root:

The \'validate_password\' plugin is installed on the server.

The subsequent steps will run with the existing configuration

of the plugin.

Using existing password for root.

Estimated strength of the password: 100

Change the password for root ? ((Press y|Y for Yes, any other key for No) :

... skipping.

By default, a MySQL installation has an anonymous user,

allowing anyone to log into MySQL without having to have

a user account created for them. This is intended only for

testing, and to make the installation go a bit smoother.

You should remove them before moving into a production

environment.

Remove anonymous users? (Press y|Y for Yes, any other key for No) : y

Success.

Normally, root should only be allowed to connect from

\'localhost\'. This ensures that someone cannot guess at

the root password from the network.

Disallow root login remotely? (Press y|Y for Yes, any other key for No) :

... skipping.

By default, MySQL comes with a database named \'test\' that

anyone can access. This is also intended only for testing,

and should be removed before moving into a production

environment.

Remove test database and access to it? (Press y|Y for Yes, any other key for No) : y

- Dropping test database...

Success.

- Removing privileges on test database...

Success.

Reloading the privilege tables will ensure that all changes

made so far will take effect immediately.

Reload privilege tables now? (Press y|Y for Yes, any other key for No) : y

Success.

All done!

新建Hive用户与元数据

[root@master ~]# mysql -uroot -p\'Password@123\'

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \\g.

Your MySQL connection id is 7

Server version: 5.7.18 MySQL Community Server (GPL)

Copyright (c) 2000, 2017, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type \'help;\' or \'\\h\' for help. Type \'\\c\' to clear the current input statement.

mysql> create database hive_db;

Query OK, 1 row affected (0.00 sec)

mysql> grant all privileges on *.* to hive@"%" identified by \'Password@123\';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> grant all privileges on *.* to root@"%" identified by \'Password@123\';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

mysql> quit

Bye

配置Hive参数

[root@master ~]# su - hadoop

Last login: Wed May 31 12:48:03 CST 2023 on pts/0

[hadoop@master ~]$ cp /usr/local/src/hive/conf/hive-default.xml.template /usr/local/src/hive/conf/hive-site.xml

[hadoop@master ~]$ vi /usr/local/src/hive/conf/hive-site.xml

#更改参数

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://master:3306/hive_db?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>Username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>Password@123</value>

<description>password to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>hive.downloaded.resources.dir</name>

<value>/usr/local/src/hive/tmp</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>

<property>

<name>hive.exec.local.scratchdir</name>

<value>/usr/local/src/hive/tmp/$hive.session.id_resources</value>

<description>Local scratch space for Hive jobs</description>

</property>

<property>

<name>hive.querylog.location</name>

<value>/usr/local/src/hive/tmp</value>

<description>Location of Hive run time structured log file</description>

</property>

<property>

<name>hive.server2.logging.operation.log.location</name>

<value>/usr/local/src/hive/tmp/operation_logs</value>

<description>Top level directory where operation logs are stored if logging functionality is enabled</description>

</property>

<property>

<name>hive.server2.webui.host</name>

<value>master</value>

<description>The host address the HiveServer2 WebUI will listen on</description>

</property>

<property>

<name>hive.server2.webui.port</name>

<value>10002</value>

<description>The port the HiveServer2 WebUI will listen on. This can beset to 0 or a negative integer to disable the web UI</description>

</property>

#启动HDFS所有服务,创建目录,注意操作节点的切换

[hadoop@master ~]$ zkServer.sh start

[hadoop@slave1 ~]$ zkServer.sh start

[hadoop@slave2 ~]$ zkServer.sh start

[hadoop@master ~]$ yarn-daemon.sh start proxyserver

[hadoop@master ~]$ mr-jobhistory-daemon.sh start historyserver

[hadoop@master ~]$ start-all.sh

[hadoop@slave1 ~]$ yarn-daemon.sh start resourcemanager

[hadoop@master ~]$ start-hbase.sh

[hadoop@master ~]$ jps

3041 QuorumPeerMain

3697 JournalNode

3187 JobHistoryServer

3973 ResourceManager

4759 Jps

3880 DFSZKFailoverController

3370 NameNode

4092 NodeManager

4638 HMaster

3487 DataNode

[hadoop@slave1 ~]$ jps

1441 DataNode

2081 Jps

1605 DFSZKFailoverController

1514 JournalNode

1371 NameNode

1263 QuorumPeerMain

1727 NodeManager

1935 ResourceManager

1999 HRegionServer

[hadoop@slave2 ~]$ jps

1264 QuorumPeerMain

1524 NodeManager

1365 DataNode

1765 HRegionServer

1437 JournalNode

1903 Jps

[hadoop@master ~]$ hadoop fs -mkdir -p /user/hive/warehouse

[hadoop@master ~]$ hadoop fs -chmod g+w /user/hive/warehouse

[hadoop@master ~]$ mkdir -p /usr/local/src/hive/tmp

#配置 hive-env.xml,文件末尾添加如下内容

[hadoop@master ~]$ cp /usr/local/src/hive/conf/hive-env.sh.template /usr/local/src/hive/conf/hive-env.sh

[hadoop@master ~]$ vi /usr/local/src/hive/conf/hive-env.sh

export JAVA_HOME=/usr/local/src/jdk

export HADOOP_HOME=/usr/local/src/hadoop

export HIVE_CONF_DIR=/usr/local/src/hive/conf

export HIVE_AUX_JARS_PATH=/usr/local/src/hive/lib

数据库的初始化

#将 Hive 连接 MySQL 的驱动器文件上传至 Hive 的 lib 文件夹下

[hadoop@master ~]$ su - root

[root@master ~]# cp mysql-connector-java-5.1.46.jar /usr/local/src/hive/lib/

[root@master ~]# chown -R hadoop.hadoop /usr/local/src/

[hadoop@master ~]$ schematool -initSchema -dbType mysql

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/hive-jdbc-2.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://master:3306/hive_db?createDatabaseIfNotExist=true

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: hive

Wed Jun 07 23:21:16 CST 2023 WARN: Establishing SSL connection without server\'s identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn\'t set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to \'false\'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Starting metastore schema initialization to 2.0.0

Initialization script hive-schema-2.0.0.mysql.sql

Wed Jun 07 23:21:17 CST 2023 WARN: Establishing SSL connection without server\'s identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn\'t set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to \'false\'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Initialization script completed

Wed Jun 07 23:21:22 CST 2023 WARN: Establishing SSL connection without server\'s identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn\'t set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to \'false\'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

schemaTool completed

查看数据库表

[hadoop@master ~]$ mysql -uroot -p\'Password@123\'

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \\g.

Your MySQL connection id is 11

Server version: 5.7.18 MySQL Community Server (GPL)

Copyright (c) 2000, 2017, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type \'help;\' or \'\\h\' for help. Type \'\\c\' to clear the current input statement.

mysql> use hive_db;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+---------------------------+

| Tables_in_hive_db |

+---------------------------+

| AUX_TABLE |

| BUCKETING_COLS |

| CDS |

| COLUMNS_V2 |

| COMPACTION_QUEUE |

| COMPLETED_COMPACTIONS |

| COMPLETED_TXN_COMPONENTS |

| DATABASE_PARAMS |

| DBS |

| DB_PRIVS |

| DELEGATION_TOKENS |

| FUNCS |

| FUNC_RU |

| GLOBAL_PRIVS |

| HIVE_LOCKS |

| IDXS |

| INDEX_PARAMS |

| MASTER_KEYS |

| NEXT_COMPACTION_QUEUE_ID |

| NEXT_LOCK_ID |

| NEXT_TXN_ID |

| NOTIFICATION_LOG |

| NOTIFICATION_SEQUENCE |

| NUCLEUS_TABLES |

| PARTITIONS |

| PARTITION_EVENTS |

| PARTITION_KEYS |

| PARTITION_KEY_VALS |

| PARTITION_PARAMS |

| PART_COL_PRIVS |

| PART_COL_STATS |

| PART_PRIVS |

| ROLES |

| ROLE_MAP |

| SDS |

| SD_PARAMS |

| SEQUENCE_TABLE |

| SERDES |

| SERDE_PARAMS |

| SKEWED_COL_NAMES |

| SKEWED_COL_VALUE_LOC_MAP |

| SKEWED_STRING_LIST |

| SKEWED_STRING_LIST_VALUES |

| SKEWED_VALUES |

| SORT_COLS |

| TABLE_PARAMS |

| TAB_COL_STATS |

| TBLS |

| TBL_COL_PRIVS |

| TBL_PRIVS |

| TXNS |

| TXN_COMPONENTS |

| TYPES |

| TYPE_FIELDS |

| VERSION |

+---------------------------+

55 rows in set (0.00 sec)

mysql> quit

Bye

启动Hive,验证

[hadoop@master ~]$ hive

.........

Wed Jun 07 23:24:18 CST 2023 WARN: Establishing SSL connection without server\'s identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn\'t set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to \'false\'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive>

hive> show databases;

OK

default

Time taken: 0.821 seconds, Fetched: 1 row(s)

hive> create database hive_test_db;

OK

Time taken: 0.45 seconds

hive> use hive_test_db;

OK

Time taken: 0.053 seconds

hive> create table t_user(id int, name string);

OK

Time taken: 0.777 seconds

hive> show tables;

OK

t_user

Time taken: 0.048 seconds, Fetched: 1 row(s)

#打开 MySQL 数据库,使用实验二配置过的 hive_db 数据库,此时注意,hive 创建的表统一都在 hive_db 数据库的 TBLS 表中。当创建表存在,既基于 MySQL 存储元数据的 Hive 组件搭建完毕

[hadoop@master ~]$ mysql -uroot -p\'Password@123\'

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \\g.

Your MySQL connection id is 20

Server version: 5.7.18 MySQL Community Server (GPL)

Copyright (c) 2000, 2017, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type \'help;\' or \'\\h\' for help. Type \'\\c\' to clear the current input statement.

mysql> use hive_db;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> select * from TBLS;

+--------+-------------+-------+------------------+--------+-----------+-------+----------+---------------+--------------------+--------------------+

| TBL_ID | CREATE_TIME | DB_ID | LAST_ACCESS_TIME | OWNER | RETENTION | SD_ID | TBL_NAME | TBL_TYPE | VIEW_EXPANDED_TEXT | VIEW_ORIGINAL_TEXT |

+--------+-------------+-------+------------------+--------+-----------+-------+----------+---------------+--------------------+--------------------+

| 1 | 1686151649 | 2 | 0 | hadoop | 0 | 1 | t_user | MANAGED_TABLE | NULL | NULL |

+--------+-------------+-------+------------------+--------+-----------+-------+----------+---------------+--------------------+--------------------+

1 row in set (0.00 sec)

beeline CLI远程访问Hive

#修改 Hadoop 的 core-site.xml 文件,使用 beeline CLI 访问 Hive,需要在 Hadoop 集群中为 Hive 赋予代理用户权限

[hadoop@master ~]$ cd /usr/local/src/hadoop/etc/hadoop/

[hadoop@master hadoop]$ vim core-site.xml

#添加如下配置,slave节点同样需要

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

[hadoop@slave1 ~]$ vim /usr/local/src/hadoop/etc/hadoop/core-site.xml

[hadoop@slave2 ~]$ vim /usr/local/src/hadoop/etc/hadoop/core-site.xml

启动 hiveserver2 服务,等待时间较长,此页面不要关闭

[hadoop@master ~]$ stop-all.sh

[hadoop@master ~]$ start-all.sh

[hadoop@master ~]$ hiveserver2

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/hive-jdbc-2.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Wed Jun 07 23:39:18 CST 2023 WARN: Establishing SSL connection without server\'s identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn\'t set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to \'false\'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Wed Jun 07 23:39:19 CST 2023 WARN: Establishing SSL connection without server\'s identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn\'t set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to \'false\'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Wed Jun 07 23:39:19 CST 2023 WARN: Establishing SSL connection without server\'s identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn\'t set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to \'false\'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Wed Jun 07 23:39:19 CST 2023 WARN: Establishing SSL connection without server\'s identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn\'t set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to \'false\'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Wed Jun 07 23:39:21 CST 2023 WARN: Establishing SSL connection without server\'s identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn\'t set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to \'false\'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Wed Jun 07 23:39:21 CST 2023 WARN: Establishing SSL connection without server\'s identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn\'t set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to \'false\'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Wed Jun 07 23:39:21 CST 2023 WARN: Establishing SSL connection without server\'s identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn\'t set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to \'false\'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Wed Jun 07 23:39:21 CST 2023 WARN: Establishing SSL connection without server\'s identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn\'t set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to \'false\'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

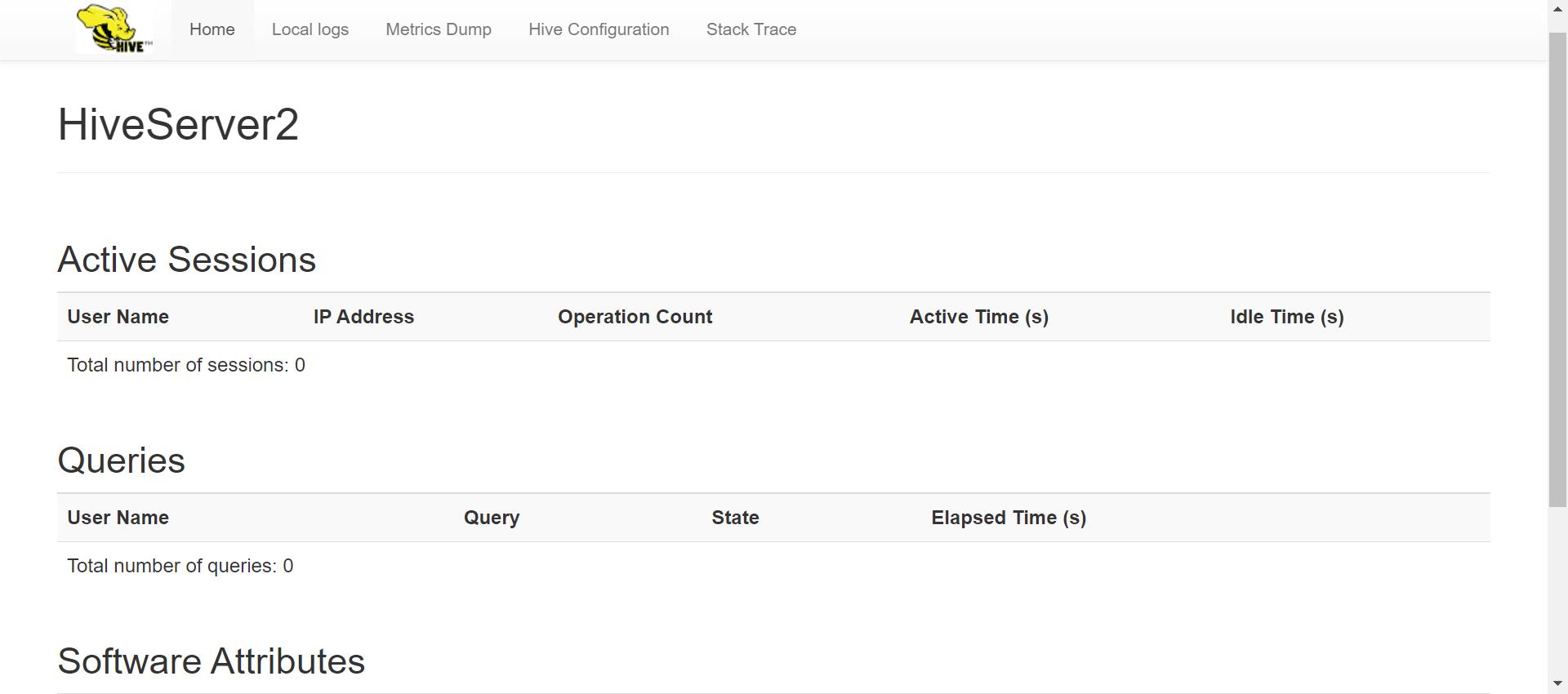

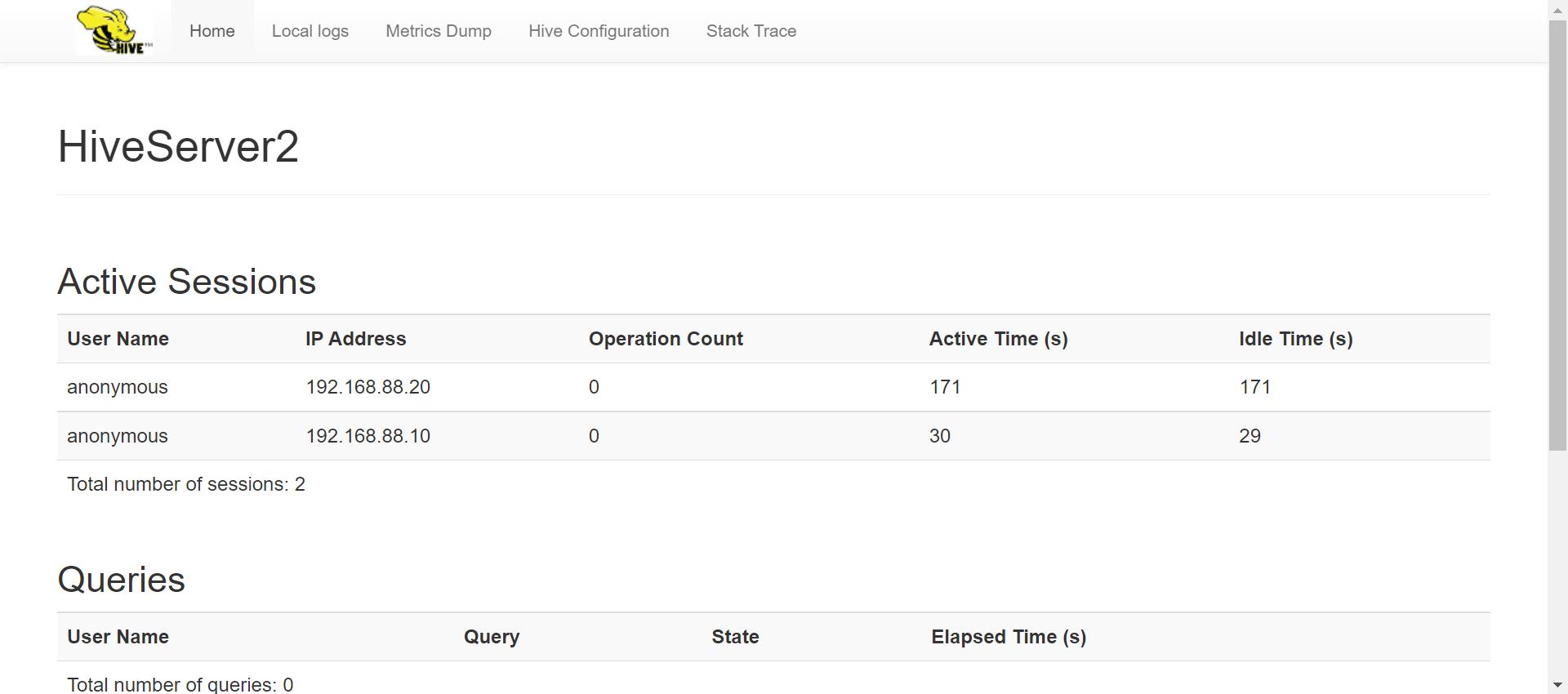

查看页面是否生成

添加远程访问节点

#假设在 slaves1 节点进行远程访问,首先需要在 slaves1 节点上添加 Hive 组件,从 master 节点复制即可

[root@master ~]# scp -r /usr/local/src/hive/ root@slave1:/usr/local/src/

[root@master ~]# scp -r /etc/profile.d/hive.sh root@slave1:/etc/profile.d/

[root@slave1 ~]# chown -R hadoop.hadoop /usr/local/src/

远程访问

#注意执行完不要关闭页面

[hadoop@slave1 ~]$ beeline -u jdbc:hive2://master:10000

Connecting to jdbc:hive2://master:10000

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/hive-jdbc-2.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Connected to: Apache Hive (version 2.0.0)

Driver: Hive JDBC (version 2.0.0)

23/06/07 23:48:47 [main]: WARN jdbc.HiveConnection: Request to set autoCommit to false; Hive does not support autoCommit=false.

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 2.0.0 by Apache Hive

0: jdbc:hive2://master:10000>

#master另启一个终端,同时也开启并发访问

[hadoop@master ~]$ beeline -u jdbc:hive2://master:10000

Connecting to jdbc:hive2://master:10000

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/hive-jdbc-2.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Connected to: Apache Hive (version 2.0.0)

Driver: Hive JDBC (version 2.0.0)

23/06/07 23:51:08 [main]: WARN jdbc.HiveConnection: Request to set autoCommit to false; Hive does not support autoCommit=false.

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 2.0.0 by Apache Hive

0: jdbc:hive2://master:10000>

查看访问记录

Hive库操作与表操作

Hive DDL 语句,包括:

CREATE DATABASE/SCHEMA, TABLE, VIEW, FUNCTION, INDEX

create database/schema, table, view, function, index

DROP DATABASE/SCHEMA, TABLE, VIEW, INDEX

drop database/schema, table, view, index

TRUNCATE TABLE

truncate table

ALTER DATABASE/SCHEMA, TABLE, VIEW

alter database/schema, view, index

MSCK REPAIR TABLE (or ALTER TABLE RECOVER PARTITIONS)

msck repair table(or alter table recover partitions)

SHOW DATABASES/SCHEMAS, TABLES, TBLPROPERTIES, VIEWS, PARTITIONS, FUNCTIONS,INDEX[ES], COLUMNS, CREATE TABLE

show databases/schemas, tables, tblproperties, views, partitions, functions, index, columns, create table

DESCRIBE DATABASE/SCHEMA, TABLE_NAME, VIEW_NAME, MATERIALIZED_VIEW_NAME

describe database/schema, table_name, view_name, materialized_view_name

数据库操作

#创建数据库

[hadoop@master ~]$ hive

hive> create database school;

OK

Time taken: 1.794 seconds

#修改数据库并显示数据库详细信息

hive> alter database school set dbproperties(\'create\' = \'H3C\');

OK

Time taken: 0.148 seconds

hive> desc database extended school;

OK

school hdfs://mycluster/user/hive/warehouse/school.db hadoop USER create=H3C

Time taken: 0.535 seconds, Fetched: 1 row(s)

hive> alter database school set owner user root;

OK

Time taken: 0.037 seconds

hive> desc database extended school;

OK

school hdfs://mycluster/user/hive/warehouse/school.db root USER create=H3C

Time taken: 0.018 seconds, Fetched: 1 row(s)

#删除数据库并显示全部数据库

hive> drop database school;

Moved: \'hdfs://mycluster/user/hive/warehouse/school.db\' to trash at: hdfs://mycluster/user/hadoop/.Trash/Current

OK

Time taken: 0.373 seconds

hive> show databases;

OK

default

hive_test_db

Time taken: 0.017 seconds, Fetched: 2 row(s)

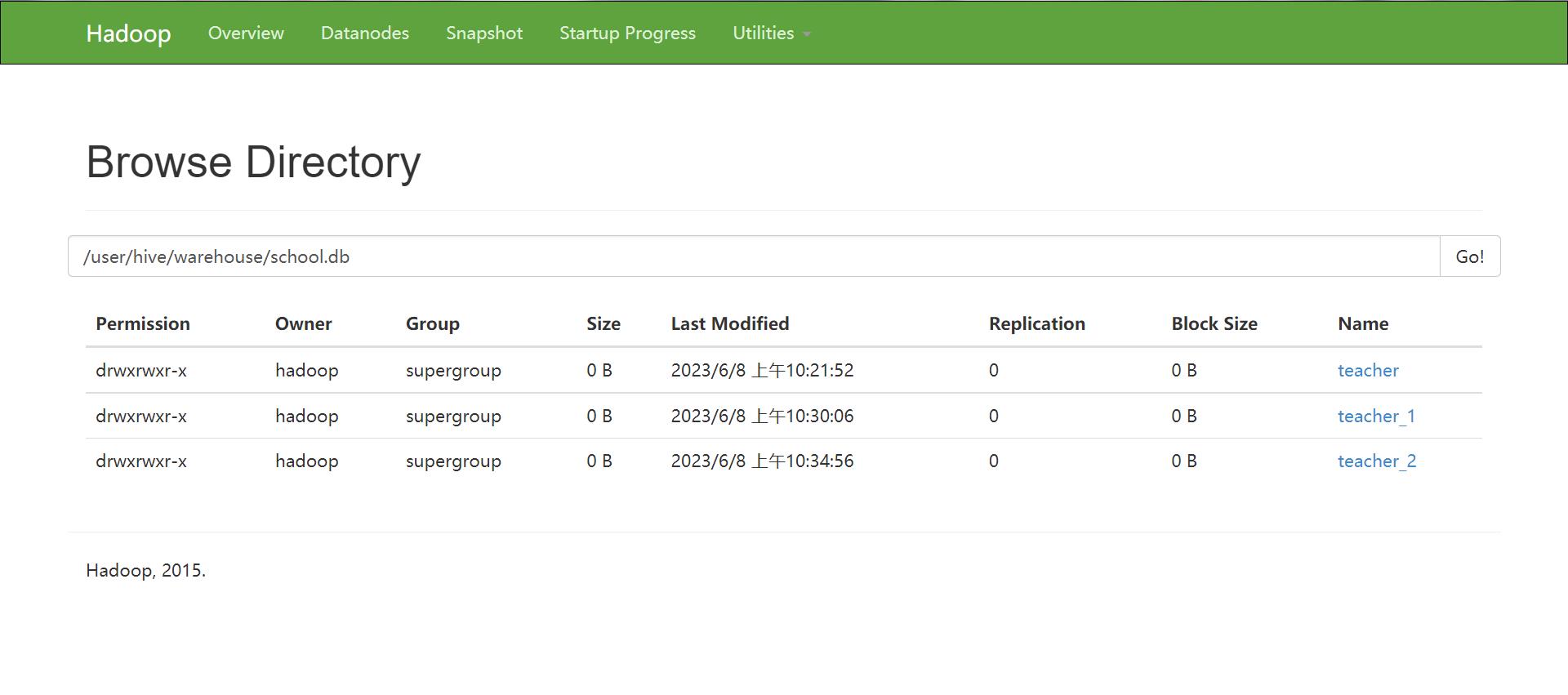

Hive表操作

#创建表,Hive 默认创建的普通表被称为管理表或内部表

hive> use school;

OK

Time taken: 0.024 seconds

hive> create table teacher(

> num int,

> name string,

> email map<string,int>,

> class array<string>);

OK

Time taken: 0.905 seconds

#分区表:目的是为了避免暴力扫描,一个分区就是 HDFS 上的一个独立文件夹,创建表时指定的表的列中不应该包含分区列,分区列需要使用关键词 partitioned by 在后面单独指定

hive> create table teacher_1(

> num int,

> name string,

> email map<string,int>,

> class array<string>)

> partitioned by (age int)

> row format delimited fields terminated by ",";

OK

Time taken: 0.253 seconds

#分桶表:可以将表或者分区进一步细化成桶,是对数据进行更细粒度的划分,以便获得更高的查询效率,创建桶表时指定桶列需要提前创建,使用关键词 clustered by 在后面单独指定,并指定分为多少个桶(buckets)

hive> create table teacher_2(

> num int,

> name string,

> email map<string,int>,

> class array<string>)

> clustered by (num) into 4 buckets

> row format delimited fields terminated by ",";

OK

Time taken: 0.135 seconds

查看

#查看所有表与详细信息

hive> show tables;

OK

teacher

teacher_1

teacher_2

Time taken: 1.235 seconds, Fetched: 3 row(s)

hive> desc teacher;

OK

num int

name string

email map<string,int>

class array<string>

Time taken: 0.101 seconds, Fetched: 4 row(s)

hive> desc formatted teacher;

OK

# col_name data_type comment

num int

name string

email map<string,int>

class array<string>

# Detailed Table Information

Database: school

Owner: hadoop

CreateTime: Thu Jun 08 10:21:52 CST 2023

LastAccessTime: UNKNOWN

Retention: 0

Location: hdfs://mycluster/user/hive/warehouse/school.db/teacher

Table Type: MANAGED_TABLE

Table Parameters:

transient_lastDdlTime 1686190912

# Storage Information

SerDe Library: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

InputFormat: org.apache.hadoop.mapred.TextInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

Compressed: No

Num Buckets: -1

Bucket Columns: []

Sort Columns: []

Storage Desc Params:

serialization.format 1

Time taken: 0.076 seconds, Fetched: 28 row(s)

#复制一个已经存在的表

hive> create table teacher2 like teacher;

OK

Time taken: 1.087 seconds

#修改表名

hive> alter table teacher rename to new_teacher;

OK

Time taken: 0.296 seconds

#修改表的列名,数据类型,列注释和列所在的位置,FIRST 将列放在第一列,AFTER col_name 将列放在 col_name 后面一列。下面的语句将列名 num 修改为 number,数据类型为更

改为 string 并添加注释,最后将这一列放在 name 后面

hive> alter table new_teacher change num number string comment \'the num of teacher, change datatype to string\' after name;

OK

Time taken: 0.237 seconds

#增加/更新列,add columns 允许用户在当前列的末尾,分区列之前添加新的列,replace columns 允许用户更新列,更新的过程是先删除当前的列,然后在加入新的列

hive> alter table new_teacher add columns(age int);

OK

Time taken: 0.145 seconds

#删除表

hive> drop table teacher2;

Moved: \'hdfs://mycluster/user/hive/warehouse/school.db/teacher2\' to trash at: hdfs://mycluster/user/hadoop/.Trash/Current

OK

Time taken: 0.6 seconds

#清空表

hive> truncate table new_teacher;

OK

Time taken: 0.119 seconds

Hive查询

Select操作:

- select * from <table_name>

- where表达式

- distinct and all

- Group by 分组查询

- Limit限制查询

- union多表联合

数据导入

hive> create table student(

> num int,

> name string,

> class string,

> body map<string,int>,

> exam array<string>)

> row format delimited

> fields terminated by \'|\'

> collection items terminated by \',\'

> map keys terminated by \':\'

> lines terminated by \'\\n\';

OK

Time taken: 1.125 seconds

hive> create table lib(

> num int,

> book string)

> row format delimited

> fields terminated by \'|\'

> collection items terminated by \',\'

> map keys terminated by \':\'

> lines terminated by \'\\n\';

OK

Time taken: 0.077 seconds

hive> create table price(

> book string,

> price int)

> row format delimited

> fields terminated by \'|\'

> collection items terminated by \',\'

> map keys terminated by \':\'

> lines terminated by \'\\n\';

OK

Time taken: 0.21 seconds

hive> insert into student (num,name,class,body,exam) select 20200101,\'Wlen\',\'grade 2\',map(\'height\',175,\'weight\',60),array(\'80\',\'70\');

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = hadoop_20230610143907_1d1596ab-515c-4936-a92d-675f10ec33ea

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks is set to 0 since there\'s no reduce operator

Starting Job = job_1686378855196_0001, Tracking URL = http://master:8088/proxy/application_1686378855196_0001/

Kill Command = /usr/local/src/hadoop/bin/hadoop job -kill job_1686378855196_0001

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0

2023-06-10 14:47:34,598 Stage-1 map = 0%, reduce = 0%

2023-06-10 14:47:48,996 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.4 sec

MapReduce Total cumulative CPU time: 1 seconds 400 msec

Ended Job = job_1686378855196_0001

Stage-4 is selected by condition resolver.

Stage-3 is filtered out by condition resolver.

Stage-5 is filtered out by condition resolver.

Moving data to: hdfs://mycluster/user/hive/warehouse/student/.hive-staging_hive_2023-06-10_14-47-04_438_1739126719635289685-1/-ext-10000

Loading data to table default.student

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Cumulative CPU: 1.4 sec HDFS Read: 5096 HDFS Write: 120 SUCCESS

Total MapReduce CPU Time Spent: 1 seconds 400 msec

OK

Time taken: 45.917 seconds

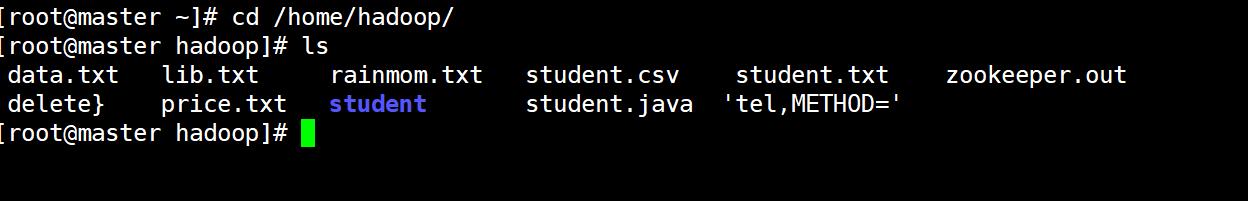

批量导入(上传所需的文件)

#使用 Load 函数批量导入数据

hive> load data local inpath \'/home/hadoop/student.txt\' into table student;

Loading data to table default.student

OK

Time taken: 1.095 seconds

hive> load data local inpath \'/home/hadoop/lib.txt\' into table lib;

Loading data to table default.lib

OK

Time taken: 0.179 seconds

hive> load data local inpath \'/home/hadoop/price.txt\' into table price;

Loading data to table default.price

OK

Time taken: 0.158 seconds

select 查询

#简单查询

hive> select * from student;

OK

20200101 Wlen grade 2 "height":175,"weight":60 ["80","70"]

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"]

20200103 Will grade 3 "height":170,"weight":61 ["66","77"]

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"]

20200105 Lucy grade 5 "height":170,"weight":61 ["96","72"]

20200106 ZhangSan grade 2 "height":170,"weight":61 ["85","63"]

20200107 WangWu grade 4 "height":170,"weight":61 ["0","71"]

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"]

Time taken: 0.639 seconds, Fetched: 8 row(s)

#条件查询之where语句,where 语句是属于布尔表达式,其后可以与“且”,“或”进行联动进行复杂查询,注意特殊字段的查找方式

hive> select * from student where class = \'grade 4\';

OK

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"]

20200107 WangWu grade 4 "height":170,"weight":61 ["0","71"]

Time taken: 0.252 seconds, Fetched: 2 row(s)

hive> select * from student where exam[0] = 96 or exam[1]=77;

OK

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"]

20200103 Will grade 3 "height":170,"weight":61 ["66","77"]

20200105 Lucy grade 5 "height":170,"weight":61 ["96","72"]

Time taken: 0.109 seconds, Fetched: 3 row(s)

hive> select * from student where body[\'height\'] = 170;

OK

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"]

20200103 Will grade 3 "height":170,"weight":61 ["66","77"]

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"]

20200105 Lucy grade 5 "height":170,"weight":61 ["96","72"]

20200106 ZhangSan grade 2 "height":170,"weight":61 ["85","63"]

20200107 WangWu grade 4 "height":170,"weight":61 ["0","71"]

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"]

Time taken: 0.064 seconds, Fetched: 7 row(s)

#条件查询之distinct和all语句,distinct 用途为去除重复的项,不加参数则默认为 all。distinct 参数执行时调用 MR进行排序,对大规模数据效率较高

hive> select class from student;

OK

grade 2

grade 1

grade 3

grade 4

grade 5

grade 2

grade 4

grade 2

Time taken: 0.066 seconds, Fetched: 8 row(s)

hive> select distinct class from student;

Total MapReduce CPU Time Spent: 3 seconds 300 msec

OK

grade 1

grade 2

grade 3

grade 4

grade 5

Time taken: 43.126 seconds, Fetched: 5 row(s)

#条件查询之group by与having语句,group by 是对列进行分组查询,having 可以对 group by 结果进行进一步的过滤。having 与 where 的区别是:where 后面不能写分组函数,而 having 后面可以使用分组函数

hive> select class ,count(*) from student group by class;

Total MapReduce CPU Time Spent: 3 seconds 30 msec

OK

grade 1 1

grade 2 3

grade 3 1

grade 4 2

grade 5 1

Time taken: 65.826 seconds, Fetched: 5 row(s)

hive> select class ,count(*) num from student group by class having num >= 2;

Total MapReduce CPU Time Spent: 3 seconds 360 msec

OK

grade 2 3

grade 4 2

Time taken: 57.369 seconds, Fetched: 2 row(s)

limit 限制语句与 union 联合

#limit 限制查询的说明范围,当大数据查询时,行出过多需要限制查询。union 把多个 select 的结果并集展示出来,可以多表联动

hive> select * from student limit 2,4;

OK

20200103 Will grade 3 "height":170,"weight":61 ["66","77"]

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"]

20200105 Lucy grade 5 "height":170,"weight":61 ["96","72"]

20200106 ZhangSan grade 2 "height":170,"weight":61 ["85","63"]

Time taken: 0.321 seconds, Fetched: 4 row(s)

hive> select class from student union select num from student;

Total MapReduce CPU Time Spent: 3 seconds 940 msec

OK

20200101

20200102

20200103

20200104

20200105

20200106

20200107

20200117

grade 1

grade 2

grade 3

grade 4

grade 5

Time taken: 38.079 seconds, Fetched: 13 row(s)

order by 排序与 sort by 排序

#order by 为全局排序,后面可以有多列进行排序,默认按字典排序。对于大规模的数据集order by 的效率非常低。在很多情况下,并不需要全局排序,此时可以使用 sort by。以下对成绩进行排序,结果虽然一样。当遇到大规模数据时,sort by 可以通过修改 reducer 个数,为每个 reducer 产生一个排序文件。每个 Reducer 内部进行排序,对全局结果集来说不是排序。而 order by 只调用一个 Reducer 进行计算

hive> set mapreduce.job.reduces=3;

hive> select * from student sort by exam[0];

Total MapReduce CPU Time Spent: 4 seconds 680 msec

OK

20200107 WangWu grade 4 "height":170,"weight":61 ["0","71"]

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"]

20200106 ZhangSan grade 2 "height":170,"weight":61 ["85","63"]

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"]

20200103 Will grade 3 "height":170,"weight":61 ["66","77"]

20200105 Lucy grade 5 "height":170,"weight":61 ["96","72"]

20200101 Wlen grade 2 "height":175,"weight":60 ["80","70"]

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"]

Time taken: 56.286 seconds, Fetched: 8 row(s)

hive> select * from student order by exam[0];

Total MapReduce CPU Time Spent: 2 seconds 640 msec

OK

20200107 WangWu grade 4 "height":170,"weight":61 ["0","71"]

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"]

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"]

20200103 Will grade 3 "height":170,"weight":61 ["66","77"]

20200101 Wlen grade 2 "height":175,"weight":60 ["80","70"]

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"]

20200106 ZhangSan grade 2 "height":170,"weight":61 ["85","63"]

20200105 Lucy grade 5 "height":170,"weight":61 ["96","72"]

Time taken: 62.906 seconds, Fetched: 8 row(s)

JOIN 多表查询

join 可以连接多表联合查询,要求查询的条件在连个表中,而且连接 n 个表,至少需要 n-1 个连接条件。例如:连接三个表,至少需要两个连接条件。本例有两个表,以为学生表 具有学号 num,一个图书馆表,具有学号以及借书名称

#两表查询

hive> select * from student join lib on student.num=lib.num;

Total MapReduce CPU Time Spent: 2 seconds 390 msec

OK

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"] 20200102 War and Peace

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"] 20200102 Chronicles

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"] 20200102 Hadoop

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"] 20200104 Math

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"] 20200104 how to use hive?

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"] 20200104 hbase

20200105 Lucy grade 5 "height":170,"weight":61 ["96","72"] 20200105 Notre Dame DE Paris

20200107 WangWu grade 4 "height":170,"weight":61 ["0","71"] 20200107 Romance of The Three Kingdoms

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"] 20200117 Shuihu Quanchuan

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"] 20200117 Feng Menglong

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"] 20200117 Waking World Hengyan

Time taken: 62.893 seconds, Fetched: 11 row(s)

#左连接查询,查询每个人的借书的名称。区别是左表的信息都显示

hive> select * from student left outer join lib on student.num=lib.num;

Total MapReduce CPU Time Spent: 1 seconds 580 msec

OK

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"] 20200102 War and Peace

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"] 20200102 Chronicles

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"] 20200102 Hadoop

20200103 Will grade 3 "height":170,"weight":61 ["66","77"] NULL NULL

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"] 20200104 Math

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"] 20200104 how to use hive?

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"] 20200104 hbase

20200105 Lucy grade 5 "height":170,"weight":61 ["96","72"] 20200105 Notre Dame DE Paris

20200106 ZhangSan grade 2 "height":170,"weight":61 ["85","63"] NULL NULL

20200107 WangWu grade 4 "height":170,"weight":61 ["0","71"] 20200107 Romance of The Three Kingdoms

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"] 20200117 Shuihu Quanchuan

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"] 20200117 Feng Menglong

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"] 20200117 Waking World Hengyan

20200101 Wlen grade 2 "height":175,"weight":60 ["80","70"] NULL NULL

Time taken: 45.738 seconds, Fetched: 14 row(s)

#右连接查询,右表全部显示

hive> select * from student right outer join lib on student.num=lib.num;

Total MapReduce CPU Time Spent: 820 msec

OK

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"] 20200102 War and Peace

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"] 20200104 Math

20200105 Lucy grade 5 "height":170,"weight":61 ["96","72"] 20200105 Notre Dame DE Paris

20200107 WangWu grade 4 "height":170,"weight":61 ["0","71"] 20200107 Romance of The Three Kingdoms

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"] 20200117 Shuihu Quanchuan

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"] 20200117 Feng Menglong

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"] 20200117 Waking World Hengyan

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"] 20200104 how to use hive?

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"] 20200104 hbase

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"] 20200102 Chronicles

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"] 20200102 Hadoop

Time taken: 36.074 seconds, Fetched: 11 row(s)

#全连接查询,左右连接的综合使用,显示两张表的所有信息。若没有关联项目则放到最后显示为 NULL

hive> select * from student full outer join lib on student.num=lib.num;

Total MapReduce CPU Time Spent: 6 seconds 570 msec

OK

20200101 Wlen grade 2 "height":175,"weight":60 ["80","70"] NULL NULL

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"] 20200104 hbase

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"] 20200104 how to use hive?

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"] 20200104 Math

20200107 WangWu grade 4 "height":170,"weight":61 ["0","71"] 20200107 Romance of The Three Kingdoms

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"] 20200102 Hadoop

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"] 20200102 Chronicles

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"] 20200102 War and Peace

20200105 Lucy grade 5 "height":170,"weight":61 ["96","72"] 20200105 Notre Dame DE Paris

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"] 20200117 Waking World Hengyan

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"] 20200117 Feng Menglong

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"] 20200117 Shuihu Quanchuan

20200103 Will grade 3 "height":170,"weight":61 ["66","77"] NULL NULL

20200106 ZhangSan grade 2 "height":170,"weight":61 ["85","63"] NULL NULL

Time taken: 40.76 seconds, Fetched: 14 row(s)

#半连接查询,半连接只显示左表内容,即显示跟连接的右表有关系的左表内容

hive> select * from student left semi join lib on student.num=lib.num;

Total MapReduce CPU Time Spent: 2 seconds 570 msec

OK

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"]

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"]

20200105 Lucy grade 5 "height":170,"weight":61 ["96","72"]

20200107 WangWu grade 4 "height":170,"weight":61 ["0","71"]

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"]

Time taken: 49.347 seconds, Fetched: 5 row(s)

#多表查询,多表(两个以上),例如:查询学生租借的书以及书的价格。大多数情况下,Hive 会对每对 jion 连接对象启动一个 MapReduce 任务

hive> select * from student join lib on student.num=lib.num join price on lib.book=price.book;

Total MapReduce CPU Time Spent: 2 seconds 170 msec

OK

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"] 20200102 War and Peace War and Peace 55

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"] 20200102 Chronicles Chronicles 22

20200102 Michael grade 1 "height":170,"weight":61 ["81","77"] 20200102 Hadoop Hadoop 45

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"] 20200104 Math Math 40

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"] 20200104 how to use hive? how to use hive? 40

20200104 Shelley grade 4 "height":170,"weight":61 ["63","79"] 20200104 hbase hbase 66

20200105 Lucy grade 5 "height":170,"weight":61 ["96","72"] 20200105 Notre Dame DE Paris Notre Dame DE Paris 36

20200107 WangWu grade 4 "height":170,"weight":61 ["0","71"] 20200107 Romance of The Three Kingdoms Romance of The Three Kingdoms 22

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"] 20200117 Shuihu Quanchuan Shuihu Quanchuan 202

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"] 20200117 Feng Menglong Feng Menglong 100

20200117 LiSi grade 2 "height":170,"weight":61 ["55","70"] 20200117 Waking World Hengyan Waking World Hengyan 40

Time taken: 43.306 seconds, Fetched: 11 row(s)

以上是关于高可用Hive的主要内容,如果未能解决你的问题,请参考以下文章