Calling Scala or Java packages from Python

Posted 凯文の博客


My project needs to operate on HDFS from Python. The usual choice is Python's hdfs package, but initializing it is cumbersome. It requires:

    from pyspark import SparkConf, SparkContext
    from hdfs import Client

    client = Client("http://127.0.0.1:50070")

As you can see, Python has to be given the master's address explicitly. Scala normally does not need this:

    import org.apache.hadoop.fs.{FileSystem, Path}
    import org.apache.spark.{SparkConf, SparkContext}

    // sc is an existing SparkContext
    val hdfs = FileSystem.get(sc.hadoopConfiguration)

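When we switch between local testing and packaging for production, having to edit the master address every time is inconvenient. During local debugging, Hadoop is usually started only when it is needed. A Scala program launched from the project's root directory gets its HDFS handle directly, whereas Python can only reach Hadoop if a local Hadoop service is running and listening on localhost:50070. So I wanted Python to call Scala's FileSystem to do the same job.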
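A minimal sketch of that idea, assuming a PySpark SparkContext is already running: its private attributes `_jvm` and `_jsc` expose the driver JVM, so the same `FileSystem.get` call as in the Scala snippet can be made from Python. The helper name `hadoop_fs_from_context` and the `main` function are illustrative only, and because `_jvm` and `_jsc` are not public API, this may break between PySpark versions.

```python
def hadoop_fs_from_context(sc):
    """Return (fs, Path) obtained through the JVM that PySpark already started.

    `sc` is a live pyspark.SparkContext. `_jvm` and `_jsc` are private
    attributes, so treat this as a sketch rather than a stable recipe.
    """
    jvm = sc._jvm                                # Py4J view of the driver JVM
    hadoop_conf = sc._jsc.hadoopConfiguration()  # Hadoop config of the Java SparkContext
    fs = jvm.org.apache.hadoop.fs.FileSystem.get(hadoop_conf)
    return fs, jvm.org.apache.hadoop.fs.Path


def main():
    # Imported here so the helper above can be read without Spark installed.
    from pyspark import SparkConf, SparkContext

    conf = SparkConf().setMaster("local").setAppName("test hdfs")
    sc = SparkContext(conf=conf)
    try:
        fs, Path = hadoop_fs_from_context(sc)
        print(fs.exists(Path("/")))
    finally:
        sc.stop()
```

Calling `main()` in an environment where PySpark is installed should pick up whatever Hadoop configuration Spark itself sees, with no address written into the Python code.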

Reading the Spark source, I found that Python talks to Java through the Py4J library: a gateway launches the JVM. That source code is very useful here, so I adapted it:

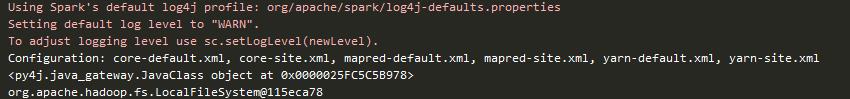

    #!/usr/bin/env python
    # coding: utf-8
    # Adapted from launch_gateway in PySpark's java_gateway.py. The callback-socket
    # handshake below matches older Spark releases; newer ones may differ.
    import atexit
    import os
    import platform
    import select
    import shlex
    import signal
    import socket
    from subprocess import Popen, PIPE

    from py4j.java_gateway import java_import, JavaGateway, GatewayClient
    from pyspark.serializers import read_int

    if "PYSPARK_GATEWAY_PORT" in os.environ:
        gateway_port = int(os.environ["PYSPARK_GATEWAY_PORT"])
    else:
        SPARK_HOME = os.environ["SPARK_HOME"]
        # Launch the Py4j gateway using Spark's run command so that we pick up the
        # proper classpath and settings from spark-env.sh
        on_windows = platform.system() == "Windows"
        script = "./bin/spark-submit.cmd" if on_windows else "./bin/spark-submit"
        submit_args = os.environ.get("PYSPARK_SUBMIT_ARGS", "pyspark-shell")
        if os.environ.get("SPARK_TESTING"):
            submit_args = ' '.join([
                "--conf spark.ui.enabled=false",
                submit_args
            ])
        command = [os.path.join(SPARK_HOME, script)] + shlex.split(submit_args)

        # Start a socket that will be used by PythonGatewayServer to communicate its port to us
        callback_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        callback_socket.bind(('127.0.0.1', 0))
        callback_socket.listen(1)
        callback_host, callback_port = callback_socket.getsockname()
        env = dict(os.environ)
        env['_PYSPARK_DRIVER_CALLBACK_HOST'] = callback_host
        env['_PYSPARK_DRIVER_CALLBACK_PORT'] = str(callback_port)

        # Launch the Java gateway.
        # We open a pipe to stdin so that the Java gateway can die when the pipe is broken
        if not on_windows:
            # Don't send ctrl-c / SIGINT to the Java gateway:
            def preexec_func():
                signal.signal(signal.SIGINT, signal.SIG_IGN)
            proc = Popen(command, stdin=PIPE, preexec_fn=preexec_func, env=env)
        else:
            # preexec_fn not supported on Windows
            proc = Popen(command, stdin=PIPE, env=env)

        gateway_port = None
        # We use select() here in order to avoid blocking indefinitely if the subprocess dies
        # before connecting
        while gateway_port is None and proc.poll() is None:
            timeout = 1  # (seconds)
            readable, _, _ = select.select([callback_socket], [], [], timeout)
            if callback_socket in readable:
                gateway_connection = callback_socket.accept()[0]
                # Determine which ephemeral port the server started on:
                gateway_port = read_int(gateway_connection.makefile(mode="rb"))
                gateway_connection.close()
                callback_socket.close()
        if gateway_port is None:
            raise Exception("Java gateway process exited before sending the driver its port number")

        # In Windows, ensure the Java child processes do not linger after Python has exited.
        # In UNIX-based systems, the child process can kill itself on broken pipe (i.e. when
        # the parent process' stdin sends an EOF). In Windows, however, this is not possible
        # because java.lang.Process reads directly from the parent process' stdin, contending
        # with any opportunity to read an EOF from the parent. Note that this is only best
        # effort and will not take effect if the python process is violently terminated.
        if on_windows:
            # In Windows, the child process here is "spark-submit.cmd", not the JVM itself
            # (because the UNIX "exec" command is not available). This means we cannot simply
            # call proc.kill(), which kills only the "spark-submit.cmd" process but not the
            # JVMs. Instead, we use "taskkill" with the tree-kill option "/t" to terminate all
            # child processes in the tree (http://technet.microsoft.com/en-us/library/bb491009.aspx)
            def killChild():
                Popen(["cmd", "/c", "taskkill", "/f", "/t", "/pid", str(proc.pid)])
            atexit.register(killChild)

    # Connect to the gateway
    gateway = JavaGateway(GatewayClient(port=gateway_port), auto_convert=True)

    # Import the classes used by PySpark
    java_import(gateway.jvm, "org.apache.spark.SparkConf")
    java_import(gateway.jvm, "org.apache.spark.api.java.*")
    java_import(gateway.jvm, "org.apache.spark.api.python.*")
    java_import(gateway.jvm, "org.apache.spark.ml.python.*")
    java_import(gateway.jvm, "org.apache.spark.mllib.api.python.*")
    # TODO(davies): move into sql
    java_import(gateway.jvm, "org.apache.spark.sql.*")
    java_import(gateway.jvm, "org.apache.spark.sql.hive.*")
    java_import(gateway.jvm, "scala.Tuple2")
    # Added for HDFS access. java_import takes Java-style names, so each class
    # is listed separately instead of using Scala's {A, B} shorthand.
    java_import(gateway.jvm, "org.apache.hadoop.fs.FileSystem")
    java_import(gateway.jvm, "org.apache.hadoop.fs.Path")
    java_import(gateway.jvm, "org.apache.hadoop.conf.Configuration")
    java_import(gateway.jvm, "org.apache.hadoop.*")
    java_import(gateway.jvm, "org.apache.spark.SparkContext")

    jvm = gateway.jvm
    conf = jvm.org.apache.spark.SparkConf()
    conf.setMaster("local").setAppName("test hdfs")
    sc = jvm.org.apache.spark.SparkContext(conf)
    print(sc.hadoopConfiguration())

    FileSystem = jvm.org.apache.hadoop.fs.FileSystem
    print(repr(FileSystem))
    Path = jvm.org.apache.hadoop.fs.Path
    hdfs = FileSystem.get(sc.hadoopConfiguration())

    # FileSystem.delete does not expand wildcards, so resolve the pattern first.
    for status in hdfs.globStatus(Path("/DATA/*/*/TMP/KAIVEN/*")) or []:
        hdfs.delete(status.getPath(), True)  # True = delete recursively
    print("Directory deleted successfully")
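Since the script ends by deleting paths selected with a wildcard pattern, it may be worth checking what the pattern matches before removing anything. The helper below is a sketch under a few assumptions: `fs` is the Hadoop FileSystem handle obtained through Py4J (`hdfs` in the script), `path_cls` is the matching `Path` class, and `delete_matching` with its `dry_run` switch is a hypothetical name introduced here, not part of Hadoop or PySpark.

```python
def delete_matching(fs, path_cls, pattern, dry_run=True):
    """Return the paths matching `pattern`; delete them unless dry_run is True.

    fs:       an org.apache.hadoop.fs.FileSystem obtained through Py4J
    path_cls: the org.apache.hadoop.fs.Path class from the same JVM view
    dry_run:  hypothetical safety switch; when True nothing is deleted
    """
    # globStatus expands wildcards; it can return null (None on the Python side).
    statuses = fs.globStatus(path_cls(pattern)) or []
    matched = []
    for status in statuses:
        path = status.getPath()
        matched.append(path.toString())
        if not dry_run:
            fs.delete(path, True)  # True = delete recursively
    return matched
```

With the names from the script, `delete_matching(hdfs, Path, "/DATA/*/*/TMP/KAIVEN/*")` would only list the matches, and passing `dry_run=False` would then remove them.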

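For reference, see launch_gateway, the function Python uses to start the JVM (py4j.java_gateway has one, and the script above follows the version in PySpark's java_gateway.py). My only small change was to add java_import calls for

org.apache.hadoop.fs.FileSystem, org.apache.hadoop.fs.Path,

org.apache.hadoop.conf.Configuration

With these imported, the Hadoop configuration is set up automatically once the SparkConf comes up, just as it is on the Scala side.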
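When import lines are carried over from Scala code, the brace shorthand such as `org.apache.hadoop.fs.{FileSystem, Path}` has to be expanded, because as far as I know Py4J's `java_import` only understands a fully qualified class name or a package wildcard ending in `*`. The function below is a small sketch of that expansion. `expand_scala_import` is a hypothetical helper name, and it deliberately ignores other Scala import forms such as renames or the `_` wildcard.

```python
import re

# Matches 'some.package.{A, B, C}' and captures the package and the name list.
_BRACES = re.compile(r"^(?P<prefix>[\w.]+)\.\{(?P<names>[^{}]*)\}$")


def expand_scala_import(spec):
    """Turn 'pkg.{A, B}' into ['pkg.A', 'pkg.B']; other strings pass through."""
    spec = spec.strip()
    match = _BRACES.match(spec)
    if match is None:
        return [spec]
    names = [n.strip() for n in match.group("names").split(",") if n.strip()]
    return [f"{match.group('prefix')}.{name}" for name in names]
```

Each returned name can then be passed to `java_import(gateway.jvm, name)` in a loop.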
