Python-爬虫-抓取头条街拍图片-1.1

Posted ygzhaof_100

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Python-爬虫-抓取头条街拍图片-1.1相关的知识,希望对你有一定的参考价值。

下面实例是抓取头条图片信息,只是抓取了查询列表返回的json中image,大图标,由于该结果不会包含该链接详情页的所有图片列表;因此这里抓取不全;后续有时间在完善;

1、抓取头条街拍相关图片请求如下:

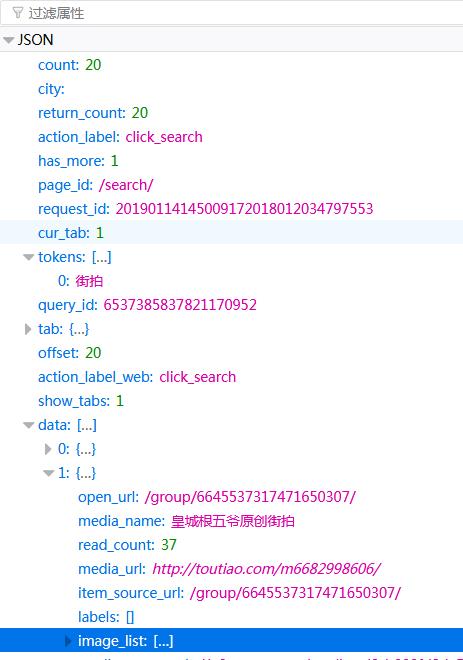

2、通过debug可以看到请求参数以及相应结果数据:

3、响应结果,比较重要的是data(group_id,image_list、large_image_url等字段):

主程序如下:

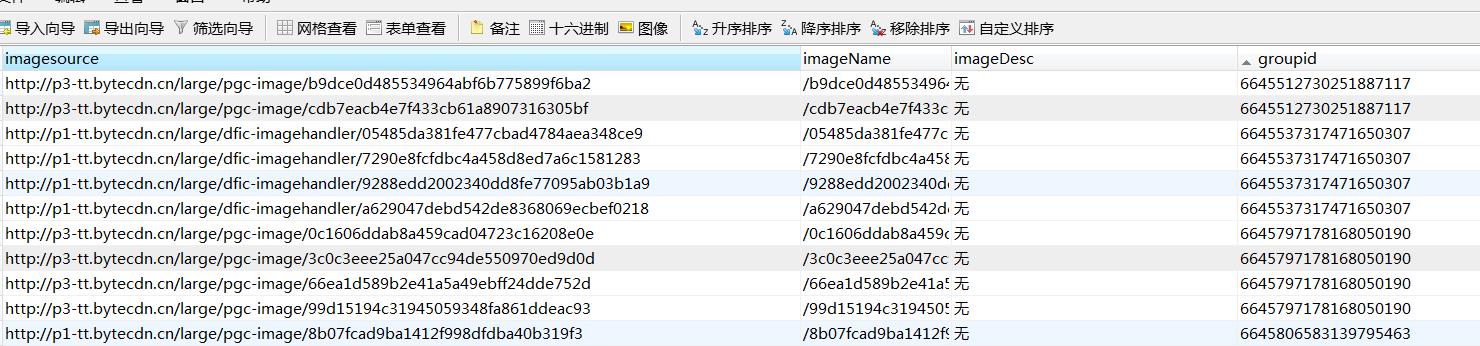

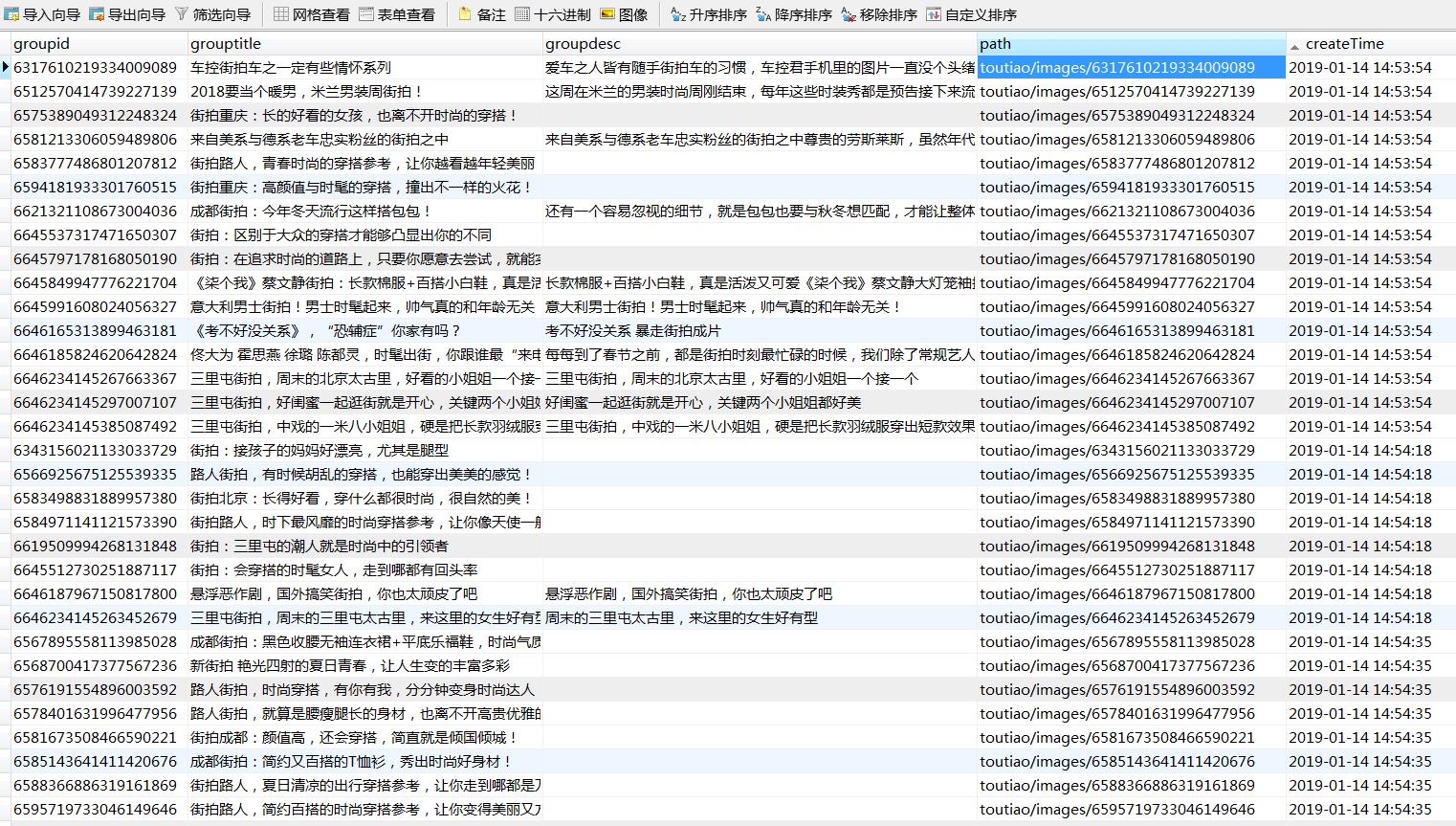

抓取图片信息保存本地,然后将图片组和图片信息保存至mysql数据库;

1 #今日头条街拍数据抓取,将图片存入文件目录,将文件目录存放至mysql数据库 2 import requests 3 import time 4 from urllib.parse import urlencode 5 import urllib.parse 6 import os 7 from requests import Request, Session 8 import pymysql 9 class TouTiaoDeep: 10 def __init__(self): 11 self.url=\'https://www.toutiao.com/search_content/\' 12 self.imagePath=\'D:/toutiao/images/\' 13 self.headers={ 14 \'Accept\':\'application/json, text/javascript\', 15 \'Accept-Encoding\':\'gzip, deflate, br\', 16 \'Content-Type\':\'application/x-www-form-urlencoded\', 17 \'Host\': \'www.toutiao.com\', 18 \'User-Agent\': \'Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:64.0) Gecko/20100101 Firefox/64.0\', 19 \'X-Requested-With\': \'XMLHttpRequest\' 20 } 21 self.param={ 22 \'offset\':0, 23 \'format\':\'json\', 24 \'keyword\': \'街拍\', 25 \'autoload\':\'true\', 26 \'count\':20, 27 \'cur_tab\':1, 28 \'form\':\'search_tab\', 29 \'pd\':\'synthesis\' 30 } 31 self.filePath="D:/toutiaoImages" 32 self.imgDict={} #{rows:[{title:\'\',pathName:\'\',images:[{name:\'\',desc:\'\',date:\'\',downloadUrl:\'\'}...},...] ]} 33 34 def getImgDict(self,offset): 35 self.param[\'offset\']=offset#偏移量 36 session=Session() 37 req=Request(method=\'GET\',url=self.url ,params=self.param,headers=self.headers ) 38 prep = session.prepare_request(req) 39 res = session.send(prep) 40 #print(res.status_code) 41 if res.status_code==200: 42 json=res.json() 43 #print(json) 44 for i in range(len(json[\'data\'])): 45 if \'has_image\' in json[\'data\'][i].keys() and json[\'data\'][i][\'has_image\']:#其中有视频列表组,因此排除那些视频组 46 # print("标题:",json[\'data\'][i][\'title\']) 47 # print("图库:",json[\'data\'][i][\'image_list\']) 48 # print("图库简介:",json[\'data\'][i][\'abstract\']) 49 # print("图片个数:",(len(json[\'data\'][i][\'image_list\']))) 50 yield { 51 \'group_id\':json[\'data\'][i][\'group_id\'], 52 \'groupTitle\':json[\'data\'][i][\'title\'], 53 \'groupImages\':json[\'data\'][i][\'image_list\'], 54 \'total\':len(json[\'data\'][i][\'image_list\']), 55 \'abstract\':json[\'data\'][i][\'abstract\'], 56 \'large_image_url\':json[\'data\'][i][\'large_image_url\'][:json[\'data\'][i][\'large_image_url\'].rindex(\'/\')] 57 # 例如:http://p3-tt.bytecdn.cn/large/pgc-image/2dc7e3cd2e0c46f69ee67c11c13ff58e 最后一个是图片id,前面是大图片地址(每一组大图片地址不同) 58 # print(item[\'large_image_url\'][:item[\'large_image_url\'].rindex(\'/\')])#获取组大图片的地址url 59 } 60 def imagesDownLoad(self,offset): 61 # 获得当前时间时间戳 62 now = int(time.time()) 63 #转换为其他日期格式,如:"%Y-%m-%d %H:%M:%S" 64 timeStruct = time.localtime(now) 65 strTime = time.strftime("%Y-%m-%d %H:%M:%S", timeStruct) 66 67 datas=self.getImgDict(offset) 68 for item in datas: 69 #print(item) 70 #下载图片信息 71 groupImages=item[\'groupImages\'] 72 print(item[\'groupTitle\']) 73 for i in groupImages: 74 #print(i[\'url\'][(i[\'url\'].rindex(\'/\')):])截取图片id即,图片地址最有一个namespace 75 imgURL=item[\'large_image_url\']+i[\'url\'][(i[\'url\'].rindex(\'/\')):]#拼成完成的image URL 76 print(imgURL) 77 #创建存储文件夹,组id命名 78 if not os.path.exists(self.imagePath+item[\'group_id\']): 79 os.makedirs(self.imagePath+item[\'group_id\']) 80 #获取图片存上面指定目录中 81 try: 82 a = urllib.request.urlopen(imgURL) 83 except : 84 a=urllib.request.urlopen("http://p1.pstatp.com/origin/pgc-image/"+i[\'url\'][(i[\'url\'].rindex(\'/\')):])#注意有一部分图片url路径是:http://p1.pstatp.com/origin/pgc-image/7290e8fcfdbc4a458d8ed7a6c1581283[前面的p1 可以任意换成p任意数字即可] 85 #注意;改程序在二十左右页抓取会出现图片路径资源错误 86 try: 87 f = open(self.imagePath+item[\'group_id\']+"/"+i[\'url\'][(i[\'url\'].rindex(\'/\')):]+\'.jpg\', "wb") 88 f.write(a.read()) 89 f.close() 90 #持久化图片信息 91 rows_1={ 92 \'imageId\': i[\'url\'][(i[\'url\'].rindex(\'/\')):], 93 \'imagesource\': imgURL, 94 \'imageName\':i[\'url\'][(i[\'url\'].rindex(\'/\')):]+\'.jpg\', 95 \'imageDesc\': \'无\', 96 \'groupid\': item[\'group_id\'] 97 } 98 self.imageInfPersistent(rows_1) 99 except: 100 print(\'文件下载失败\') 101 #持久化图片组信息 102 rows_2 = { 103 \'groupid\':item[\'group_id\'], 104 \'grouptitle\':item[\'groupTitle\'], 105 \'groupdesc\':item[\'abstract\'], 106 \'path\':\'toutiao/images/\'+item[\'group_id\'], 107 \'createTime\':strTime 108 } 109 self.imgGroupPersistent(rows_2) 110 111 112 113 #mysql数据库持久化 114 def mysqlPersistent(self,tableName,data): 115 db = pymysql.connect(host=\'localhost\', user=\'root\', password=\'admin\', port=3306, db=\'test\') 116 cursor = db.cursor() 117 try: 118 columns = \',\'.join(data.keys()) 119 values = \',\'.join([\'%s\'] * len(data)) 120 sql = \'insert into {table}({keys}) VALUES ({values}) \'.format(table=tableName, keys=columns, values=values) 121 cursor.execute(sql, tuple(data.values())) 122 db.commit() 123 except: 124 db.rollback() 125 finally: 126 db.close() 127 128 #持久化图片组信息 129 def imgGroupPersistent(self,groupDict): 130 #图组信息表:组id、组标题、组简介、本地存储路径、创建时间 131 self.mysqlPersistent(\'imageGroup\',groupDict) 132 133 #持久化图片信息 134 def imageInfPersistent(self,imageInfDict): 135 #图片信息表:图片id、来源地址、简介、所属组id 136 self.mysqlPersistent(\'imageInfo\', imageInfDict) 137 138 #创建表 139 def createImgTable(self): 140 sql_imgGroup= \'create table imageGroup(groupid varchar(50) primary key,grouptitle varchar(200) ,groupdesc text,path varchar(500),createTime varchar(50))\' 141 sql_imgInf=\'create table imageInfo(imageId varchar(50) primary key,imagesource varchar(200) ,imageName varchar(100),imageDesc text,groupid varchar(50) )\' 142 db = pymysql.connect(host=\'localhost\', user=\'root\', password=\'admin\', port=3306, db=\'test\') 143 144 cursor = db.cursor() 145 try : 146 cursor.execute(sql_imgGroup) 147 cursor.execute(sql_imgInf) 148 except: 149 print(\'表创建失败!\') 150 finally: 151 cursor.close() 152 153 #删除表 154 def dropImgTables(self): 155 sql_dropImageGroup = \' drop table if exists imageGroup \' 156 sql_dropImageInfo = \' drop table if exists imageInfo \' 157 db = pymysql.connect(host=\'localhost\', user=\'root\', password=\'admin\', port=3306, db=\'test\') 158 159 cursor = db.cursor() 160 try: 161 cursor.execute(sql_dropImageGroup) 162 cursor.execute(sql_dropImageInfo) 163 except: 164 print(\'表删除失败!\') 165 finally: 166 cursor.close() 167 168 169 if __name__==\'__main__\': 170 deep=TouTiaoDeep() 171 deep.dropImgTables()#删除表 172 deep.createImgTable()#创建表 173 #print(deep.getImgDict()) 174 for i in range(0,10*20,10): 175 deep.imagesDownLoad(i) 176 #deep.createImgTable()

操作后结果:注意,由于图片url拼接不能完全百分百正确,因此抓取数据会因为图片地址错误报异常;

以上是关于Python-爬虫-抓取头条街拍图片-1.1的主要内容,如果未能解决你的问题,请参考以下文章