I. Introduction

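XPath is a language for finding information in XML documents. It can be used to navigate through the elements and attributes of an XML document. XPath is a major element of the W3C XSLT standard, and both XQuery and XPointer are built on XPath expressions.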

II. Installation

pip3 install lxml

III. Usage

1. Importing

from lxml import etree

2. Basic usage

from lxml import etree

# Sample HTML; the last <li> is deliberately left unclosed
wb_data = """
<div>
<ul>
<li class="item-0"><a href="link1.html">first item</a></li>
<li class="item-1"><a href="link2.html">second item</a></li>
<li class="item-inactive"><a href="link3.html">third item</a></li>
<li class="item-1"><a href="link4.html">fourth item</a></li>
<li class="item-0"><a href="link5.html">fifth item</a>
</ul>
</div>
"""

# etree.HTML() parses the string and returns the root <html> element
html = etree.HTML(wb_data)
print(html)

# tostring() returns bytes, so decode it before printing
result = etree.tostring(html)
print(result.decode("utf-8"))

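As the output below shows, printing html only gives a Python Element object. etree.tostring(html) serializes that object back into markup and fills in the basic HTML skeleton. It adds the missing <html> and <body> tags and closes the unclosed <li>.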

<Element html at 0x39e58f0>

<html><body><div>
<ul>
<li class="item-0"><a href="link1.html">first item</a></li>
<li class="item-1"><a href="link2.html">second item</a></li>
<li class="item-inactive"><a href="link3.html">third item</a></li>
<li class="item-1"><a href="link4.html">fourth item</a></li>
<li class="item-0"><a href="link5.html">fifth item</a>
</li></ul>
</div>
</body></html>
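The sketch below shows the same repair behaviour on a small hypothetical fragment. It also shows that passing encoding="unicode" to etree.tostring() returns a str directly, so the .decode() step becomes unnecessary.

```python
from lxml import etree

# Hypothetical fragment: the <li> is never closed and there is no <html>/<body>
broken = "<ul><li>only item</ul>"

root = etree.HTML(broken)  # the HTML parser repairs the markup
print(root.tag)            # the returned root is the <html> element

# tostring() returns bytes by default; encoding="unicode" returns a str instead
as_bytes = etree.tostring(root)
as_text = etree.tostring(root, encoding="unicode")
print(as_text)
```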

3. Getting the content of a tag (basic usage). Note: when selecting all a tags, do not put a trailing slash after a, otherwise an error is raised.

Method 1

html = etree.HTML(wb_data)
html_data = html.xpath('/html/body/div/ul/li/a')
print(html)
for i in html_data:
    print(i.text)

<Element html at 0x12fe4b8>
first item
second item
third item
fourth item
fifth item
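The sketch below illustrates the trailing-slash error from the note above. It uses a hypothetical one-item list. It catches etree.XPathError, the common base class of lxml's XPath exceptions, so it does not depend on which specific subclass is raised.

```python
from lxml import etree

html = etree.HTML('<ul><li><a href="link1.html">first item</a></li></ul>')

# Valid: the path ends at the element name
links = html.xpath('/html/body/ul/li/a')
print([a.text for a in links])

# Invalid: a trailing "/" leaves the location path unfinished
try:
    html.xpath('/html/body/ul/li/a/')
except etree.XPathError as exc:  # base class of XPathEvalError and XPathSyntaxError
    error_message = str(exc)
    print("XPath error:", error_message)
else:
    error_message = None
```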

Method 2 (simply append /text() to the tag whose content you want; the result is then a list of strings instead of elements)

html = etree.HTML(wb_data)
html_data = html.xpath('/html/body/div/ul/li/a/text()')
print(html)
for i in html_data:
    print(i)

<Element html at 0x138e4b8>
first item
second item
third item
fourth item
fifth item
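The two methods above produce the same text. The sketch below compares them on a hypothetical two-item list. The strings lxml returns for text() are "smart strings" that still know which element they came from through getparent(). Passing smart_strings=False to xpath() returns plain strings instead.

```python
from lxml import etree

html = etree.HTML('<ul><li><a href="link1.html">first item</a></li>'
                  '<li><a href="link2.html">second item</a></li></ul>')

# Method 1: select elements, then read .text in Python
elements = html.xpath('//li/a')
texts_from_elements = [a.text for a in elements]

# Method 2: let XPath return the text nodes directly (a list of strings)
texts = html.xpath('//li/a/text()')

# lxml's string results remember their parent element
parent = texts[0].getparent()
print(parent.tag, parent.get('href'))

# smart_strings=False returns plain strings without getparent()
plain = html.xpath('//li/a/text()', smart_strings=False)
```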

4. Opening and reading an HTML file

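# Open the HTML file with parse()
# parse() uses an XML parser by default, so test.html must be well-formed;
# for ordinary HTML pass a parser: etree.parse('test.html', etree.HTMLParser())
html = etree.parse('test.html')
html_data = html.xpath('//*')
# The result is a list, so iterate over it
print(html_data)
for i in html_data:
    print(i.text)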

html = etree.parse('test.html')
html_data = etree.tostring(html, pretty_print=True)
res = html_data.decode('utf-8')
print(res)

Output:

<div>
<ul>
<li class="item-0"><a href="link1.html">first item</a></li>
<li class="item-1"><a href="link2.html">second item</a></li>
<li class="item-inactive"><a href="link3.html">third item</a></li>
<li class="item-1"><a href="link4.html">fourth item</a></li>
<li class="item-0"><a href="link5.html">fifth item</a></li>
</ul>
</div>
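The test.html above happens to be well-formed, so the default XML parser accepts it. The sketch below assumes a hypothetical file whose <li> is left unclosed. It uses io.StringIO in place of a real file, so you would pass a path such as 'test.html' instead. It shows the default parser rejecting the markup and etree.HTMLParser() repairing it.

```python
import io
from lxml import etree

# Hypothetical file content with an unclosed <li>
source = '<div><ul><li class="item-0"><a href="link1.html">first item</a></ul></div>'

# parse() uses an XML parser by default, which rejects markup that is not well-formed
try:
    etree.parse(io.StringIO(source))
except etree.XMLSyntaxError as exc:
    print("XML parser failed:", exc)
    xml_failed = True
else:
    xml_failed = False

# Passing an HTMLParser makes parse() tolerant, like etree.HTML()
tree = etree.parse(io.StringIO(source), etree.HTMLParser())
print(tree.getroot().tag)
print(tree.xpath('//li/a/text()'))
print(etree.tostring(tree.getroot(), pretty_print=True).decode('utf-8'))
```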

5. Printing an attribute of the a tags under a given path (iterate over the result list to get the attribute value of each matched tag)

html = etree.HTML(wb_data)
html_data = html.xpath('/html/body/div/ul/li/a/@href')
for i in html_data:
    print(i)

Output:

link1.html
link2.html
link3.html
link4.html
link5.html
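An attribute can also be read in Python from the selected elements instead of with @href in the expression. The sketch below uses a hypothetical list where only the second link has a title attribute. It shows that .get() returns None for a missing attribute and that .attrib exposes all attributes.

```python
from lxml import etree

html = etree.HTML('<ul><li><a href="link1.html">first item</a></li>'
                  '<li><a href="link2.html" title="two">second item</a></li></ul>')

# Option 1: let XPath return the attribute values directly
hrefs = html.xpath('//li/a/@href')

# Option 2: select the elements and read the attribute in Python
links = html.xpath('//li/a')
hrefs_via_get = [a.get('href') for a in links]

# .get() returns None for a missing attribute; .attrib is a dict-like view of all attributes
first_title = links[0].get('title')
second_attrs = dict(links[1].attrib)
print(hrefs, hrefs_via_get, first_title, second_attrs)
```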

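6. As the previous examples show, xpath() returns a list. It contains Element objects when the path ends at a tag, or strings when it ends in text() or an @attribute. To get at the content, you still need to iterate over that list.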

Find the text of the a tag, under the absolute path, whose href attribute equals link2.html.

html = etree.HTML(wb_data)
html_data = html.xpath('/html/body/div/ul/li/a[@href="link2.html"]/text()')
print(html_data)
for i in html_data:
    print(i)

Output:

['second item']
second item
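When the attribute value comes from a Python variable, lxml can bind it as an XPath variable through a keyword argument to xpath(). This avoids building the expression by string concatenation. The sketch below assumes a trimmed, hypothetical version of the sample list.

```python
from lxml import etree

html = etree.HTML('<ul><li><a href="link1.html">first item</a></li>'
                  '<li><a href="link2.html">second item</a></li></ul>')

# Hard-coded value inside the expression, as in the example above
fixed = html.xpath('/html/body/ul/li/a[@href="link2.html"]/text()')

# Same query with an XPath variable: lxml binds $target from the keyword argument
target = 'link2.html'
bound = html.xpath('/html/body/ul/li/a[@href=$target]/text()', target=target)
print(fixed, bound)
```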

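7. All of the paths above are absolute: each one starts from the root. Next we use relative paths written with //, which match a tag at any depth without spelling out the full path from the root. For example, find the content of the a tags under every li tag.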

html = etree.HTML(wb_data)
html_data = html.xpath('//li/a/text()')
print(html_data)
for i in html_data:
    print(i)

Output:

['first item', 'second item', 'third item', 'fourth item', 'fifth item']
first item
second item
third item
fourth item
fifth item
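A leading // always searches from the document root, even when xpath() is called on a sub-element. To search only below a given element, start the path with .// or with no slash at all. The sketch below uses hypothetical markup with two lists to show the difference.

```python
from lxml import etree

html = etree.HTML('<div><ul id="first"><li><a>first item</a></li></ul>'
                  '<ul id="second"><li><a>second item</a></li></ul></div>')

second_ul = html.xpath('//ul[@id="second"]')[0]

# "//" at the start searches the whole document, not just second_ul
from_root = second_ul.xpath('//li/a/text()')

# ".//" searches only below the element the method is called on
below_element = second_ul.xpath('.//li/a/text()')

# A path without a leading slash is also relative to that element
children_only = second_ul.xpath('li/a/text()')
print(from_root, below_element, children_only)
```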

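8. Above, we used an absolute path (starting with /) to get the href attribute of every a tag. Now let's use a relative path to get the href values of the a tags under li tags. The code writes a//@href, but a single slash (//li/a/@href) gives the same result here. The // after a also collects href attributes from elements nested inside a, and this document has none.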

html = etree.HTML(wb_data)
html_data = html.xpath('//li/a//@href')
print(html_data)
for i in html_data:
    print(i)

Output:

['link1.html', 'link2.html', 'link3.html', 'link4.html', 'link5.html']
link1.html
link2.html
link3.html
link4.html
link5.html
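The difference between a/@href and a//@href only appears when something inside the link also carries an href. The sketch below uses hypothetical markup in which a <span> inside the <a> has its own href attribute.

```python
from lxml import etree

# Hypothetical markup: the <span> inside the link also has an href attribute
html = etree.HTML('<ul><li><a href="link1.html">'
                  '<span href="inner.html">first item</span></a></li></ul>')

single = html.xpath('//li/a/@href')   # only the href of <a> itself
double = html.xpath('//li/a//@href')  # href of <a> and of every element nested inside it
print(single, double)
```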

9. Filtering on a specific attribute value works the same way with a relative path as with an absolute path.

html = etree.HTML(wb_data)
html_data = html.xpath('//li/a[@href="link2.html"]')
print(html_data)
for i in html_data:
    print(i.text)

Output:

[<Element a at 0x216e468>]
second item
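A predicate can sit on any step of the path, not only the last one, and several predicates can be chained. The sketch below reuses the sample list from the start of the article. It first filters on the li class and then on both the li class and the a href.

```python
from lxml import etree

wb_data = '''<div><ul>
<li class="item-0"><a href="link1.html">first item</a></li>
<li class="item-1"><a href="link2.html">second item</a></li>
<li class="item-inactive"><a href="link3.html">third item</a></li>
<li class="item-1"><a href="link4.html">fourth item</a></li>
<li class="item-0"><a href="link5.html">fifth item</a>
</ul></div>'''

html = etree.HTML(wb_data)

# Filter the <li> by its class, then descend to the link text
item_1 = html.xpath('//li[@class="item-1"]/a/text()')

# Predicates on different steps narrow the result further
item_0_link5 = html.xpath('//li[@class="item-0"]/a[@href="link5.html"]/text()')
print(item_1, item_0_link5)
```

Note that @class="..." compares the whole attribute string, so an element with class="item-1 active" would not match this predicate.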

10. Get the text of the a tag inside the last li tag

html = etree.HTML(wb_data)
html_data = html.xpath('//li[last()]/a/text()')
print(html_data)
for i in html_data:
    print(i)

Output:

['fifth item']
fifth item
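Besides last(), positional predicates accept plain numbers and position(). The sketch below builds a hypothetical five-item list. It shows that XPath positions start at 1, unlike Python list indices.

```python
from lxml import etree

html = etree.HTML('<ul>' + ''.join(
    f'<li><a href="link{i}.html">item {i}</a></li>' for i in range(1, 6)) + '</ul>')

first = html.xpath('//li[1]/a/text()')                   # position 1 is the first <li>
last = html.xpath('//li[last()]/a/text()')
first_two = html.xpath('//li[position() <= 2]/a/text()')
print(first, last, first_two)
```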

11. Get the text of the a tag inside the second-to-last li tag

html = etree.HTML(wb_data)
html_data = html.xpath('//li[last()-1]/a/text()')
print(html_data)
for i in html_data:
    print(i)

Output:

['fourth item']
fourth item
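The sample page has only one list, so //li[last()-1] returns a single item. On a page with several lists, the predicate is evaluated per parent element. Wrapping the path in parentheses applies it to all matches in the document instead. The sketch below uses a hypothetical page with two lists.

```python
from lxml import etree

# Hypothetical page with two separate lists
html = etree.HTML('<ul><li>a</li><li>b</li></ul><ul><li>c</li><li>d</li></ul>')

# [last()-1] is evaluated per parent: the second-to-last <li> of EACH list
per_list = html.xpath('//li[last()-1]/text()')

# Parentheses first collect every <li> in the document, then pick from that one list
whole_doc = html.xpath('(//li)[last()-1]/text()')
print(per_list, whole_doc)
```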

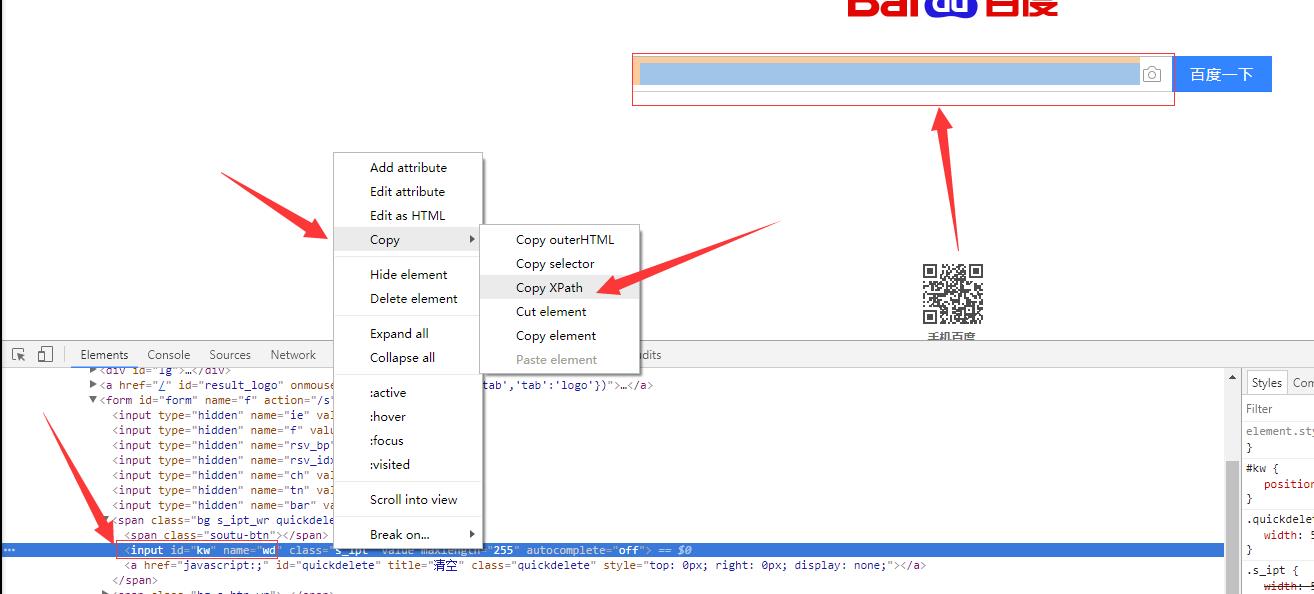

12. To get the XPath of a specific tag on a page, proceed as shown in the figure. The resulting expression looks like this:

//*[@id="kw"]

Explanation: // searches the whole document, * matches any tag, and [@id="kw"] keeps only the tags whose id attribute equals kw.
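The sketch below applies an expression of this kind with lxml. The page fragment is hypothetical and only stands in for a real page containing an element with id="kw". Because ids are normally unique, the first match is usually the one you want.

```python
from lxml import etree

# Hypothetical page fragment with an input whose id is "kw"
page = '<form><input id="kw" type="text"><input id="other" type="submit"></form>'
html = etree.HTML(page)

# * matches any tag name; the predicate keeps only elements whose id is "kw"
matches = html.xpath('//*[@id="kw"]')
print([el.tag for el in matches])

# Take the first match if there is one
search_box = matches[0] if matches else None
```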

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# The same XPath expressions work with Scrapy's Selector; uncomment one query at a time to try it.
from scrapy.selector import Selector
from scrapy.http import HtmlResponse

html = """<!DOCTYPE html>
<html>
<head lang="en">
<meta charset="UTF-8">
<title></title>
</head>
<body>
<ul>
<li class="item-"><a id='i1' href="link.html">first item</a></li>
<li class="item-0"><a id='i2' href="llink.html">first item</a></li>
<li class="item-1"><a href="llink2.html">second item<span>vv</span></a></li>
</ul>
<div><a href="llink2.html">second item</a></div>
</body>
</html>
"""
response = HtmlResponse(url='http://example.com', body=html, encoding='utf-8')

# HtmlXPathSelector is the old, deprecated API (not imported here); use Selector instead
# hxs = HtmlXPathSelector(response)
# print(hxs)
# hxs = Selector(response=response).xpath('//a')
# print(hxs)
# //a[2] matches a tags that are the 2nd a child of their parent (none here); (//a)[2] is the 2nd a in the document
# hxs = Selector(response=response).xpath('//a[2]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[@id]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[@id="i1"]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[@href="link.html"][@id="i1"]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[contains(@href, "link")]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[starts-with(@href, "link")]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[re:test(@id, "i\\d+")]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[re:test(@id, "i\\d+")]/text()').extract()
# print(hxs)
# hxs = Selector(response=response).xpath('//a[re:test(@id, "i\\d+")]/@href').extract()
# print(hxs)
# hxs = Selector(response=response).xpath('/html/body/ul/li/a/@href').extract()
# print(hxs)
# hxs = Selector(response=response).xpath('//body/ul/li/a/@href').extract_first()
# print(hxs)
# ul_list = Selector(response=response).xpath('//body/ul/li')
# for item in ul_list:
#     v = item.xpath('./a/span')
#     # or
#     # v = item.xpath('a/span')
#     # or (* matches any child of the li, here the a tag)
#     # v = item.xpath('*/span')
#     print(v)
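The attribute tests used in the Scrapy script (@id, contains(), starts-with(), re:test()) also work in plain lxml without Scrapy. The sketch below reuses the list from that script. One assumption to note: Scrapy's Selector registers the re: prefix for you, but with lxml you must map it to the EXSLT regular-expressions namespace yourself.

```python
from lxml import etree

# EXSLT regular-expression namespace; lxml evaluates re:test() once this prefix is mapped
REGEXP_NS = {'re': 'http://exslt.org/regular-expressions'}

html = etree.HTML('<ul>'
                  '<li class="item-"><a id="i1" href="link.html">first item</a></li>'
                  '<li class="item-0"><a id="i2" href="llink.html">first item</a></li>'
                  '<li class="item-1"><a href="llink2.html">second item<span>vv</span></a></li>'
                  '</ul>')

with_id = html.xpath('//a[@id]/@href')                            # a tags that have an id
contains_link = html.xpath('//a[contains(@href, "link")]/@href')  # substring match
starts_with_link = html.xpath('//a[starts-with(@href, "link")]/@href')  # prefix match
regex_ids = html.xpath(r'//a[re:test(@id, "^i\d+$")]/text()', namespaces=REGEXP_NS)
print(with_id, contains_link, starts_with_link, regex_ids)
```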