python解析字体反爬

Posted eastonliu

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了python解析字体反爬相关的知识,希望对你有一定的参考价值。

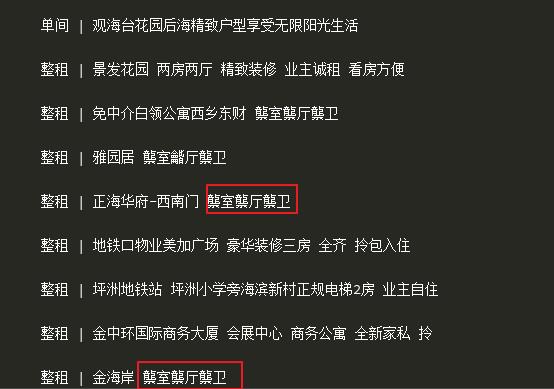

爬取一些网站的信息时,偶尔会碰到这样一种情况:网页浏览显示是正常的,用python爬取下来是乱码,F12用开发者模式查看网页源代码也是乱码。这种一般是网站设置了字体反爬

一、58同城

用谷歌浏览器打开58同城:https://sz.58.com/chuzu/,按F12用开发者模式查看网页源代码,可以看到有些房屋出租标题和月租是乱码,但是在网页上浏览却显示是正常的。

用python爬取下来也是乱码:

回到网页上,右键查看网页源代码,搜索font-face关键字,可以看到一大串用base64加密的字符,把这些加密字符复制下来

在python中用base64对复制下来的加密字符进行解码并保存为58.ttf

import base64 font_face=\'AAEAAAALAIAAAwAwR1NVQiCLJXoAAAE4AAAAVE9TLzL4XQjtAAABjAAAAFZjbWFwq8B/ZwAAAhAAAAIuZ2x5ZuWIN0cAAARYAAADdGhlYWQTmDvfAAAA4AAAADZoaGVhCtADIwAAALwAAAAkaG10eC7qAAAAAAHkAAAALGxvY2ED7gSyAAAEQAAAABhtYXhwARgANgAAARgAAAAgbmFtZTd6VP8AAAfMAAACanBvc3QFRAYqAAAKOAAAAEUAAQAABmb+ZgAABLEAAAAABGgAAQAAAAAAAAAAAAAAAAAAAAsAAQAAAAEAAOu1IchfDzz1AAsIAAAAAADYCHhnAAAAANgIeGcAAP/mBGgGLgAAAAgAAgAAAAAAAAABAAAACwAqAAMAAAAAAAIAAAAKAAoAAAD/AAAAAAAAAAEAAAAKADAAPgACREZMVAAObGF0bgAaAAQAAAAAAAAAAQAAAAQAAAAAAAAAAQAAAAFsaWdhAAgAAAABAAAAAQAEAAQAAAABAAgAAQAGAAAAAQAAAAEERAGQAAUAAAUTBZkAAAEeBRMFmQAAA9cAZAIQAAACAAUDAAAAAAAAAAAAAAAAAAAAAAAAAAAAAFBmRWQAQJR2n6UGZv5mALgGZgGaAAAAAQAAAAAAAAAAAAAEsQAABLEAAASxAAAEsQAABLEAAASxAAAEsQAABLEAAASxAAAEsQAAAAAABQAAAAMAAAAsAAAABAAAAaYAAQAAAAAAoAADAAEAAAAsAAMACgAAAaYABAB0AAAAFAAQAAMABJR2lY+ZPJpLnjqeo59kn5Kfpf//AACUdpWPmTyaS546nqOfZJ+Sn6T//wAAAAAAAAAAAAAAAAAAAAAAAAABABQAFAAUABQAFAAUABQAFAAUAAAABgAIAAEABQAKAAIABwADAAQACQAAAQYAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAADAAAAAAAiAAAAAAAAAAKAACUdgAAlHYAAAAGAACVjwAAlY8AAAAIAACZPAAAmTwAAAABAACaSwAAmksAAAAFAACeOgAAnjoAAAAKAACeowAAnqMAAAACAACfZAAAn2QAAAAHAACfkgAAn5IAAAADAACfpAAAn6QAAAAEAACfpQAAn6UAAAAJAAAAAAAAACgAPgBmAJoAvgDoASQBOAF+AboAAgAA/+YEWQYnAAoAEgAAExAAISAREAAjIgATECEgERAhIFsBEAECAez+6/rs/v3IATkBNP7S/sEC6AGaAaX85v54/mEBigGB/ZcCcwKJAAABAAAAAAQ1Bi4ACQAAKQE1IREFNSURIQQ1/IgBW/6cAicBWqkEmGe0oPp7AAEAAAAABCYGJwAXAAApATUBPgE1NCYjIgc1NjMyFhUUAgcBFSEEGPxSAcK6fpSMz7y389Hym9j+nwLGqgHButl0hI2wx43iv5D+69b+pwQAAQAA/+YEGQYnACEAABMWMzI2NRAhIzUzIBE0ISIHNTYzMhYVEAUVHgEVFAAjIiePn8igu/5bgXsBdf7jo5CYy8bw/sqow/7T+tyHAQN7nYQBJqIBFP9uuVjPpf7QVwQSyZbR/wBSAAACAAAAAARoBg0ACgASAAABIxEjESE1ATMRMyERNDcjBgcBBGjGvv0uAq3jxv58BAQOLf4zAZL+bgGSfwP8/CACiUVaJlH9TwABAAD/5gQhBg0AGAAANxYzMjYQJiMiBxEhFSERNjMyBBUUACEiJ7GcqaDEx71bmgL6/bxXLPUBEv7a/v3Zbu5mswEppA4DE63+SgX42uH+6kAAAAACAAD/5gRbBicAFgAiAAABJiMiAgMzNjMyEhUUACMiABEQACEyFwEUFjMyNjU0JiMiBgP6eYTJ9AIFbvHJ8P7r1+z+8wFhASClXv1Qo4eAoJeLhKQFRj7+ov7R1f762eP+3AFxAVMBmgHjLfwBmdq8lKCytAAAAAABAAAAAARNBg0ABgAACQEjASE1IQRN/aLLAkD8+gPvBcn6NwVgrQAAAwAA/+YESgYnABUAHwApAAABJDU0JDMyFhUQBRUEERQEIyIkNRAlATQmIyIGFRQXNgEEFRQWMzI2NTQBtv7rAQTKufD+3wFT/un6zf7+AUwBnIJvaJLz+P78/uGoh4OkAy+B9avXyqD+/osEev7aweXitAEohwF7aHh9YcJlZ/7qdNhwkI9r4QAAAAACAAD/5gRGBicAFwAjAAA3FjMyEhEGJwYjIgA1NAAzMgAREAAhIicTFBYzMjY1NCYjIga5gJTQ5QICZvHD/wABGN/nAQT+sP7Xo3FxoI16pqWHfaTSSgFIAS4CAsIBDNbkASX+lf6l/lP+MjUEHJy3p3en274AAAAAABAAxgABAAAAAAABAA8AAAABAAAAAAACAAcADwABAAAAAAADAA8AFgABAAAAAAAEAA8AJQABAAAAAAAFAAsANAABAAAAAAAGAA8APwABAAAAAAAKACsATgABAAAAAAALABMAeQADAAEECQABAB4AjAADAAEECQACAA4AqgADAAEECQADAB4AuAADAAEECQAEAB4A1gADAAEECQAFABYA9AADAAEECQAGAB4BCgADAAEECQAKAFYBKAADAAEECQALACYBfmZhbmdjaGFuLXNlY3JldFJlZ3VsYXJmYW5nY2hhbi1zZWNyZXRmYW5nY2hhbi1zZWNyZXRWZXJzaW9uIDEuMGZhbmdjaGFuLXNlY3JldEdlbmVyYXRlZCBieSBzdmcydHRmIGZyb20gRm9udGVsbG8gcHJvamVjdC5odHRwOi8vZm9udGVsbG8uY29tAGYAYQBuAGcAYwBoAGEAbgAtAHMAZQBjAHIAZQB0AFIAZQBnAHUAbABhAHIAZgBhAG4AZwBjAGgAYQBuAC0AcwBlAGMAcgBlAHQAZgBhAG4AZwBjAGgAYQBuAC0AcwBlAGMAcgBlAHQAVgBlAHIAcwBpAG8AbgAgADEALgAwAGYAYQBuAGcAYwBoAGEAbgAtAHMAZQBjAHIAZQB0AEcAZQBuAGUAcgBhAHQAZQBkACAAYgB5ACAAcwB2AGcAMgB0AHQAZgAgAGYAcgBvAG0AIABGAG8AbgB0AGUAbABsAG8AIABwAHIAbwBqAGUAYwB0AC4AaAB0AHQAcAA6AC8ALwBmAG8AbgB0AGUAbABsAG8ALgBjAG8AbQAAAAIAAAAAAAAAFAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAACwECAQMBBAEFAQYBBwEIAQkBCgELAQwAAAAAAAAAAAAAAAAAAAAA\' b = base64.b64decode(font_face) with open(\'58.ttf\',\'wb\') as f: f.write(b)

在网上搜索下载并安装字体处理软件FontCreator,用软件打开保存的解码文件58.ttf

现在我们可以得到解决问题的思路了:

1、获取自定义字体和正常字体的映射表,比如:9F92对应的数字是2,9EA3对应的是1。

2、把页面上的自定义字体替换成正常字体,这样就可以正常爬取了。

怎样来获取字体映射表呢?静态的还好,我们用FontCreator工具解析后,直接写死到字典中。但是如果字体映射关系是动态的呢?比如,我们刷新当前页面后,再来查看页面源码:

字体映射关系变了,这样的话,就只能请求一次页面,就获取一次映射关系,用第三方库fontTools来实现。

安装fontTools库,直接pip install fontTools

先来看下ttf文件中有哪些信息,直接打开ttf文件那当然看不了,把它转换成xml文件就可以查看了

from fontTools.ttLib import TTFont font = TTFont(\'58.ttf\') # 打开本地的ttf文件 font.saveXML(\'58.xml\') # 转换成xml

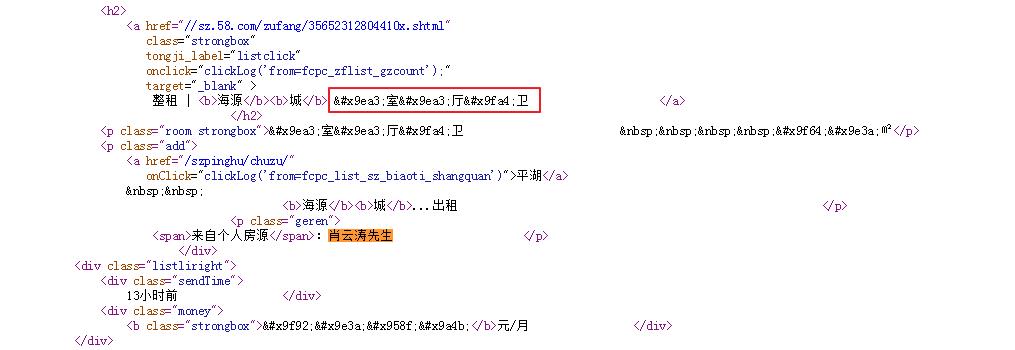

打开xml文件,可以看到类似html标签的文件结构:

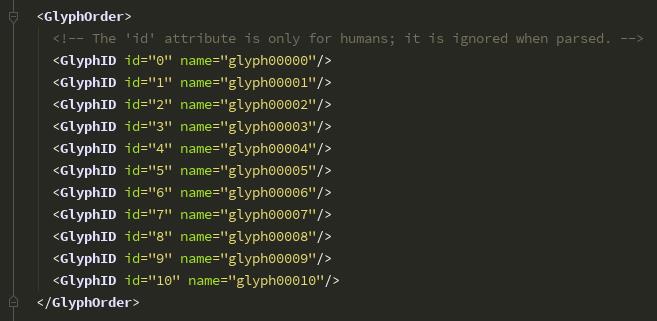

点开GlyphOrder标签,可以看到Id和name

点开glyf标签,看到的是name和一些坐标点,这些座标点就是描绘字体形状的,这里不需要关注这些坐标点。

点开cmap标签,是编码和name的对应关系

从这张图我们可以发现,glyph00001对应的是数字0,glyph00002对应的是数字1,以此类推......glyph00010对应的是数字9

用代码来获取编码和name的对应关系:

from fontTools.ttLib import TTFont font = TTFont(\'58.ttf\') #打开本地的ttf文件 bestcmap = font[\'cmap\'].getBestCmap() print(bestcmap)

输出如下:

{38006: \'glyph00006\', 38287: \'glyph00008\', 39228: \'glyph00001\', 39499: \'glyph00005\', 40506: \'glyph00010\', 40611: \'glyph00002\', 40804: \'glyph00007\', 40850: \'glyph00003\', 40868: \'glyph00004\', 40869: \'glyph00009\'}

输出的是一个字典,key是编码的int型

我们把这个字典转一下,变成编码和正常字体的映射关系:

import re from fontTools.ttLib import TTFont font = TTFont(\'58.ttf\') #打开本地的ttf文件 bestcmap = font[\'cmap\'].getBestCmap() newmap = dict() for key in bestcmap.keys(): value = int(re.search(r\'(\\d+)\', bestcmap[key]).group(1)) - 1 key = hex(key) newmap[key] = value print(newmap)

输出:

{\'0x9476\': 5, \'0x958f\': 7, \'0x993c\': 0, \'0x9a4b\': 4, \'0x9e3a\': 9, \'0x9ea3\': 1, \'0x9f64\': 6, \'0x9f92\': 2, \'0x9fa4\': 3, \'0x9fa5\': 8}

现在就可以把页面上的自定义字体替换成正常字体,再解析了,全部代码如下:

import requests import re import base64 import io from lxml import etree from fontTools.ttLib import TTFont url = r\'https://sz.58.com/chuzu/\' headers = { \'User-Agent\':\'Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)\' } response = requests.get(url=url,headers=headers) # 获取加密字符串 base64_str = re.search("base64,(.*?)\'\\)",response.text).group(1) b = base64.b64decode(base64_str) font = TTFont(io.BytesIO(b)) bestcmap = font[\'cmap\'].getBestCmap() newmap = dict() for key in bestcmap.keys(): value = int(re.search(r\'(\\d+)\', bestcmap[key]).group(1)) - 1 key = hex(key) newmap[key] = value # 把页面上自定义字体替换成正常字体 response_ = response.text for key,value in newmap.items(): key_ = key.replace(\'0x\',\'&#x\') + \';\' if key_ in response_: response_ = response_.replace(key_,str(value)) # 获取标题 rec = etree.HTML(response_) lis = rec.xpath(\'//ul[@class="listUl"]/li\') for li in lis: title = li.xpath(\'./div[@class="des"]/h2/a/text()\') if title: title = title[0] print(title)

以上是关于python解析字体反爬的主要内容,如果未能解决你的问题,请参考以下文章