Python Web Scraping Study -- the Beautiful Soup Library

Posted by 音译昌


Reference for learning Beautiful Soup (official documentation, Chinese edition): https://www.crummy.com/software/BeautifulSoup/bs4/doc.zh/#recursive

Before using Beautiful Soup, make sure it is installed (the package name is beautifulsoup4):

```
C:\Program Files (x86)\Python36-32\Scripts>pip install beautifulsoup4
Requirement already satisfied: beautifulsoup4 in c:\program files (x86)\python36-32\lib\site-packages (4.6.3)
```

Fetch the target page with Python's built-in urllib.request module:

```python
#!/usr/bin/python
# -*- coding: UTF-8 -*-
from bs4 import BeautifulSoup
import urllib.request
import time
from python.common.ConnectDataBase import ConnectionMyslq


# Fetch the HTML of a news section page
def getHtmlData(url):
    # Build the request
    request = urllib.request.Request(url)
    # Send it and read the raw response body
    response = urllib.request.urlopen(request)
    data = response.read()
    # The target site serves GBK-encoded pages
    data = data.decode('gbk')
    return data
```
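`getHtmlData` hardcodes GBK and sets no timeout. The sketch below shows a somewhat more defensive fetch. It assumes the server declares its charset in the `Content-Type` header. Pages that declare it only in a `<meta>` tag fall back to `default_charset`, which is GBK here to match the original. `fetch_html` and `charset_from_headers` are hypothetical names, and the User-Agent string is a placeholder.

```python
import urllib.request
from email.message import Message


def charset_from_headers(headers, default='gbk'):
    """Return the charset declared in a Content-Type header, or `default`."""
    # http.client.HTTPMessage subclasses email.message.Message,
    # so get_content_charset() also works on response.headers.
    return headers.get_content_charset() or default


def fetch_html(url, timeout=10, default_charset='gbk'):
    """Fetch `url` and decode the body using the server-declared charset."""
    request = urllib.request.Request(
        url,
        # Placeholder User-Agent; some sites reject urllib's default one.
        headers={'User-Agent': 'Mozilla/5.0 (compatible; study-crawler)'},
    )
    # The `with` block closes the connection even if reading fails.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        raw = response.read()
        charset = charset_from_headers(response.headers, default_charset)
    # errors='replace' keeps one bad byte from aborting the whole page.
    return raw.decode(charset, errors='replace')


# Offline check of the header logic, using a hand-built header object.
headers = Message()
headers['Content-Type'] = 'text/html; charset=UTF-8'
print(charset_from_headers(headers))
```

With this helper, `data = fetch_html('http://news.163.com/domestic/')` could stand in for `getHtmlData`. One difference: undecodable bytes are replaced instead of raising an error.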

Once the page HTML has been fetched, pass it to Beautiful Soup to parse the page and extract the data you need:

```python
# Pick out the needed content with a CSS selector (select)
def soupData2(data):
    # Name the parser explicitly; without it bs4 warns and guesses one
    soup = BeautifulSoup(data, 'html.parser')
    # Get the "today's picks" links (CSS selector)
    infostag = soup.select('.today_news > ul > li > a')
    infos = list()
    for child in infostag:
        info = {}
        info['title'] = child.get_text()
        info['href'] = child.get('href')
        info['time'] = time.strftime('%Y-%m-%d', time.localtime())
        # detailData = getHtmlData(child.get('href'))
        # content = soupDetial(detailData)
        # info['content'] = content
        # Call the database helper to store the record
        insertInfo(info)
        # infos.append(info)
    # print(infos)
```
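You can check what `soup.select('.today_news > ul > li > a')` matches without hitting the live site. The sketch below runs the selector against made-up markup that only mimics the structure it expects, and `extract_links` is a hypothetical helper. A link matches only if it is a direct child of an `li`, inside a `ul` directly under an element with class `today_news`, so the sidebar link is skipped. `urljoin` resolves relative `href` values against the page URL. The sketch requires beautifulsoup4.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

# Invented markup that imitates the structure the selector targets.
SAMPLE_HTML = """
<div class="today_news">
  <ul>
    <li><a href="http://news.163.com/a.html"> First headline </a></li>
    <li><a href="/b.html">Second headline</a></li>
  </ul>
</div>
<div class="sidebar">
  <ul><li><a href="/skip.html">Not selected</a></li></ul>
</div>
"""


def extract_links(html, base_url, selector='.today_news > ul > li > a'):
    """Return a list of {'title', 'href'} dicts for elements matching `selector`."""
    soup = BeautifulSoup(html, 'html.parser')
    links = []
    for a in soup.select(selector):
        links.append({
            # strip=True trims surrounding whitespace from the link text
            'title': a.get_text(strip=True),
            # urljoin turns relative hrefs into absolute URLs
            'href': urljoin(base_url, a.get('href', '')),
        })
    return links


links = extract_links(SAMPLE_HTML, 'http://news.163.com/domestic/')
for link in links:
    print(link['title'], link['href'])
```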

---

Next comes the database part. It requires pymysql to be installed:

pymysql reference: https://github.com/PyMySQL/PyMySQL

```
C:\Program Files (x86)\Python36-32\Scripts>python -m pip install PyMySQL
Requirement already satisfied: PyMySQL in c:\program files (x86)\python36-32\lib\site-packages\pymysql-0.7.4-py3.6.egg (0.7.4)
```
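You can also confirm both packages from Python instead of re-running pip. The sketch below uses `importlib.metadata`, which has been in the standard library since Python 3.8, so it will not run on the Python 3.6 install shown above. `installed_versions` is a hypothetical helper name.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages=('beautifulsoup4', 'PyMySQL')):
    """Map each distribution name to its installed version string, or None."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            # Not installed in the current environment
            result[name] = None
    return result


print(installed_versions())
```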

Import the database module:

```python
# Import pymysql
import pymysql
```

Create a database connection class with the corresponding database methods:

```python
'''
Database connection
'''
class ConnectionMyslq(object):
    def __init__(self, ip, user_name, passwd, db, port, char='utf8'):
        self.ip = ip
        self.port = port
        self.username = user_name
        self.passwd = passwd
        self.mysqldb = db
        self.char = char
        self.MySQL_db = pymysql.connect(
            host=self.ip,
            user=self.username,
            password=self.passwd,
            database=self.mysqldb,
            port=self.port,
            charset=self.char)

    # Run a query and return all rows; closes the connection afterwards
    def findList(self, sql):
        cursor = self.MySQL_db.cursor()
        # Default so a failed query returns an empty result instead of raising NameError
        results = ()
        try:
            # Execute the SQL statement
            cursor.execute(sql)
            # Fetch all rows
            results = cursor.fetchall()
        except Exception as e:
            print("Error: unable to fetch data")
            print(e)
        finally:
            # Close exactly once, whether or not the query succeeded
            self.MySQL_db.close()
        return results

    # Run an INSERT/UPDATE/DELETE statement and commit; closes the connection afterwards
    def exe_sql(self, sql, args=None):
        cursor = self.MySQL_db.cursor()
        try:
            # With args, pymysql substitutes and escapes the %s placeholders
            cursor.execute(sql, args)
            self.MySQL_db.commit()
        except Exception as e:
            print("Error: unable to execute SQL")
            print(e)
            self.MySQL_db.rollback()
        finally:
            self.MySQL_db.close()
```

Write the functions that perform the database operations:

```python
# Store one record
def insertInfo(info):
    conn = ConnectionMyslq("localhost", "root", "Gepoint", "pythondb", 3306)
    # Let the driver fill in the placeholders so quotes in titles are escaped
    sql = 'insert into news_info(title, link, time) values(%s, %s, %s)'
    conn.exe_sql(sql, (info['title'], info['href'], info['time']))


# Query all records
def findAll():
    conn = ConnectionMyslq("localhost", "root", "Gepoint", "pythondb", 3306)
    sql = 'select * from news_info'
    result = conn.findList(sql)
    print(result)
```
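The safer way to insert scraped values is to pass them as a separate argument to `cursor.execute` and let the driver fill in the placeholders, which escapes any quotes. Formatting them into the SQL string with Python's `%` operator breaks as soon as a title contains `"`, and it invites SQL injection. pymysql and Python's built-in sqlite3 both follow the DB-API 2.0 interface (PEP 249), so you can try the pattern locally without a MySQL server. The sketch below swaps in sqlite3 with an in-memory database. Note that sqlite3 uses `?` placeholders where pymysql uses `%s`. The `news_info` schema is also an assumption, since the original does not show it, and `insert_info`/`find_all` are hypothetical names.

```python
import sqlite3
from contextlib import closing


def insert_info(conn, info):
    """Insert one scraped record using placeholders instead of string formatting."""
    # sqlite3 uses '?' placeholders; with pymysql the equivalent is '%s'.
    sql = 'insert into news_info(title, link, time) values (?, ?, ?)'
    with conn:  # commits on success, rolls back if an exception is raised
        conn.execute(sql, (info['title'], info['href'], info['time']))


def find_all(conn):
    """Return every stored row as a list of tuples."""
    with closing(conn.cursor()) as cursor:
        cursor.execute('select title, link, time from news_info')
        return cursor.fetchall()


# In-memory database standing in for the MySQL table (assumed schema).
conn = sqlite3.connect(':memory:')
conn.execute('create table news_info (title text, link text, time text)')

# Sample record; the double quotes in the title would break "%s" string formatting.
insert_info(conn, {'title': 'He said "hello"',
                   'href': 'http://news.163.com/a.html',
                   'time': '2018-09-01'})
rows = find_all(conn)
conn.close()
print(rows)
```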

Finally, run the functions:

```python
if __name__ == "__main__":
    # Data endpoint for the middle column: http://temp.163.com/special/00804KVA/cm_guonei_02.js?callback=data01_callback
    # NetEase "today's picks" news
    data = getHtmlData(url='http://news.163.com/domestic/')
    soupData2(data)
    findAll()
```
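The commented-out `cm_guonei_02.js?callback=data01_callback` URL appears to be a JSONP-style endpoint. Such endpoints deliver data wrapped in a JavaScript function call rather than as HTML. The sketch below unwraps such a response, assuming the payload inside the parentheses is valid JSON. The sample string is invented, not real output from that endpoint, and `parse_jsonp` is a hypothetical name.

```python
import json
import re

# Matches  callback_name( ... )  with an optional trailing semicolon.
JSONP_RE = re.compile(r'^\s*[\w$.]+\s*\((.*)\)\s*;?\s*$', re.DOTALL)


def parse_jsonp(text):
    """Strip a wrapper such as data01_callback(...) and parse the JSON inside."""
    match = JSONP_RE.match(text)
    if match is None:
        raise ValueError('not a JSONP response')
    return json.loads(match.group(1))


# Invented sample in the shape of a JSONP response.
sample = 'data01_callback([{"title": "headline", "url": "http://news.163.com/a.html"}]);'
items = parse_jsonp(sample)
print(items[0]['title'])
```

If the real endpoint returns JavaScript that is not strict JSON (for example, single-quoted strings), `json.loads` will reject it and a more lenient parser would be needed.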

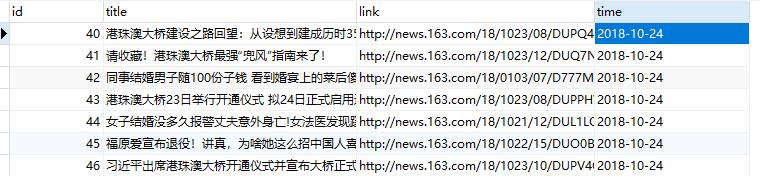

执行结果:
