Scraping Gitee's Trending Open-Source Projects with Python and BeautifulSoup

Posted wyl-0120

Preface: This article was compiled by the editors of 小常识网 (cha138.com) and covers scraping Gitee's trending open-source projects with Python and BeautifulSoup.

I. Installation

1. requests: used to fetch pages and process the response content. `requests.get()` returns a `Response` object.

pip install requests

2. BeautifulSoup: a flexible, efficient, and convenient HTML parsing library that supports several parsers.

pip install beautifulsoup4

3. pymongo: the Python driver for working with MongoDB.

pip install pymongo

4. Install MongoDB.
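Note that the pip package `beautifulsoup4` is imported under the name `bs4`. A minimal sketch for confirming that the three libraries are importable before running the crawler; the helper name `missing_modules` is hypothetical, and it only checks that the modules can be found, not that a MongoDB server is running.

```python
import importlib.util

# Import names of the pip packages installed above
# (the beautifulsoup4 package is imported as "bs4").
REQUIRED = ["requests", "bs4", "pymongo"]


def missing_modules(names):
    """Return the names in `names` that cannot be imported (hypothetical helper)."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are installed.")
```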

II. Analyzing the Page and Its Source

1. Identify the target: first decide which section of which page you want to scrape.

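2. Analyze the target: once the target is chosen, work out the URL format and what each query parameter means, then inspect the page source to determine how the data is laid out.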

3. Write the crawler and run it.
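For Gitee's trending page, the URL analysis in step 2 comes down to `https://gitee.com/explore/starred` plus a `lang` query parameter that selects the language. A minimal sketch of building that URL with the standard library's `urllib.parse.urlencode`, which also percent-encodes values that are not URL-safe; the helper name `trending_url` is an illustrative assumption.

```python
from urllib.parse import urlencode

DOMAIN = "https://gitee.com"
PATH = "/explore/starred"


def trending_url(language):
    """Build the trending-projects URL for one language (hypothetical helper)."""
    # urlencode percent-encodes unsafe characters, e.g. '+' becomes '%2B'
    return DOMAIN + PATH + "?" + urlencode({"lang": language})


if __name__ == "__main__":
    # The two languages mentioned in the crawler below
    for lang in ["python", "java"]:
        print(trending_url(lang))
```

Building the query string this way, rather than with `%` formatting, keeps language names that contain characters such as `+` or `#` from corrupting the URL.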

III. Writing the Code

```python
# -*- coding: utf-8 -*-
# __author__ : "初一丶"  WeChat official account: 程序员共成长
# __time__   : 2018/8/22 18:51
# __file__   : spider_mayun.py

# Import the required libraries
import requests
from bs4 import BeautifulSoup
import pymongo

"""
Analyzing the page URL shows that the language of the trending list
is selected by the `lang` query parameter.
"""
# language = 'java'
language = 'python'
domain = 'https://gitee.com'
uri = '/explore/starred?lang=%s' % language
url = domain + uri

# User agent string
user_agent = ('Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) '
              'AppleWebKit/537.36 (KHTML, like Gecko) '
              'Chrome/67.0.3396.99 Safari/537.36')
# Build the request headers (the HTTP header name is "User-Agent", with a hyphen)
header = {'User-Agent': user_agent}

# Fetch the page source (timeout keeps the script from hanging forever)
html = requests.get(url, headers=header, timeout=10).text
# Create the BeautifulSoup object, naming the parser explicitly
soup = BeautifulSoup(html, 'html.parser')

# Trending categories: the data-tab attribute distinguishes
# today's trending list from this week's
hot_type = ['today-trending', 'week-trending']

# List that collects the trending projects
hot_gitee = []
for i in hot_type:
    # Query the entries under this trending tab
    divs = soup.find_all('div', attrs={'data-tab': i})
    divs = divs[0].select('div.row')
    for div in divs:
        gitee = {}
        a_content = div.select('div.sixteen > h3 > a')
        div_content = div.select('div.project-desc')
        # Project description (None when the project has none)
        script = div_content[0].string
        # The title attribute has the form "author/project"
        title = a_content[0]['title']
        arr = title.split('/')
        # Author name
        author_name = arr[0]
        # Project name
        project_name = arr[1]
        # Project URL
        href = domain + a_content[0]['href']

        # Open the project's own page
        child_page = requests.get(href, headers=header, timeout=10).text
        child_soup = BeautifulSoup(child_page, 'html.parser')
        child_div = child_soup.find('div', class_='ui small secondary pointing menu')
        """
        <div class="ui small secondary pointing menu">
            <a class="item active" data-type="http" data-url="https://gitee.com/dlg_center/cms.git">HTTPS</a>
            <a class="item" data-type="ssh" data-url="git@gitee.com:dlg_center/cms.git">SSH</a>
        </div>
        """
        a_arr = child_div.find_all('a')
        # git HTTPS clone URL
        http_url = a_arr[0]['data-url']
        # git SSH clone URL
        ssh_url = a_arr[1]['data-url']

        gitee['project_name'] = project_name
        gitee['author_name'] = author_name
        gitee['href'] = href
        gitee['script'] = script
        gitee['http_url'] = http_url
        gitee['ssh_url'] = ssh_url
        gitee['hot_type'] = i
        # Add the project to the result list
        hot_gitee.append(gitee)

print(hot_gitee)

# MongoDB connection parameters
HOST, PORT, DB, TABLE = '127.0.0.1', 27017, 'spider', 'gitee'
# Create the connection
client = pymongo.MongoClient(host=HOST, port=PORT)
# Select the database and collection
db = client[DB]
tables = db[TABLE]
# Insert into MongoDB (insert_many raises an error on an empty list)
if hot_gitee:
    tables.insert_many(hot_gitee)
```

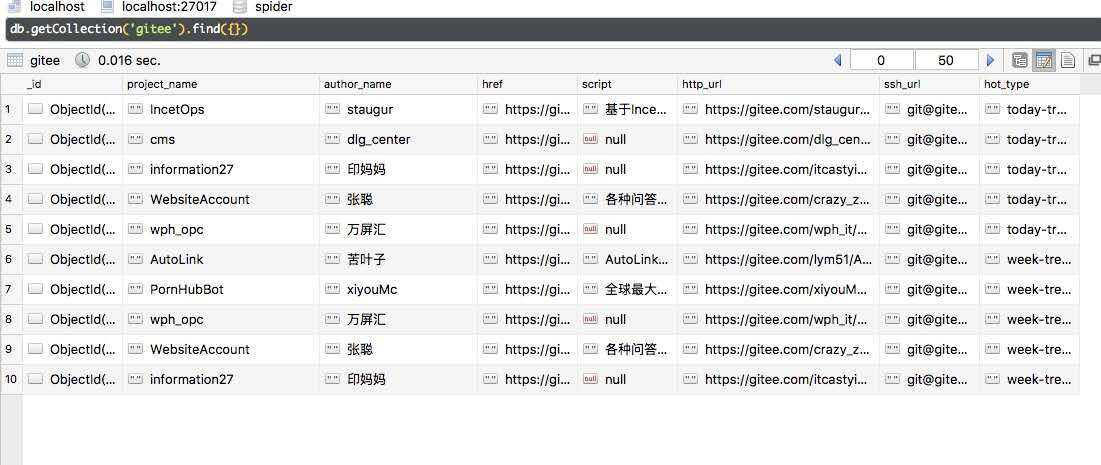
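Because the CSS selectors are tied to Gitee's markup as it was when this article was written (2018), it helps to test the parsing logic offline before sending any requests. A minimal sketch, assuming the simplified markup in `SAMPLE_LIST` and `SAMPLE_MENU`: those snippets are hypothetical, reconstructed only from the crawler's selectors and the menu HTML quoted in its docstring, and `parse_tab` and `parse_clone_urls` are illustrative helper names. Unlike the crawler, `parse_clone_urls` looks up clone URLs by their `data-type` attribute instead of by position.

```python
from bs4 import BeautifulSoup

# Hypothetical sample markup, reconstructed from the crawler's selectors;
# it is not a copy of Gitee's real page.
SAMPLE_LIST = """
<div class="ui tab active" data-tab="today-trending">
  <div class="row">
    <div class="sixteen wide column">
      <h3><a title="staugur/IncetOps" href="/staugur/IncetOps">IncetOps</a></h3>
    </div>
    <div class="project-desc">An example description</div>
  </div>
</div>
"""

SAMPLE_MENU = """
<div class="ui small secondary pointing menu">
  <a class="item active" data-type="http" data-url="https://gitee.com/dlg_center/cms.git">HTTPS</a>
  <a class="item" data-type="ssh" data-url="git@gitee.com:dlg_center/cms.git">SSH</a>
</div>
"""


def parse_tab(html, tab, domain="https://gitee.com"):
    """Extract project entries from one trending tab (hypothetical helper)."""
    soup = BeautifulSoup(html, "html.parser")
    container = soup.find("div", attrs={"data-tab": tab})
    if container is None:  # tab not found: return nothing instead of raising
        return []
    projects = []
    for row in container.select("div.row"):
        link = row.select_one("div.sixteen > h3 > a")
        desc = row.select_one("div.project-desc")
        if link is None:
            continue
        # The title attribute has the form "author/project"
        author_name, project_name = link["title"].split("/", 1)
        projects.append({
            "project_name": project_name,
            "author_name": author_name,
            "href": domain + link["href"],
            "script": desc.string if desc is not None else None,
            "hot_type": tab,
        })
    return projects


def parse_clone_urls(html):
    """Map each data-type in the clone menu ('http', 'ssh') to its data-url."""
    soup = BeautifulSoup(html, "html.parser")
    menu = soup.find("div", class_="ui small secondary pointing menu")
    if menu is None:
        return {}
    links = menu.find_all("a", attrs={"data-type": True})
    return {a["data-type"]: a["data-url"] for a in links}


if __name__ == "__main__":
    print(parse_tab(SAMPLE_LIST, "today-trending"))
    print(parse_clone_urls(SAMPLE_MENU))
```

In the crawler, `parse_tab(html, i)` could stand in for the inner parsing loop and `parse_clone_urls(child_page)` for the `a_arr[0]` / `a_arr[1]` lookups, so reordering the menu links would not silently swap the HTTPS and SSH URLs.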

IV. Results

```
[{
    'project_name': 'IncetOps',
    'author_name': 'staugur',
    'href': 'https://gitee.com/staugur/IncetOps',
    'script': '基于Inception,一个审计、执行、回滚、统计sql的开源系统',
    'http_url': 'https://gitee.com/staugur/IncetOps.git',
    'ssh_url': 'git@gitee.com:staugur/IncetOps.git',
    'hot_type': 'today-trending'
}, {
    'project_name': 'cms',
    'author_name': 'dlg_center',
    'href': 'https://gitee.com/dlg_center/cms',
    'script': None,
    'http_url': 'https://gitee.com/dlg_center/cms.git',
    'ssh_url': 'git@gitee.com:dlg_center/cms.git',
    'hot_type': 'today-trending'
}, {
    'project_name': 'WebsiteAccount',
    'author_name': '张聪',
    'href': 'https://gitee.com/crazy_zhangcong/WebsiteAccount',
    'script': '各种问答平台账号注册',
    'http_url': 'https://gitee.com/crazy_zhangcong/WebsiteAccount.git',
    'ssh_url': 'git@gitee.com:crazy_zhangcong/WebsiteAccount.git',
    'hot_type': 'today-trending'
}, {
    'project_name': 'chain',
    'author_name': '何全',
    'href': 'https://gitee.com/hequan2020/chain',
    'script': 'linux 云主机 管理系统,包含 CMDB,webssh登录、命令执行、异步执行shell/python/yml等。持续更...',
    'http_url': 'https://gitee.com/hequan2020/chain.git',
    'ssh_url': 'git@gitee.com:hequan2020/chain.git',
    'hot_type': 'today-trending'
}, {
    'project_name': 'Lepus',
    'author_name': '茹憶。',
    'href': 'https://gitee.com/ruzuojun/Lepus',
    'script': '简洁、直观、强大的开源企业级数据库监控系统,MySQL/Oracle/MongoDB/Redis一站式监控,让数据库监控更简...',
    'http_url': 'https://gitee.com/ruzuojun/Lepus.git',
    'ssh_url': 'git@gitee.com:ruzuojun/Lepus.git',
    'hot_type': 'today-trending'
}, {
    'project_name': 'AutoLink',
    'author_name': '苦叶子',
    'href': 'https://gitee.com/lym51/AutoLink',
    'script': 'AutoLink是一个开源Web IDE自动化测试集成解决方案',
    'http_url': 'https://gitee.com/lym51/AutoLink.git',
    'ssh_url': 'git@gitee.com:lym51/AutoLink.git',
    'hot_type': 'week-trending'
}, {
    'project_name': 'PornHubBot',
    'author_name': 'xiyouMc',
    'href': 'https://gitee.com/xiyouMc/pornhubbot',
    'script': '全球最大成人网站PornHub爬虫 (Scrapy、MongoDB) 一天500w的数据',
    'http_url': 'https://gitee.com/xiyouMc/pornhubbot.git',
    'ssh_url': 'git@gitee.com:xiyouMc/pornhubbot.git',
    'hot_type': 'week-trending'
}, {
    'project_name': 'wph_opc',
    'author_name': '万屏汇',
    'href': 'https://gitee.com/wph_it/wph_opc',
    'script': None,
    'http_url': 'https://gitee.com/wph_it/wph_opc.git',
    'ssh_url': 'git@gitee.com:wph_it/wph_opc.git',
    'hot_type': 'week-trending'
}, {
    'project_name': 'WebsiteAccount',
    'author_name': '张聪',
    'href': 'https://gitee.com/crazy_zhangcong/WebsiteAccount',
    'script': '各种问答平台账号注册',
    'http_url': 'https://gitee.com/crazy_zhangcong/WebsiteAccount.git',
    'ssh_url': 'git@gitee.com:crazy_zhangcong/WebsiteAccount.git',
    'hot_type': 'week-trending'
}, {
    'project_name': 'information27',
    'author_name': '印妈妈',
    'href': 'https://gitee.com/itcastyinqiaoyin/information27',
    'script': None,
    'http_url': 'https://gitee.com/itcastyinqiaoyin/information27.git',
    'ssh_url': 'git@gitee.com:itcastyinqiaoyin/information27.git',
    'hot_type': 'week-trending'
}]
```
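In this output `WebsiteAccount` appears twice, once under `today-trending` and once under `week-trending`, so `insert_many` stores two documents for the same project. A minimal sketch of merging such entries before saving them, assuming `href` identifies a project; the helper `merge_by_href` and the `hot_types` list field are hypothetical additions, not part of the original script.

```python
def merge_by_href(projects):
    """Merge entries that share an href, collecting their hot_type values.

    Hypothetical helper: the original script stores one document per
    (project, trending tab) pair instead.
    """
    merged = {}
    for item in projects:
        key = item["href"]
        if key not in merged:
            # Copy every field except hot_type, which becomes a list
            entry = {k: v for k, v in item.items() if k != "hot_type"}
            entry["hot_types"] = []
            merged[key] = entry
        if item["hot_type"] not in merged[key]["hot_types"]:
            merged[key]["hot_types"].append(item["hot_type"])
    # Dicts keep insertion order (Python 3.7+), so first-seen order is preserved
    return list(merged.values())


if __name__ == "__main__":
    sample = [
        {"project_name": "WebsiteAccount",
         "href": "https://gitee.com/crazy_zhangcong/WebsiteAccount",
         "hot_type": "today-trending"},
        {"project_name": "AutoLink",
         "href": "https://gitee.com/lym51/AutoLink",
         "hot_type": "week-trending"},
        {"project_name": "WebsiteAccount",
         "href": "https://gitee.com/crazy_zhangcong/WebsiteAccount",
         "hot_type": "week-trending"},
    ]
    for project in merge_by_href(sample):
        print(project["project_name"], project["hot_types"])
```

The merged list could then be passed to `insert_many` as before. To keep repeated runs from piling up copies in MongoDB, pymongo's `Collection.update_one(filter, update, upsert=True)` with a filter on `href` is one option.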
