Running Spark fails with env: ‘python’: No such file or directory

Posted by trp



Starting pyspark prints the following message:

hadoop@MS-YFYCEFQFDMXS:/home/trp$ cd /usr/local/spark
hadoop@MS-YFYCEFQFDMXS:/usr/local/spark$ ./bin/pyspark
env: ‘python’: No such file or directory

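Cause: the Python environment variables for Spark are not configured, so the launcher falls back to a command named python, which env cannot find on this system.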
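A quick way to confirm this cause is to check which interpreter names resolve on the current PATH. The following is a minimal sketch for a POSIX shell; `find_interpreter` is a helper name made up for illustration and is not part of Spark.

```shell
# Report which Python interpreter names resolve on the current PATH.
# find_interpreter is a hypothetical helper, not part of Spark.
find_interpreter() {
    # command -v prints what the name resolves to and returns non-zero if nothing is found
    command -v "$1" 2>/dev/null
}

for name in python python3; do
    if resolved=$(find_interpreter "$name"); then
        echo "$name -> $resolved"
    else
        echo "$name -> not found on PATH"
    fi
done
```

If `python` is reported as not found while `python3` resolves, the system matches the situation described here.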

Steps to fix:

1. Add the Python-related environment variables

hadoop@MS-YFYCEFQFDMXS:/usr/local/spark$ nano ~/.bashrc

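Append the following lines at the end of the file. Note: py4j-0.10.9-src.zip is located in /usr/local/spark/python/lib, and the version in the file name must match the one in your installation.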

export PYTHONPATH=$SPARK_HOME/python:$SPARK_HOME/python/lib/py4j-0.10.9-src.zip:$PYTHONPATH

export PYSPARK_PYTHON=python3
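The PYTHONPATH line above relies on SPARK_HOME already being defined and hard-codes the py4j version. As a hedged sketch, the helper below (the name `print_pyspark_exports` is hypothetical) locates the py4j archive by pattern and prints matching export lines, including an explicit SPARK_HOME. It only prints the lines; it does not edit ~/.bashrc.

```shell
# Print export lines for a given Spark directory, locating the py4j archive
# by pattern instead of hard-coding its version.
# print_pyspark_exports is a hypothetical helper; it prints only and edits nothing.
print_pyspark_exports() {
    spark_home=$1
    py4j_zip=
    # Take the first file matching py4j-*-src.zip under python/lib
    for candidate in "$spark_home"/python/lib/py4j-*-src.zip; do
        if [ -e "$candidate" ]; then
            py4j_zip=$candidate
            break
        fi
    done
    if [ -z "$py4j_zip" ]; then
        echo "no py4j-*-src.zip under $spark_home/python/lib" >&2
        return 1
    fi
    echo "export SPARK_HOME=$spark_home"
    echo "export PYTHONPATH=\$SPARK_HOME/python:\$SPARK_HOME/python/lib/$(basename "$py4j_zip"):\$PYTHONPATH"
    echo "export PYSPARK_PYTHON=python3"
}

# /usr/local/spark is the install directory used in this post
print_pyspark_exports /usr/local/spark || true
```

The printed lines can then be pasted into ~/.bashrc in place of the hand-written ones.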

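After editing, press Ctrl+X.

At the prompt "save modified buffer ...?", answer yes.

At the next prompt, "file name to write: ***.launch", press Ctrl+T.

On the following screen, use the arrow keys to select the file name to save to,

then press Enter to save.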
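As an alternative to editing in nano, the same lines can be appended from the shell. This is a sketch, not the author's method: `append_once` is a hypothetical helper that adds a line only if the file does not already contain it, so running it twice does not duplicate entries. It is demonstrated on a scratch file here.

```shell
# append_once is a hypothetical helper: add a line to a file only if it is not already there.
append_once() {
    file=$1
    line=$2
    # -x matches the whole line, -F treats the pattern as a fixed string,
    # and -- protects against lines that begin with a dash
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Demonstrated on a scratch file; point rc_file at ~/.bashrc to apply it for real.
rc_file=$(mktemp)
append_once "$rc_file" 'export PYSPARK_PYTHON=python3'
append_once "$rc_file" 'export PYSPARK_PYTHON=python3'   # second call adds nothing
cat "$rc_file"
rm -f "$rc_file"
```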

2. Apply the environment variables

hadoop@MS-YFYCEFQFDMXS:/usr/local/spark$ source ~/.bashrc
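Before launching pyspark again, it can help to confirm that the new settings are visible in the current shell. The sketch below assumes a POSIX shell; `check_pyspark_env` is a hypothetical helper that checks whether PYSPARK_PYTHON resolves on PATH and whether each PYTHONPATH entry exists on disk.

```shell
# check_pyspark_env is a hypothetical helper, not part of Spark.
check_pyspark_env() {
    rc=0
    # PYSPARK_PYTHON must name an interpreter that resolves on PATH
    if [ -z "${PYSPARK_PYTHON:-}" ]; then
        echo "PYSPARK_PYTHON is not set"
        rc=1
    elif ! command -v "$PYSPARK_PYTHON" >/dev/null 2>&1; then
        echo "PYSPARK_PYTHON=$PYSPARK_PYTHON does not resolve on PATH"
        rc=1
    else
        echo "PYSPARK_PYTHON=$PYSPARK_PYTHON resolves on PATH"
    fi
    # Every non-empty PYTHONPATH entry should exist on disk
    old_ifs=$IFS
    IFS=:
    for entry in ${PYTHONPATH:-}; do
        if [ -n "$entry" ] && [ ! -e "$entry" ]; then
            echo "PYTHONPATH entry missing: $entry"
            rc=1
        fi
    done
    IFS=$old_ifs
    return "$rc"
}

check_pyspark_env || true
```

A reported missing PYTHONPATH entry usually points to an unset SPARK_HOME or a py4j file name that does not match the installed version.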

3. Run pyspark

hadoop@MS-YFYCEFQFDMXS:/usr/local/spark$ ./bin/pyspark
Python 3.8.2 (default, Mar 13 2020, 10:14:16)
[GCC 9.3.0] on linux
Type "help", "copyright", "credits" or "license" for more information.
2020-07-19 21:35:40,464 WARN util.Utils: Your hostname, MS-YFYCEFQFDMXS resolves to a loopback address: 127.0.1.1; using 192.168.13.139 instead (on interface wifi0)
2020-07-19 21:35:40,466 WARN util.Utils: Set SPARK_LOCAL_IP if you need to bind to another address
WARNING: An illegal reflective access operation has occurred
WARNING: Illegal reflective access by org.apache.spark.unsafe.Platform (file:/usr/local/spark/jars/spark-unsafe_2.12-3.0.0.jar) to constructor java.nio.DirectByteBuffer(long,int)
WARNING: Please consider reporting this to the maintainers of org.apache.spark.unsafe.Platform
WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations
WARNING: All illegal access operations will be denied in a future release
2020-07-19 21:35:41,865 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 3.0.0
      /_/

Using Python version 3.8.2 (default, Mar 13 2020 10:14:16)
SparkSession available as 'spark'.
>>>

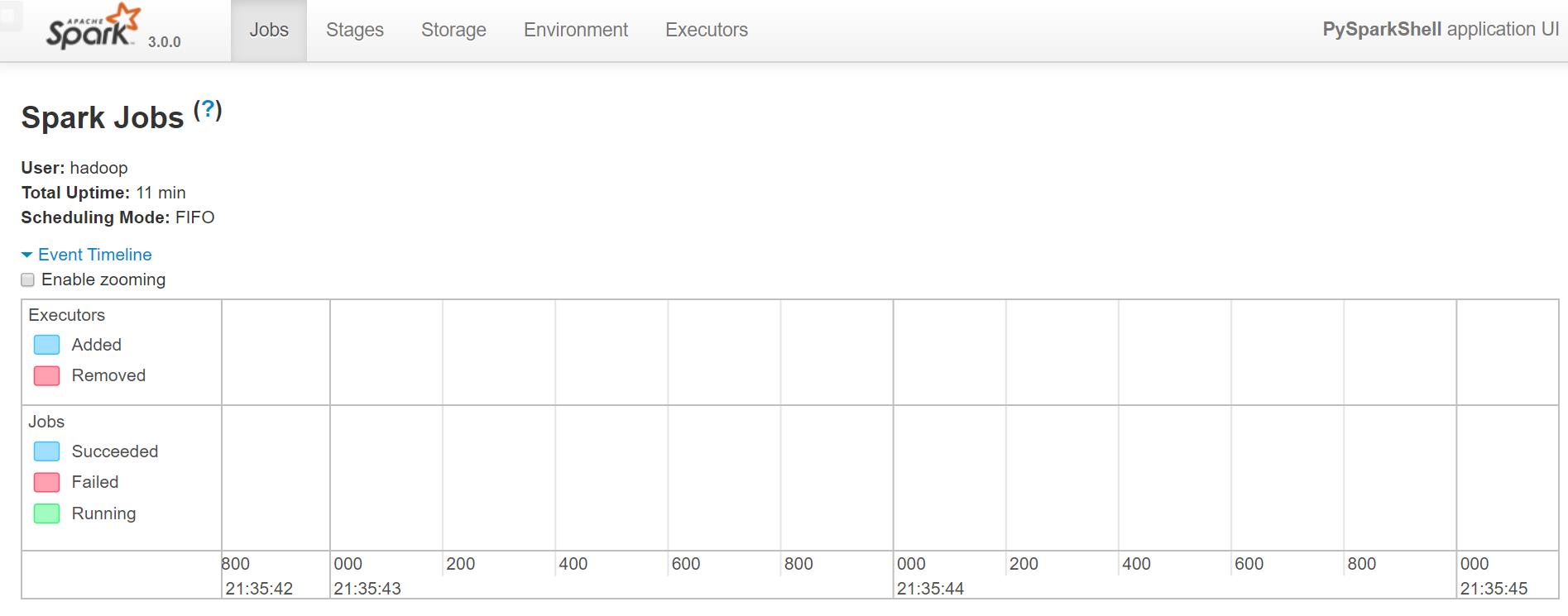

View the web monitoring page
