Python Data Mining

Posted 本心从未变


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It introduces the basics of data mining with Python, and we hope you find it useful.

Data mining: the process of semi-automatically analyzing large databases to find useful patterns in them.

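Like machine learning and statistical analysis, data mining tries to find rules or patterns in data. The difference is that it works on large volumes of data stored on disk; in other words, it discovers knowledge from databases.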

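The first step in data mining is usually to create a dataset that describes some aspect of the real world. A dataset consists mainly of (1) samples, which represent real-world objects, and (2) features, which describe the samples in the dataset.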
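As a minimal illustration (hypothetical toy data, not taken from the article), a dataset can be held as a table in which each row is a sample and each column is a feature. Here the samples are shopping baskets and the features record whether each product was bought:

```python
# Hypothetical toy dataset for illustration only.
# Each row is a sample (one customer's basket);
# each column is a feature (1 if that product was bought, else 0).
features = ["bread", "milk", "cheese", "apples", "bananas"]
dataset = [
    [1, 0, 0, 1, 1],
    [1, 1, 0, 1, 0],
    [0, 0, 1, 0, 1],
    [1, 1, 0, 0, 0],
]

n_samples = len(dataset)
n_features = len(features)
print(n_samples, n_features)  # 4 5

# Feature values of the first sample, labelled by feature name
first_sample = {name: value for name, value in zip(features, dataset[0])}
print(first_sample)
```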

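The next step is tuning the algorithm. Every data mining algorithm has parameters, either built into the algorithm itself or supplied by the user. These parameters affect the specific decisions the algorithm makes.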
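To make the effect of a parameter concrete, here is a small sketch that uses a minimum-support threshold (`min_support`, the same kind of user-supplied parameter the movie example later in this article sets to 50) on hypothetical baskets. The helper `frequent_items` is illustrative only. Raising the threshold changes which items the algorithm keeps:

```python
from collections import Counter

# Hypothetical baskets for illustration only
baskets = [
    {"bread", "apples", "bananas"},
    {"bread", "milk", "apples"},
    {"cheese", "bananas"},
    {"bread", "milk"},
]


def frequent_items(baskets, min_support):
    """Return the items that appear in at least min_support baskets."""
    counts = Counter(item for basket in baskets for item in basket)
    return {item for item, count in counts.items() if count >= min_support}


low = sorted(frequent_items(baskets, min_support=2))
high = sorted(frequent_items(baskets, min_support=3))
print(low)   # ['apples', 'bananas', 'bread', 'milk']
print(high)  # ['bread']
```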

There are many ways to measure how good a rule is; the most common are support and confidence.

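Support is the number of times a rule holds in the dataset; expressed as a fraction of all samples, it measures the proportion of cases in which the rule holds. Confidence measures how accurate a rule is: among all cases that satisfy the rule's premise (the condition stated in the rule's "if" clause), it is the proportion that also match the rule's conclusion. To compute it, first count how many times the rule holds, then divide that count by the number of cases that satisfy the same premise (the same "if" clause).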
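A minimal sketch of both measures for the rule "if a basket contains bread, it also contains milk", on hypothetical baskets. The function names `support` and `confidence` are illustrative, not from any library:

```python
# Hypothetical baskets used to score the rule "if bread, then milk"
baskets = [
    {"bread", "apples", "bananas"},
    {"bread", "milk", "apples"},
    {"cheese", "bananas"},
    {"bread", "milk"},
]


def support(baskets, premise, conclusion):
    """Number of baskets in which the rule holds (premise and conclusion both present)."""
    return sum(1 for b in baskets if premise <= b and conclusion in b)


def confidence(baskets, premise, conclusion):
    """Share of the baskets containing the premise that also contain the conclusion."""
    premise_count = sum(1 for b in baskets if premise <= b)
    if premise_count == 0:
        return 0.0  # the premise never occurs, so the rule is never tested
    return support(baskets, premise, conclusion) / premise_count


rule_support = support(baskets, {"bread"}, "milk")
rule_confidence = confidence(baskets, {"bread"}, "milk")
print(rule_support)                 # 2
print(rule_support / len(baskets))  # 0.5 (support as a proportion)
print(round(rule_confidence, 3))    # 0.667 (2 of the 3 bread baskets contain milk)
```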

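Affinity analysis: determining how closely related samples (objects) are, based on the similarity between them.

From the items that appear frequently in the dataset, select those that also appear together; these groups form frequent itemsets.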
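A small sketch of that idea on hypothetical baskets: first keep the items that are frequent on their own, then count how often pairs of those items occur in the same basket, and keep the pairs that reach the same minimum support:

```python
from collections import Counter
from itertools import combinations

# Hypothetical baskets for illustration only
baskets = [
    {"bread", "apples", "bananas"},
    {"bread", "milk", "apples"},
    {"cheese", "bananas"},
    {"bread", "milk"},
]
min_support = 2

# Step 1: keep only items that are frequent on their own
item_counts = Counter(item for b in baskets for item in b)
frequent = {item for item, c in item_counts.items() if c >= min_support}

# Step 2: count how often pairs of frequent items appear in the same basket
pair_counts = Counter(
    frozenset(pair)
    for b in baskets
    for pair in combinations(sorted(b & frequent), 2)
)
frequent_pairs = {pair: c for pair, c in pair_counts.items() if c >= min_support}
print(frequent_pairs)
```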

The Apriori algorithm

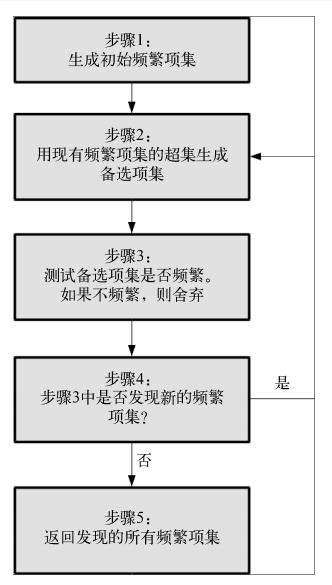

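The Apriori algorithm is part of affinity analysis and is designed specifically to find frequent itemsets in a dataset. Its basic flow is to build new candidate sets from the frequent itemsets found in the previous step, then check whether each candidate is frequent enough. The algorithm iterates as follows:

(1) Put each item into an itemset containing only itself to produce the initial frequent itemsets. Only items that reach the minimum support are used.

(2) Find supersets of the existing frequent itemsets to discover new frequent itemsets, and use them to generate new candidate itemsets.

(3) Test how frequent each newly generated candidate itemset is, and discard those that are not frequent enough. If no new frequent itemsets are found, jump to the last step.

(4) Store the newly discovered frequent itemsets and go back to step (2).

(5) Return all frequent itemsets found.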
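The five steps above can be sketched as a short, self-contained function. This is a minimal illustration on hypothetical transactions, not the article's own code: it builds candidates by joining pairs of frequent (k-1)-itemsets, drops any candidate with an infrequent subset, and counts each candidate at most once per transaction:

```python
from collections import Counter
from itertools import combinations


def apriori(transactions, min_support):
    """Minimal Apriori sketch: returns {itemset_size: {frozenset: count}}."""
    transactions = [frozenset(t) for t in transactions]
    # (1) Single-item itemsets that reach the minimum support
    counts = Counter(item for t in transactions for item in t)
    current = {frozenset([item]): c for item, c in counts.items() if c >= min_support}
    result = {}
    k = 1
    while current:  # stop when no new frequent itemsets were found
        # (4) Store the frequent itemsets of the current length
        result[k] = current
        k += 1
        # (2) Candidate supersets of size k, built from pairs of frequent (k-1)-itemsets;
        #     keep a candidate only if all of its (k-1)-subsets are frequent
        candidates = set()
        for a, b in combinations(list(current), 2):
            union = a | b
            if len(union) == k and all(
                frozenset(s) in current for s in combinations(union, k - 1)
            ):
                candidates.add(union)
        # (3) Count each candidate once per transaction; discard infrequent ones
        candidate_counts = Counter(c for t in transactions for c in candidates if c <= t)
        current = {c: n for c, n in candidate_counts.items() if n >= min_support}
    # (5) Return every frequent itemset found
    return result


# Hypothetical transactions for illustration only
transactions = [
    {"bread", "milk", "apples"},
    {"bread", "milk"},
    {"bread", "apples"},
    {"milk", "apples", "bread"},
    {"bananas"},
]
itemsets = apriori(transactions, min_support=2)
for size, sets in itemsets.items():
    print(size, sorted(sorted(s) for s in sets))
# 1 [['apples'], ['bread'], ['milk']]
# 2 [['apples', 'bread'], ['apples', 'milk'], ['bread', 'milk']]
# 3 [['apples', 'bread', 'milk']]
```

The pruning in step (2) relies on the Apriori property: a set can only be frequent if every one of its subsets is frequent, so candidates with an infrequent subset can be discarded without counting them.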

Recommending movies with affinity analysis

```python
# -*- coding: utf-8 -*-
# Author: song
import os
import sys
from collections import defaultdict
from operator import itemgetter

import pandas as pd

# Load the MovieLens 100K ratings. The file is tab-separated and has no header row,
# so we set the delimiter to a tab, pass header=None and name the columns ourselves.
ratings_filename = os.path.join(os.getcwd(), "Data", "ml-100k", "u.data")
all_ratings = pd.read_csv(ratings_filename, delimiter="\t", header=None,
                          names=["UserID", "MovieID", "Rating", "Datetime"])
# Parse the Unix timestamps
all_ratings["Datetime"] = pd.to_datetime(all_ratings["Datetime"], unit="s")

# Apriori rule: if a user likes certain movies, they will also like this movie.
# As an extension, we also ask whether users who like several movies like another one.
# New feature "Favorable": does the user like this movie (rating above 3)?
all_ratings["Favorable"] = all_ratings["Rating"] > 3

# Use a subset of users as the training set to shrink the search space and speed up Apriori
ratings = all_ratings[all_ratings["UserID"].isin(range(200))]
# Keep only the rows in which a user likes a movie
favorable_ratings = ratings[ratings["Favorable"]]
# For each user, the set of movies they like (grouped by UserID)
favorable_reviews_by_users = dict((k, frozenset(v.values))
                                  for k, v in favorable_ratings.groupby("UserID")["MovieID"])
# Number of fans of each movie
num_favorable_by_movie = ratings[["MovieID", "Favorable"]].groupby("MovieID").sum()
# print(num_favorable_by_movie.sort_values("Favorable", ascending=False)[:5])

frequent_itemsets = {}
min_support = 50  # minimum support
# Give each movie an itemset containing only itself (a frozenset of movie IDs)
# and keep it if it is frequent enough
frequent_itemsets[1] = dict((frozenset((movie_id,)), row["Favorable"])
                            for movie_id, row in num_favorable_by_movie.iterrows()
                            if row["Favorable"] > min_support)


def find_frequent_itemsets(favorable_reviews_by_users, k_1_itemsets, min_support):
    """Take the newly found frequent itemsets, build supersets and check their frequency."""
    counts = defaultdict(int)
    # Iterate over every user and the movies they like
    for user, reviews in favorable_reviews_by_users.items():
        # If a previously found itemset is a subset of this user's favorites,
        # the user likes every movie in that itemset
        for itemset in k_1_itemsets:
            if itemset.issubset(reviews):
                # Extend the itemset with each liked movie not already in it
                # and update the count of the resulting superset
                for other_reviewed_movie in reviews - itemset:
                    current_superset = itemset | frozenset((other_reviewed_movie,))
                    counts[current_superset] += 1
    # Keep only the itemsets that reach the minimum support
    return dict([(itemset, frequency) for itemset, frequency in counts.items()
                 if frequency >= min_support])


for k in range(2, 20):
    cur_frequent_itemsets = find_frequent_itemsets(favorable_reviews_by_users,
                                                   frequent_itemsets[k - 1], min_support)
    frequent_itemsets[k] = cur_frequent_itemsets
    if len(cur_frequent_itemsets) == 0:
        print("Did not find any frequent itemsets of length {}".format(k))
        sys.stdout.flush()
        break
    else:
        print("I found {} frequent itemsets of length {}".format(len(cur_frequent_itemsets), k))
        sys.stdout.flush()
del frequent_itemsets[1]  # drop the itemsets of length 1

# Generate rules from every frequent itemset of every length
candidate_rules = []
for itemset_length, itemset_counts in frequent_itemsets.items():
    for itemset in itemset_counts.keys():
        for conclusion in itemset:
            premise = itemset - set((conclusion,))
            candidate_rules.append((premise, conclusion))
print(candidate_rules[:5])

# Count how often each rule holds (positive cases) and fails (negative cases)
correct_counts = defaultdict(int)
incorrect_counts = defaultdict(int)
for user, reviews in favorable_reviews_by_users.items():
    for candidate_rule in candidate_rules:
        premise, conclusion = candidate_rule
        if premise.issubset(reviews):
            if conclusion in reviews:
                correct_counts[candidate_rule] += 1
            else:
                incorrect_counts[candidate_rule] += 1
# Confidence = number of times the rule holds / number of times its premise occurs
rule_confidence = {candidate_rule: correct_counts[candidate_rule]
                   / float(correct_counts[candidate_rule] + incorrect_counts[candidate_rule])
                   for candidate_rule in candidate_rules}
sorted_confidence = sorted(rule_confidence.items(), key=itemgetter(1), reverse=True)
for index in range(5):
    print("Rule #{0}".format(index + 1))
    (premise, conclusion) = sorted_confidence[index][0]
    print("Rule: If a person recommends {0} they will also recommend {1}".format(premise, conclusion))
    print(" - Confidence:{0:.3f}".format(rule_confidence[(premise, conclusion)]))
    print("")

# Load the movie titles from u.item with pandas
movie_name_filename = os.path.join(os.getcwd(), "Data", "ml-100k", "u.item")
movie_name_data = pd.read_csv(movie_name_filename, delimiter="|", header=None,
                              encoding="mac-roman")
movie_name_data.columns = ["MovieID", "Title", "Release Date", "Video Release", "IMDB", "<UNK>",
                           "Action", "Adventure", "Animation", "Children's", "Comedy", "Crime",
                           "Documentary", "Drama", "Fantasy", "Film-Noir", "Horror", "Musical",
                           "Mystery", "Romance", "Sci-Fi", "Thriller", "War", "Western"]


def get_movie_name(movie_id):
    """Look up a movie's title by its ID."""
    title_object = movie_name_data[movie_name_data["MovieID"] == movie_id]["Title"]
    title = title_object.values[0]
    return title


# Print the top rules with movie titles instead of IDs
for index in range(5):
    print("Rule #{0}".format(index + 1))
    (premise, conclusion) = sorted_confidence[index][0]
    premise_names = ", ".join(get_movie_name(idx) for idx in premise)
    conclusion_name = get_movie_name(conclusion)
    print("Rule: If a person recommends {0} they will also recommend {1}".format(premise_names, conclusion_name))
    print(" - Confidence: {0:.3f}".format(rule_confidence[(premise, conclusion)]))
    print("")

# Evaluation: count how often each rule holds, exactly as before,
# except that this time we use the test data instead of the training data
test_dataset = all_ratings[~all_ratings["UserID"].isin(range(200))]  # all users outside the training set
test_favorable = test_dataset[test_dataset["Favorable"]]
test_favorable_by_users = dict((k, frozenset(v.values))
                               for k, v in test_favorable.groupby("UserID")["MovieID"])
correct_counts = defaultdict(int)
incorrect_counts = defaultdict(int)
for user, reviews in test_favorable_by_users.items():
    for candidate_rule in candidate_rules:
        premise, conclusion = candidate_rule
        if premise.issubset(reviews):
            if conclusion in reviews:
                correct_counts[candidate_rule] += 1
            else:
                incorrect_counts[candidate_rule] += 1
# Skip rules whose premise never occurs in the test data (this avoids a division by zero);
# the .get(..., -1) calls below report such rules as -1
test_confidence = {candidate_rule: correct_counts[candidate_rule]
                   / float(correct_counts[candidate_rule] + incorrect_counts[candidate_rule])
                   for candidate_rule in rule_confidence
                   if correct_counts[candidate_rule] + incorrect_counts[candidate_rule] > 0}
for index in range(5):
    print("Rule #{0}".format(index + 1))
    (premise, conclusion) = sorted_confidence[index][0]
    premise_names = ", ".join(get_movie_name(idx) for idx in premise)
    conclusion_name = get_movie_name(conclusion)
    print("Rule: If a person recommends {0} they will also recommend {1}".format(premise_names, conclusion_name))
    print(" - Train Confidence:{0:.3f}".format(rule_confidence.get((premise, conclusion), -1)))
    print(" - Test Confidence:{0:.3f}".format(test_confidence.get((premise, conclusion), -1)))
    print("")
```
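One caveat about `find_frequent_itemsets`: for a given user, a k-itemset is counted once for every one of its frequent (k-1)-subsets that the user likes, so its count can be inflated up to k times and some itemsets may pass `min_support` that would not otherwise. A possible fix, sketched below under that reading of the loop, collects each user's supersets in a set before counting. The name `find_frequent_itemsets_dedup` is hypothetical, and swapping it in would change the itemset counts, so the output in this article (produced by the original function) would no longer apply:

```python
from collections import defaultdict


def find_frequent_itemsets_dedup(favorable_reviews_by_users, k_1_itemsets, min_support):
    """Variant of find_frequent_itemsets that counts each superset at most once per user."""
    counts = defaultdict(int)
    for user, reviews in favorable_reviews_by_users.items():
        supersets = set()  # supersets this user supports, without duplicates
        for itemset in k_1_itemsets:
            if itemset.issubset(reviews):
                for other_reviewed_movie in reviews - itemset:
                    supersets.add(itemset | frozenset((other_reviewed_movie,)))
        for superset in supersets:
            counts[superset] += 1
    return {itemset: frequency for itemset, frequency in counts.items()
            if frequency >= min_support}


# Toy check (hypothetical data): one user who likes movies 1, 2 and 3,
# with all three 2-itemsets frequent. The original loop would count
# the superset {1, 2, 3} three times for this single user.
reviews_by_user = {1: frozenset({1, 2, 3})}
pairs = [frozenset({1, 2}), frozenset({1, 3}), frozenset({2, 3})]
result = find_frequent_itemsets_dedup(reviews_by_user, pairs, min_support=1)
print(result[frozenset({1, 2, 3})])  # 1
```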

Output

```
I found 93 frequent itemsets of length 2
I found 295 frequent itemsets of length 3
I found 593 frequent itemsets of length 4
I found 785 frequent itemsets of length 5
I found 677 frequent itemsets of length 6
I found 373 frequent itemsets of length 7
I found 126 frequent itemsets of length 8
I found 24 frequent itemsets of length 9
I found 2 frequent itemsets of length 10
Did not find any frequent itemsets of length 11
[(frozenset({79}), 258), (frozenset({258}), 79), (frozenset({50}), 64), (frozenset({64}), 50), (frozenset({127}), 181)]
Rule #1
Rule: If a person recommends frozenset({98, 172, 127, 174, 7}) they will also recommend 64
 - Confidence:1.000

Rule #2
Rule: If a person recommends frozenset({56, 1, 64, 127}) they will also recommend 98
 - Confidence:1.000

Rule #3
Rule: If a person recommends frozenset({64, 100, 181, 174, 79}) they will also recommend 56
 - Confidence:1.000

Rule #4
Rule: If a person recommends frozenset({56, 100, 181, 174, 127}) they will also recommend 50
 - Confidence:1.000

Rule #5
Rule: If a person recommends frozenset({98, 100, 172, 79, 50, 56}) they will also recommend 7
 - Confidence:1.000

Rule #1
Rule: If a person recommends Silence of the Lambs, The (1991), Empire Strikes Back, The (1980), Godfather, The (1972), Raiders of the Lost Ark (1981), Twelve Monkeys (1995) they will also recommend Shawshank Redemption, The (1994)
 - Confidence: 1.000

Rule #2
Rule: If a person recommends Pulp Fiction (1994), Toy Story (1995), Shawshank Redemption, The (1994), Godfather, The (1972) they will also recommend Silence of the Lambs, The (1991)
 - Confidence: 1.000

Rule #3
Rule: If a person recommends Shawshank Redemption, The (1994), Fargo (1996), Return of the Jedi (1983), Raiders of the Lost Ark (1981), Fugitive, The (1993) they will also recommend Pulp Fiction (1994)
 - Confidence: 1.000

Rule #4
Rule: If a person recommends Pulp Fiction (1994), Fargo (1996), Return of the Jedi (1983), Raiders of the Lost Ark (1981), Godfather, The (1972) they will also recommend Star Wars (1977)
 - Confidence: 1.000

Rule #5
Rule: If a person recommends Silence of the Lambs, The (1991), Fargo (1996), Empire Strikes Back, The (1980), Fugitive, The (1993), Star Wars (1977), Pulp Fiction (1994) they will also recommend Twelve Monkeys (1995)
 - Confidence: 1.000

Rule #1
Rule: If a person recommends Silence of the Lambs, The (1991), Empire Strikes Back, The (1980), Godfather, The (1972), Raiders of the Lost Ark (1981), Twelve Monkeys (1995) they will also recommend Shawshank Redemption, The (1994)
 - Train Confidence:1.000
 - Test Confidence:0.854

Rule #2
Rule: If a person recommends Pulp Fiction (1994), Toy Story (1995), Shawshank Redemption, The (1994), Godfather, The (1972) they will also recommend Silence of the Lambs, The (1991)
 - Train Confidence:1.000
 - Test Confidence:0.870

Rule #3
Rule: If a person recommends Shawshank Redemption, The (1994), Fargo (1996), Return of the Jedi (1983), Raiders of the Lost Ark (1981), Fugitive, The (1993) they will also recommend Pulp Fiction (1994)
 - Train Confidence:1.000
 - Test Confidence:0.756

Rule #4
Rule: If a person recommends Pulp Fiction (1994), Fargo (1996), Return of the Jedi (1983), Raiders of the Lost Ark (1981), Godfather, The (1972) they will also recommend Star Wars (1977)
 - Train Confidence:1.000
 - Test Confidence:0.975

Rule #5
Rule: If a person recommends Silence of the Lambs, The (1991), Fargo (1996), Empire Strikes Back, The (1980), Fugitive, The (1993), Star Wars (1977), Pulp Fiction (1994) they will also recommend Twelve Monkeys (1995)
 - Train Confidence:1.000
 - Test Confidence:0.609

Process finished with exit code 0
```
