The Zhuixinfan ("追新番") Website

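Zhuixinfan provides download links for the latest Japanese TV dramas and films, and it updates quickly.

I enjoy watching Japanese dramas, so I decided to crawl the site and build a resource map.

With it I can see exactly which dramas the site offers and download any of them whenever I like.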

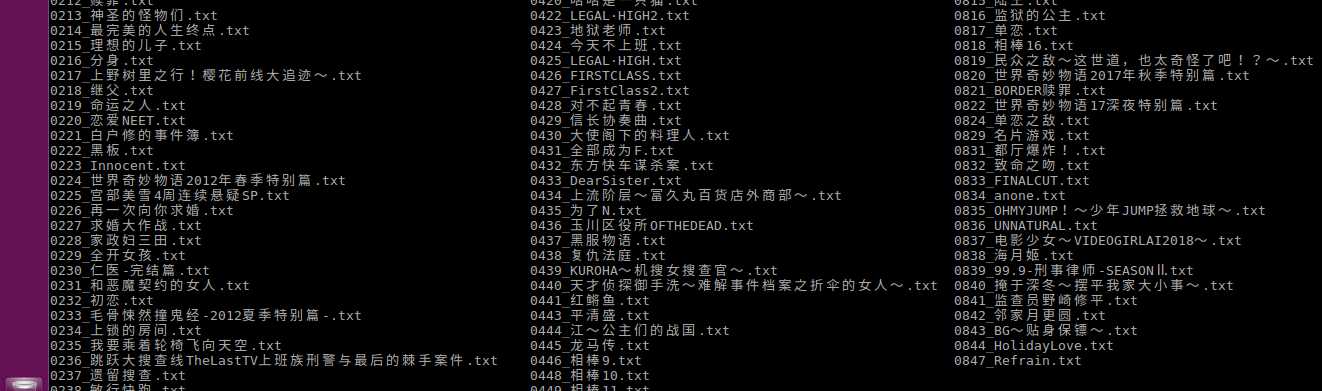

Resource Map

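The scraped resource map looks like this:

On Linux, `ls | grep keywords` in the output directory quickly finds the resource you want (on Windows, the built-in file search does the job).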
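The same keyword lookup can be sketched in Python for use on any platform. This is a minimal sketch: the function name `find_dramas` is hypothetical, and it assumes the catalog files sit in one directory (the script below saves them under `./tvinfo/`).

```python
import os


def find_dramas(catalog_dir, keyword):
    """Return the file names in catalog_dir that contain keyword (case-insensitive)."""
    keyword = keyword.lower()
    return sorted(
        name for name in os.listdir(catalog_dir)
        if keyword in name.lower()
    )


if __name__ == '__main__':
    # Assumes the scraper has already filled ./tvinfo/ with catalog files.
    if os.path.isdir('./tvinfo'):
        for name in find_dramas('./tvinfo', 'keywords'):
            print(name)
```

Matching on lowercase names behaves like `grep -i`; drop the two `lower()` calls for an exact-case match.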

Developing the Scraper Script

1. Decide on the crawling strategy

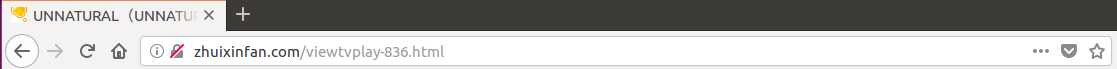

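Opening several drama pages shows that their URLs all share one form: the site domain followed by viewtvplay-<number>.html, which is how the full script in the appendix builds them.

Each drama page therefore corresponds to a number.

So we can crawl the site simply by iterating over those numbers.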
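As a small illustration of that strategy, the page URLs can be generated from a range of numbers. The domain and the `viewtvplay-{}.html` pattern come from the full script in the appendix; the helper names `tv_page_url` and `tv_page_urls` are made up for this sketch.

```python
DOMAIN = 'http://www.zhuixinfan.com/'


def tv_page_url(num):
    """Build the page URL for the drama with the given number."""
    return DOMAIN + 'viewtvplay-{}.html'.format(num)


def tv_page_urls(start, end):
    """Yield the page URLs for every number from start to end, inclusive."""
    for num in range(start, end + 1):
        yield tv_page_url(num)


if __name__ == '__main__':
    for url in tv_page_urls(1, 3):
        print(url)
```

Not every number necessarily maps to an existing drama, which is why the script checks each fetched page for the title tag before going further.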

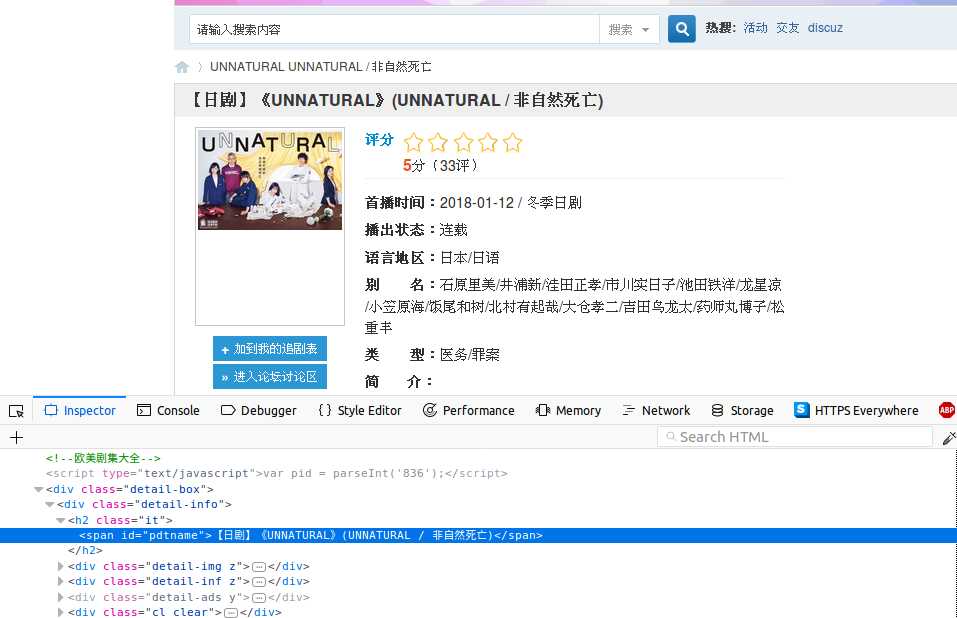

2. Get the drama's name

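Open the page of one drama and look at the source code of its title:

The title tag has the ID "pdtname", so reading that tag's text gives us the drama's name.

Using the BeautifulSoup API, we fetch the tag's content and strip the unwanted parts of the name: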

    # try to get the tv name
    tag_name = soup.find(id='pdtname')
    if tag_name is None:
        print('tv_{:0>4d}: not exist.'.format(num))
        return None

    # remove the parts of the name that are not needed;
    # re.sub leaves the name untouched when a pattern is absent, so a
    # missing 【...】 no longer skips the remaining clean-up steps
    name = tag_name.get_text().replace(' ', '')
    name = re.sub('【.*】', '', name)
    name = re.sub(r'\(.*\)', '', name)
    name = name.replace('《', '').replace('》', '').replace('/', '')
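The name clean-up can be tried on its own, without fetching a page. The sketch below wraps the same steps in a hypothetical helper, `clean_name`; the sample titles are invented for illustration and are not taken from the site.

```python
import re


def clean_name(raw):
    """Strip spaces, 【...】 and (...) groups, 《》 marks and slashes from a title."""
    name = raw.replace(' ', '')
    name = re.sub('【.*】', '', name)      # drop a bracketed tag such as 【...】
    name = re.sub(r'\(.*\)', '', name)    # drop a parenthesised note such as (...)
    return name.replace('《', '').replace('》', '').replace('/', '')


if __name__ == '__main__':
    # Invented sample titles, not real page content.
    print(clean_name('【日剧】《Sample Title》(2018) / Extra'))  # SampleTitleExtra
    print(clean_name('《Sample》'))                              # Sample
```

Removing the slash matters because the cleaned name later becomes part of a file name.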

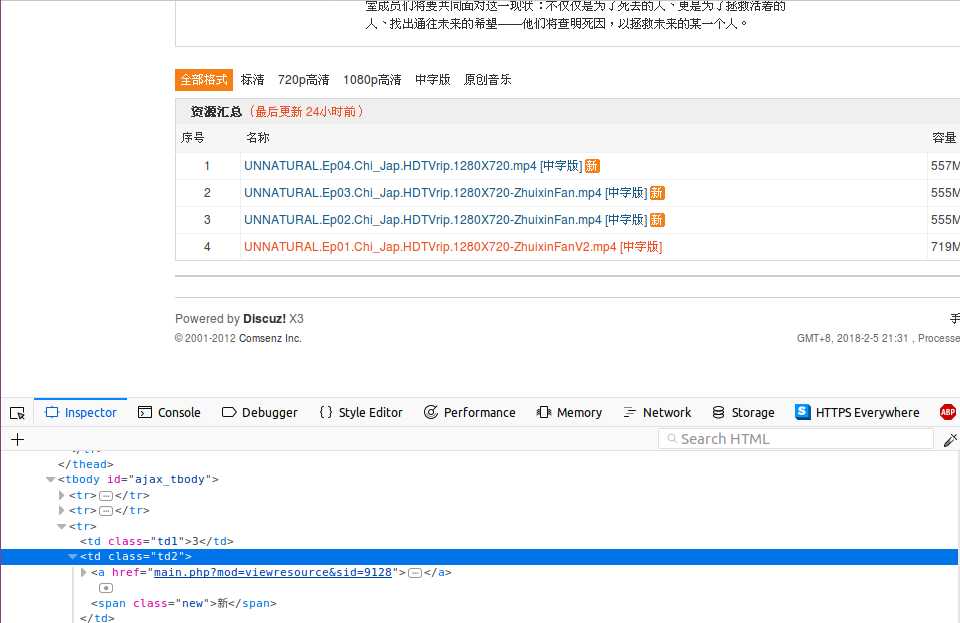

3. Get the resource links

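Each drama page also contains the addresses of its resources. The relevant source code looks like this:

The resource links are laid out in a table body whose ID is "ajax_tbody".

Each episode is one row of the table, and each row has several columns showing the details of that resource.

We walk through the rows of the table to get the resource link for each episode: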

    # try to get the tv resources list
    tag_resources = soup.find(id='ajax_tbody')
    if tag_resources is None:
        print('tv_{:0>4d}: has no resources.'.format(num))
        return None

    # walk the resources
    for res in tag_resources.find_all('tr'):

        # get the link tag and the cells of this row
        tag_a = res.find('a')
        info = res.find_all('td')
        if tag_a is None or len(info) < 3:
            continue  # skip rows without a link or with too few cells
        print('resource: ', tag_a.get_text())

        # get the download link
        downlink = get_resources_link(session, tag_a.get('href'))

        # record the resource
        tv.resources.append([tag_a.get_text(), info[2].get_text(), downlink, ''])
        delay(1)
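To experiment with the row walk offline, here is a sketch that uses the standard library's `html.parser` instead of BeautifulSoup. It is a swapped-in technique for illustration, not what the script does, and the sample markup (column order, hrefs, cell values) is hypothetical; only the "ajax_tbody" ID comes from the page described above.

```python
from html.parser import HTMLParser

# Hypothetical markup: the real page's columns and hrefs may differ.
SAMPLE_HTML = (
    '<table><tbody id="ajax_tbody">'
    '<tr><td>1</td><td><a href="episode-1.html">Episode 01</a></td><td>500MB</td></tr>'
    '<tr><td>2</td><td><a href="episode-2.html">Episode 02</a></td><td>480MB</td></tr>'
    '</tbody></table>'
)


class RowParser(HTMLParser):
    """Collect the first link and the cell texts of each row inside #ajax_tbody."""

    def __init__(self):
        super().__init__()
        self.in_tbody = False
        self.in_cell = False
        self.row = None   # the row currently being read, or None
        self.rows = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == 'tbody' and attrs.get('id') == 'ajax_tbody':
            self.in_tbody = True
        elif self.in_tbody and tag == 'tr':
            self.row = {'href': None, 'cells': []}
        elif self.row is not None and tag == 'td':
            self.row['cells'].append('')
            self.in_cell = True
        elif self.row is not None and tag == 'a' and self.row['href'] is None:
            self.row['href'] = attrs.get('href')

    def handle_data(self, data):
        if self.row is not None and self.in_cell:
            self.row['cells'][-1] += data.strip()

    def handle_endtag(self, tag):
        if tag == 'td':
            self.in_cell = False
        elif tag == 'tr' and self.row is not None:
            if self.row['href'] is not None:   # keep only rows that have a link
                self.rows.append(self.row)
            self.row = None
        elif tag == 'tbody':
            self.in_tbody = False


parser = RowParser()
parser.feed(SAMPLE_HTML)
for row in parser.rows:
    print(row['href'], row['cells'])
```

BeautifulSoup stays the more convenient choice for the real script; this version only shows that the task boils down to "find the table body, then read one link and a few cells per row".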

4. Get the download link

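Click one of the resources to open its download page, then look at the source code:

The eMule (电驴) download link sits in a tag with the ID "emule_url", so we only need that tag's text (magnet links work the same way).

First, though, we have to fetch the download page itself. The complete code is: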

    def get_resources_link(session, url):
        ''' get tv resources download link '''

        res_url = domain + url

        # open the resources page
        resp = session.get(res_url, timeout=10)
        resp.raise_for_status()

        soup = page_decode(resp.content, resp.encoding)

        # return the eMule link text, or an empty string if the tag is missing
        tag_emule = soup.find(id='emule_url')
        return tag_emule.get_text() if tag_emule is not None else ''
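One detail worth a second look is `domain + url`: plain concatenation only works if every href is relative and has no leading slash. A hedged alternative is `urllib.parse.urljoin` from the standard library, which handles the other cases too. The hrefs below are invented examples, and `resource_url` is a hypothetical helper name.

```python
from urllib.parse import urljoin

DOMAIN = 'http://www.zhuixinfan.com/'


def resource_url(href):
    """Resolve an href from a drama page against the site domain."""
    return urljoin(DOMAIN, href)


if __name__ == '__main__':
    # Invented hrefs covering the relative, root-relative and absolute cases.
    for href in ('page.html', '/page.html', 'http://example.com/page.html'):
        print(resource_url(href))
```

With string concatenation the second href would produce a double slash and the third a malformed URL, while `urljoin` resolves all three.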

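5. Save the download links locally

Crawling the download links of every drama takes a long time, so an earlier check makes it possible to crawl only the titles first and fetch the download links later by number.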

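    def save_tv(tv):
        ''' save tv information on disk '''

        # make sure the output directory exists before writing
        os.makedirs(save_dir, exist_ok=True)
        filename = os.path.join(os.path.abspath(save_dir), '{:0>4d}_{}.txt'.format(tv.num, tv.name))

        if only_catalog:
            # catalog mode: create the file if missing, keep existing content
            with open(filename, 'a+', encoding='utf-8') as f:
                pass
        else:
            # text mode already translates '\n' to the platform line ending
            with open(filename, 'w', encoding='utf-8') as f:
                for info in tv.resources:
                    f.write('\n'.join(info))
                    f.write('========\n')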
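Reading a saved file back is the natural counterpart. The sketch below assumes the layout `save_tv` writes: three lines per resource (name, info, download link) followed by a `========` separator line, with ordinary newline endings. The helper name `load_resources` is hypothetical.

```python
def load_resources(path):
    """Read a saved drama file back into a list of [name, info, link] lists."""
    with open(path, encoding='utf-8') as f:
        text = f.read()

    records = []
    for block in text.split('========\n'):
        if not block:
            continue                      # nothing after the last separator
        # Each block is "name\ninfo\nlink\n"; keep the three fields only.
        records.append(block.split('\n')[:3])
    return records
```

A resource whose download link was not found is stored with an empty third line, so it comes back as an empty string rather than being dropped.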

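That covers the whole development process of the scraper script.

Feel free to follow my code repository: https://gitee.com/github-18274965/Python-Spider

I plan to write scrapers for other websites as well.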

Appendix

Full code:

    #!/usr/bin/python3
    # -*- coding:utf-8 -*-

    import os
    import sys
    import re
    import requests
    from bs4 import BeautifulSoup
    from time import sleep

    # website domain
    domain = 'http://www.zhuixinfan.com/'

    # spider information save directory
    save_dir = './tvinfo/'

    # only tv catalog
    only_catalog = False

    class TVInfo:
        ''' TV information class '''

        def __init__(self, num, name):
            self.num = num
            self.name = name
            self.resources = []


    def delay(seconds):
        ''' sleep for seconds '''

        while seconds > 0:
            sleep(1)
            seconds = seconds - 1

    def page_decode(content, encoding):
        ''' decode page '''

        # lxml may fail (e.g. not installed), then try html.parser
        try:
            soup = BeautifulSoup(content, 'lxml', from_encoding=encoding)
        except Exception:
            soup = BeautifulSoup(content, 'html.parser', from_encoding=encoding)

        return soup

    def open_home_page(session):
        ''' open the home page first, as a human being would '''

        home_url = domain + 'main.php'

        # open home page
        resp = session.get(home_url, timeout=10)
        resp.raise_for_status()

        # do nothing

    def get_resources_link(session, url):
        ''' get tv resources download link '''

        res_url = domain + url

        # open the resources page
        resp = session.get(res_url, timeout=10)
        resp.raise_for_status()

        soup = page_decode(resp.content, resp.encoding)

        tag_emule = soup.find(id='emule_url')
        return tag_emule.get_text() if tag_emule is not None else ''


    def spider_tv(session, num):
        ''' fetch tv information '''

        tv_url = domain + 'viewtvplay-{}.html'.format(num)

        # open the tv information page
        resp = session.get(tv_url, timeout=10)
        resp.raise_for_status()

        soup = page_decode(resp.content, resp.encoding)

        # try to get the tv name
        tag_name = soup.find(id='pdtname')
        if tag_name is None:
            print('tv_{:0>4d}: not exist.'.format(num))
            return None

        # try to get the tv resources list
        tag_resources = soup.find(id='ajax_tbody')
        if tag_resources is None:
            print('tv_{:0>4d}: has no resources.'.format(num))
            return None

        # remove the parts of the name that are not needed;
        # re.sub leaves the name untouched when a pattern is absent
        name = tag_name.get_text().replace(' ', '')
        name = re.sub('【.*】', '', name)
        name = re.sub(r'\(.*\)', '', name)
        name = name.replace('《', '').replace('》', '').replace('/', '')

        print('tv_{:0>4d}: {}'.format(num, name))

        tv = TVInfo(num, name)

        if only_catalog:
            return tv

        # walk the resources
        for res in tag_resources.find_all('tr'):

            # get the link tag and the cells of this row
            tag_a = res.find('a')
            info = res.find_all('td')
            if tag_a is None or len(info) < 3:
                continue  # skip rows without a link or with too few cells
            print('resource: ', tag_a.get_text())

            # get the download link
            downlink = get_resources_link(session, tag_a.get('href'))

            # record the resource
            tv.resources.append([tag_a.get_text(), info[2].get_text(), downlink, ''])
            delay(1)

        return tv


    def save_tv(tv):
        ''' save tv information on disk '''

        # make sure the output directory exists before writing
        os.makedirs(save_dir, exist_ok=True)
        filename = os.path.join(os.path.abspath(save_dir), '{:0>4d}_{}.txt'.format(tv.num, tv.name))

        if only_catalog:
            # catalog mode: create the file if missing, keep existing content
            with open(filename, 'a+', encoding='utf-8') as f:
                pass
        else:
            # text mode already translates '\n' to the platform line ending
            with open(filename, 'w', encoding='utf-8') as f:
                for info in tv.resources:
                    f.write('\n'.join(info))
                    f.write('========\n')

    def main():

        start = 1
        end = 999

        if len(sys.argv) > 1:
            start = int(sys.argv[1])

        if len(sys.argv) > 2:
            end = int(sys.argv[2])

        global only_catalog
        answer = input("Only catalog ?[y/N] ")
        if answer in ('y', 'Y'):
            only_catalog = True

        # headers: firefox_58 on ubuntu
        headers = {
            'User-Agent': 'Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:58.0)'
                + ' Gecko/20100101 Firefox/58.0',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
            'Accept-Language': 'zh-CN,en-US;q=0.7,en;q=0.3',
            'Accept-Encoding': 'gzip, deflate',
        }

        # create the spider session
        with requests.Session() as s:

            try:
                s.headers.update(headers)
                open_home_page(s)
                for num in range(start, end + 1):
                    delay(3)
                    tv = spider_tv(s, num)
                    if tv is not None:
                        save_tv(tv)

            except Exception as err:
                print(err)
                sys.exit(1)

    if __name__ == '__main__':
        main()