Python: the Scrapy Framework

Posted by 想54256


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It mainly covers the Scrapy framework for Python, and we hope it is useful to you as a reference.

The Self-Cultivation of a Crawler, Part 4

1. Introduction to the Scrapy Framework

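- Scrapy is an application framework written in pure Python for crawling websites and extracting structured data. It has a very wide range of uses.

- This is the power of a framework. You only need to customize a few modules to build a crawler that fetches web pages, images and so on, which is very convenient.

- Scrapy uses the Twisted ['twɪstɪd] asynchronous networking framework (its main rival is Tornado) to handle network communication. This speeds up downloading without you having to implement an asynchronous framework yourself. Scrapy also provides various middleware interfaces for meeting different requirements flexibly.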

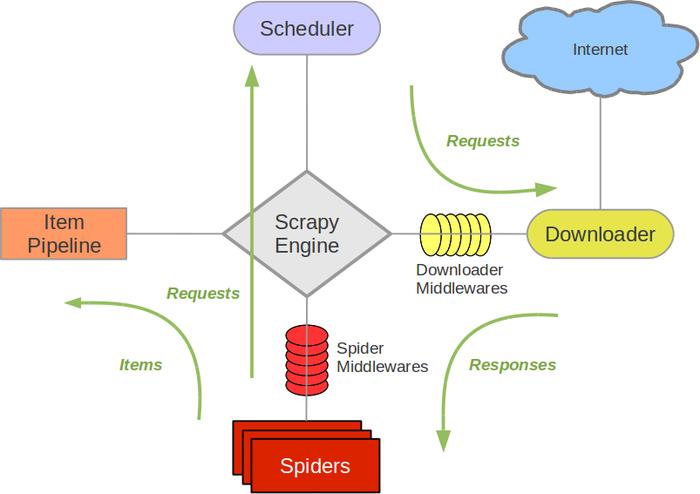

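Scrapy architecture diagram (the green lines show the data flow):

- Scrapy Engine: handles communication, signals and data passing among the Spider, Item Pipeline, Downloader and Scheduler.

- Scheduler: accepts the Requests sent by the engine, organizes and enqueues them in a certain order, and hands them back when the engine asks for them.

- Downloader: downloads all Requests sent by the Scrapy Engine and returns the resulting Responses to the engine, which passes them to the Spider for processing.

- Spider: processes all Responses. It analyzes and extracts data from them to fill the Item fields, and submits URLs that need to be followed to the engine, which sends them back into the Scheduler.

- Item Pipeline: post-processes the Items obtained by the Spider (detailed analysis, filtering, storage and so on).

- Downloader Middlewares: think of these as components for customizing and extending the download functionality.

- Spider Middlewares: components for customizing and extending the communication between the engine and the Spider (for example, Responses entering the Spider and Requests leaving it).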

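Everything starts with the Spider we write. The Spider's requests are sent to the Scrapy Engine, which hands each Request to the Scheduler. The Scheduler enqueues the requests and dequeues them when the engine asks. By default Scrapy uses LIFO queues, which means roughly depth-first order, but the queue classes are configurable.

The engine then passes each Request to the Downloader. When the download finishes, the Downloader gives the Response back to the engine, which passes it to our Spider. While processing the Response, the Spider sends any URLs that should be crawled further back to the engine, and the steps above repeat. Data that should be saved is sent to the Item Pipeline.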

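Building a Scrapy crawler takes four steps:

- Create a project (scrapy startproject xxx): creates a new crawler project. A spider is later run with scrapy crawl + the spider's name, not the project name.

- Define the target (edit items.py): specify the data you want to scrape

- Write the spider (spiders/xxspider.py): build the spider and start crawling pages

- Store the content (pipelines.py): design pipelines that store the scraped content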

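A start.py file for launching the project from PyCharm:

from scrapy import cmdline

# Same as typing "scrapy crawl your_spider_name" in a terminal;
# replace your_spider_name with the name attribute of your spider
cmdline.execute("scrapy crawl your_spider_name".split())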

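2. Scrapy Selectors

Scrapy Selectors have built-in support for XPath and CSS selector expressions.

A Selector has four basic methods, and xpath() is the one used most often:

- xpath(): takes an XPath expression and returns a SelectorList of all nodes that match it

- extract(): serializes the matched node(s) to Unicode strings. On a SelectorList it returns a list of strings; newer versions also offer getall() and get().

- css(): takes a CSS expression and returns a SelectorList of all nodes that match it. The syntax is similar to the CSS selectors used in BeautifulSoup4.

- re(): extracts data using the given regular expression and returns a list of Unicode strings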

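Examples of XPath expressions and their meaning:

- /html/head/title: selects the <title> element inside the <head> tag of the HTML document

- /html/head/title/text(): selects the text of that <title> element

- //td: selects all <td> elements

- //div[@class="mine"]: selects all div elements that have the attribute class="mine"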

For the rest, see the previous two posts in this series.

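3. Item Pipeline

After an Item has been collected in the Spider, it is passed to the Item Pipeline, whose components process it in the order they are configured.

Each Item Pipeline component is a Python class that implements a few simple methods, for example to decide whether an Item is dropped or stored. Typical uses of an item pipeline include:

- Validating scraped data (checking that the item contains certain fields, such as name)

- Checking for duplicates (and dropping them)

- Saving the scraped results to a file or a database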

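Writing an item pipeline

Writing an item pipeline is simple. An item pipeline component is a standalone Python class, and it must implement the process_item() method:

import json


class XingePipeline(object):

    def __init__(self):
        # Optional: initialize parameters, etc.
        # __init__ and close_spider run only once, while process_item runs once
        # for every item, so there is no need to open the file in append mode ('ab')
        self.file = open('teacher.json', 'w', encoding='utf-8')  # open the output file

    def process_item(self, item, spider):
        # item (Item object) - the scraped item
        # spider (Spider object) - the spider that scraped this item
        # Required: Scrapy calls this method for every item in each pipeline component.
        # It must either return an item or raise DropItem; dropped items are not
        # processed by later pipeline components.
        content = json.dumps(dict(item), ensure_ascii=False) + "\n"
        self.file.write(content)
        return item

    def open_spider(self, spider):
        # spider (Spider object) - the spider that was opened
        # Optional: called when the spider is opened.
        pass

    def close_spider(self, spider):
        # spider (Spider object) - the spider that was closed
        # Optional: called when the spider is closed.
        self.file.close()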

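To enable the pipeline, uncomment the ITEM_PIPELINES setting in settings.py and point it at your pipeline class:

# Configure item pipelines
# See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    # "<project package>.pipelines.<class name>": order (items pass through lower values first; 0-1000 by convention)
    "mySpider.pipelines.XingePipeline": 300,
}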

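4. The Spider class

A Spider class defines how to crawl a certain site (or group of sites). This includes the crawling actions (for example, whether to follow links) and how to extract structured data (scraped items) from page content. In other words, the Spider is where you define the crawling actions and how to parse a particular page (or set of pages).

scrapy.Spider is the most basic class. Every spider you write must inherit from it, either directly or through a subclass such as CrawlSpider.

The main methods, in the order they are called:

__init__(): initializes the spider name and the start_urls list

start_requests(): generates the Request objects that Scrapy downloads into responses. Old versions did this by calling make_requests_from_url(), which is deprecated in newer versions.

parse(): parses the response and returns Items or Requests (with a callback specified). Items are passed to the Item Pipeline for persistence. Requests are downloaded by Scrapy and handled by the specified callback, which is parse() by default. This loop continues until all data has been processed.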

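Main attributes and methods

- name: a string that defines the spider's name. For example, a spider that crawls mywebsite.com would usually be named mywebsite.

- allowed_domains: an optional list of the domains this spider is allowed to crawl.

- start_urls: a tuple or list of initial URLs. When no particular URLs are specified, the spider starts crawling from this list.

- start_requests(self): must return an iterable that contains the first Requests the spider will crawl. The default implementation builds them from the URLs in start_urls. Scrapy calls this method once, when the spider is opened for crawling.

- parse(self, response): the default callback for any Request that does not specify one. It processes the returned response and produces Items or further Requests.

- log(self, message[, level, component]): logs a message through the spider's logger (old versions used scrapy.log.msg()). For details, see the logging documentation. Newer code usually calls self.logger directly.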

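How the parse method works

1. Because parse() uses yield rather than return, it is a generator. Scrapy pulls the results out of parse() one by one and checks the type of each.
2. A Request is added to the crawl queue and an item is handed to the pipelines. Any other type produces an error message.
3. When Scrapy gets a Request from the generator, it does not send it right away. It puts the Request in the queue and goes on pulling from the generator.
4. When it gets an item, the item is passed to the corresponding pipelines for processing.
5. parse() is assigned to a Request as its callback, which tells Scrapy to handle that request's response with parse(): scrapy.Request(url, callback=self.parse)
6. Once scheduled, the Request is downloaded into a scrapy.http.Response object, which is sent back to parse(). This continues until the scheduler has no Requests left (a recursive idea).
7. When the generator is exhausted, that call to parse() is finished, and the engine carries on with whatever is left in the queue and the pipelines.
8. Downloading requests and processing items happen concurrently. Items are sent to the pipelines as they are yielded, not after the request queue has been drained.
9. All of this is managed by the Scrapy engine and scheduler.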
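The routing rules above can be modelled in plain Python. This is a toy, single-threaded sketch, not Scrapy's actual engine. FakeRequest stands in for scrapy.Request, the "download" step simply passes the page number through, and there is no concurrency. It only shows that yielded Requests go back to the scheduler while yielded items go to the pipeline.

```python
from collections import deque


class FakeRequest:
    """Toy stand-in for scrapy.Request (hypothetical, for this model only)."""

    def __init__(self, url, callback):
        self.url = url
        self.callback = callback


def parse(page_number):
    # Like a Scrapy callback: yield an item, then a follow-up request
    yield {"page": page_number}
    if page_number < 3:
        yield FakeRequest(page_number + 1, parse)


def run_engine(start_requests):
    scheduler = deque(start_requests)  # pending requests
    pipeline_output = []               # what the item pipeline received
    while scheduler:
        request = scheduler.pop()      # LIFO, like Scrapy's default queues
        response = request.url         # toy "download": the page number itself
        for result in request.callback(response):
            if isinstance(result, FakeRequest):
                scheduler.append(result)          # requests go back to the scheduler
            elif isinstance(result, dict):
                pipeline_output.append(result)    # items go to the pipeline
            else:
                raise TypeError(f"unexpected callback output: {result!r}")
    return pipeline_output


print(run_engine([FakeRequest(1, parse)]))
```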

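Tip

Why use yield? yield turns a function into a generator. With yield, parse() can hand back items and also send the next Requests. A return statement would end the function at that point.

Returning a list of hundreds or thousands of elements in one go would also use a lot of memory and time. yield avoids this by producing the results one at a time.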

The settings file

# -*- coding: utf-8 -*-

# Scrapy settings for douyuScripy project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     http://doc.scrapy.org/en/latest/topics/settings.html
#     http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html
#     http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'douyuScripy'  # project name

SPIDER_MODULES = ['douyuScripy.spiders']  # module where the spider files live
NEWSPIDER_MODULE = 'douyuScripy.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'douyuScripy (+http://www.yourdomain.com)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = True  # whether to obey robots.txt; for our own crawlers we don't, so just comment this line out (Scrapy's default is then False)

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32  # maximum number of concurrent requests, default 16

# Configure a delay for requests for the same website (default: 0)
# See http://scrapy.readthedocs.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
#DOWNLOAD_DELAY = 2  # wait time between requests, in seconds
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16  # max concurrent requests to a single domain, default 8
#CONCURRENT_REQUESTS_PER_IP = 16  # max concurrent requests to a single IP, default 0; if non-zero, the limit applies per IP instead of per domain

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False  # whether cookies are enabled, default True

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False  # whether the Telnet console is enabled (nothing to do with Windows), default True

# Override the default request headers:
DEFAULT_REQUEST_HEADERS = {  # request headers
    "User-Agent": "DYZB/1 CFNetwork/808.2.16 Darwin/16.3.0",
    # 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    # 'Accept-Language': 'en',
}

# Enable or disable spider middlewares
# See http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'douyuScripy.middlewares.DouyuscripySpiderMiddleware': 543,  # spider middleware; the smaller the value, the higher the priority
#}

# Enable or disable downloader middlewares
# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
#    'douyuScripy.middlewares.MyCustomDownloaderMiddleware': 543,  # downloader middleware
#}

# Enable or disable extensions
# See http://scrapy.readthedocs.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'douyuScripy.pipelines.DouyuscripyPipeline': 300,  # pipelines to use; with several, items go through lower values first
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See http://doc.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

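Example 1: Scraping itcast teacher names and information

Spider module (note: the code posted here is actually a Douyu API spider, the same kind as in Example 3, so its fields do not match the ItcastItem defined below)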

# -*- coding: utf-8 -*-
import json

import scrapy

from douyu.items import DouyuItem


class DouyumeinvSpider(scrapy.Spider):
    name = "douyumeinv"
    allowed_domains = ["capi.douyucdn.cn"]
    offset = 0
    url = "http://capi.douyucdn.cn/api/v1/getVerticalRoom?limit=20&offset="
    start_urls = [url + str(offset)]

    def parse(self, response):
        # In Scrapy, response.body is bytes and response.text is str (unicode)
        # Convert the JSON payload to Python objects; the "data" field is a list
        data = json.loads(response.text)["data"]
        for each in data:
            item = DouyuItem()
            item["nickname"] = each["nickname"]
            item["imagelink"] = each["vertical_src"]
            yield item

        # Request the next page until offset 40 has been fetched
        if self.offset < 40:
            self.offset += 20
            yield scrapy.Request(self.url + str(self.offset), callback=self.parse)

Pipeline module

import json


class ItcastPipeline(object):
    def __init__(self):
        self.filename = open('teacher.json', 'wb')

    def process_item(self, item, spider):
        text = json.dumps(dict(item), ensure_ascii=False) + '\n'
        # The file is opened in binary mode, so encode the string first
        self.filename.write(text.encode('utf-8'))
        return item

    def close_spider(self, spider):
        self.filename.close()

Items module

import scrapy


class ItcastItem(scrapy.Item):
    # define the fields for your item here like:
    name = scrapy.Field()
    title = scrapy.Field()
    info = scrapy.Field()

Settings module

# -*- coding: utf-8 -*-

# Scrapy settings for myScripy project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     http://doc.scrapy.org/en/latest/topics/settings.html
#     http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html
#     http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'myScripy'

SPIDER_MODULES = ['myScripy.spiders']
NEWSPIDER_MODULE = 'myScripy.spiders'


# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'myScripy (+http://www.yourdomain.com)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See http://scrapy.readthedocs.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
#DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
#DEFAULT_REQUEST_HEADERS = {
#   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#   'Accept-Language': 'en',
#}

# Enable or disable spider middlewares
# See http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'myScripy.middlewares.MyscripySpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
#    'myScripy.middlewares.MyCustomDownloaderMiddleware': 543,
#}

# Enable or disable extensions
# See http://scrapy.readthedocs.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'myScripy.pipelines.ItcastPipeline': 300,
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See http://doc.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

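Tip:

- In Scrapy, the page is available as response.body ==> bytes, and response.text ==> str (unicode)

- With requests: response.content ==> bytes, and response.text ==> str (unicode)

- With urllib2 (Python 2): response.read(). In Python 3, the equivalent urllib.request response.read() returns bytes.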

Example 2: Crawling the Tencent recruitment site with automatic pagination

Spider module

# -*- coding: utf-8 -*-
import scrapy

# Import path assumes the Scrapy project package is named TencentScripy
from TencentScripy.items import TencentscripyItem


class TencentSpider(scrapy.Spider):
    name = 'tencent'
    # Put only domain names here; do not casually add a / or a path,
    # otherwise follow-up requests may be filtered and only one page gets crawled
    allowed_domains = ['tencent.com']
    url = 'http://hr.tencent.com/position.php?&start='
    offset = 0
    start_urls = [url + str(offset)]

    def parse(self, response):
        rows = response.xpath("//tr[@class='even']|//tr[@class='odd']")
        for row in rows:
            # Create the item object inside the for loop, once per row
            item = TencentscripyItem()
            # extract_first() returns None for an empty cell instead of raising IndexError
            item['name'] = row.xpath('.//a/text()').extract_first()
            item['link'] = row.xpath('.//a/@href').extract_first()
            item['type'] = row.xpath('./td[2]/text()').extract_first()
            item['number'] = row.xpath('./td[3]/text()').extract_first()
            item['place'] = row.xpath('./td[4]/text()').extract_first()
            item['rtime'] = row.xpath('./td[5]/text()').extract_first()
            yield item

        if self.offset < 1680:
            self.offset += 10
            # After each page has been processed, request the next page:
            # increase self.offset by 10, build the new URL, and let self.parse handle the Response
            yield scrapy.Request(self.url + str(self.offset), callback=self.parse)  # pass the method itself, without ()

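Tip: a fancier way to increment the page number

import re

# Find the first run of digits anywhere in the URL and treat it as the current page offset
# (this only works if the page offset is the first number in the URL)
curpage = re.search(r'(\d+)', response.url).group(1)
page = int(curpage) + 10  # add 10 to the page offset in the link
# Replace that number with the new value; count=1 leaves any later numbers alone
url = re.sub(r'\d+', str(page), response.url, count=1)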
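The regex trick rewrites whatever number it finds first, so it breaks as soon as the URL contains another number earlier on, such as a version segment like /v2/. A more targeted alternative updates only the named query parameter. It is sketched here with the standard library's urllib.parse; next_page_url and its defaults are hypothetical names chosen for this example.

```python
from urllib.parse import parse_qs, urlencode, urlsplit, urlunsplit


def next_page_url(url, param="start", step=10):
    """Return url with the integer query parameter `param` increased by `step`."""
    parts = urlsplit(url)
    # keep_blank_values keeps parameters such as "q=" instead of dropping them
    query = parse_qs(parts.query, keep_blank_values=True)
    current = int(query.get(param, ["0"])[0])
    query[param] = [str(current + step)]
    # Rebuild the URL with only the query string changed
    return urlunsplit(parts._replace(query=urlencode(query, doseq=True)))


print(next_page_url("http://hr.tencent.com/position.php?&start=0"))
```

With the Tencent URL, the stray leading & is dropped from the rebuilt query string, which is equivalent.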

Pipeline module

import json


class TencentscripyPipeline(object):
    def __init__(self):
        self.filename = open('tencent-job.json', 'wb')

    def process_item(self, item, spider):
        text = json.dumps(dict(item), ensure_ascii=False).encode('utf-8') + b'\n'
        self.filename.write(text)
        return item

    def close_spider(self, spider):
        self.filename.close()

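Items module (note: this TencentItem uses different field names from the ones the spider above sets. A scrapy.Item raises KeyError for undeclared fields, so the item class and the spider must match.)

import scrapy


class TencentItem(scrapy.Item):
    # define the fields for your item here like:
    # job title
    positionname = scrapy.Field()
    # link to the job details
    positionlink = scrapy.Field()
    # job category
    positionType = scrapy.Field()
    # number of openings
    peopleNum = scrapy.Field()
    # work location
    workLocation = scrapy.Field()
    # publication date
    publishTime = scrapy.Field()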

Settings module

# -*- coding: utf-8 -*-

# Scrapy settings for tencent project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     http://doc.scrapy.org/en/latest/topics/settings.html
#     http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html
#     http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'tencent'

SPIDER_MODULES = ['tencent.spiders']
NEWSPIDER_MODULE = 'tencent.spiders'


# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'tencent (+http://www.yourdomain.com)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See http://scrapy.readthedocs.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
DOWNLOAD_DELAY = 2
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
DEFAULT_REQUEST_HEADERS = {
    "User-Agent": "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0;",
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8'
}

# Enable or disable spider middlewares
# See http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'tencent.middlewares.MyCustomSpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
#    'tencent.middlewares.MyCustomDownloaderMiddleware': 543,
#}

# Enable or disable extensions
# See http://scrapy.readthedocs.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'tencent.pipelines.TencentPipeline': 300,
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See http://doc.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

Example 3: Extracting images from JSON data***

Spider module

# -*- coding: utf-8 -*-
import json

import scrapy

# Import path assumes the Scrapy project package is named douyuScripy
from douyuScripy.items import DouyuscripyItem


class DouyuSpider(scrapy.Spider):
    name = 'douyu'
    allowed_domains = ['capi.douyucdn.cn']
    url = 'http://capi.douyucdn.cn/api/v1/getVerticalRoom?limit=20&offset='
    offset = 0
    start_urls = [url + str(offset)]

    def parse(self, response):
        # In Scrapy, response.body is bytes and response.text is str (unicode)
        # Convert the JSON payload to Python objects; the "data" field is a list
        py_dic = json.loads(response.text)
        # {error: 0,data: [{room_id: "2690605",room_src: "https://rpic.douyucdn.cn/live-cover/appCovers/2017/11/22/2690605_20171122081559_small.jpg",...
        data_list = py_dic['data']
        for data in data_list:
            # Keep this instantiation inside the loop, otherwise you get unexpected surprises
            item = DouyuscripyItem()
            item['room_id'] = data['room_id']
            item['room_name'] = data['room_name']
            item['vertical_src'] = data['vertical_src']
            yield item

        if self.offset < 40:
            self.offset += 20
            yield scrapy.Request(self.url + str(self.offset), callback=self.parse)

Pipeline module*** (saving images + renaming them)

import os

import scrapy
from scrapy.exceptions import DropItem
from scrapy.pipelines.images import ImagesPipeline
from scrapy.utils.project import get_project_settings


class DouyuscripyPipeline(ImagesPipeline):
    # IMAGES_STORE must be set in settings.py
    IMAGES_STORE = get_project_settings().get('IMAGES_STORE')

    def get_media_requests(self, item, info):
        # Get the image link
        image_url = item["vertical_src"]
        # Request the image URL to download the image
        yield scrapy.Request(image_url)

    def item_completed(self, results, item, info):
        # Get the paths of the successfully downloaded files
        image_path = [x["path"] for ok, x in results if ok]
        if not image_path:
            raise DropItem("image download failed")
        # Rename the file to "<stream room name>-<room id>.jpg"
        new_path = os.path.join(self.IMAGES_STORE, item["room_name"] + '-' + item['room_id'] + ".jpg")
        os.rename(os.path.join(self.IMAGES_STORE, image_path[0]), new_path)
        # Save the image's storage path in the item and return the item
        item["imagePath"] = new_path
        return item

Items module

import scrapy


class DouyuscripyItem(scrapy.Item):
    # define the fields for your item here like:
    vertical_src = scrapy.Field()  # image link
    room_name = scrapy.Field()  # room name
    room_id = scrapy.Field()  # room id
    imagePath = scrapy.Field()  # local path where the file is saved

Settings module

# -*- coding: utf-8 -*-

# Scrapy settings for douyuScripy project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     http://doc.scrapy.org/en/latest/topics/settings.html
#     http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html
#     http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'douyuScripy'

SPIDER_MODULES = ['douyuScripy.spiders']
NEWSPIDER_MODULE = 'douyuScripy.spiders'


# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'douyuScripy (+http://www.yourdomain.com)'

# Obey robots.txt rules
# ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See http://scrapy.readthedocs.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
DOWNLOAD_DELAY = 2
# The download delay setting will honor only one of:
#