Linux Monitoring's Three Musketeers: Prometheus

Posted by 缘之世界


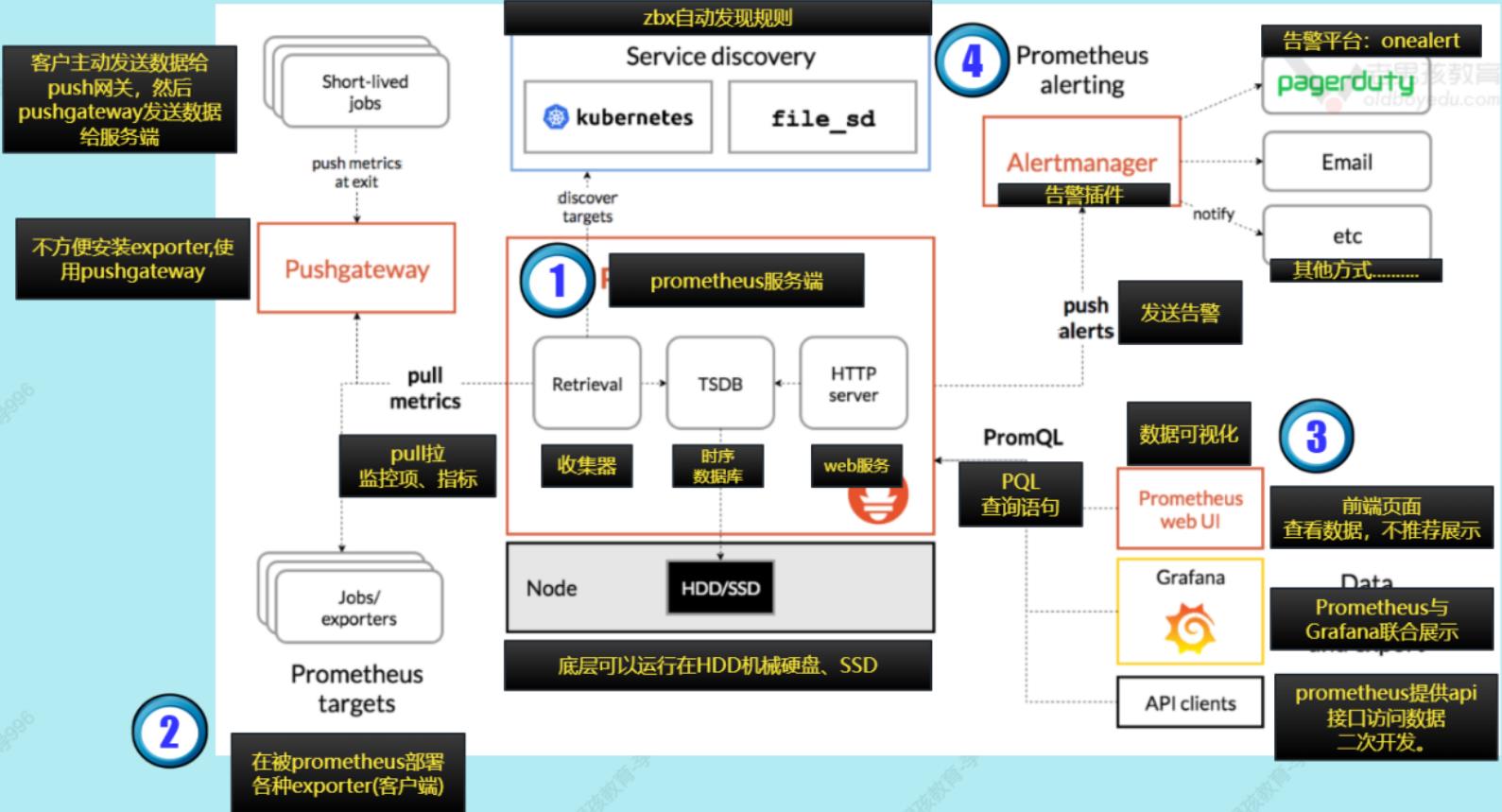

prometheus

I. Prometheus monitoring architecture

II. Prometheus vs Zabbix

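| Aspect | Prometheus | Zabbix |
|---|---|---|
| Language | Go (Golang) | PHP, C, Go |
| Deployment | Single binary; extract and run | yum or source build; needs a database and PHP dependencies |
| Ease of learning | Steeper learning curve | Easy to use |
| Monitoring method | Various exporters; collection is usually over HTTP | Templates, agents, custom items, many protocols |
| Typical use | Services, containers, Kubernetes | Low-level systems: hardware, OS, network |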

III. Using Prometheus

1. Environment preparation

| Role | Hostname | IP |
|---|---|---|
| Prometheus server | prometheus-server | 172.16.1.64 |
| Grafana server | grafana-server | 172.16.1.63 |

cat >>/etc/hosts<<EOF
172.16.1.64 m04 prom.cn
172.16.1.63 m03 gra.cn
172.16.1.62 m02 zbx.cn
172.16.1.81 docker01 docker01.cn
EOF

2. Time synchronization

The clocks are kept in sync with a cron job:

[root@prometheus-server ~]# crontab -l

#1. Time synchronization

*/2 * * * * /sbin/ntpdate ntp1.aliyun.com &>/dev/null

3. Deployment

[root@prometheus-server ~]# ll

total 74048

-rw-------. 1 root root 1340 Jan 9 09:09 anaconda-ks.cfg

-rw-r--r-- 1 root root 75819309 Mar 21 08:16 prometheus-2.33.3.linux-amd64.tar.gz

[root@prometheus-server ~]# mkdir -p /app/tools

[root@prometheus-server ~]# tar xf prometheus-2.33.3.linux-amd64.tar.gz -C /app/tools

[root@prometheus-server ~]# ll /app/tools/

total 0

drwxr-xr-x 4 3434 3434 132 Feb 12 2022 prometheus-2.33.3.linux-amd64

#Create a symlink

[root@prometheus-server ~]# ln -s /app/tools/prometheus-2.33.3.linux-amd64/ /app/tools/prometheus

[root@prometheus-server ~]# ll /app/tools/

total 0

lrwxrwxrwx 1 root root 41 Mar 21 21:11 prometheus -> /app/tools/prometheus-2.33.3.linux-amd64/

drwxr-xr-x 4 3434 3434 132 Feb 12 2022 prometheus-2.33.3.linux-amd64

[root@prometheus-server ~]# /app/tools/prometheus/prometheus --version

prometheus, version 2.33.3 (branch: HEAD, revision: 56e14463bccfbb6a8facfb663fed5e0ca9f8b387)

build user: root@4ee34e4f7340

build date: 20220211-20:48:21

go version: go1.17.7

platform: linux/amd64

#Start Prometheus

[root@prometheus-server ~]# cd /app/tools/prometheus/

[root@prometheus-server /app/tools/prometheus]# ll

total 196072

drwxr-xr-x 2 3434 3434 38 Feb 12 2022 console_libraries

drwxr-xr-x 2 3434 3434 173 Feb 12 2022 consoles

-rw-r--r-- 1 3434 3434 11357 Feb 12 2022 LICENSE

-rw-r--r-- 1 3434 3434 3773 Feb 12 2022 NOTICE

-rwxr-xr-x 1 3434 3434 104427627 Feb 12 2022 prometheus

-rw-r--r-- 1 3434 3434 934 Feb 12 2022 prometheus.yml

-rwxr-xr-x 1 3434 3434 96322328 Feb 12 2022 promtool

[root@prometheus-server /app/tools/prometheus]# ./prometheus

ts=2023-03-21T13:13:03.228Z caller=main.go:475 level=info msg="No time or size retention was set so using the default time retention" duration=15d

ts=2023-03-21T13:13:03.228Z caller=main.go:512 level=info msg="Starting Prometheus" version="(version=2.33.3, branch=HEAD, revision=56e14463bccfbb6a8facfb663fed5e0ca9f8b387)"

ts=2023-03-21T13:13:03.228Z caller=main.go:517 level=info build_context="(go=go1.17.7, user=root@4ee34e4f7340, date=20220211-20:48:21)"

ts=2023-03-21T13:13:03.228Z caller=main.go:518 level=info host_details="(Linux 3.10.0-1160.el7.x86_64 #1 SMP Mon Oct 19 16:18:59 UTC 2020 x86_64 prometheus-server (none))"

ts=2023-03-21T13:13:03.228Z caller=main.go:519 level=info fd_limits="(soft=1024, hard=4096)"

ts=2023-03-21T13:13:03.228Z caller=main.go:520 level=info vm_limits="(soft=unlimited, hard=unlimited)"

ts=2023-03-21T13:13:03.231Z caller=web.go:570 level=info component=web msg="Start listening for connections" address=0.0.0.0:9090

ts=2023-03-21T13:13:03.231Z caller=main.go:923 level=info msg="Starting TSDB ..."

ts=2023-03-21T13:13:03.249Z caller=head.go:493 level=info component=tsdb msg="Replaying on-disk memory mappable chunks if any"

ts=2023-03-21T13:13:03.249Z caller=head.go:527 level=info component=tsdb msg="On-disk memory mappable chunks replay completed" duration=5.757µs

ts=2023-03-21T13:13:03.249Z caller=head.go:533 level=info component=tsdb msg="Replaying WAL, this may take a while"

ts=2023-03-21T13:13:03.250Z caller=tls_config.go:195 level=info component=web msg="TLS is disabled." http2=false

ts=2023-03-21T13:13:03.250Z caller=head.go:604 level=info component=tsdb msg="WAL segment loaded" segment=0 maxSegment=0

ts=2023-03-21T13:13:03.250Z caller=head.go:610 level=info component=tsdb msg="WAL replay completed" checkpoint_replay_duration=21.734µs wal_replay_duration=1.312807ms total_replay_duration=1.358513ms

ts=2023-03-21T13:13:03.251Z caller=main.go:944 level=info fs_type=XFS_SUPER_MAGIC

ts=2023-03-21T13:13:03.251Z caller=main.go:947 level=info msg="TSDB started"

ts=2023-03-21T13:13:03.251Z caller=main.go:1128 level=info msg="Loading configuration file" filename=prometheus.yml

ts=2023-03-21T13:13:03.300Z caller=main.go:1165 level=info msg="Completed loading of configuration file" filename=prometheus.yml totalDuration=48.892563ms db_storage=956ns remote_storage=22.014µs web_handler=532ns query_engine=1.249µs scrape=10.208578ms scrape_sd=29.288µs notify=29.791µs notify_sd=8.427µs rules=3.212µs

ts=2023-03-21T13:13:03.300Z caller=main.go:896 level=info msg="Server is ready to receive web requests."

Note: by default Prometheus reads the prometheus.yml configuration file from the current working directory.

4. Access the web UI (port 9090)
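The web UI is backed by an HTTP API, so the same data can also be read from a script. The sketch below assumes the standard `/api/v1/query` instant-query endpoint and the `prom.cn` host name from the hosts file. It builds the query URL and maps each returned series' `instance` label to its value. The helper names are illustrative only, and the parser handles only vector results.

```python
import json
import urllib.parse
import urllib.request

# Assumption: adjust to wherever your Prometheus server listens.
PROM_URL = "http://prom.cn:9090"


def build_query_url(base_url: str, expr: str) -> str:
    """Build an instant-query URL for the /api/v1/query endpoint."""
    return f"{base_url}/api/v1/query?" + urllib.parse.urlencode({"query": expr})


def parse_vector(body: str) -> dict:
    """Map each series' instance label to its value in an instant-vector response."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    result = {}
    for series in payload["data"]["result"]:
        _, value = series["value"]  # each sample is [unix_time, "value as string"]
        result[series["metric"].get("instance", "")] = float(value)
    return result


def instant_query(expr: str, base_url: str = PROM_URL) -> dict:
    """Run one instant query against a live server (needs network access)."""
    with urllib.request.urlopen(build_query_url(base_url, expr), timeout=5) as resp:
        return parse_vector(resp.read().decode())
```

Against a running server, `instant_query("up")` should return something like `{"localhost:9090": 1.0}` for the self-monitoring job. The `up` metric is 1 when the last scrape of a target succeeded.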

5. Manage Prometheus with systemd

[root@prometheus-server /app/tools/prometheus]# cat /usr/lib/systemd/system/prometheus.service

[Unit]

Description=Prometheus Server

After=network.target

[Service]

Type=simple

ExecStart=/app/tools/prometheus/prometheus --config.file=/app/tools/prometheus/prometheus.yml

KillMode=process

[Install]

WantedBy=multi-user.target

[root@prometheus-server /app/tools/prometheus]# systemctl daemon-reload

[root@prometheus-server /app/tools/prometheus]# systemctl status prometheus.service

● prometheus.service - Prometheus Server

Loaded: loaded (/usr/lib/systemd/system/prometheus.service; disabled; vendor preset: disabled)

Active: active (running) since Tue 2023-03-21 21:18:40 CST; 5s ago

Main PID: 2837 (prometheus)

CGroup: /system.slice/prometheus.service

└─2837 /app/tools/prometheus/prometheus --config.file=/app/tools/prometheus/prometheus.yml

Mar 21 21:18:40 prometheus-server prometheus[2837]: ts=2023-03-21T13:18:40.696Z caller=head.go:527 level=info component=tsdb msg="On-disk memory m…on=5.263µs

Mar 21 21:18:40 prometheus-server prometheus[2837]: ts=2023-03-21T13:18:40.696Z caller=head.go:533 level=info component=tsdb msg="Replaying WAL, t...a while"

Mar 21 21:18:40 prometheus-server prometheus[2837]: ts=2023-03-21T13:18:40.697Z caller=tls_config.go:195 level=info component=web msg="TLS is disa...p2=false

Mar 21 21:18:40 prometheus-server prometheus[2837]: ts=2023-03-21T13:18:40.698Z caller=head.go:604 level=info component=tsdb msg="WAL segment load...egment=0

Mar 21 21:18:40 prometheus-server prometheus[2837]: ts=2023-03-21T13:18:40.698Z caller=head.go:610 level=info component=tsdb msg="WAL replay compl…1.888408ms

Mar 21 21:18:40 prometheus-server prometheus[2837]: ts=2023-03-21T13:18:40.698Z caller=main.go:944 level=info fs_type=XFS_SUPER_MAGIC

Mar 21 21:18:40 prometheus-server prometheus[2837]: ts=2023-03-21T13:18:40.698Z caller=main.go:947 level=info msg="TSDB started"

Mar 21 21:18:40 prometheus-server prometheus[2837]: ts=2023-03-21T13:18:40.698Z caller=main.go:1128 level=info msg="Loading configuration file" fi...heus.yml

Mar 21 21:18:40 prometheus-server prometheus[2837]: ts=2023-03-21T13:18:40.718Z caller=main.go:1165 level=info msg="Completed loading of configuration fil…µs

Mar 21 21:18:40 prometheus-server prometheus[2837]: ts=2023-03-21T13:18:40.718Z caller=main.go:896 level=info msg="Server is ready to receive web requests."

Hint: Some lines were ellipsized, use -l to show in full.

6. The web UI

View all metric names and values

IV. Prometheus configuration

1. Server command-line options

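| Core prometheus option | Description |
|---|---|
| --config.file="prometheus.yml" | Configuration file; defaults to prometheus.yml in the current directory |
| --web.listen-address="0.0.0.0:9090" | Listen address and port of the web UI and API. To add access authentication, put nginx in front of it. |
| --web.max-connections=512 | Maximum number of simultaneous connections |
| --storage.tsdb.path="data/" | TSDB data directory, relative to the working directory |
| --log.level=info | Log level: info (normal) or debug (very verbose). By default Prometheus logs to the terminal (stderr). |
| --log.format=logfmt | Log format: logfmt (default) or json (useful when logs are shipped to a log collector) |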

Start on boot

Add the following to /etc/rc.local:

/app/tools/prometheus/prometheus --config.file="/app/tools/prometheus/prometheus.yml" --web.listen-address="0.0.0.0:9090" --web.max-connections=512 &>/var/log/prometheus.log &

When Prometheus runs under systemd, follow its logs with:

journalctl -f -u prometheus.service

2. Configuration file

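[root@prometheus-server ~]# cat /app/tools/prometheus/prometheus.yml
# Global settings
global:
  # How often Prometheus scrapes (collects) data
  scrape_interval: 15s
  # How often rules (usually alerting rules) are evaluated
  evaluation_interval: 15s
  # Timeout for each scrape; defaults to 10 seconds
  # scrape_timeout is set to the global default (10s).

# Alerting: where alerts are sent (Alertmanager)
alerting:
  alertmanagers:
    - static_configs:
        - targets:

rule_files:

# Scrape configuration (the clients to collect from)
scrape_configs:
  # Job name: identifies which machines and which metrics are collected
  - job_name: "prometheus"
    # Static config: targets are listed directly; restart Prometheus after changing them
    static_configs:
      - targets: ["localhost:9090"]
    # file_sd_configs: dynamic config; target files are re-read automatically,
    # so changes are picked up without a restart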

#Edit the server config file and give the Prometheus self-monitoring job its own name.

[root@prometheus-server ~]# cat /app/tools/prometheus/prometheus.yml
global:
  scrape_interval: 15s
  evaluation_interval: 15s
alerting:
  alertmanagers:
    - static_configs:
        - targets:
rule_files:
scrape_configs:
  # Job renamed
  - job_name: "prometheus-server"
    static_configs:
      - targets: ["localhost:9090"]

V. Prometheus exporters

1. Overview

Prometheus has a large number of exporters. Most of them run either as a standalone binary or as a Docker container.

| Exporter | Description |
|---|---|
| node_exporter | Collects basic node information (system monitoring) |
| xxx_exporter | Monitors a specific service (one exporter per service) |

2. Environment

| Node | IP |
|---|---|
| prometheus-server | 10.0.0.64/172.16.1.64 |
| grafana-server | 10.0.0.63/172.16.1.63 |

3. Deploy node_exporter

#Deploy on prometheus-server

[root@prometheus-server ~]# ll

total 82872

-rw-------. 1 root root 1340 Jan 9 09:09 anaconda-ks.cfg

-rw-r--r-- 1 root root 9033415 Mar 21 08:16 node_exporter-1.3.1.linux-amd64.tar.gz

[root@prometheus-server ~]# tar xf node_exporter-1.3.1.linux-amd64.tar.gz -C /app/tools/

[root@prometheus-server ~]# ln -s /app/tools/node_exporter-1.3.1.linux-amd64/ /app/tools/node_exporter

[root@prometheus-server ~]# ln -s /app/tools/node_exporter/node_exporter /bin/

#Check that the installation works

[root@prometheus-server ~]# node_exporter

ts=2023-03-22T12:19:26.355Z caller=node_exporter.go:182 level=info msg="Starting node_exporter" version="(version=1.3.1, branch=HEAD, revision=a2321e7b940ddcff26873612bccdf7cd4c42b6b6)"

ts=2023-03-22T12:19:26.355Z caller=node_exporter.go:183 level=info msg="Build context" build_context="(go=go1.17.3, user=root@243aafa5525c, date=20211205-11:09:49)"

ts=2023-03-22T12:19:26.355Z caller=node_exporter.go:185 level=warn msg="Node Exporter is running as root user. This exporter is designed to run as unpriviledged user, root is not required."

ts=2023-03-22T12:19:26.356Z caller=filesystem_common.go:111 level=info collector=filesystem msg="Parsed flag --collector.filesystem.mount-points-exclude" flag=^/(dev|proc|run/credentials/.+|sys|var/lib/docker/.+)($|/)

ts=2023-03-22T12:19:26.356Z caller=filesystem_common.go:113 level=info collector=filesystem msg="Parsed flag --collector.filesystem.fs-types-exclude" flag=^(autofs|binfmt_misc|bpf|cgroup2?|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|iso9660|mqueue|nsfs|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|selinuxfs|squashfs|sysfs|tracefs)$

ts=2023-03-22T12:19:26.443Z caller=node_exporter.go:108 level=info msg="Enabled collectors"

ts=2023-03-22T12:19:26.443Z caller=node_exporter.go:115 level=info collector=arp

ts=2023-03-22T12:19:26.443Z caller=node_exporter.go:115 level=info collector=bcache

ts=2023-03-22T12:19:26.443Z caller=node_exporter.go:115 level=info collector=bonding

...

...

...

ts=2023-03-22T12:19:26.444Z caller=node_exporter.go:199 level=info msg="Listening on" address=:9100

ts=2023-03-22T12:19:26.444Z caller=tls_config.go:195 level=info msg="TLS is disabled." http2=false

#Create a systemd unit

[root@prometheus-server ~]# cat /usr/lib/systemd/system/node-exporter.service

[Unit]

Description=node exporter

After=network.target

[Service]

Type=simple

ExecStart=/bin/node_exporter

KillMode=process

[Install]

WantedBy=multi-user.target

[root@prometheus-server ~]# systemctl daemon-reload

[root@prometheus-server ~]# systemctl enable --now node-exporter.service

Created symlink from /etc/systemd/system/multi-user.target.wants/node-exporter.service to /usr/lib/systemd/system/node-exporter.service.

[root@prometheus-server ~]# ss -lntup|grep node

tcp LISTEN 0 128 [::]:9100 [::]:* users:(("node_exporter",pid=3749,fd=3))

#Deploy on grafana-server

[root@grafana-server ~]# mkdir -p /app/tools

[root@grafana-server ~]# tar xf node_exporter-1.3.1.linux-amd64.tar.gz -C /app/tools/

[root@grafana-server ~]# ln -s /app/tools/node_exporter-1.3.1.linux-amd64/ /app/tools/node_exporter

[root@grafana-server ~]# ln -s /app/tools/node_exporter/node_exporter /bin/

[root@grafana-server ~]# cat /usr/lib/systemd/system/node-exporter.service

[Unit]

Description=node exporter

After=network.target

[Service]

Type=simple

ExecStart=/bin/node_exporter

KillMode=process

[Install]

WantedBy=multi-user.target

[root@grafana-server ~]# systemctl daemon-reload

[root@grafana-server ~]# systemctl enable --now node-exporter.service

Created symlink from /etc/systemd/system/multi-user.target.wants/node-exporter.service to /usr/lib/systemd/system/node-exporter.service.

[root@grafana-server ~]# ss -lntup|grep node

tcp LISTEN 0 128 [::]:9100 [::]:* users:(("node_exporter",pid=4042,fd=3))
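Exporters serve plain text over HTTP, and that text is what Prometheus scrapes. The minimal sketch below parses that text exposition format, as served at, for example, `http://gra.cn:9100/metrics`. It skips `# HELP`/`# TYPE` comments and splits each sample into name, labels and value. It is a simplification: label values containing `}` are not handled.

```python
import re

# metric_name{optional="labels"} value [optional timestamp]
LINE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)'
    r'(?:\{(?P<labels>[^}]*)\})?'
    r'\s+(?P<value>\S+)(?:\s+\d+)?$'
)
LABEL_RE = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')


def parse_metrics(text: str):
    """Yield (name, labels, value) tuples from Prometheus text exposition output."""
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blank lines, # HELP and # TYPE
            continue
        m = LINE_RE.match(line)
        if not m:
            continue
        labels = dict(LABEL_RE.findall(m.group("labels") or ""))
        # float() also accepts the special values NaN, +Inf and -Inf
        yield m.group("name"), labels, float(m.group("value"))
```

To try it against a live exporter, pass it `urllib.request.urlopen("http://gra.cn:9100/metrics").read().decode()`.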

4. Static configuration on the Prometheus server

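Static configuration: the targets in static_configs are written into the config file and take effect after Prometheus is restarted. Use case: a fixed set of servers.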

[root@prometheus-server ~]# cat /app/tools/prometheus/prometheus.yml
global:
  scrape_interval: 15s
  evaluation_interval: 15s
alerting:
  alertmanagers:
    - static_configs:
        - targets:
rule_files:
scrape_configs:
  - job_name: "prometheus-server"
    static_configs:
      - targets: ["localhost:9090"]
  # Add the following section
  - job_name: "basic_info_node_exporter"
    static_configs:
      - targets:
          - "prom.cn:9100"
          - "gra.cn:9100"

[root@prometheus-server ~]# systemctl restart prometheus.service

5. Dynamic (file-based) configuration in Prometheus

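Write the exporters' host:port pairs into a file. Prometheus re-reads the file periodically and adds the targets it lists.

This solves the problem of adding and removing large numbers of hosts.

Dynamic configuration: with file_sd_configs the targets live in a separate file that Prometheus reloads on its own. Use case: web clusters whose membership changes often.

file_sd_configs: dynamically reads and loads target files.

files: the target files to load.

refresh_interval: how often the files are re-read.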

[root@prometheus-server ~]# cat /app/tools/prometheus/prometheus.yml
global:
  scrape_interval: 15s
  evaluation_interval: 15s
alerting:
  alertmanagers:
    - static_configs:
        - targets:
rule_files:
scrape_configs:
  - job_name: "prometheus-server"
    static_configs:
      - targets: ["localhost:9090"]
  # Add the following section
  - job_name: "basic_info_node_exporter_discovery"
    file_sd_configs:
      - files:
          - /app/tools/prometheus/discovery_node_exporter.json
        refresh_interval: 5s

[root@prometheus-server ~]# cat /app/tools/prometheus/discovery_node_exporter.json
[
  {
    "targets": [
      "prom.cn:9100",
      "gra.cn:9100"
    ]
  }
]

[root@prometheus-server ~]# systemctl restart prometheus.service
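With many hosts, the target file is usually generated rather than edited by hand. The sketch below writes the same JSON structure (a list of target groups, each with `targets` and optional `labels`). The function name and the optional labels are illustrative assumptions. It writes to a temporary file in the same directory and renames it into place, so Prometheus never reads a half-written file.

```python
import json
import os
import tempfile


def write_file_sd(path, targets, labels=None):
    """Write a file_sd target file atomically (write a temp file, then rename)."""
    group = {"targets": sorted(set(targets))}  # de-duplicate for stable output
    if labels:
        group["labels"] = labels
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump([group], f, indent=2)
    os.replace(tmp, path)  # atomic rename on the same filesystem
```

For example, `write_file_sd("/app/tools/prometheus/discovery_node_exporter.json", ["prom.cn:9100", "gra.cn:9100"])` reproduces the file shown above. Note that `mkstemp` creates the file with mode 0600, which is fine here because Prometheus runs as root.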

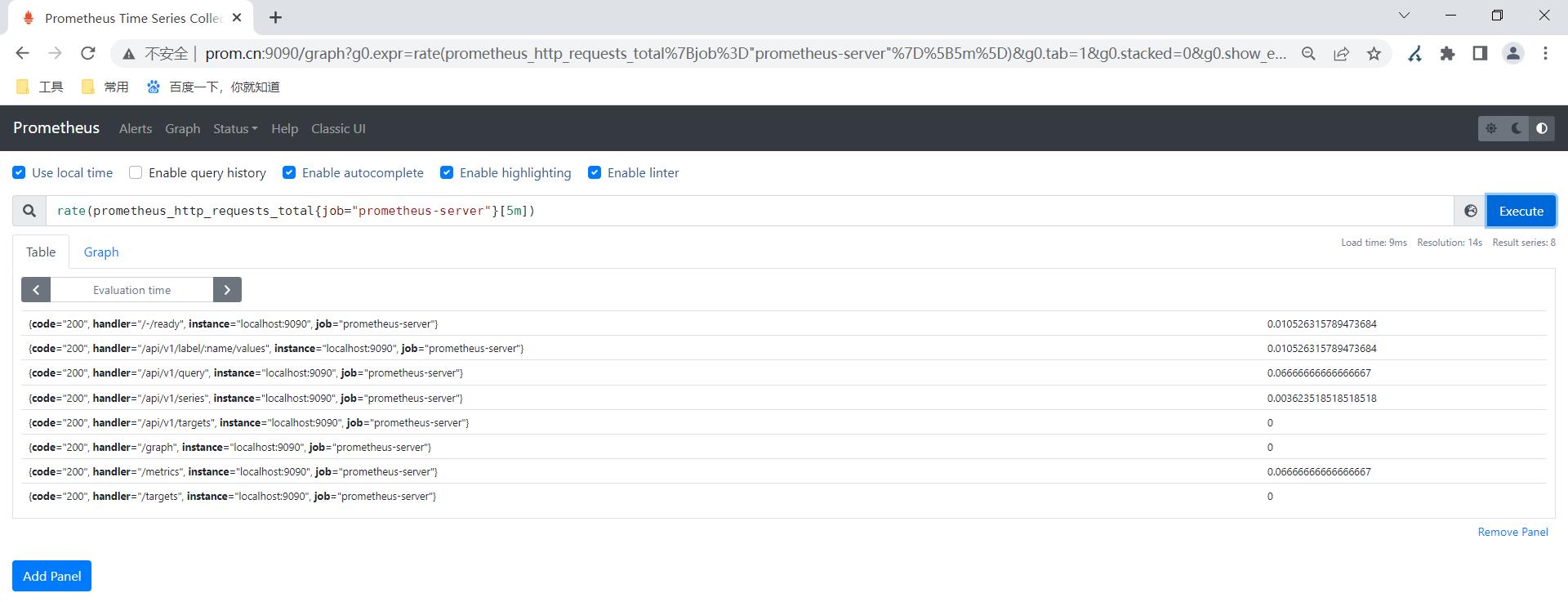

VI. Filtering with PromQL

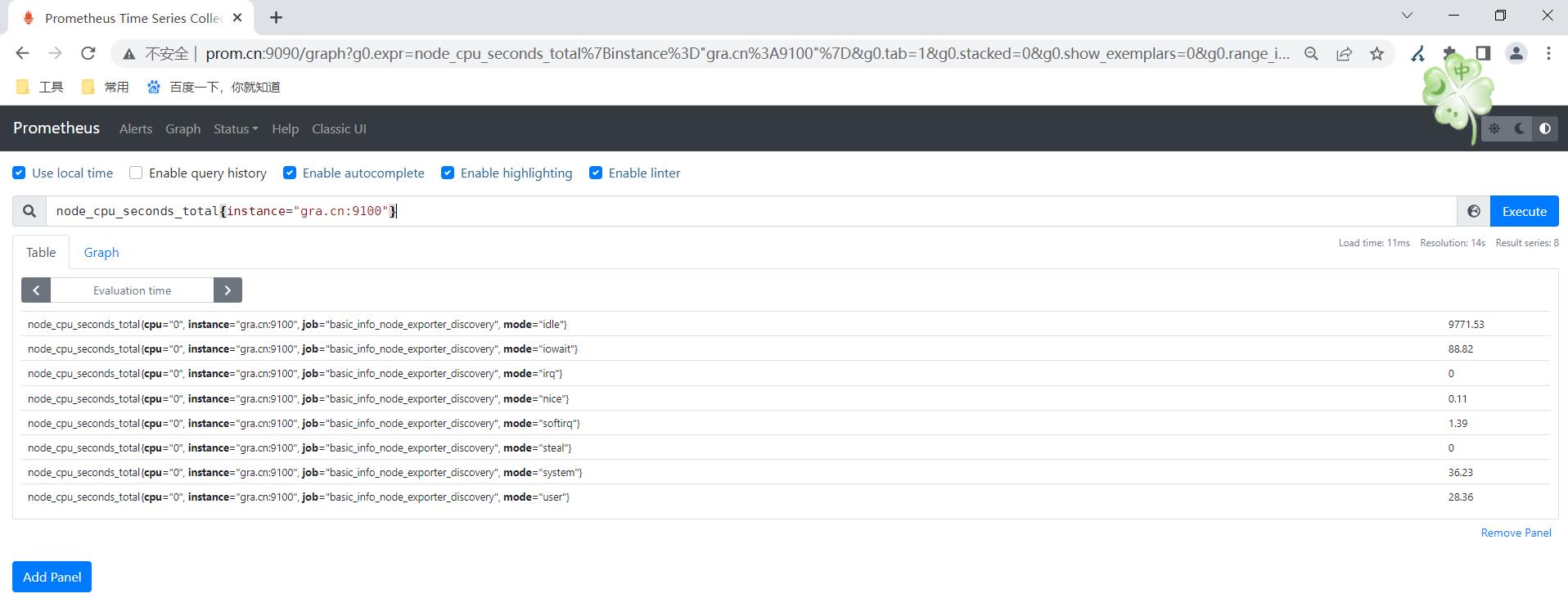

1. Example: basic filtering

To get the free memory of all hosts, enter:

node_memory_MemFree_bytes

Converted to MiB:

node_memory_MemFree_bytes/1024^2

1-minute load average: node_load1

2. Example: filtering with label matchers

Select the data of a specific host:

node_cpu_seconds_total{instance="gra.cn:9100"}

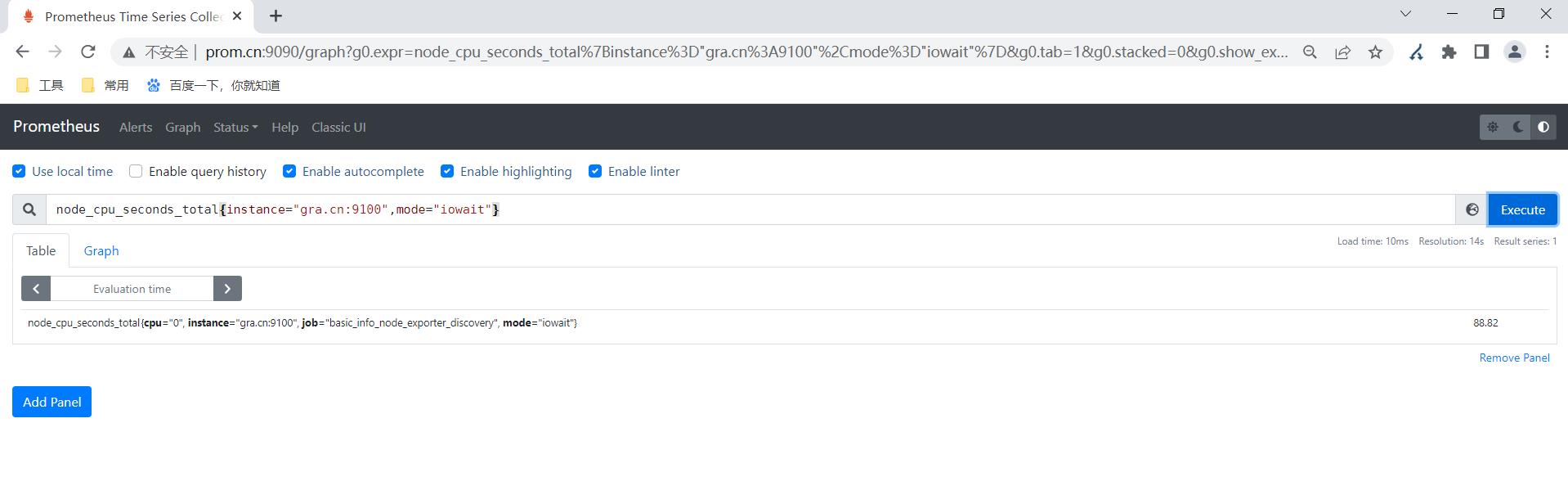

Select only the iowait CPU time of a specific host:

node_cpu_seconds_total{instance="gra.cn:9100",mode="iowait"}

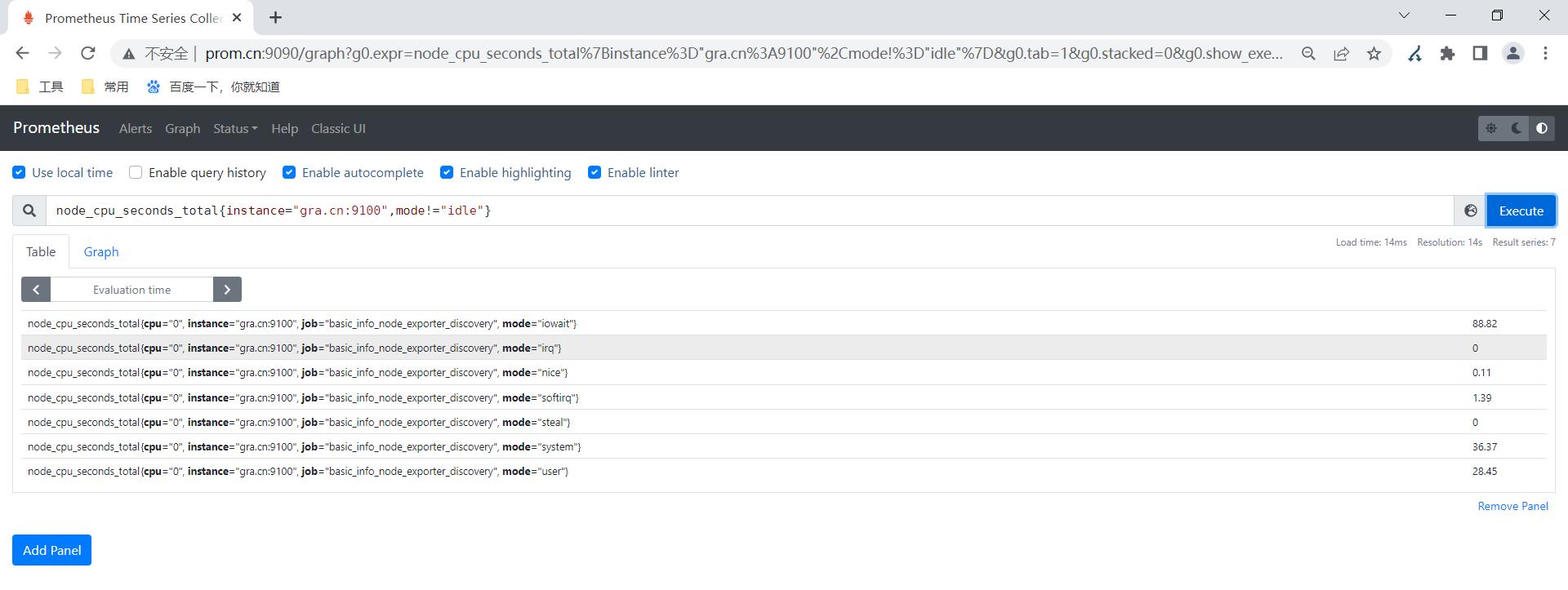

Select all non-idle CPU metrics of the host:

node_cpu_seconds_total{instance="gra.cn:9100",mode!="idle"}
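The selectors above keep a series only if every label matcher in the braces holds. As a sketch of those semantics, the function below evaluates the four PromQL operators `=`, `!=`, `=~` and `!~` against one series' labels. A missing label counts as the empty string, and regexes must match the whole value, as in PromQL. One caveat: it uses Python's `re`, while Prometheus uses RE2 syntax, so exotic patterns may behave differently.

```python
import re


def matches(labels, matchers):
    """Return True if a series' labels satisfy all (label, op, value) matchers."""
    for name, op, value in matchers:
        actual = labels.get(name, "")  # absent labels behave like ""
        if op == "=":
            ok = actual == value
        elif op == "!=":
            ok = actual != value
        elif op == "=~":
            ok = re.fullmatch(value, actual) is not None  # fully anchored
        elif op == "!~":
            ok = re.fullmatch(value, actual) is None
        else:
            raise ValueError(f"unknown operator {op!r}")
        if not ok:
            return False
    return True
```

For example, `{instance="gra.cn:9100",mode!="idle"}` corresponds to `matches(labels, [("instance", "=", "gra.cn:9100"), ("mode", "!=", "idle")])`.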

3. Common functions

Get the last minute of CPU data:

node_cpu_seconds_total{cpu="0",instance="gra.cn:9100",mode="iowait"}[1m]

[1m] selects all samples from the last minute (a range vector).

Use case for []: range vectors are passed to PromQL functions such as rate().

3.1 The rate function

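rate(v range-vector) calculates the per-second average rate of increase of the time series in the range vector. Breaks in monotonicity (such as counter resets due to target restarts) are automatically adjusted for. Also, the calculation extrapolates to the ends of the time range, allowing for missed scrapes or imperfect alignment of scrape cycles with the range's time period.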

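The following example expression returns the per-second rate of HTTP requests as measured over the last 5 minutes, per time series in the range vector: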

rate(prometheus_http_requests_total{job="prometheus-server"}[5m])

rate should only be used with counters. It is best suited for alerting and for graphing slow-moving counters.
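The core of rate() can be sketched in a few lines. The simplified version below takes the (timestamp, value) samples of one counter inside the window and adds up the increases. When the value drops, it treats the drop as a counter reset, so the counter is assumed to have restarted from zero. Unlike the real rate(), this sketch does not extrapolate to the window edges, so its result can be slightly lower than what Prometheus reports.

```python
def simple_rate(samples):
    """Per-second increase over a window of (timestamp_seconds, counter_value) samples.

    Counter resets are compensated; no extrapolation to the window edges is done.
    """
    if len(samples) < 2:
        return None  # at least two samples are needed to compute a rate
    increase = 0.0
    prev = samples[0][1]
    for _, value in samples[1:]:
        if value < prev:       # counter reset, e.g. the target restarted
            increase += value  # the counter started again from zero
        else:
            increase += value - prev
        prev = value
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed
```

For example, samples of 10, 20, 5 and 15 taken 15 s apart contain one reset. The total increase is 10 + 5 + 10 = 25 over 45 s, so the rate is about 0.56 per second.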

3.2 sum (summation)

3.3 count (counting)
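sum and count are aggregation operators. With `by (label, ...)` they first group the series by the listed labels and drop all other labels. Without `by`, they aggregate everything into one result. The sketch below imitates that grouping on a few hypothetical (labels, value) pairs. It is not how Prometheus implements it internally.

```python
from collections import defaultdict


def aggregate(series, by, op):
    """Group (labels, value) pairs by the labels in `by`, then apply op per group."""
    groups = defaultdict(list)
    for labels, value in series:
        key = tuple((name, labels.get(name, "")) for name in by)
        groups[key].append(value)
    return {key: op(values) for key, values in groups.items()}


# Hypothetical series, shaped like node_cpu_seconds_total
series = [
    ({"instance": "gra.cn:9100", "mode": "idle"}, 100.0),
    ({"instance": "gra.cn:9100", "mode": "user"}, 20.0),
    ({"instance": "prom.cn:9100", "mode": "idle"}, 300.0),
]
sum_by_instance = aggregate(series, ["instance"], sum)    # sum(...) by (instance)
count_by_instance = aggregate(series, ["instance"], len)  # count(...) by (instance)
```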

3.4 Other functions

https://prometheus.io/docs/prometheus/2.37/querying/functions/

4. Complex expressions

4.1 Memory usage percentage

(node_memory_MemTotal_bytes -

node_memory_MemFree_bytes) /

node_memory_MemTotal_bytes * 100
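node_exporter takes these memory fields from /proc/meminfo and converts the kB values to bytes. The sketch below applies the same formula to that file's text so the arithmetic can be checked by hand. It also shows the variant based on MemAvailable, the kernel's estimate of memory that is available without swapping. Because MemFree excludes reclaimable page cache, the MemFree-based percentage usually looks higher.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo lines such as 'MemTotal:  1863048 kB' into bytes."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if not parts:
            continue
        value = int(parts[0])
        if len(parts) > 1 and parts[1] == "kB":
            value *= 1024  # node_exporter reports these fields in bytes too
        info[key.strip()] = value
    return info


def memory_usage_percent(info, field="MemFree"):
    """(MemTotal - field) / MemTotal * 100, the same arithmetic as the PromQL above."""
    total = info["MemTotal"]
    return (total - info[field]) / total * 100
```

On a real host, call `memory_usage_percent(parse_meminfo(open("/proc/meminfo").read()))`. Pass `"MemAvailable"` as the second argument for the cache-aware figure.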

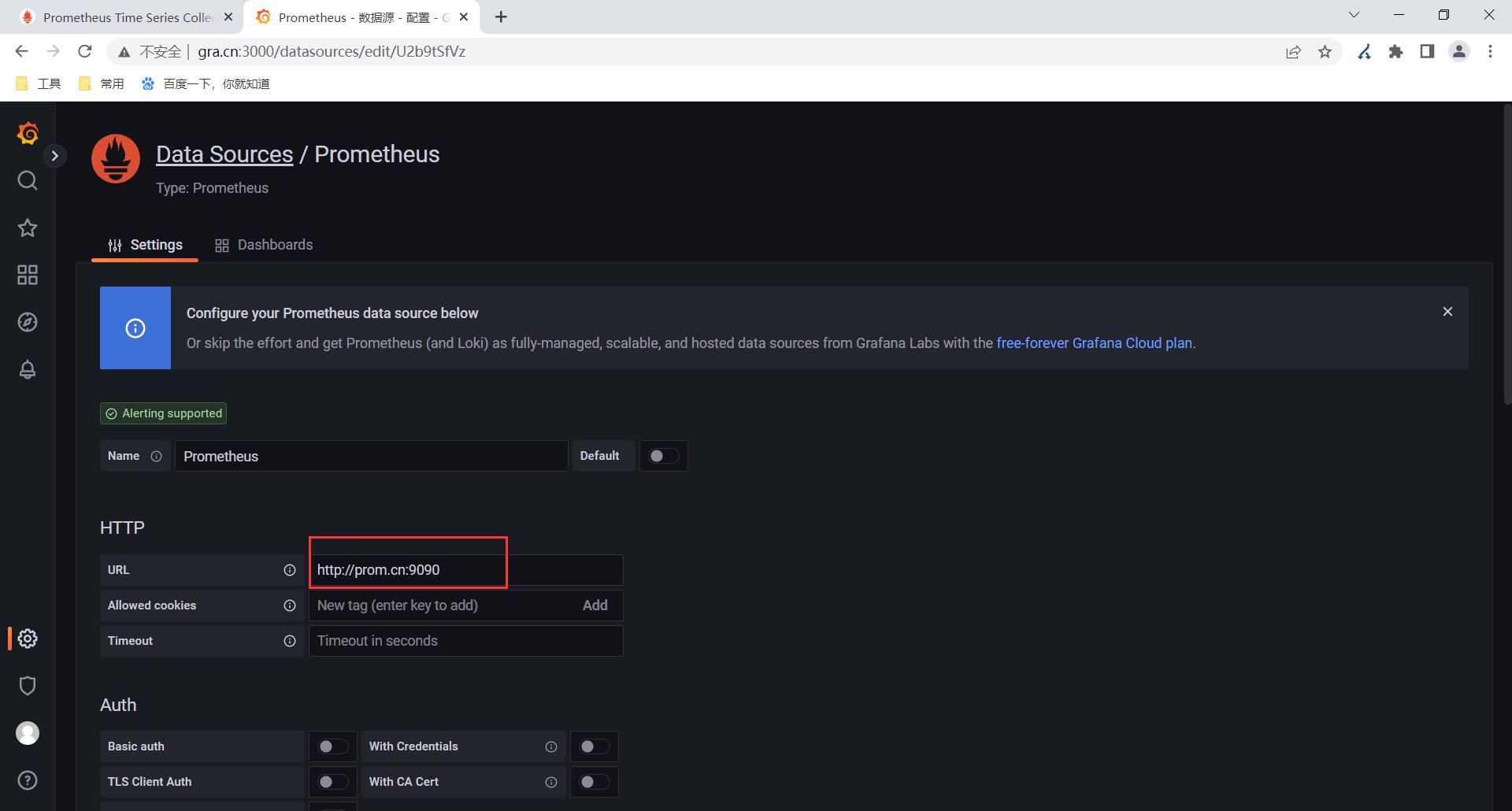

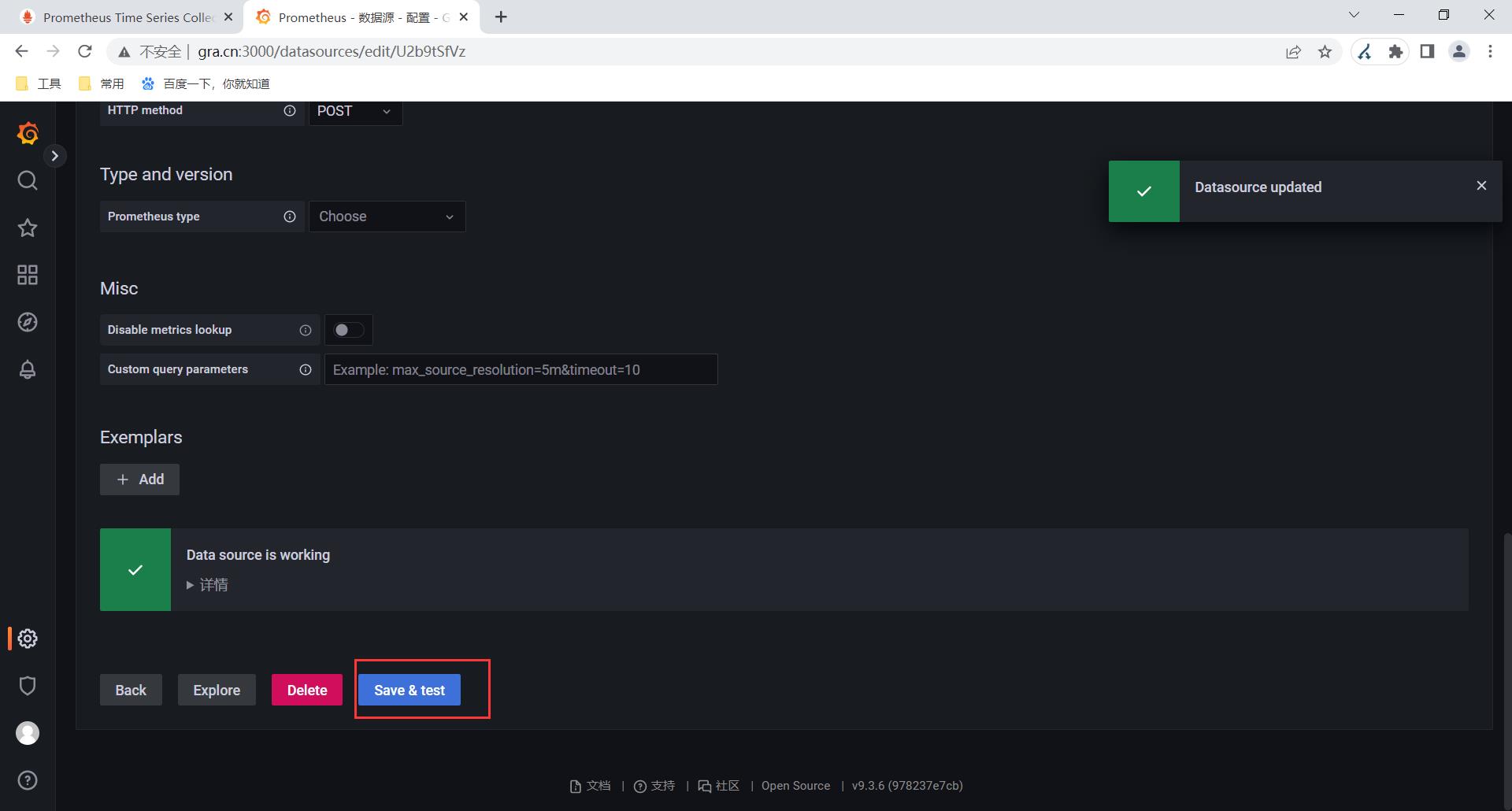

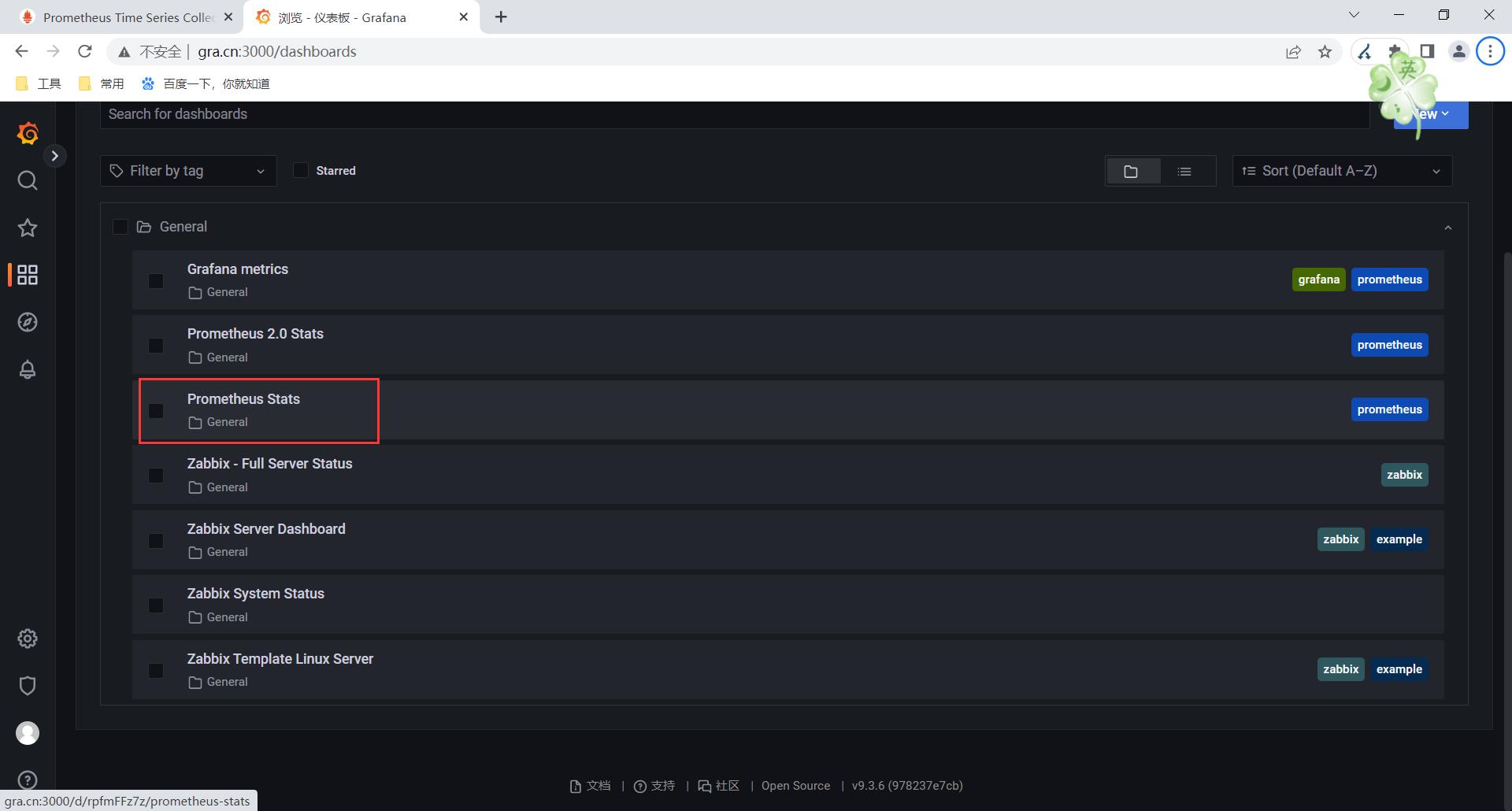

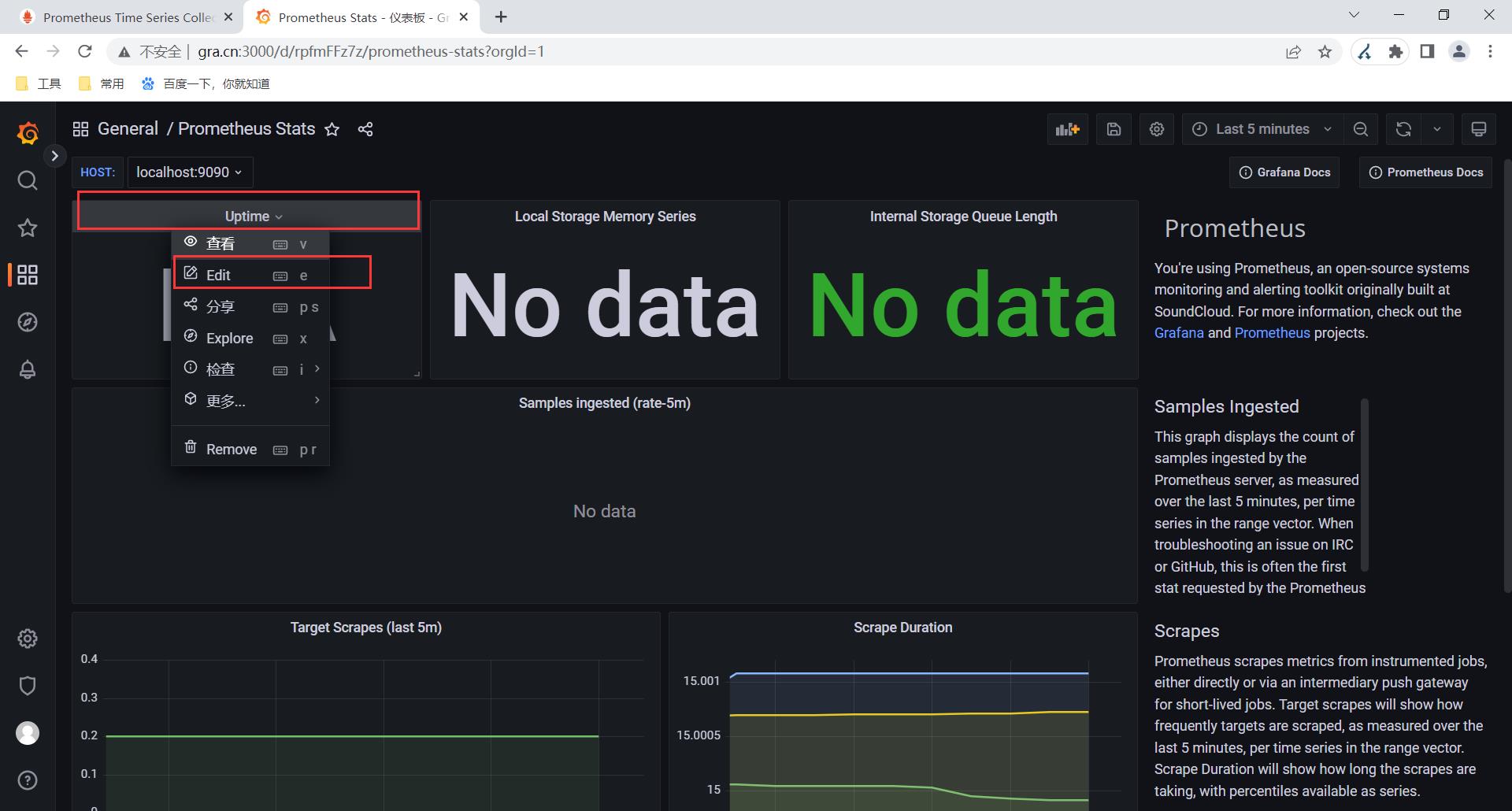

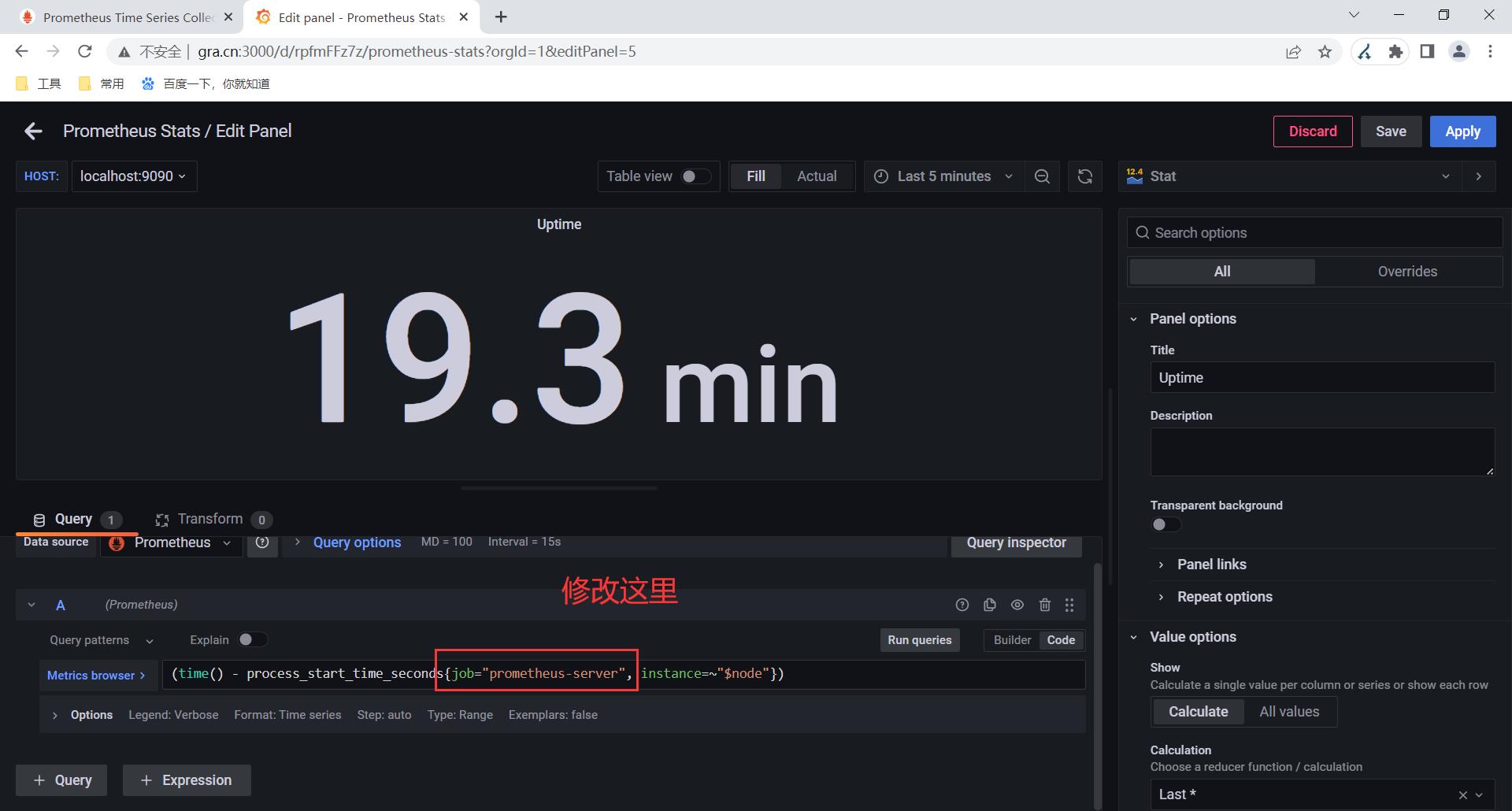

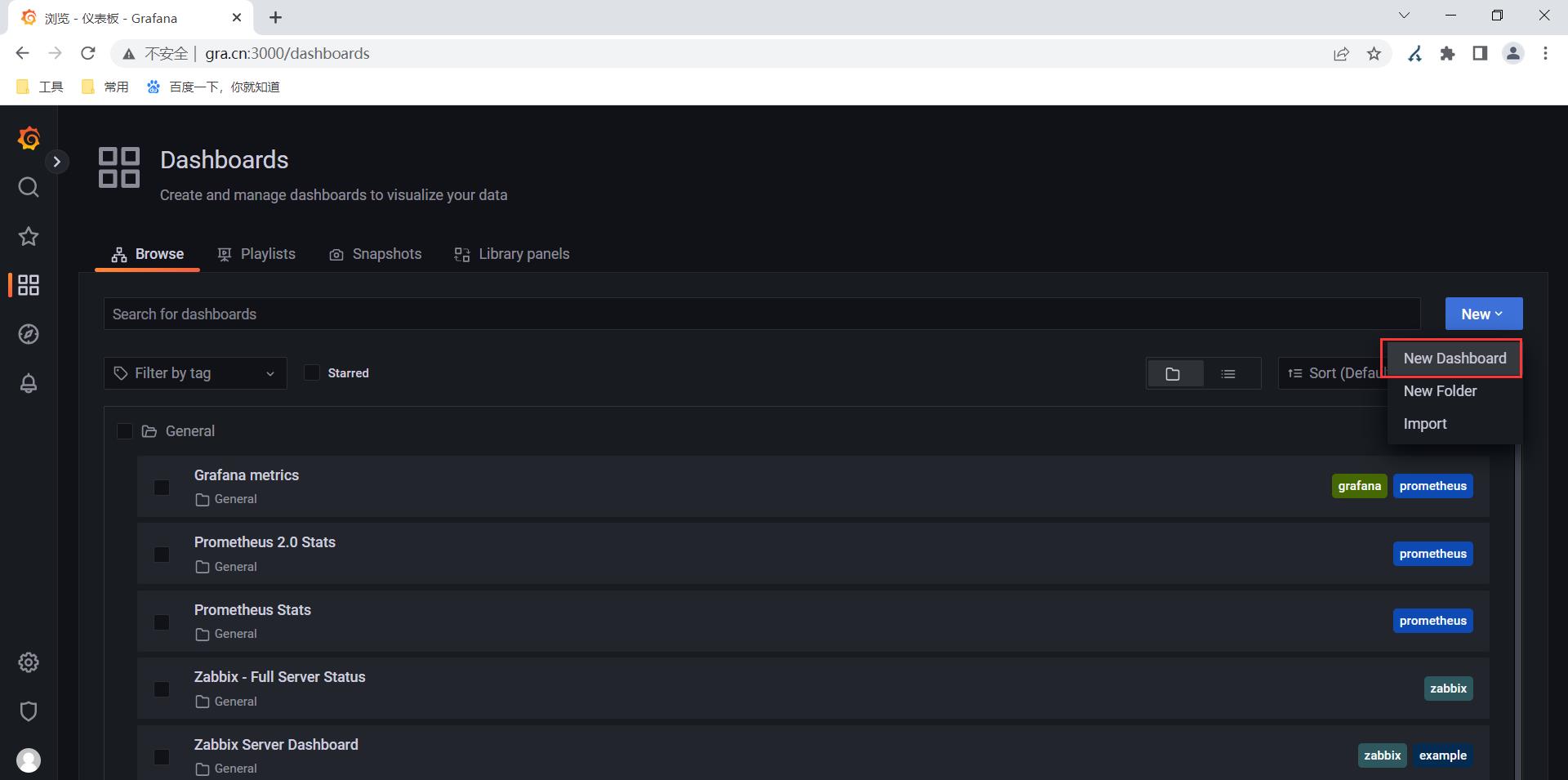

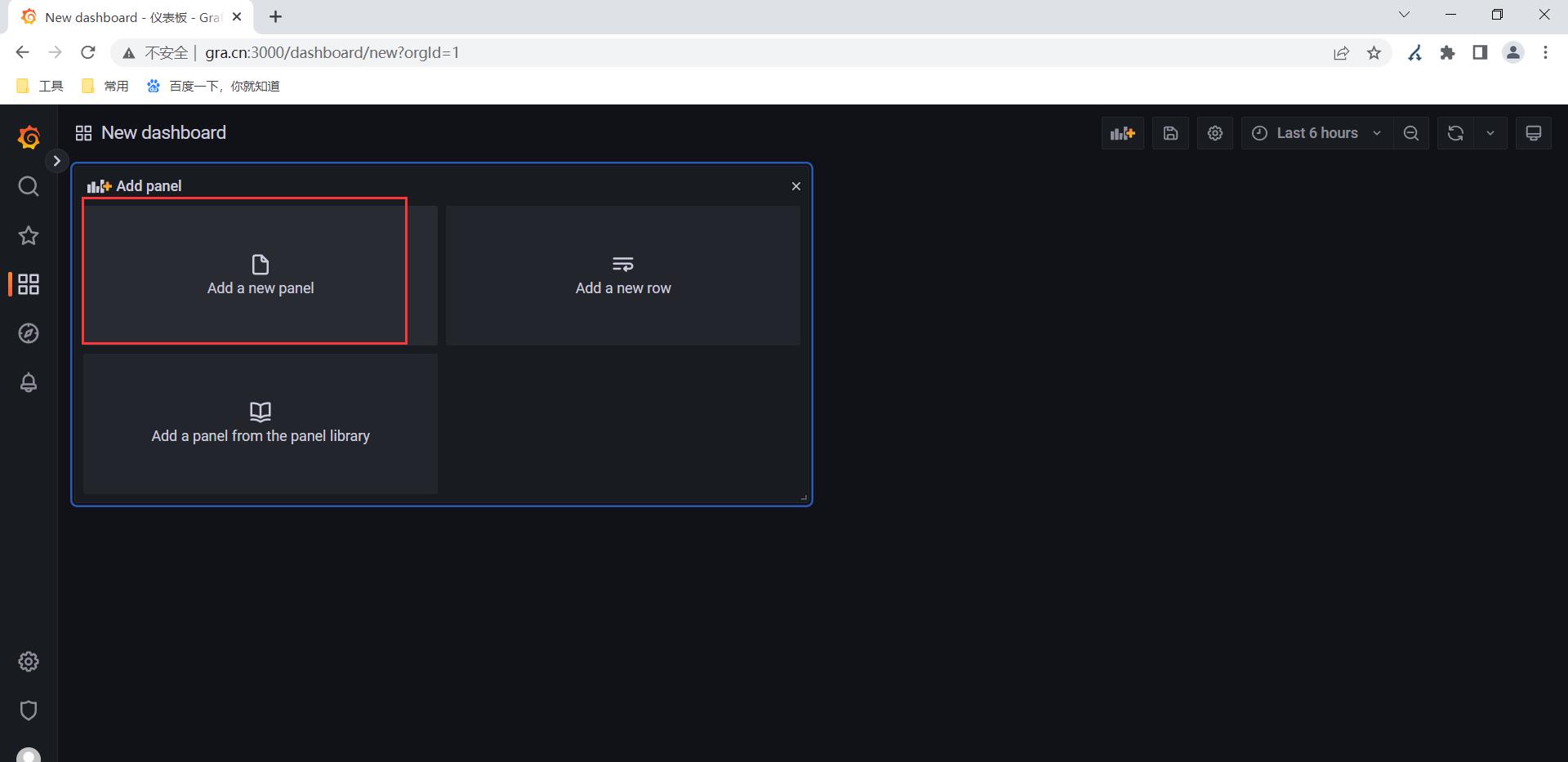

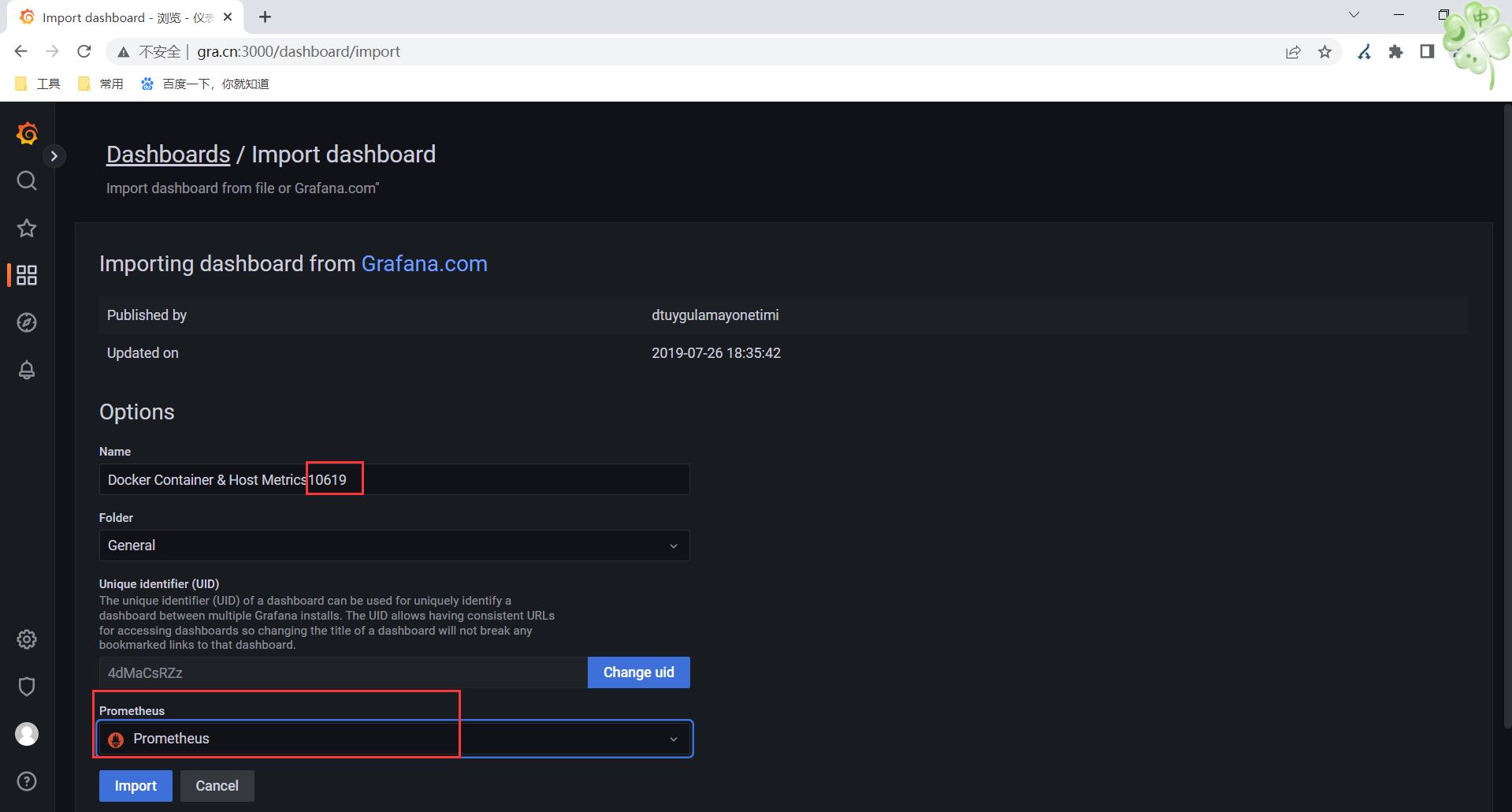

VII. Connecting Grafana

1. Add the data source

2. Import the dashboards bundled with the data source

3. Fix dashboards that show no data

Notes

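Fixing a dashboard with no data: when editing its queries, focus on the job and instance labels, for example:

node_cpu_seconds_total{instance="gra.cn:9100",job="basic_info_node_exporter_discovery"}

Example: counting the CPU cores with

count(count(node_cpu_seconds_total{instance="gra.cn:9100",job="basic_info_node_exporter_discovery"}) by (cpu))

Step 1: select the CPU series of the given job and instance. With several CPUs, the series carry cpu="0", cpu="1", cpu="2", and so on.

Step 2: group by the cpu label and count, much like extracting the cpu column with awk and running sort | uniq -c: count(xxx) by (cpu), where xxx is the step 1 expression. The result looks like cpu0 8, cpu1 8, cpu2 8, cpu3 8, ... (8 series per CPU, one per mode).

Step 3: count the groups again, count(count(xxx) by (cpu)), which gives the number of CPUs.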
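The three steps can be replayed on plain data to see why the nested count returns the core count. The sketch below assumes a hypothetical host with 2 CPUs. It creates one series per (cpu, mode) pair, as node_cpu_seconds_total does on Linux. The inner count per `cpu` corresponds to `sort | uniq -c`, and counting the resulting groups gives the cores.

```python
def count_cpus(series):
    """Replay count(count(node_cpu_seconds_total{...}) by (cpu)) on (labels, value) pairs."""
    per_cpu = {}
    for labels, _ in series:  # step 2: count series per cpu label value
        per_cpu[labels["cpu"]] = per_cpu.get(labels["cpu"], 0) + 1
    return per_cpu, len(per_cpu)  # step 3: count the groups


MODES = ["idle", "iowait", "irq", "nice", "softirq", "steal", "system", "user"]
# Step 1 (hypothetical data): a 2-CPU host, one series per (cpu, mode) pair
series = [({"cpu": str(c), "mode": m}, 0.0) for c in range(2) for m in MODES]
per_cpu, cores = count_cpus(series)
```

Here `per_cpu` is `{"0": 8, "1": 8}`, matching the "cpu0 8, cpu1 8" shape described in step 2, and `cores` is 2.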

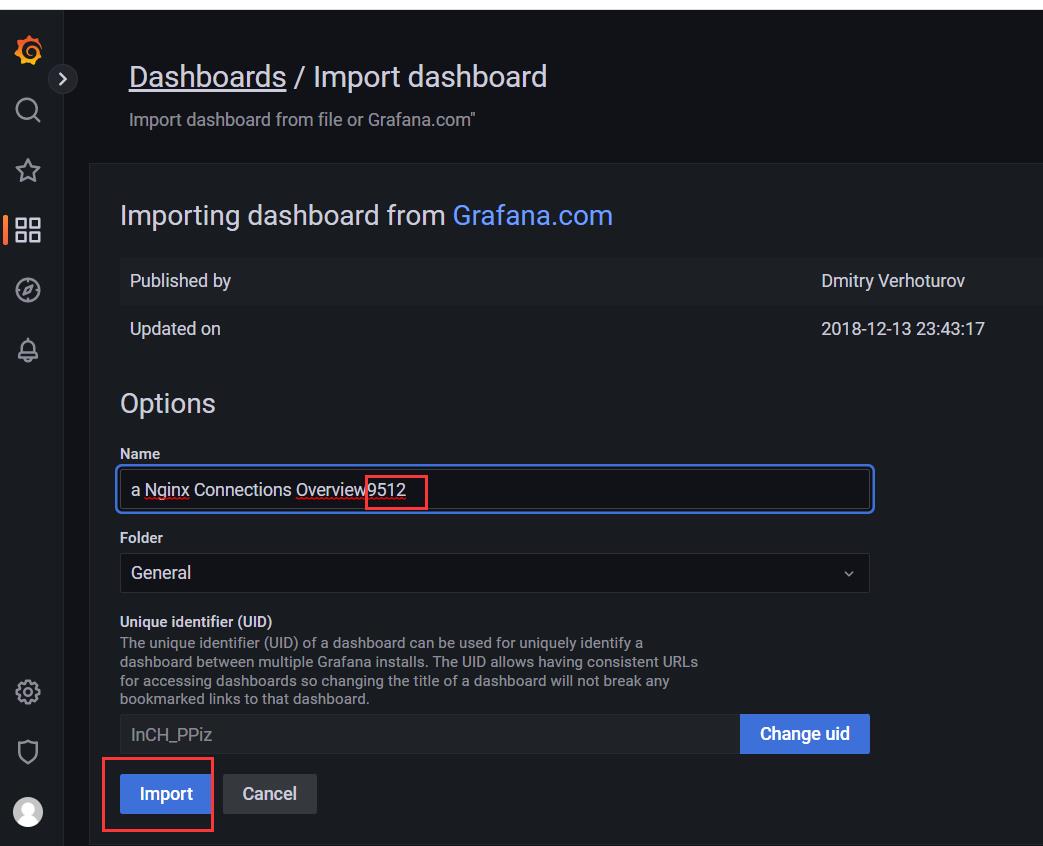

4. Import third-party dashboards

https://github.com/nginxinc/nginx-prometheus-exporter

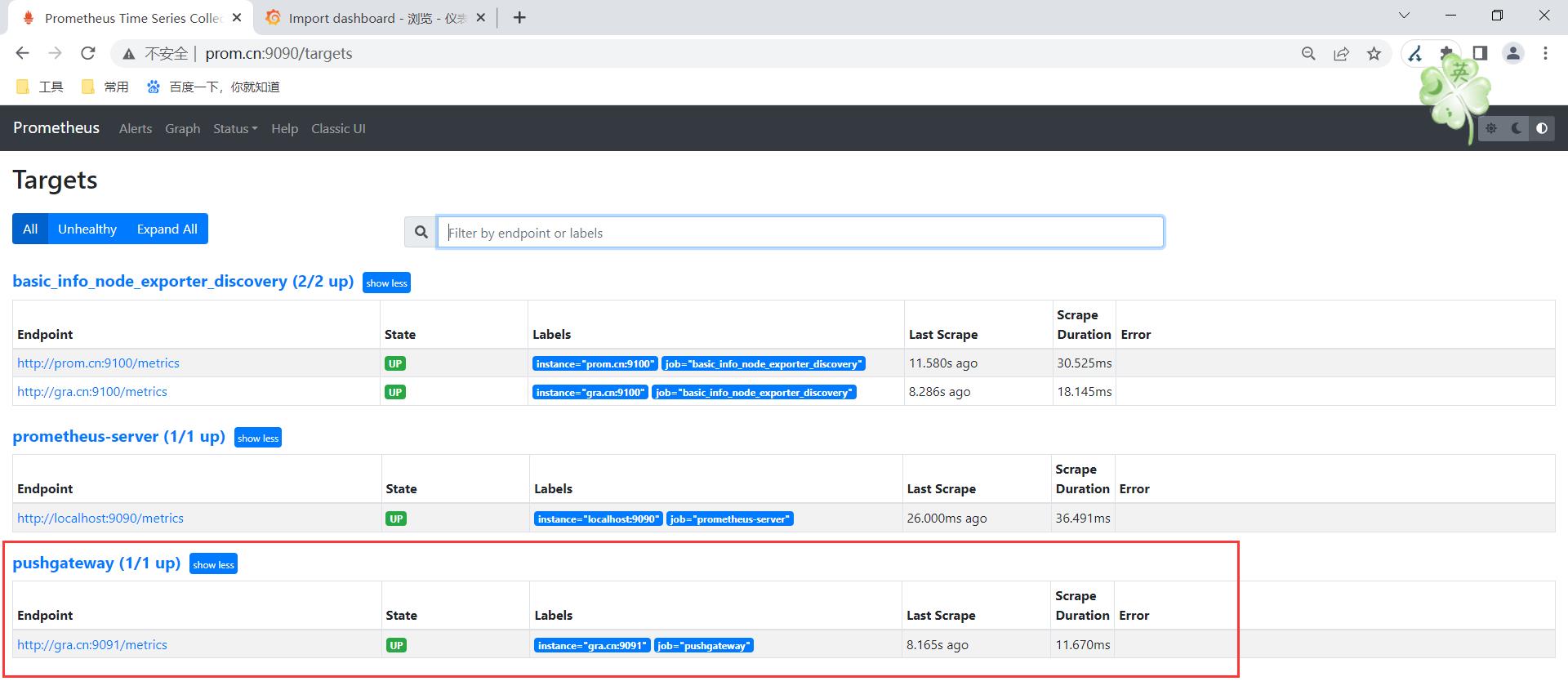

VIII. Pushgateway

Use cases: custom metrics and distributed monitoring (similar to a Zabbix proxy).

1. Deploy Pushgateway

| Role | Domain | IP |
|---|---|---|
| pushgateway | gra.cn | 10.0.0.63/172.16.1.63 |

[root@grafana-server ~]# ll

total 136904

-rw-------. 1 root root 1340 Jan 9 09:09 anaconda-ks.cfg

-rw-r--r-- 1 root root 88935132 Mar 21 08:16 grafana-9.3.6-1.x86_64.rpm

-rw-r--r-- 1 root root 33016641 Mar 21 08:16 grafana-9.3.6-alexanderzobnin-zabbix-app-v4.2.10.tar.gz

-rw-r--r-- 1 root root 9033415 Mar 21 08:16 node_exporter-1.3.1.linux-amd64.tar.gz

-rw-r--r-- 1 root root 9193207 Mar 21 08:16 pushgateway-1.4.1.linux-amd64.tar.gz

[root@grafana-server ~]# tar xf pushgateway-1.4.1.linux-amd64.tar.gz -C /app/tools

[root@grafana-server ~]# ln -s /app/tools/pushgateway-1.4.1.linux-amd64/ /app/tools/pushgateway

[root@grafana-server ~]# ln -s /app/tools/pushgateway/pushgateway /bin/

[root@grafana-server ~]# pushgateway &>>/tmp/pushgw.log &

[1] 4128

[root@grafana-server ~]# ss -lnutp|grep push

tcp LISTEN 0 128 [::]:9091 [::]:* users:(("pushgateway",pid=4128,fd=3))

#Create a systemd unit

[root@grafana-server ~]# cat /usr/lib/systemd/system/pushgateway.service

[Unit]

Description=pushgateway

After=network.target

[Service]

Type=simple

ExecStart=/bin/pushgateway

KillMode=process

[Install]

WantedBy=multi-user.target

[root@grafana-server ~]# systemctl daemon-reload

2. Prometheus server: edit the configuration file (static)

[root@prometheus-server ~]# cat /app/tools/prometheus/prometheus.yml
global:
  scrape_interval: 15s
  evaluation_interval: 15s
alerting:
  alertmanagers:
    - static_configs:
        - targets:
rule_files:
scrape_configs:
  - job_name: "prometheus-server"
    static_configs:
      - targets: ["localhost:9090"]
  - job_name: "basic_info_node_exporter_discovery"
    file_sd_configs:
      - files:
          - /app/tools/prometheus/discovery_node_exporter.json
        refresh_interval: 5s
  # Add the following section
  - job_name: "pushgateway"
    static_configs:
      - targets:
          - "gra.cn:9091"

[root@prometheus-server ~]# systemctl restart prometheus.service

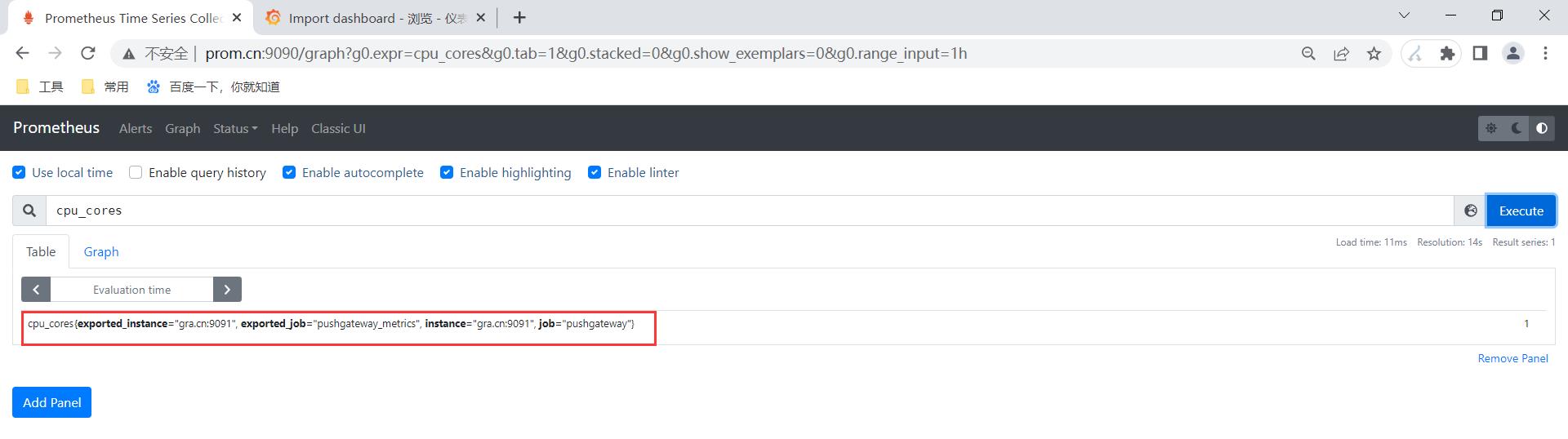

3. Write a script that pushes to the Pushgateway (custom metric: total number of CPU cores)

[root@grafana-server ~]# cat /server/scripts/diy_cpu_cores.sh
#!/bin/bash
#author: wh
#desc: diy prometheus pushgw
#1.vars
pushgw="http://gra.cn:9091"
job="pushgateway_metrics"
ins="gra.cn:9091"
cores=`lscpu | awk '/^CPU\(s\):/{print $2}'`
#2. Push to the Pushgateway
echo "cpu_cores $cores" | \
curl --data-binary @- $pushgw/metrics/job/$job/instance/$ins

[root@grafana-server ~]# sh /server/scripts/diy_cpu_cores.sh
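The same push can be done from Python, for hosts where a script is easier to maintain than curl. The sketch below reuses the URL layout and values from the shell script above (`/metrics/job/<job>/instance/<instance>`). It sends one sample in the text format with an HTTP POST, as `curl --data-binary` does. A POST replaces only the metrics with the same name in that job/instance group. The helper names are illustrative. The scrape job above also does not set `honor_labels: true`. Without that option, Prometheus renames the pushed `job` and `instance` labels to `exported_job` and `exported_instance`, so adding it to the pushgateway job is usually recommended.

```python
import urllib.request

# Values copied from diy_cpu_cores.sh; adjust for your environment.
PUSHGW = "http://gra.cn:9091"
JOB = "pushgateway_metrics"
INSTANCE = "gra.cn:9091"


def build_push(metric, value, job=JOB, instance=INSTANCE):
    """Return (url, body) for pushing one sample to the Pushgateway."""
    url = f"{PUSHGW}/metrics/job/{job}/instance/{instance}"
    body = f"{metric} {value}\n".encode()  # the text format needs a trailing newline
    return url, body


def push(metric, value):
    """POST one sample; needs network access to the Pushgateway."""
    url, body = build_push(metric, value)
    req = urllib.request.Request(url, data=body, method="POST")
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.status
```

For example, `push("cpu_cores", os.cpu_count())` mirrors the shell script. `os.cpu_count()` returns the logical CPU count, the same number as the `CPU(s):` line of `lscpu`.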

4. Run the script from cron

[root@grafana-server ~]# crontab -l

#1. Time synchronization

*/2 * * * * /sbin/ntpdate ntp1.aliyun.com &>/dev/null

#2. Prometheus Pushgateway (runs at minute 0 of every hour)

00 * * * * sh /server/scripts/diy_cpu_cores.sh &>/dev/null

IX. Monitoring the whole infrastructure with Prometheus

1. Environment

| Monitored item | Exporter | Hosts |
|---|---|---|
| Basic system information | node_exporter | All |
| Load balancers, web | nginx_exporter | Load balancers, web servers |
| Web middleware: PHP, Java | jmx_exporter | Web servers |
| Database | mysqld_exporter | Database servers |
| Redis | redis_exporter | Cache servers |
| Storage | xxx_exporter | NFS (custom), object storage (OSS), Ceph, MinIO |

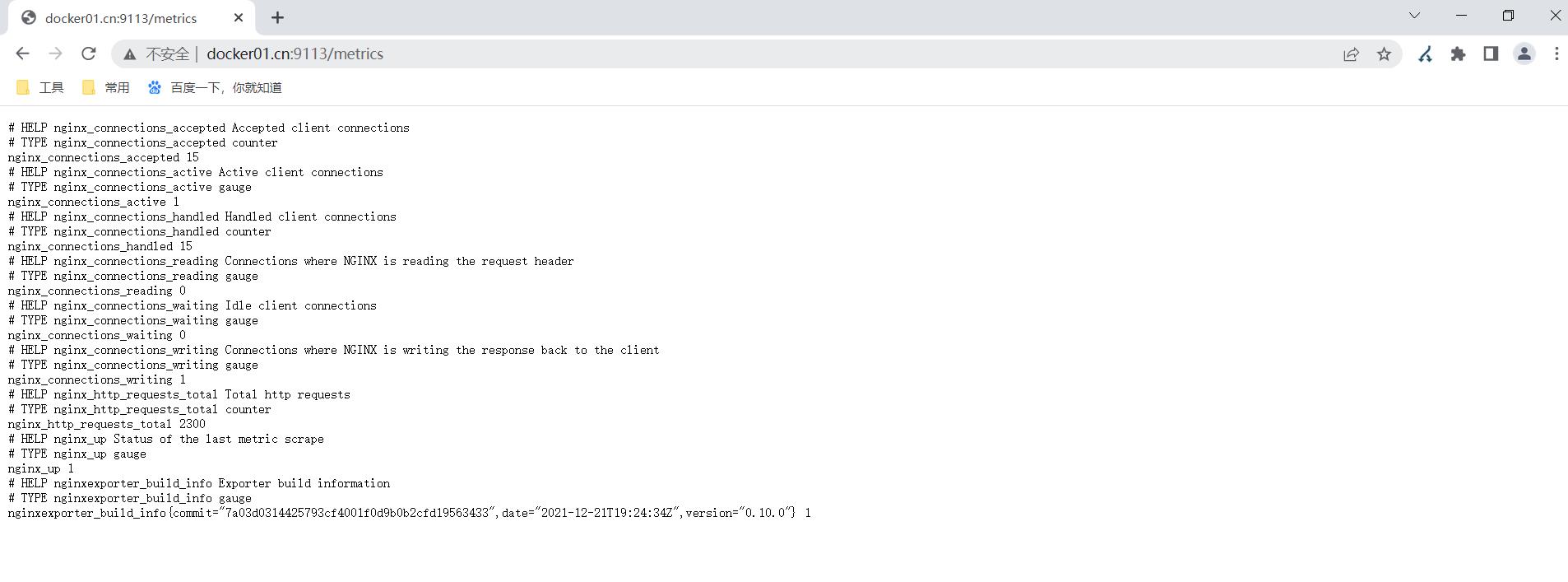

2. Set up nginx_exporter

#Serve the nginx stub_status page on a dedicated port and URI

[root@docker01 ~]# cat /app/project/nginx/status.conf
server {
    listen 8000;
    location / {
        stub_status;
    }
}

#Start the nginx container

[root@docker01 ~]# docker run -d --name "nginx" \
-v /app/project/nginx/status.conf:/etc/nginx/conf.d/status.conf \
--restart=always \
-p 80:80 \
-p 8000:8000 \
nginx:1.20.2-alpine

#Start the nginx_exporter container

[root@docker01 ~]# docker run -d -p 9113:9113 --name "nginx_exporter_8000" nginx/nginx-prometheus-exporter:0.10.0 -nginx.scrape-uri "http://172.16.1.81:8000/"

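The container is started with the URL to scrape (address, port and URI). The default is "http://127.0.0.1:8080/stub_status". Inside the container, 127.0.0.1 is the container's own loopback address, not the host's IP, so the host address has to be given explicitly.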

http://docker01.cn:9113/metrics
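stub_status is a small plain-text page. nginx-prometheus-exporter turns it into metrics such as nginx_connections_active and nginx_http_requests_total. The sketch below only illustrates what that conversion starts from: it parses the stub_status text into numbers. It is not the exporter's code. The sample numbers are made up.

```python
import re

STUB_RE = re.compile(
    r"Active connections:\s*(?P<active>\d+)\s*"
    r"server accepts handled requests\s*"
    r"(?P<accepts>\d+)\s+(?P<handled>\d+)\s+(?P<requests>\d+)\s*"
    r"Reading:\s*(?P<reading>\d+)\s+Writing:\s*(?P<writing>\d+)\s+Waiting:\s*(?P<waiting>\d+)"
)


def parse_stub_status(text):
    """Turn nginx stub_status output into a dict of integers."""
    m = STUB_RE.search(text)
    if m is None:
        raise ValueError("not a stub_status page")
    return {k: int(v) for k, v in m.groupdict().items()}
```

"accepts", "handled" and "requests" only ever grow, so they become counters (requests maps to nginx_http_requests_total). The active, reading, writing and waiting values go up and down and become gauges.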

#Update the Prometheus server configuration

[root@prometheus-server ~]# cat /app/tools/prometheus/prometheus.yml
global:
  scrape_interval: 15s
  evaluation_interval: 15s
alerting:
  alertmanagers:
    - static_configs:
        - targets:
rule_files:
scrape_configs:
  - job_name: "prometheus-server"
    static_configs:
      - targets: ["localhost:9090"]
  - job_name: "basic_info_node_exporter_discovery"
    file_sd_configs:
      - files:
          - /app/tools/prometheus/discovery_node_exporter.json
        refresh_interval: 5s
  - job_name: "pushgateway"
    static_configs:
      - targets:
          - "gra.cn:9091"
  # Add the following section
  - job_name: "nginx_exporter"
    static_configs:
      - targets:
          - "docker01.cn:9113"

[root@prometheus-server ~]# systemctl restart prometheus.service

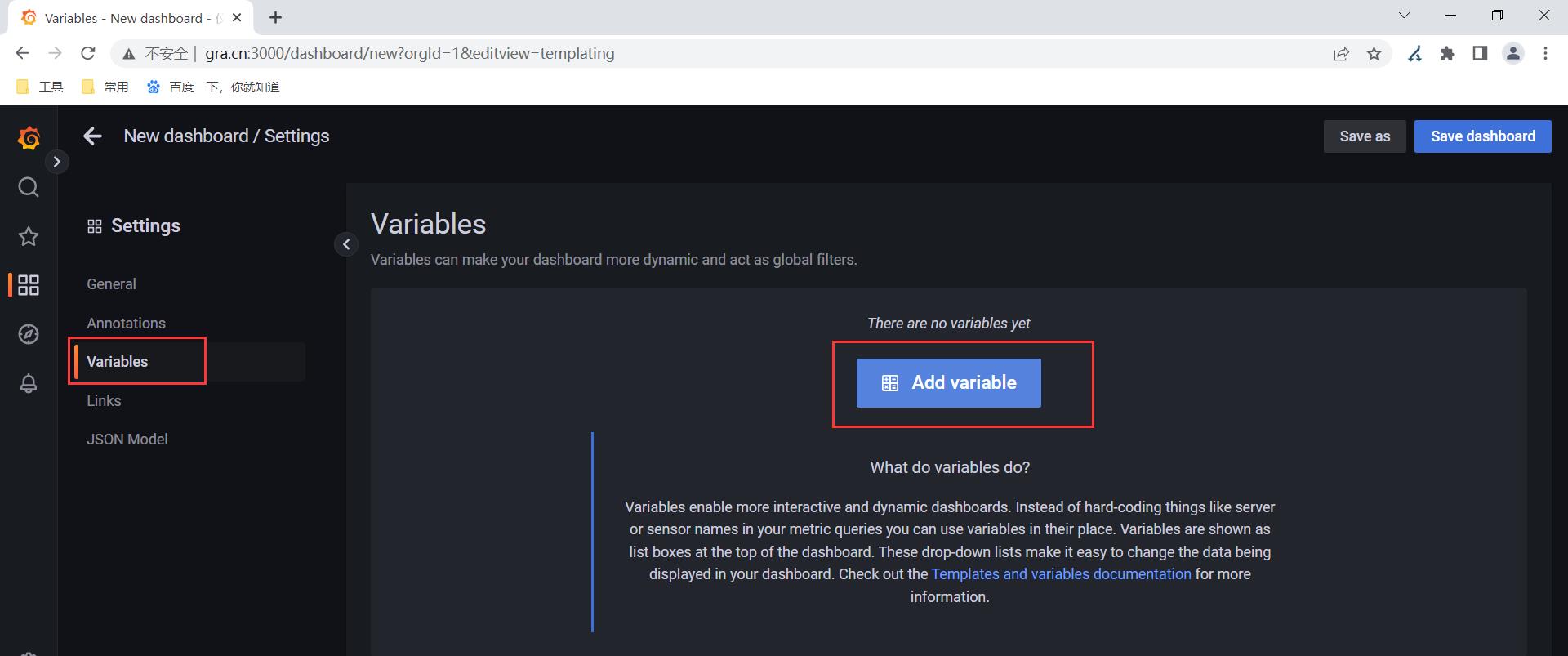

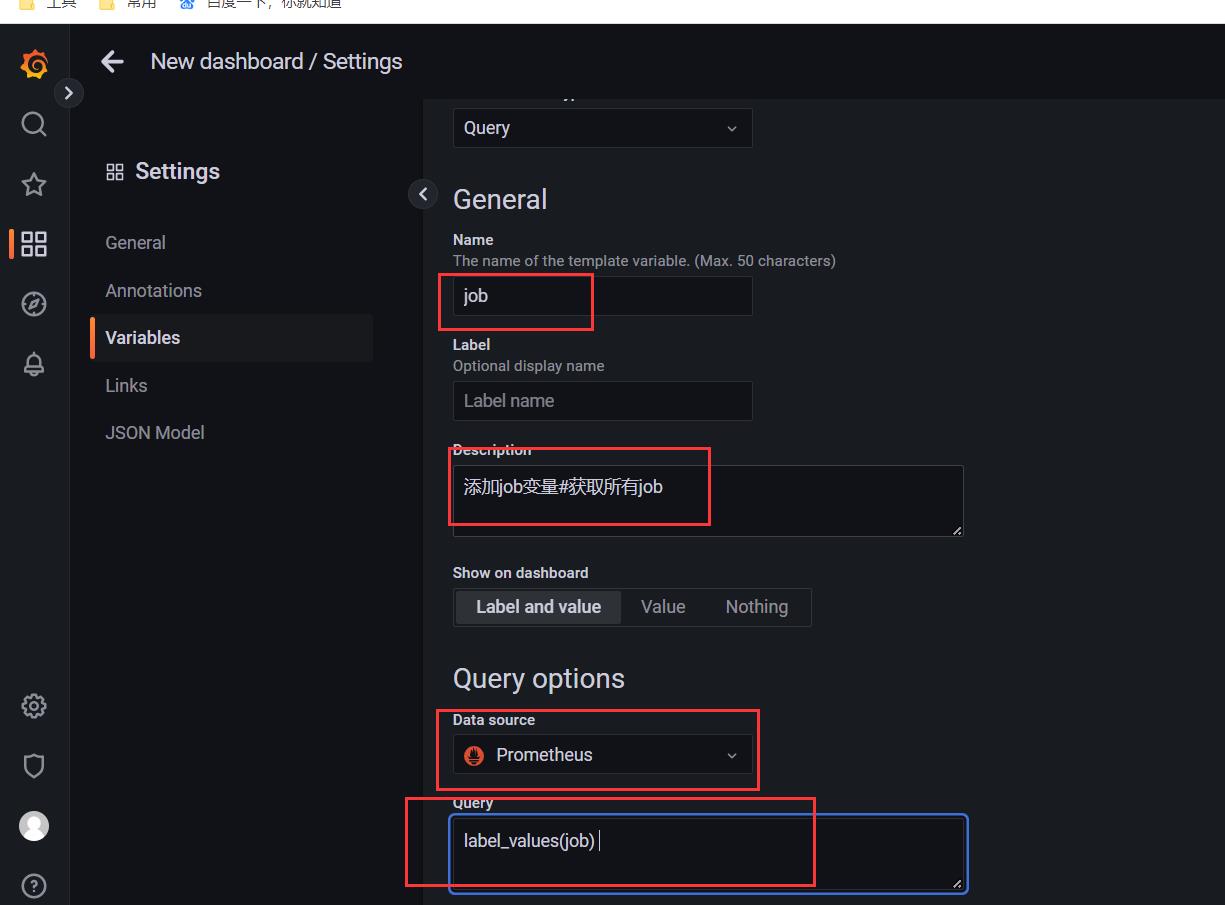

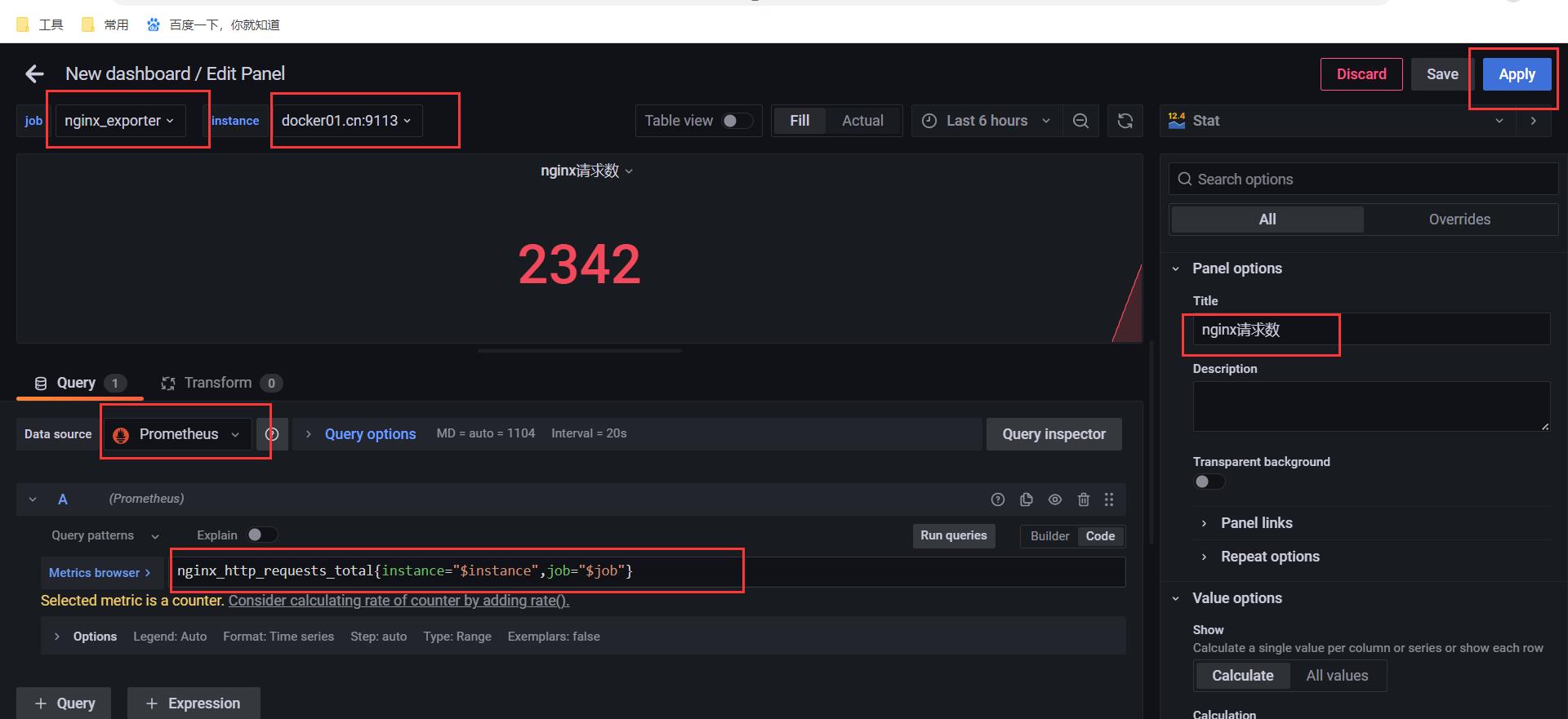

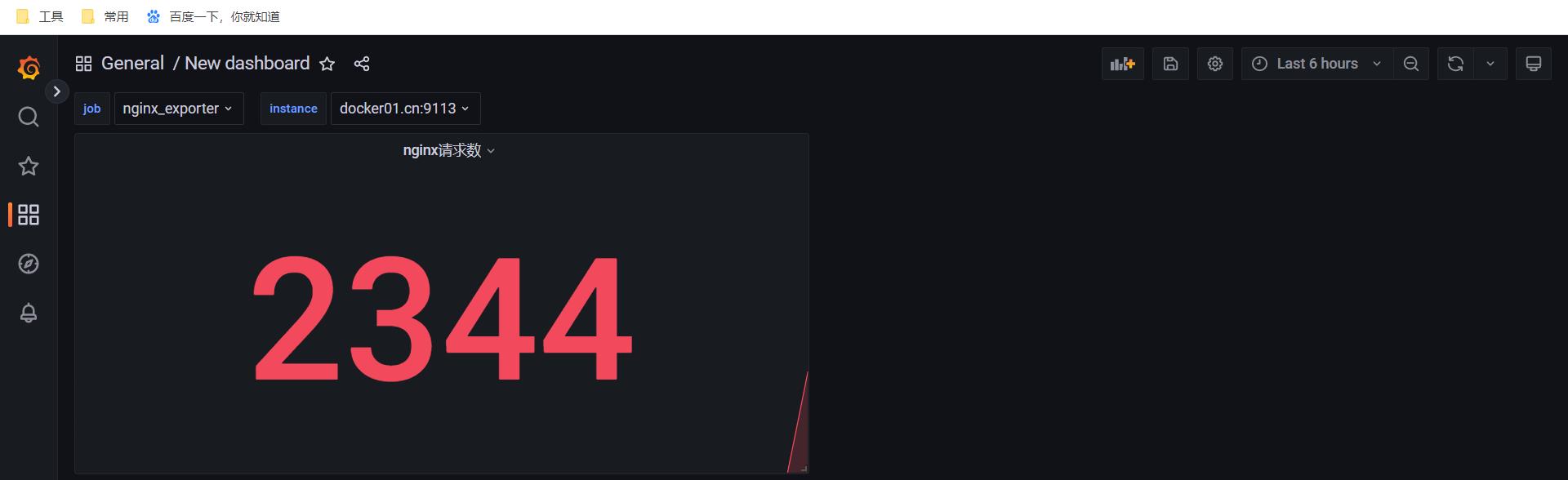

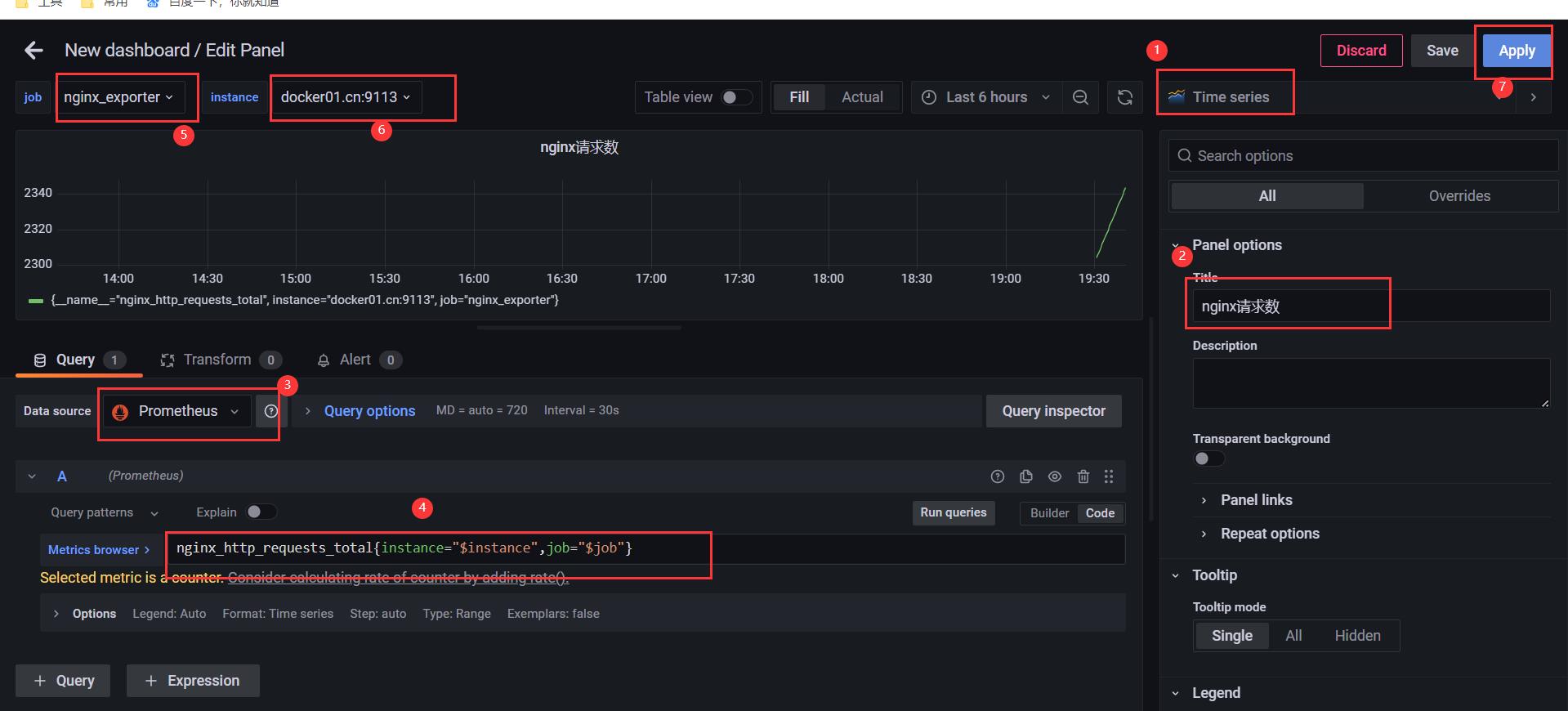

3. Custom dashboard

Add dashboard variables:

label_values(job)

label_values(up{job="$job"},instance)

nginx_http_requests_total{instance="$instance",job="$job"}

4. Set up the database exporter (mysqld_exporter)

#Create a database user for the exporter and grant it privileges

[root@zabbix-server ~]# mysql
Welcome to the MariaDB monitor. Commands end with ; or \g.
Your MariaDB connection id is 11642
Server version: 10.5.16-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE USER 'exporter'@'172.%' IDENTIFIED BY 'exporter123';
Query OK, 0 rows affected (0.818 sec)

MariaDB [(none)]> GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO 'exporter'@'172.%';
Query OK, 0 rows affected (0.001 sec)

[root@docker01 ~]# cd /app/project/db/

[root@docker01 /app/project/db]# ll

total 7288

-rw-r--r-- 1 root root 442 Mar 23 19:44 Dockerfile

-rw-r--r-- 1 root root 48 Mar 23 11:56 my.cnf

-rw-r--r-- 1 root root 7450991 Mar 23 12:15 mysqld_exporter-0.14.0.linux-amd64.tar.gz

[root@docker01 /app/project/db]# cat Dockerfile

FROM alpine:latest

LABEL author=wh

ADD mysqld_exporter-0.14.0.linux-amd64.tar.gz /app/tools/

COPY my.cnf /app/tools/mysqld_exporter-0.14.0.linux-amd64/

ENV DATA_SOURCE_NAME='exporter:exporter123@(172.16.1.62:3306)/'

RUN ln -s /app/tools/mysqld_exporter-0.14.0.linux-amd64 /app/tools/mysqld_exporter

WORKDIR /app/tools/mysqld_exporter-0.14.0.linux-amd64/

EXPOSE 9104

CMD ["./mysqld_exporter","--config.my-cnf=./my.cnf"]

[root@docker01 /app/project/db]# docker build -t mysql:mysqld_exporter .

[root@docker01 /app/project/db]# docker images|grep exporter

mysql mysqld_exporter bac639507018 32 seconds ago 22.2MB

[root@docker01 /app/project/db]# docker run -d -p 9104:9104 mysql:mysqld_exporter

23b7509f953d39316054097030f9491c7f623b2f7ba750a4e9d29da95bf68e32

[root@docker01 /app/project/db]# docker ps |grep mysql

23b7509f953d mysql:mysqld_exporter "./mysqld_exporter -…" 18 seconds ago Up 12 seconds 0.0.0.0:9104->9104/tcp, :::9104->9104/tcp amazing_hodgkin

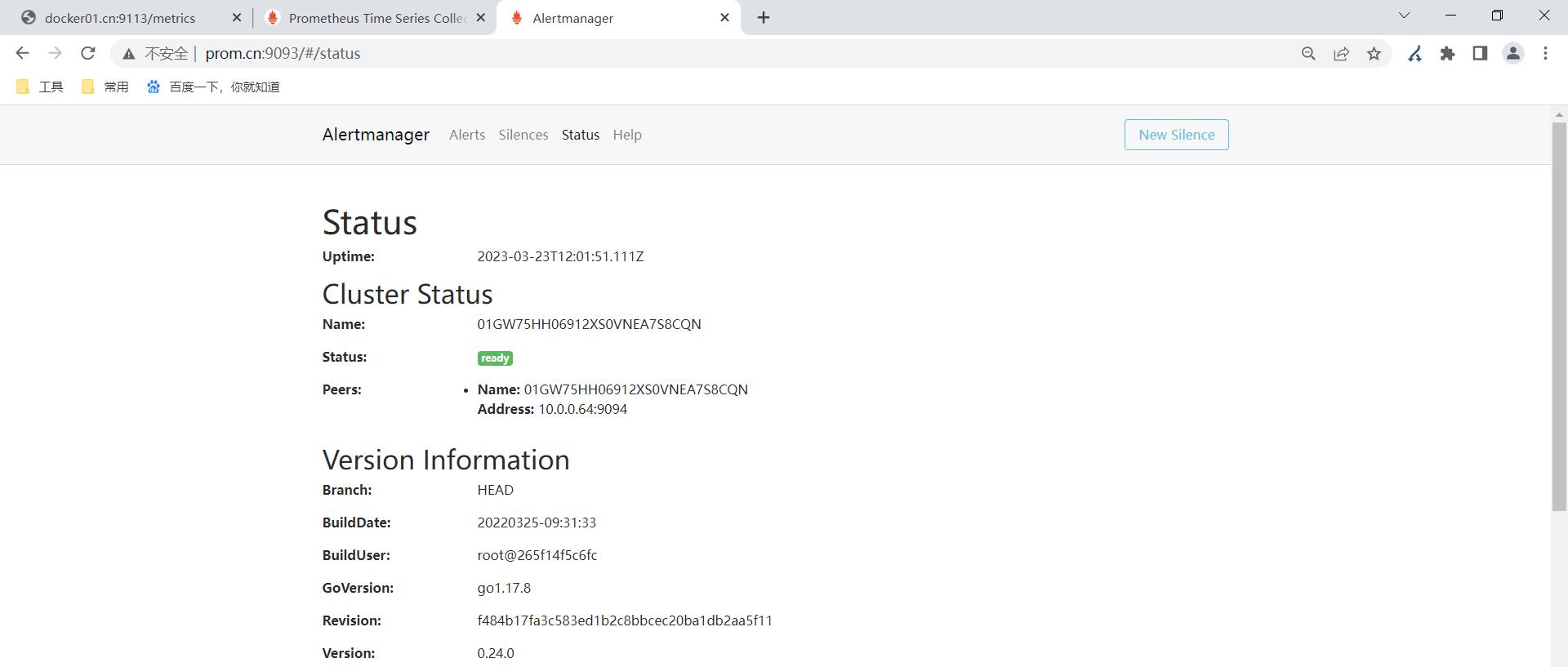

X. Alerting with Alertmanager

1. Deploy Alertmanager

wget -P /server/tools/ https://github.com/prometheus/alertmaad/v0.24.0/alertmanager-0.24.0.linux-amd64.tar.gz

[root@prometheus-server ~]# ll

total 108148

-rw-r--r-- 1 root root 25880024 Mar 21 08:16 alertmanager-0.24.0.linux-amd64.tar.gz

[root@prometheus-server ~]# tar xf alertmanager-0.24.0.linux-amd64.tar.gz -C /app/tools

[root@prometheus-server ~]# ln -s /app/tools/alertmanager-0.24.0.linux-amd64/ /app/tools/alertmanager

[root@prometheus-server ~]# ln -s /app/tools/alertmanager/alertmanager /bin/

[root@prometheus-server ~]# alertmanager --version

alertmanager, version 0.24.0 (branch: HEAD, revision: f484b17fa3c583ed1b2c8bbcec20ba1db2aa5f11)

build user: root@265f14f5c6fc

build date: 20220325-09:31:33

go version: go1.17.8

platform: linux/amd64

[root@prometheus-server ~]# alertmanager --config.file=/app/tools/alertmanager/alertmanager.yml &

#Create a systemd unit

[root@prometheus-server ~]# cat /usr/lib/systemd/system/alertmanager.service

[Unit]

Description=Prometheus alertmanager

After=network.target

[Service]

Type=simple

ExecStart=/bin/alertmanager --config.file=/app/tools/alertmanager/alertmanager.yml

KillMode=process

[Install]

WantedBy=multi-user.target

[root@prometheus-server ~]# systemctl daemon-reload

http://prom.cn:9093/

#Configure the Prometheus server

[root@prometheus-server ~]# cat /app/tools/prometheus/prometheus.yml
global:
  scrape_interval: 15s
  evaluation_interval: 15s
alerting:
  alertmanagers:
    - static_configs:
        - targets:
            - "prom.cn:9093"  # change this to your Alertmanager address
rule_files:
  - "/app/tools/prometheus/alerts_check.node.yml"  # file that holds the alerting rules
scrape_configs:
  - job_name: "prometheus-server"
    static_configs:
      - targets: ["localhost:9090"]
  - job_name: "basic_info_node_exporter_discovery"
    file_sd_configs:
      - files:
          - /app/tools/prometheus/discovery_node_exporter.json
        refresh_interval: 5s
  - job_name: "pushgateway"
    static_configs:
      - targets:
          - "gra.cn:9091"
  - job_name: "nginx_exporter"
    static_configs:
      - targets:
          - "docker01.cn:9113"

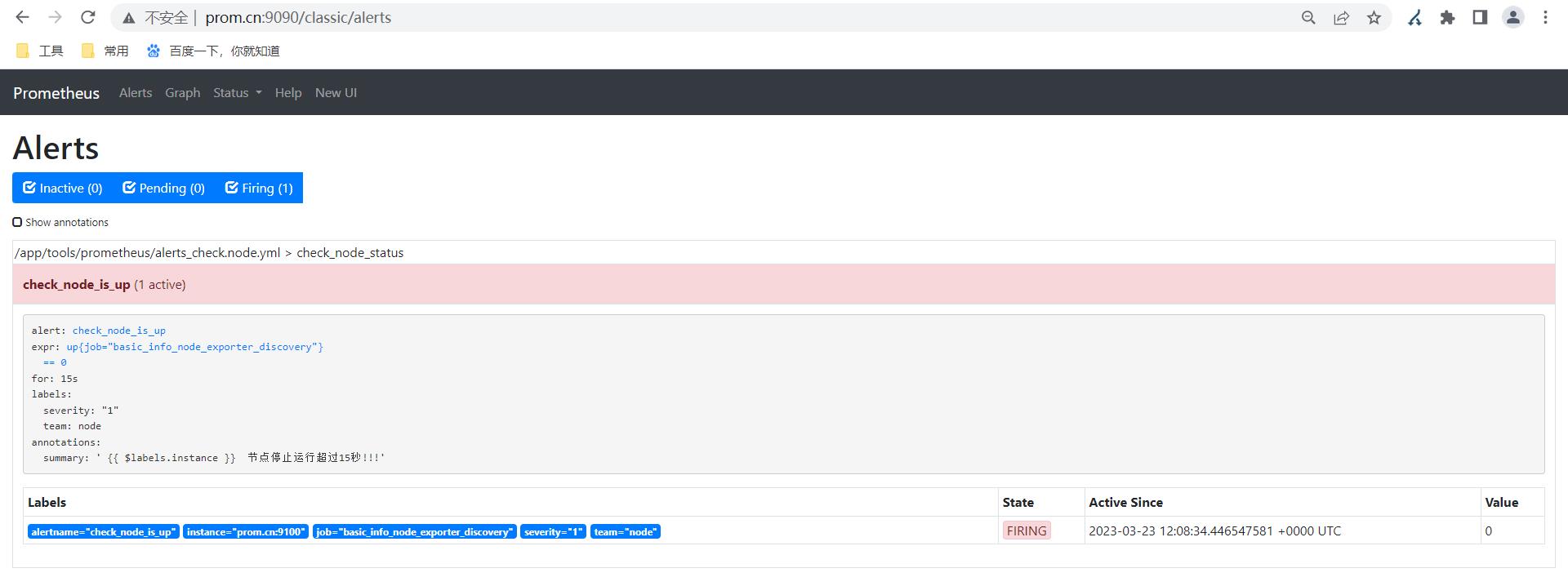

#Alerting rules

[root@prometheus-server ~]# cat /app/tools/prometheus/alerts_check.node.yml
groups:
  - name: check_node_status
    rules:
      - alert: check_node_is_up
        expr: up{job="basic_info_node_exporter_discovery"} == 0
        for: 15s
        labels:
          severity: 1
          team: node
        annotations:
          summary: "{{ $labels.instance }} has been down for more than 15 seconds!!!"

[root@prometheus-server ~]# systemctl restart prometheus
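The `for: 15s` clause is why a stopped node_exporter does not alert immediately. The first evaluation that finds `up == 0` puts the alert in the pending state. It becomes firing, and is sent to Alertmanager, only once the condition has held for at least 15 seconds. Any evaluation where the condition is false resets it. The sketch below replays that state machine for a single alert under these simplifying assumptions. It ignores, for example, how long Prometheus keeps resolved alerts.

```python
def alert_states(evaluations, for_seconds):
    """Replay (timestamp, condition_true) evaluations and return the alert state after each."""
    states, active_since = [], None
    for ts, condition in evaluations:
        if not condition:
            active_since = None  # condition cleared: back to inactive
            states.append("inactive")
            continue
        if active_since is None:
            active_since = ts    # first failing evaluation starts the clock
        states.append("firing" if ts - active_since >= for_seconds else "pending")
    return states
```

With `evaluation_interval: 15s` and `for: 15s`, an alert that becomes true at t=15 is pending at t=15 and firing at t=30.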

#Simulate a failure

[root@prometheus-server ~]# systemctl stop node-exporter.service

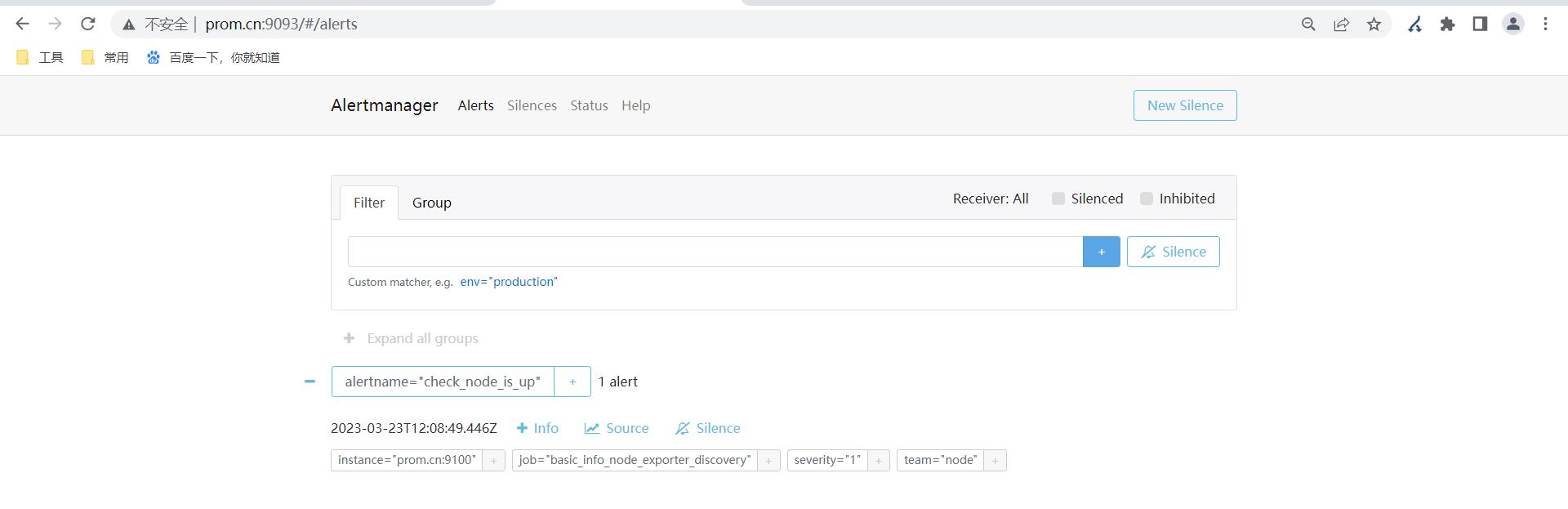

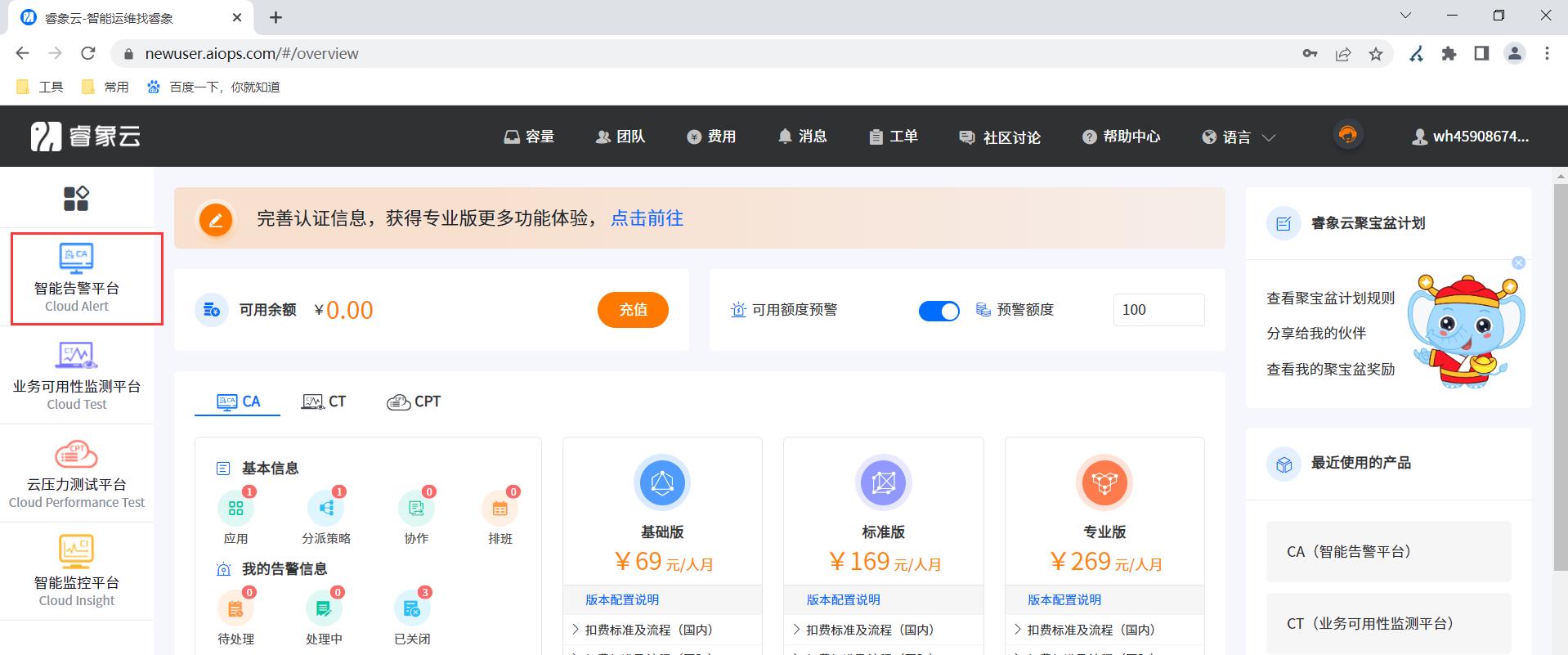

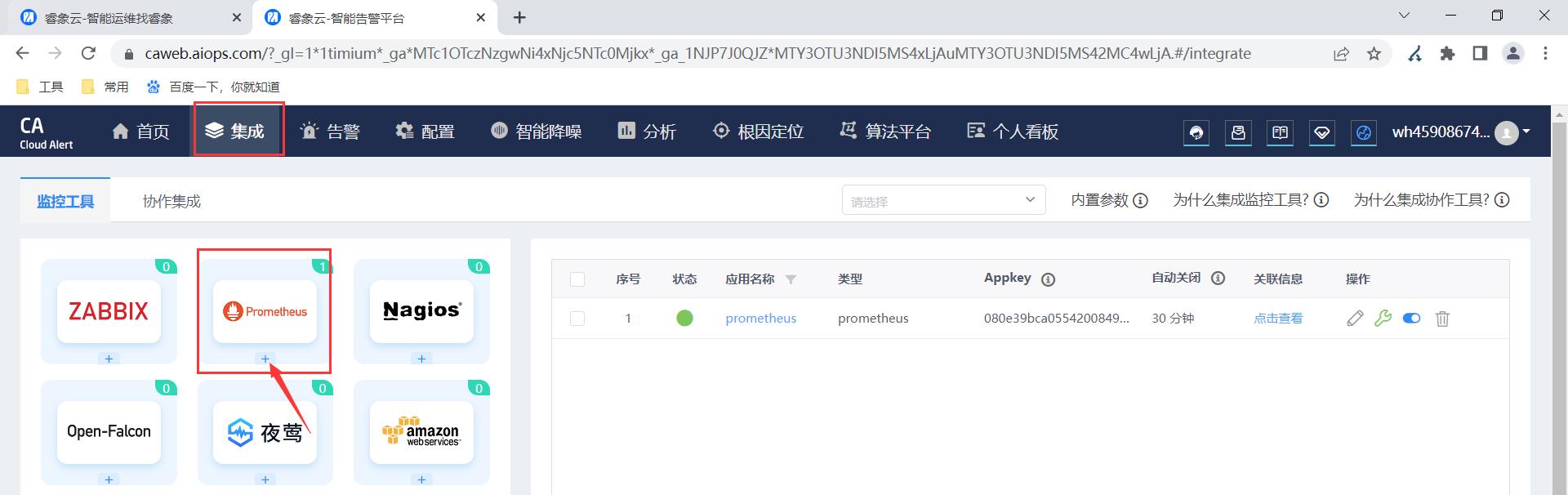

2. Sending alerts through a third-party platform (睿象云)

[root@prometheus-server ~]# cat /app/tools/alertmanager/alertmanager.yml
route:
  group_by: ['alertname']
  group_wait: 30s
  group_interval: 5m
  repeat_interval: 1h
  receiver: 'web.hook'
receivers:
  - name: 'web.hook'
    webhook_configs:
      # Change this URL to the one provided by the third-party platform
      - url: 'http://api.aiops.com/alert/api/event/prometheus/Appkey'
inhibit_rules:
  - source_match:
      severity: 'critical'
    target_match:
      severity: 'warning'
    equal: ['alertname', 'dev', 'instance']

睿象云 configuration

3. Configure email alerts

Alertmanager configuration explained

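global: global settings; here, the sender (SMTP) details.

resolve_timeout: 5m declares an alert resolved if it has not been updated within this time. It only applies to alerts that carry no end time, which Prometheus alerts always do. It is not a DNS timeout.

smtp_from: sender address

smtp_smarthost: SMTP server (host:port)

smtp_hello: host name sent in the SMTP HELO/EHLO greeting, e.g. 163.com or qq.com

smtp_auth_username: mailbox user name

smtp_auth_password: the mail provider's SMTP authorization code (not the login password)

smtp_require_tls: false (whether STARTTLS is required)

route: notification timing and which receiver handles the alerts.

group_by: ['alertname'] alerts with the same alertname are grouped into one notification

group_wait: 30s how long to wait before sending the first notification for a new group

group_interval: 5m how long to wait before notifying about new alerts added to a group that has already been notified

repeat_interval: 1h how long to wait before re-sending a notification for alerts that are still firing; e.g. an alert sent at 11:00 is sent again at 12:00.

receiver: 'email' which receiver (notification method) handles the alerts.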
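The three timers interact in a way that is easy to misread. The simplified model below assumes that one alert group is flushed first after group_wait and then every group_interval. For an alert that keeps firing unchanged, a flush sends a notification only if repeat_interval has passed since the last one. The model is an assumption for illustration. Real Alertmanager timing also involves clock jitter, so a repeat may arrive one group_interval later than this model predicts.

```python
def notification_times(group_wait, group_interval, repeat_interval, horizon):
    """Simplified model: seconds at which an unchanged, still-firing group is notified."""
    sent, last = [], None
    t = group_wait  # first flush of a new group
    while t <= horizon:
        if last is None or t - last >= repeat_interval:
            sent.append(t)
            last = t
        t += group_interval  # later flushes happen every group_interval
    return sent
```

With the values above (30s, 5m, 1h), `notification_times(30, 300, 3600, 7300)` gives `[30, 3630, 7230]`. The first email goes out 30 s after the alert arrives, and repeats follow roughly once an hour.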

#cat alertmanager.yml

global:
  resolve_timeout: 5m
  smtp_from: 'xxxx@163.com'
  smtp_smarthost: 'smtp.163.com:465'
  smtp_hello: '163.com'
  smtp_auth_username: 'xxxx@163.com'
  smtp_auth_password: 'xxxxx'
  smtp_require_tls: false
route:
  group_by: ['alertname']
  group_wait: 30s
  group_interval: 5m
  repeat_interval: 1h
  receiver: 'email'
receivers:
  - name: "email"
    email_configs:
      - to: 'xxxxx@qq.com'
        send_resolved: true
inhibit_rules:
  - source_match:
      severity: 'critical'
    target_match:
      severity: 'warning'
    equal: ['alertname', 'dev', 'instance']

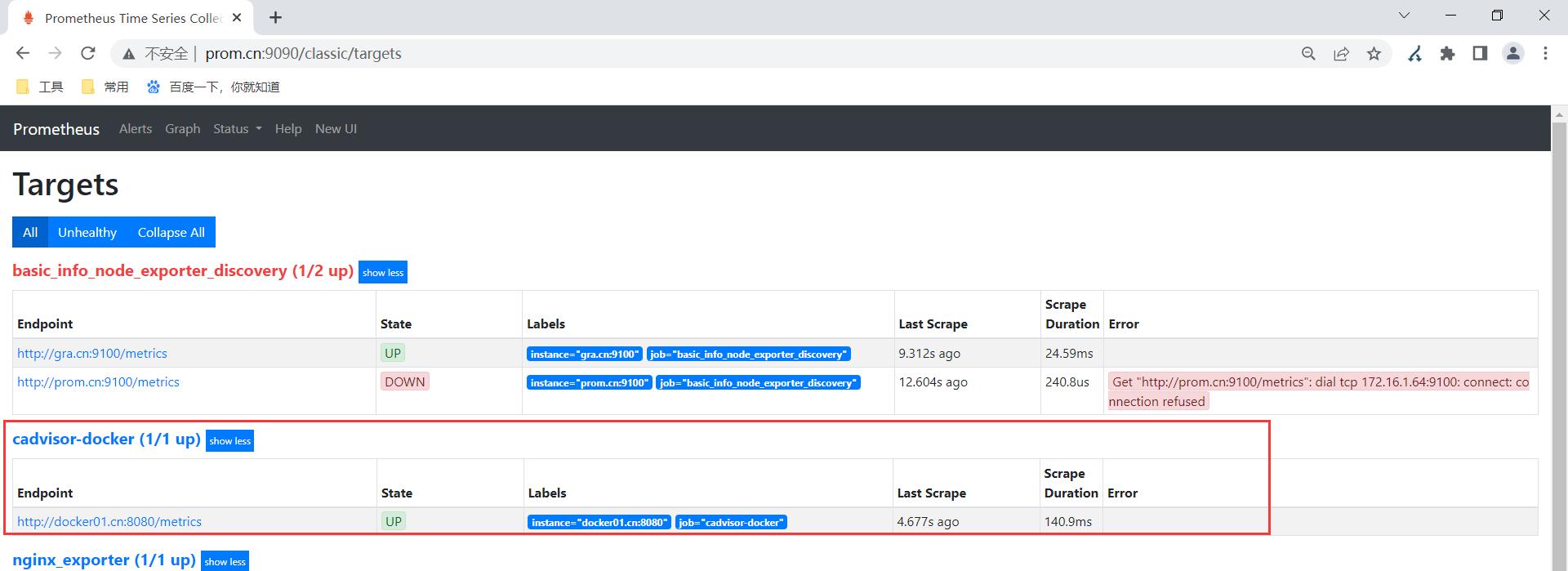

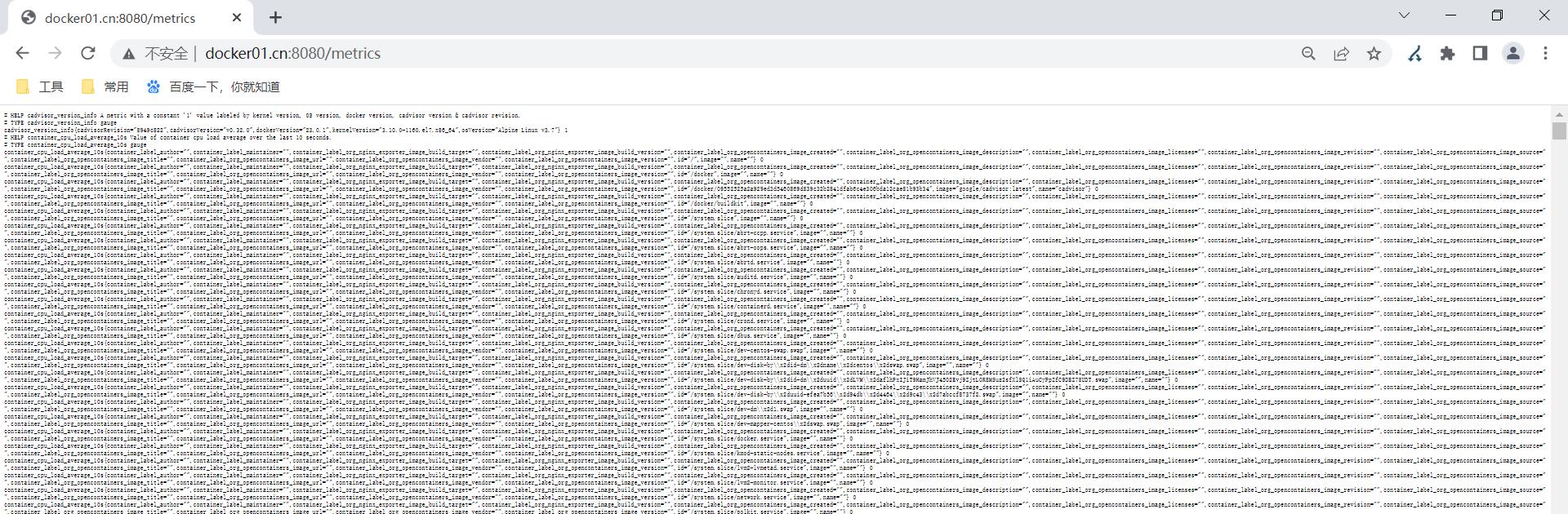

XI. Monitoring Docker

#Start cAdvisor

[root@docker01 /app/project/db]# docker run \
> --volume=/:/rootfs:ro \
> --volume=/var/run:/var/run:ro \
> --volume=/sys:/sys:ro \
> --volume=/var/lib/docker/:/var/lib/docker:ro \
> --volume=/dev/disk/:/dev/disk:ro \
> --publish=8080:8080 \
> --detach=true \
> --name=cadvisor \
> --privileged \
> --device=/dev/kmsg \
> google/cadvisor:latest

09552525a2a929ed3d5460869d839c32b2841dfab6c4e306bda12cae81b93b34

http://docker01.cn:8080/

#Configure the Prometheus server

[root@prometheus-server ~]# cat /app/tools/prometheus/prometheus.yml
global:
  scrape_interval: 15s
  evaluation_interval: 15s
alerting:
  alertmanagers:
    - static_configs:
        - targets:
            - "prom.cn:9093"
rule_files:
  - "/app/tools/prometheus/alerts_check.node.yml"
scrape_configs:
  - job_name: "prometheus-server"
    static_configs:
      - targets: ["localhost:9090"]
  - job_name: "basic_info_node_exporter_discovery"
    file_sd_configs:
      - files:
          - /app/tools/prometheus/discovery_node_exporter.json
        refresh_interval: 5s
  - job_name: "pushgateway"
    static_configs:
      - targets:
          - "gra.cn:9091"
  - job_name: "nginx_exporter"
    static_configs:
      - targets:
          - "docker01.cn:9113"
  # Add the following section
  - job_name: "cadvisor-docker"
    static_configs:
      - targets:
          - "docker01.cn:8080"

[root@prometheus-server ~]# systemctl restart prometheus

Connect to Grafana
