Python Web Crawler (News Collection Script)




=====================How the Crawler Works=====================

Use Python to fetch the news home page and extract the links in its top-stories list with a regular expression.
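A minimal sketch of the link-extraction step. It applies the script's `patArticle` pattern to a small hypothetical home-page fragment; the markup and the example.com URLs are assumptions shaped to fit the pattern, not the real Baijia page:

```python
import re

# Pattern from the script: captures the href inside <p class="title"><a href="...">
patArticle = r'<p\s*class="title"><a\s*href="(.+?)"'

# Hypothetical home-page fragment; the real page layout may differ
sample_home = '''
<p class="title"><a href="http://example.com/article/1">First story</a></p>
<p class="title"><a href="http://example.com/article/2">Second story</a></p>
<p class="summary">not a title link</p>
'''

# With one capturing group, findall returns the group text for each match,
# in document order; the slice keeps at most the first 10 links
links = re.findall(patArticle, sample_home)[0:10]
print(links)
```

The lazy `(.+?)` stops at the first closing quote, so only the URL is captured rather than the rest of the tag.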

Visit each link in turn, extract the article information from the page's HTML, and store it in an Article object.
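The per-article extraction can be sketched the same way. This version swaps the original class-attribute `Article` for a `dataclass` and adds a hypothetical `first_group` helper that raises a readable `ValueError` when a pattern finds nothing (the original `re.findall(...)[0]` raises a bare `IndexError` in that case). The sample page is made up to match the patterns:

```python
import re
from dataclasses import dataclass

# Field patterns copied from the script (as raw strings)
patTitle = r'<div\s*id="page">\s*<h1>(.+)</h1>'
patAuthor = r'<div\s*class="article-info">\s*<a.+?>(.+)</a>'
patDate = r'<span\s*class="time">(.+)</span>'
patAbout = r'<blockquote><i\s*class="i\siquote"></i>(.+)</blockquote>'


@dataclass
class Article:
    title: str = ''
    author: str = ''
    date: str = ''
    about: str = ''
    content: str = ''


def first_group(pattern, html):
    """Return group 1 of the first match, or raise a readable error."""
    match = re.search(pattern, html)
    if match is None:
        raise ValueError('pattern not found: ' + pattern)
    return match.group(1)


# Hypothetical article-page fragment shaped to fit the patterns above
sample_page = '''
<div id="page">
  <h1>Sample headline</h1>
</div>
<div class="article-info">
  <a href="/author/1">Some Author</a>
  <span class="time">05-20 10:30</span>
</div>
<blockquote><i class="i iquote"></i>One-line summary</blockquote>
'''

article = Article(
    title=first_group(patTitle, sample_page),
    author=first_group(patAuthor, sample_page),
    date=first_group(patDate, sample_page),
    about=first_group(patAbout, sample_page),
)
print(article)
```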

Save the data held in the Article object to the database with pymysql (a third-party module).
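The original saves through pymysql, which needs a running MySQL server. To keep the sketch self-contained, the example below swaps in Python's built-in `sqlite3` module with an in-memory database (an assumption, not the author's setup). The pattern carries over: a parameterized INSERT, commit on success, rollback and report the error on failure. One visible difference is the placeholder: `sqlite3` uses `?`, while pymysql uses `%s`. `save_row` is a hypothetical helper name:

```python
import sqlite3

# In-memory SQLite database standing in for the MySQL server (demo assumption)
conn = sqlite3.connect(':memory:')
conn.execute(
    'CREATE TABLE news ('
    ' id INTEGER PRIMARY KEY AUTOINCREMENT,'
    ' title TEXT NOT NULL, author TEXT NOT NULL, date TEXT NOT NULL,'
    ' about TEXT NOT NULL, content TEXT NOT NULL)'
)


def save_row(conn, row):
    """Insert one article with a parameterized query; roll back on failure."""
    sql = ('INSERT INTO news (title, author, date, about, content) '
           'VALUES (?, ?, ?, ?, ?)')  # pymysql would use %s instead of ?
    try:
        conn.execute(sql, row)  # values are bound by the driver, never string-formatted
        conn.commit()
    except sqlite3.Error as exc:
        conn.rollback()
        return 'error: ' + str(exc)
    return row[0]  # report the title on success, like the original script


first = save_row(conn, ('Sample headline', 'Some Author', '05-20', 'Summary', 'Body text'))
second = save_row(conn, (None, 'x', 'x', 'x', 'x'))  # violates NOT NULL on title
count = conn.execute('SELECT COUNT(*) FROM news').fetchone()[0]
print(first)
print(second)
print(count)
```

Passing the values as a separate tuple, rather than formatting them into the SQL string, is what keeps quotes or other special characters in scraped titles from breaking the statement.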

=====================Data Structure=====================

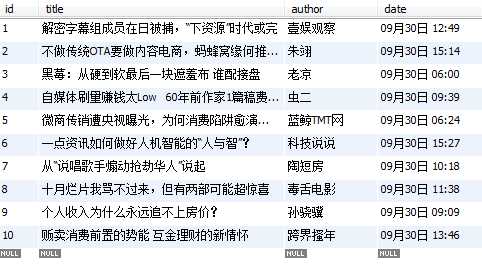

```sql
CREATE TABLE `news` (
  `id` int(6) unsigned AUTO_INCREMENT NOT NULL,
  `title` varchar(45) NOT NULL,
  `author` varchar(12) NOT NULL,
  `date` varchar(12) NOT NULL,
  `about` varchar(255) NOT NULL,
  `content` text NOT NULL,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;
```
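The schema caps `title` at 45 characters and `author` and `date` at 12. In strict SQL mode (the default since MySQL 5.7), an INSERT with a longer value fails with a "Data too long" error instead of being truncated, so the article would be skipped and only the error printed. One possible safeguard, sketched here with a hypothetical `fit_to_columns` helper, is to trim values to the column limits before inserting:

```python
# Column length limits taken from the CREATE TABLE statement above
# (MySQL counts VARCHAR(n) lengths in characters, not bytes)
COLUMN_LIMITS = {'title': 45, 'author': 12, 'date': 12, 'about': 255}


def fit_to_columns(row):
    """Return a copy of row with each VARCHAR field cut to its column limit.

    'content' is a TEXT column and is passed through unchanged here.
    """
    fitted = dict(row)
    for column, limit in COLUMN_LIMITS.items():
        value = fitted.get(column)
        if value is not None and len(value) > limit:
            fitted[column] = value[:limit]
    return fitted


row = {'title': 'A' * 60, 'author': 'Some Author', 'date': '2016-05-20 10:30',
       'about': 'Summary', 'content': 'Body'}
fitted = fit_to_columns(row)
print(len(fitted['title']), fitted['date'])
```

Silent truncation loses data (here the date is cut mid-timestamp), so widening the columns is the alternative if full values matter.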

=====================Script Code=====================

```python
# Baidu Baijia article collector
import re
import urllib.request

import pymysql

# Database connection settings
config = {
    'host': 'localhost',
    'port': '3310',
    'username': 'woider',
    'password': '3243',
    'database': 'python',
    'charset': 'utf8'
}

# Table creation statement
'''
CREATE TABLE `news` (
  `id` int(6) unsigned AUTO_INCREMENT NOT NULL,
  `title` varchar(45) NOT NULL,
  `author` varchar(12) NOT NULL,
  `date` varchar(12) NOT NULL,
  `about` varchar(255) NOT NULL,
  `content` text NOT NULL,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;
'''


# Article object
class Article(object):
    title = None
    author = None
    date = None
    about = None
    content = None


# Regular expressions (raw strings, so \s reaches the regex engine unchanged)
patArticle = r'<p\s*class="title"><a\s*href="(.+?)"'  # article link
patTitle = r'<div\s*id="page">\s*<h1>(.+)</h1>'  # article title
patAuthor = r'<div\s*class="article-info">\s*<a.+?>(.+)</a>'  # author
patDate = r'<span\s*class="time">(.+)</span>'  # publication date
patAbout = r'<blockquote><i\s*class="i\siquote"></i>(.+)</blockquote>'  # summary
patContent = r'<div\s*class="article-detail">((.|\s)+)'  # article body
patCopy = r'<div\s*class="copyright">(.|\s)+'  # copyright notice
patTag = r'(<script((.|\s)*?)</script>)|(<.*?>\s*)'  # HTML tags


# Collect the article information
def collect_article(url):
    article = Article()
    html = urllib.request.urlopen(url).read().decode('utf8')
    article.title = re.findall(patTitle, html)[0]
    article.author = re.findall(patAuthor, html)[0]
    article.date = re.findall(patDate, html)[0]
    article.about = re.findall(patAbout, html)[0]
    content = re.findall(patContent, html)[0]  # tuple: (body, last char of group 2)
    content = re.sub(patCopy, '', content[0])  # drop the copyright block
    content = re.sub('</p>', '\n', content)  # paragraph ends become newlines
    content = re.sub(patTag, '', content)  # strip scripts and remaining tags
    article.content = content
    return article


# Save the article information; returns the title or the error message
def save_article(connect, article):
    sql = ("INSERT INTO news (title, author, date, about, content) "
           "VALUES (%s, %s, %s, %s, %s)")
    data = (article.title, article.author, article.date,
            article.about, article.content)
    try:
        # The with-block closes the cursor even if execute() fails
        with connect.cursor() as cursor:
            cursor.execute(sql, data)
        connect.commit()
    except Exception as e:
        connect.rollback()
        return str(e)
    return article.title


# Fetch the links
home = 'http://baijia.baidu.com/'  # Baidu Baijia home page
html = urllib.request.urlopen(home).read().decode('utf8')  # page source
links = re.findall(patArticle, html)[0:10]  # today's top stories

# Connect to the database
connect = pymysql.connect(
    host=config['host'],
    port=int(config['port']),
    user=config['username'],
    password=config['password'],
    database=config['database'],
    charset=config['charset']
)
try:
    for url in links:
        article = collect_article(url)  # collect the article information
        message = save_article(connect, article)  # store the article
        print(message)
finally:
    connect.close()  # close the database connection
```
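The body-cleaning steps in `collect_article` are easiest to follow on a small input. The sketch below wraps them in a hypothetical `clean_content` helper that reuses the script's patterns and runs them on a made-up fragment (the markup is an assumption, not the real page):

```python
import re

# Cleaning patterns from the script, as raw strings
patContent = r'<div\s*class="article-detail">((.|\s)+)'
patCopy = r'<div\s*class="copyright">(.|\s)+'
patTag = r'(<script((.|\s)*?)</script>)|(<.*?>\s*)'


def clean_content(html):
    body = re.search(patContent, html).group(1)  # everything after the article-detail div
    body = re.sub(patCopy, '', body)  # drop the copyright block and everything after it
    body = re.sub('</p>', '\n', body)  # paragraph ends become newlines
    return re.sub(patTag, '', body)  # strip <script> blocks, then any remaining tags


sample = ('<div class="article-detail"><p>First paragraph.</p>'
          '<script>track();</script><p>Second paragraph.</p>'
          '<div class="copyright">All rights reserved</div></div>')
print(clean_content(sample))
```

Because `.` does not match a newline by default, the patterns use `(.|\s)` to span lines; compiling with `re.S` (DOTALL) and writing plain `.` would be the more conventional spelling of the same idea.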

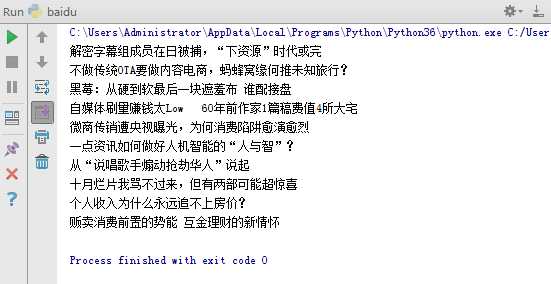

=====================运行结果=====================
