Python爬虫:爬取小说并存储到数据库

Posted

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Python爬虫:爬取小说并存储到数据库相关的知识,希望对你有一定的参考价值。

爬取小说网站的小说,并保存到数据库

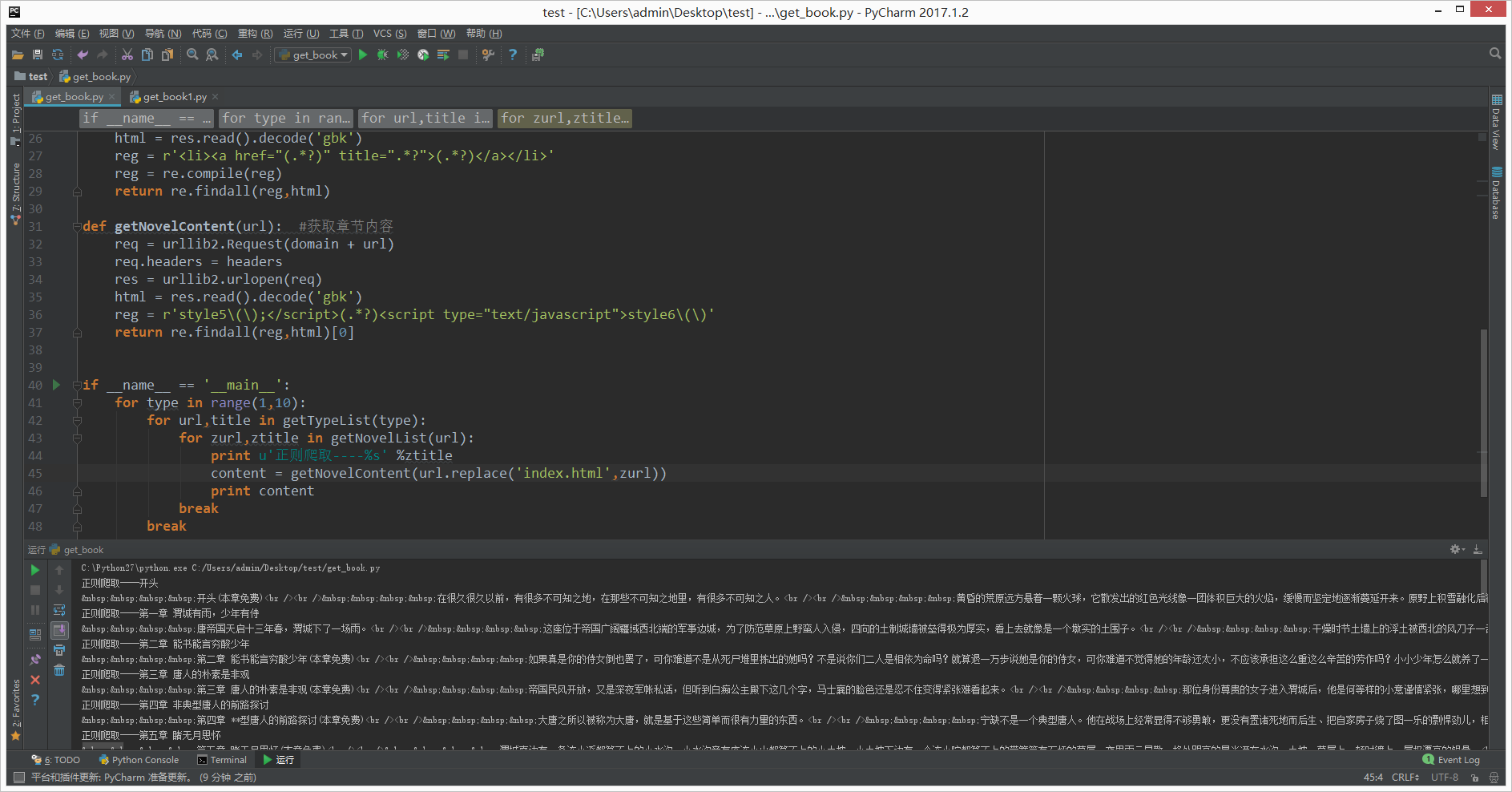

第一步:先获取小说内容

#!/usr/bin/python

# -*- coding: UTF-8 -*-

import urllib2,re

domain = ‘http://www.quanshu.net‘

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 6.3; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36"

}

def getTypeList(pn=1): #获取分类列表的函数

req = urllib2.Request(‘http://www.quanshu.net/map/%s.html‘ % pn) #实例将要请求的对象

req.headers = headers #替换所有头信息

#req.add_header() #添加单个头信息

res = urllib2.urlopen(req) #开始请求

html = res.read().decode(‘gbk‘) #decode解码,解码成Unicode

reg = r‘<a href="(/book/.*?)" target="_blank">(.*?)</a>‘

reg = re.compile(reg) #增加匹配效率 正则匹配返回的类型为List

return re.findall(reg,html)

def getNovelList(url): #获取章节列表函数

req = urllib2.Request(domain + url)

req.headers = headers

res = urllib2.urlopen(req)

html = res.read().decode(‘gbk‘)

reg = r‘<li><a href="(.*?)" title=".*?">(.*?)</a></li>‘

reg = re.compile(reg)

return re.findall(reg,html)

def getNovelContent(url): #获取章节内容

req = urllib2.Request(domain + url)

req.headers = headers

res = urllib2.urlopen(req)

html = res.read().decode(‘gbk‘)

reg = r‘style5\(\);</script>(.*?)<script type="text/javascript">style6\(\)‘

return re.findall(reg,html)[0]

if __name__ == ‘__main__‘:

for type in range(1,10):

for url,title in getTypeList(type):

for zurl,ztitle in getNovelList(url):

print u‘正则爬取----%s‘ %ztitle

content = getNovelContent(url.replace(‘index.html‘,zurl))

print content

break

break执行后结果如下:

第二步:存储到数据库

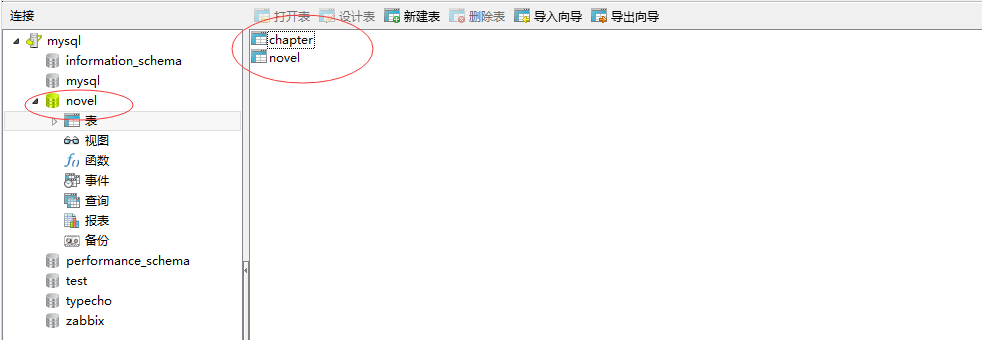

1、设计数据库

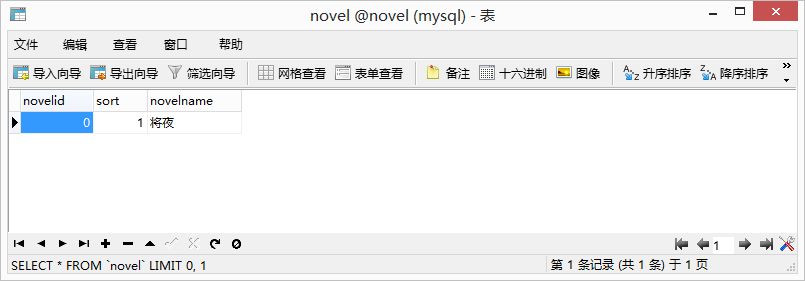

1.1 新建库:novel

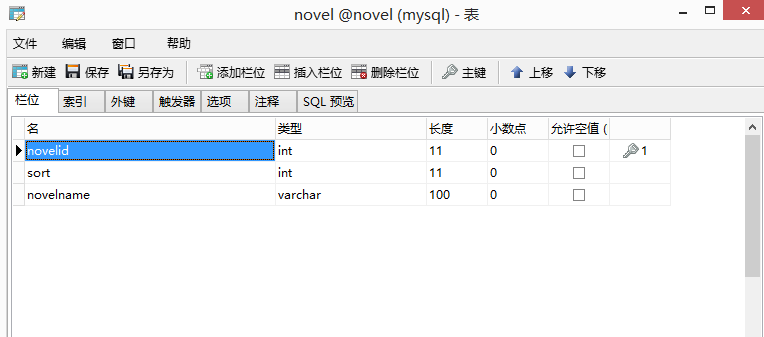

1.2 设计表:novel

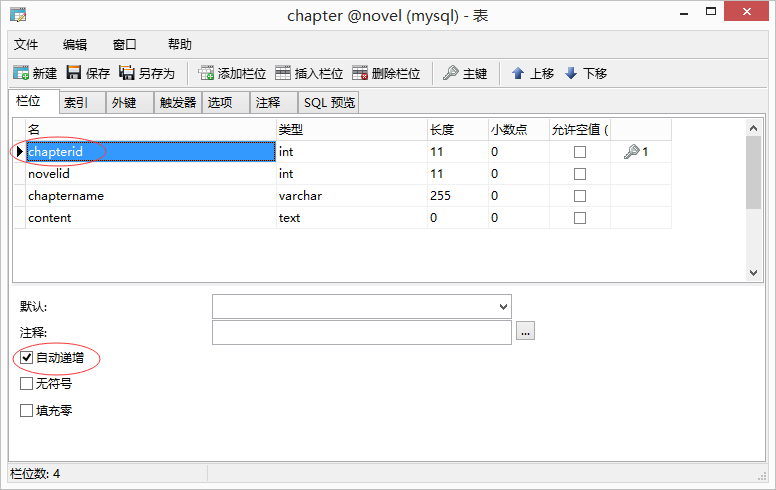

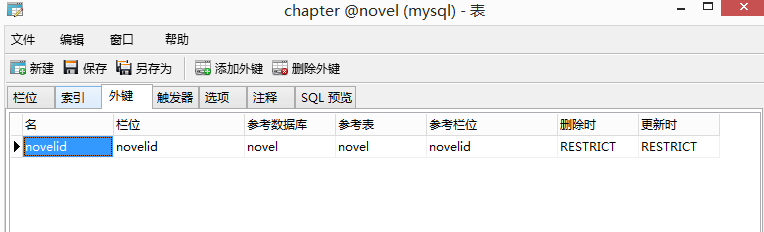

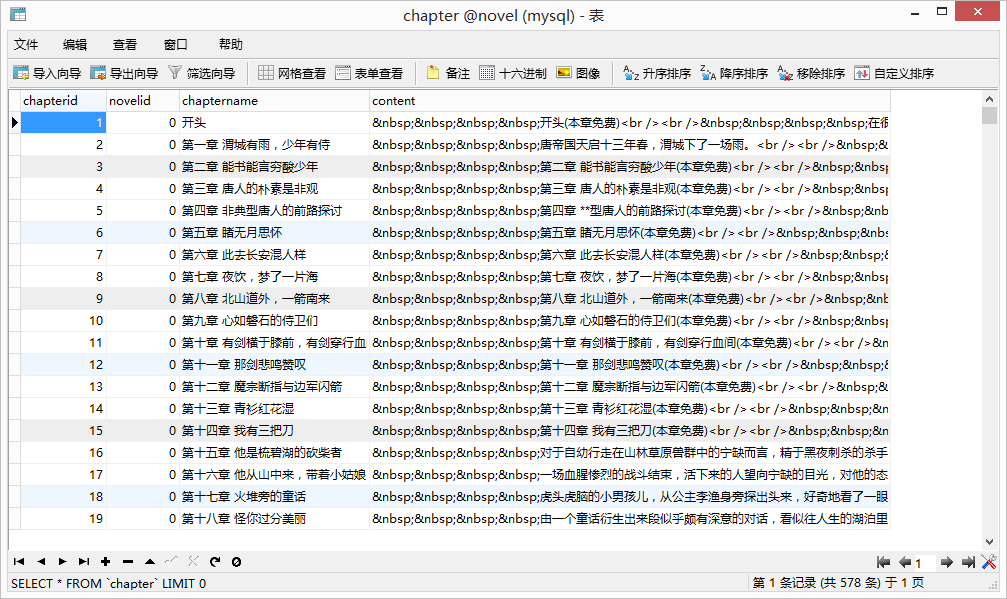

1.3 设计表:chapter

并设置外键

2、编写脚本

#!/usr/bin/python

# -*- coding: UTF-8 -*-

import urllib2,re

import MySQLdb

class Sql(object):

conn = MySQLdb.connect(host=‘192.168.19.213‘,port=3306,user=‘root‘,passwd=‘Admin123‘,db=‘novel‘,charset=‘utf8‘)

def addnovels(self,sort,novelname):

cur = self.conn.cursor()

cur.execute("insert into novel(sort,novelname) values(%s , ‘%s‘)" %(sort,novelname))

lastrowid = cur.lastrowid

cur.close()

self.conn.commit()

return lastrowid

def addchapters(self,novelid,chaptername,content):

cur = self.conn.cursor()

cur.execute("insert into chapter(novelid,chaptername,content) values(%s , ‘%s‘ ,‘%s‘)" %(novelid,chaptername,content))

cur.close()

self.conn.commit()

domain = ‘http://www.quanshu.net‘

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 6.3; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36"

}

def getTypeList(pn=1): #获取分类列表的函数

req = urllib2.Request(‘http://www.quanshu.net/map/%s.html‘ % pn) #实例将要请求的对象

req.headers = headers #替换所有头信息

#req.add_header() #添加单个头信息

res = urllib2.urlopen(req) #开始请求

html = res.read().decode(‘gbk‘) #decode解码,解码成Unicode

reg = r‘<a href="(/book/.*?)" target="_blank">(.*?)</a>‘

reg = re.compile(reg) #增加匹配效率 正则匹配返回的类型为List

return re.findall(reg,html)

def getNovelList(url): #获取章节列表函数

req = urllib2.Request(domain + url)

req.headers = headers

res = urllib2.urlopen(req)

html = res.read().decode(‘gbk‘)

reg = r‘<li><a href="(.*?)" title=".*?">(.*?)</a></li>‘

reg = re.compile(reg)

return re.findall(reg,html)

def getNovelContent(url): #获取章节内容

req = urllib2.Request(domain + url)

req.headers = headers

res = urllib2.urlopen(req)

html = res.read().decode(‘gbk‘)

reg = r‘style5\(\);</script>(.*?)<script type="text/javascript">style6\(\)‘

return re.findall(reg,html)[0]

mysql = Sql()

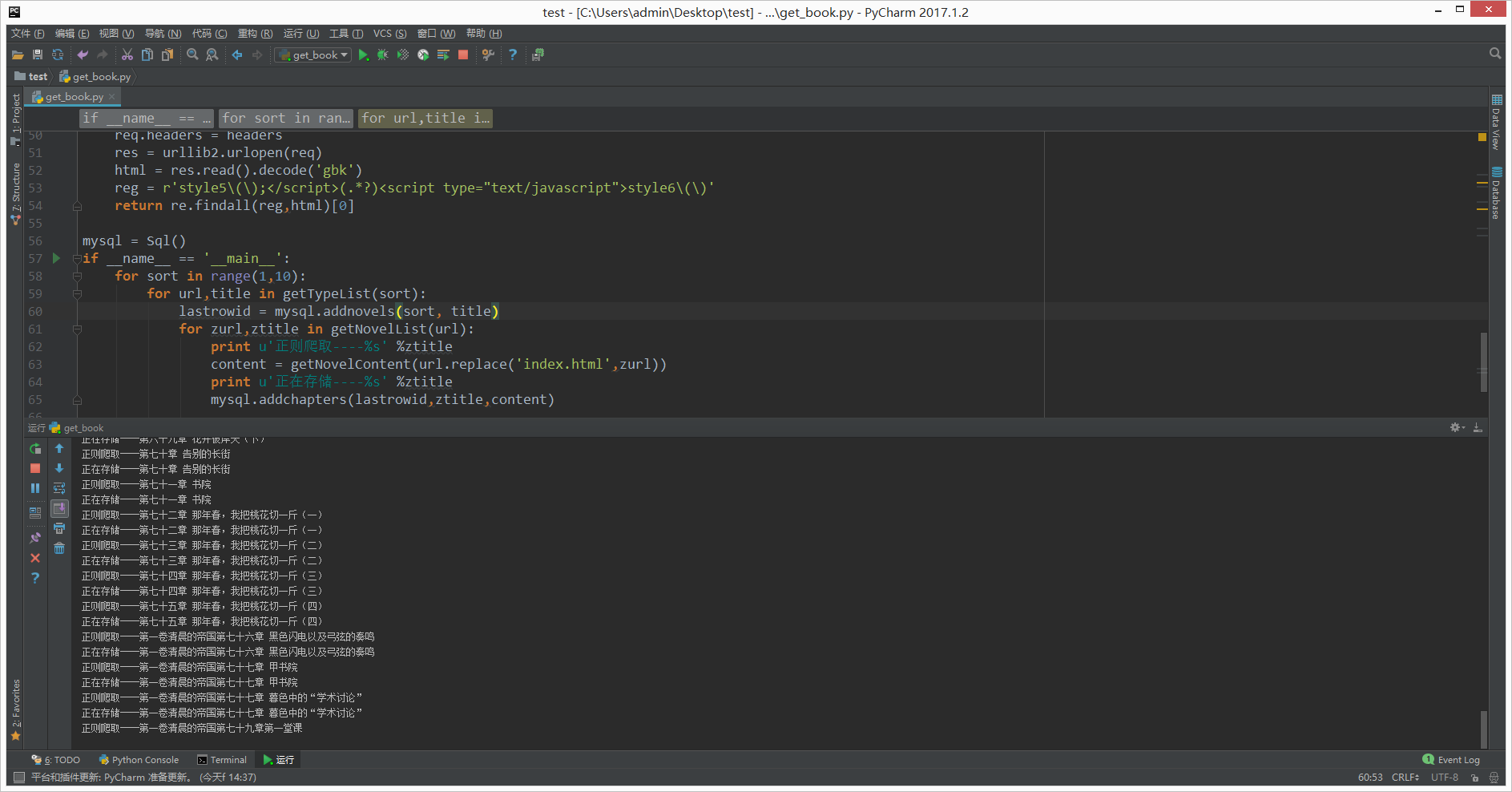

if __name__ == ‘__main__‘:

for sort in range(1,10):

for url,title in getTypeList(sort):

lastrowid = mysql.addnovels(sort, title)

for zurl,ztitle in getNovelList(url):

print u‘正则爬取----%s‘ %ztitle

content = getNovelContent(url.replace(‘index.html‘,zurl))

print u‘正在存储----%s‘ %ztitle

mysql.addchapters(lastrowid,ztitle,content)3、执行脚本

4、查看数据库

可以看到已经存储成功了。

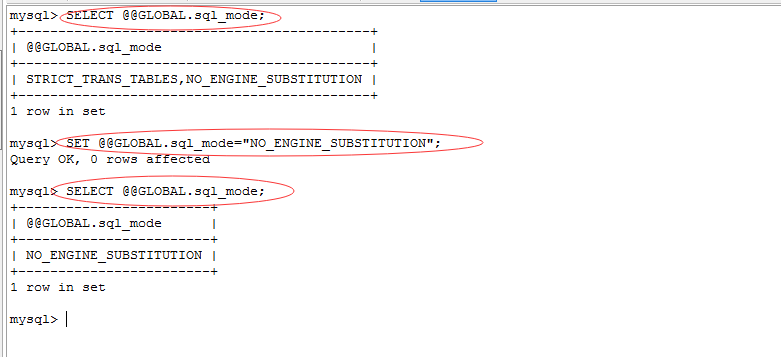

报错:

_mysql_exceptions.OperationalError: (1364, "Field ‘novelid‘ doesn‘t have a default value")

解决:执行sql语句

SELECT @@GLOBAL.sql_mode;

SET @@GLOBAL.sql_mode="NO_ENGINE_SUBSTITUTION";

报错参考:http://blog.sina.com.cn/s/blog_6d2b3e4901011j9w.html

本文出自 “M四月天” 博客,请务必保留此出处http://msiyuetian.blog.51cto.com/8637744/1931102

以上是关于Python爬虫:爬取小说并存储到数据库的主要内容,如果未能解决你的问题,请参考以下文章