[Chapter 5] Reinforcement LearningFunction Approximation

Posted 超级超级小天才

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了[Chapter 5] Reinforcement LearningFunction Approximation相关的知识,希望对你有一定的参考价值。

Function Approximation

While we are learning the Q-functions, but how to represent or record the Q-values? For discrete and finite state space and action space, we can use a big table with size of ∣ S ∣ × ∣ A ∣ |S| \\times |A| ∣S∣×∣A∣ to represent the Q-values for all ( s , a ) (s,a) (s,a) pairs. However, if the state space or action space is very huge, or actually, usually they are continuous and infinite, a tabular method doesn’t work anymore.

We need function approximation to represent utility and Q-functions with some parameters θ \\theta θ to be learnt. Also take the grid environment as our example, we can represent the state using a pair of coordiantes ( x , y ) (x,y) (x,y), then one simple function approximation can be like this:

U ^ θ ( x , y ) = θ 0 + θ 1 x + θ 2 y \\hatU_\\theta (x,y)=\\theta_0+\\theta_1 x+\\theta_2 y U^θ(x,y)=θ0+θ1x+θ2y

Of course, you can design more complex functions when you have a much larger state space.

In this case, our reinforcement learning agent turns to learn the parameters θ \\theta θ to approximate the evaluation functions ( U ^ θ \\hatU_\\theta U^θ or Q ^ θ \\hatQ_\\theta Q^θ).

For Monte Carlo learning, we can collect a set of training samples (trails) with input and label, then this turns to be a supervised learning problem. With squared error and linear function, we get a standard linear regression problem.

For Temporal Difference learning, the agent aims to adjust the parameters to reduce the temporal difference (to reduce the TD error. To update the parameters using gradient decrease method:

- For SARSA (on-policy method):

θ i ← θ i + α ( R ( s ) + γ Q ^ θ ( s ′ , a ′ ) − Q ^ θ ( s , a ) ) ∂ Q ^ θ ( s , a ) ∂ θ i \\theta_i \\leftarrow \\theta_i+\\alpha(R(s)+\\gamma\\hatQ_\\theta (s^′,a′)−\\hatQ_\\theta(s,a)) \\frac\\partial \\hatQ_\\theta (s,a)\\partial\\theta_i θi←θi+α(R(s)+γQ^θ(s′,a′)−Q^θ(s,a))∂θi∂Q^θ(s,a)

- For Q-learning (off-policy method):

θ i ← θ i + α ( R ( s ) + γ m a x a ′ Q ^ θ ( s ′ , a ′ ) − Q ^ θ ( s , a ) ) ∂ Q ^ θ ( s , a ) ∂ θ i \\theta_i \\leftarrow \\theta_i+\\alpha(R(s)+\\gamma max_a'\\hatQ_\\theta (s^′,a′)−\\hatQ_\\theta(s,a)) \\frac\\partial \\hatQ_\\theta (s,a)\\partial\\theta_i θi←θi+α(R(s)+γmaxa′Q^θ(s′,a′)−Q^θ(s,a))∂θi∂Q^θ(s,a)

Going Deep

One of the greatest advancement in reinforcement learning is to combine it with deep learning. As we have stated above, mostly, we cannot use a tabular method to represent the evaluation functions, we need approximation! I know what you want to say: you must have thought that deep network is a good function approximation. We have input for a network and output the Q-values or utilities, that’s it! So, using deep network in RL is deep reinforcement learning (DRL).

Why we need deep network?

- Firstly, for the environment that has nearly infinite state space, the deep network can hold a large set of parameters θ \\theta θ to be learnt and can map a large set of states to their expected Q-values.

- Secondly, for some environment with complex observation, only deep network can solve them. For example, if the observation is an RGB image, we need convolutional neural network (CNN) in the first layers to read them; if the observation is a piece of audio, we need recurrent neural network (RNN) in the first layers.

- Nowadays, designing and training a deep neural network can be done much easier based on the advanced hardware and software technology.

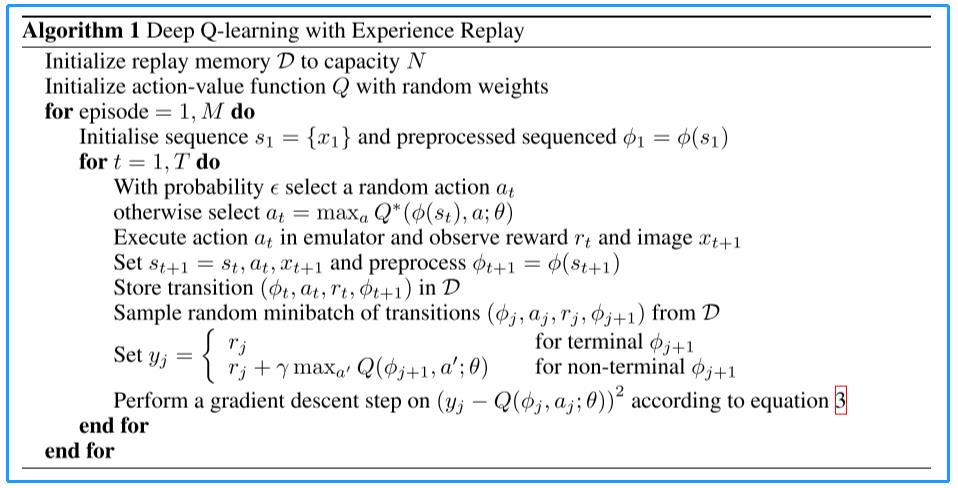

One of the DRL algorithms is Deep Q-learning Network (DQN), we have the pseudo code here but will not go into it:

以上是关于[Chapter 5] Reinforcement LearningFunction Approximation的主要内容,如果未能解决你的问题,请参考以下文章