Elasticsearch Basics

Posted by Amelie11



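Introduction

What Is a Full-Text Search Engine?

Familiar search sites include Baidu and Google. Before we can search data, though, we need to understand how data is categorized.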

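Types of data

Structured data: data with a fixed format or a bounded length, such as database records and metadata.

Structured data is usually stored and searched in a relational database (MySQL, Oracle, etc.), where indexes built on data structures such as B-trees make lookups fast.

Unstructured data (full-text data): data of variable length or without a fixed format, such as documents.

Unstructured (full-text) data can be searched in two ways: sequential scanning and full-text search.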

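Sequential scanning

Sequential scanning looks for a keyword by reading the data in order. For example, to find which paragraphs of a One Piece post mention the name "Luffy" (路飞), you would have to read the whole post from start to finish and mark every place the keyword appears. This is clearly the least efficient approach.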

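Full-text search

Sequentially scanning full-text data is slow, so how can we do better? By giving the unstructured data some structure: extract part of the information from it, reorganize that information into a structured form, and then search the structured data instead, which is much faster. This is the basic idea behind full-text search. The information extracted from the unstructured data and reorganized in this way is called an index.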

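What is a full-text search engine

Full-text search engines are the mainstream search engines in wide use today. An indexing program scans every word of each document and builds an index entry for it that records how many times and where the word occurs. When a user runs a query, the search program looks the terms up in this prebuilt index and returns the matching results to the user.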
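The indexing idea above can be sketched as a toy inverted index that maps each term to the documents and positions where it occurs. This is a minimal illustration, not how Lucene actually stores its index; the tokenizer (lowercased word runs) and the sample documents are assumptions made up for the example.

```python
import re
from collections import defaultdict


def tokenize(text):
    # Simplistic tokenizer assumed for this sketch: lowercased runs of word characters.
    return re.findall(r"\w+", text.lower())


def build_index(docs):
    """Map each term to {doc_id: [positions]}, i.e. where and how often it occurs."""
    index = defaultdict(dict)
    for doc_id, text in docs.items():
        for pos, term in enumerate(tokenize(text)):
            index[term].setdefault(doc_id, []).append(pos)
    return index


def search(index, term):
    # A single dictionary lookup replaces scanning every document.
    return sorted(index.get(term.lower(), {}))


# Hypothetical sample documents.
docs = {
    1: "Luffy wants to be the king of the pirates",
    2: "Zoro follows Luffy",
    3: "Sanji cooks for the crew",
}
index = build_index(docs)
print(search(index, "luffy"))  # [1, 2]
print(index["the"])            # {1: [4, 7], 3: [3]}
```

Storing positions, not just document ids, is what later makes phrase queries possible: the engine can check whether two terms occur next to each other without rereading the text.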

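Common search engines

Lucene, Solr, Elasticsearch

Lucene

Lucene is a Java full-text search library. It is only a framework, a code library and an API: to use its features you write Java and embed it in your own program, which makes it easy to add search to an application.

It offers powerful features through a simple API:

Scalable, high-performance indexing

Powerful, accurate, and efficient search algorithms

Cross-platform solution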

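Solr

Solr is an open-source search platform built on the Lucene Java library. It exposes Apache Lucene's search capabilities in a user-friendly way. It is a mature product with a strong, broad user community, and it provides distributed indexing, replication, load-balanced querying, and automated failover and recovery. Deployed correctly and managed well, it can be a highly reliable, scalable, and fault-tolerant search engine.

Powerful features:

Full-text search

Highlighting

Faceted search

Real-time indexing

Dynamic clustering

Database integration

NoSQL features and rich document handling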

Elasticsearch

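Elasticsearch is an open-source RESTful search engine built on the Apache Lucene library, released a few years after Solr. It provides a distributed, multitenant-capable full-text search engine with an HTTP web interface (REST) and schema-free JSON documents. Official client libraries are available for Java, Groovy, PHP, Ruby, Perl, Python, .NET, and JavaScript.

Main features

Distributed search

Data analysis

Grouping and aggregation

Use cases

Wikipedia

E-commerce websites

Log data analysis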

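Why not use MySQL as a search engine?

All of our data already lives in a database, and databases such as MySQL, Oracle, and SQL Server can query and search it, so why not query the database directly? Indeed, most queries can be served by the database: if they are slow, we can add indexes or tune the SQL; we can put a cache such as Redis or Memcached in front to return data faster; and as the data grows, we can split it across databases and tables to spread the query load. So why do we still need a full-text search engine?

Data types

Full-text indexes handle unstructured data well: they make it fast to find any word in a large body of unstructured text. Web search engines such as Google and Baidu, for example, build indexes from the keywords in web pages; when we search for a keyword, they return every page whose index entries match it. Searching application logs in everyday projects is another common case. Relational databases do not support searching this kind of unstructured text well.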

Search performance

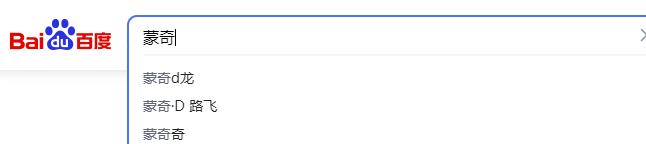

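Suppose we use MySQL for search: a character table `character` has a `name` column, and we need to find the characters whose name starts with 蒙奇 (Monkey) as well as those whose name contains 蒙奇 anywhere. What happens once the table reaches tens of millions of rows?

```sql
-- Prefix match: acceptable, because it can use an index on name
SELECT * FROM `character` WHERE name LIKE '蒙奇%';

-- Infix match: cannot use the index, so every row is scanned
SELECT * FROM `character` WHERE name LIKE '%蒙奇%';
```

Flexible indexing

Suppose the user is looking for the character named 蒙奇D路飞 (Monkey D. Luffy) but has only typed 蒙奇; we would like to suggest matching keywords as they type.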
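Both the prefix query above and the "suggest as you type" idea rely on the terms being kept in sorted order. The minimal Python sketch below, using a hypothetical list of names and a hypothetical `suggest` helper, shows why a sorted structure can serve `LIKE '蒙奇%'` cheaply (jump to the first candidate, stop at the first non-match), while `'%蒙奇%'` has no such shortcut. Elasticsearch itself offers dedicated features for this, such as the completion suggester; this is not how they are implemented.

```python
import bisect


def suggest(sorted_terms, prefix, limit=5):
    """Return up to `limit` terms that start with `prefix`.

    `sorted_terms` must be sorted. Like a B-tree serving LIKE 'prefix%',
    we binary-search to the first candidate instead of scanning every entry.
    """
    start = bisect.bisect_left(sorted_terms, prefix)
    out = []
    for term in sorted_terms[start:]:
        if not term.startswith(prefix):
            break  # sorted order: once the prefix stops matching, nothing later can match
        out.append(term)
        if len(out) == limit:
            break
    return out


# Hypothetical character names.
names = sorted(["蒙奇·D·路飞", "蒙奇·D·卡普", "罗罗诺亚·索隆", "文斯莫克·山治", "蒙奇·D·龙"])
print(suggest(names, "蒙奇"))
```

An infix search such as `'%蒙奇%'` cannot use `bisect`, because matching names are scattered throughout the sorted list; it has to test every entry, which is exactly the full table scan described above.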

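Index maintenance

Full-text search in traditional databases is generally weak, partly because few people store long text fields in a database at all. A full-text query has to scan the whole table, so with large data volumes even careful SQL tuning helps little. Even when an index exists it is costly to maintain, because every INSERT and UPDATE has to update it as well.

Scenarios suited to a full-text search engine

The data being searched is a large amount of unstructured text.

The text volume reaches hundreds of thousands or millions of records, or more.

Many interactive, text-based queries must be supported.

Full-text queries with very flexible requirements.

Read-heavy, write-light workloads.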

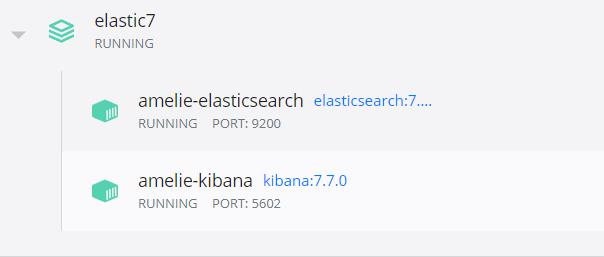

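Setting up Elasticsearch quickly

You can set it up quickly with Docker, using the following docker-compose.yml:

```yaml
version: '3'
services:
  elasticsearch:
    image: elasticsearch:7.7.0
    container_name: amelie-elasticsearch
    environment:
      ES_JAVA_OPTS: -Djava.net.preferIPv4Stack=true -Xms512m -Xmx512m
      transport.host: 0.0.0.0
      discovery.type: single-node
      bootstrap.memory_lock: "true"
      # The discovery.zen.* settings are legacy 6.x names; 7.x deprecates them
      # and they are not needed with single-node discovery.
      discovery.zen.minimum_master_nodes: 1
      discovery.zen.ping.unicast.hosts: elasticsearch
    # memory_lock only takes effect if the container may lock memory.
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - elasticsearch-volume:/usr/share/elasticsearch/data
    ports:
      - "9200:9200"
      - "9300:9300"
  kibana:
    image: kibana:7.7.0
    container_name: amelie-kibana
    environment:
      # Kibana 7.x reads ELASTICSEARCH_HOSTS (ELASTICSEARCH_URL was the 6.x name).
      ELASTICSEARCH_HOSTS: http://elasticsearch:9200
    links:
      - elasticsearch:elasticsearch
    ports:
      - "5602:5601"
    depends_on:
      - elasticsearch
volumes:
  elasticsearch-volume:
```

Start it with `docker-compose up -d`.

Open http://localhost:5602 to see the Kibana UI (host port 5602 is mapped to Kibana's port 5601).

Open http://localhost:9200/ to see basic information about Elasticsearch.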

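Basic usage

Elasticsearch core concepts

| Elasticsearch | Relational database |
| --- | --- |
| Index | Database |
| Type. Note: in ES 5.x an index can have several types; in ES 6.x an index can have only one type; ES 7.x deprecates types (every document uses `_doc`) and 8.x removes them entirely | Table, e.g. a user table or a role table |
| Mapping: defines each field's type and related settings | Table schema |
| Document | Row |
| Field | Column |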

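Cluster

A cluster consists of one or more nodes. Its default name is "elasticsearch".

Node

A member of the cluster: a single machine or a single Elasticsearch process.

Shards and replicas

A replica is a copy of a shard. Shards are either primary shards or replica shards. An index's data is physically distributed across several primary shards, each holding only part of the data. Each primary shard can have multiple replicas, called replica shards, which are copies of the primary.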
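Which primary shard holds a document is decided by a routing formula, roughly `shard = hash(_routing) % number_of_primary_shards`, where `_routing` defaults to the document `_id`. The sketch below illustrates that formula under one stated simplification: it uses `zlib.crc32` in place of the Murmur3 hash Elasticsearch uses, so the concrete shard numbers will not match a real cluster. The shard and replica counts are hypothetical.

```python
import zlib


def pick_shard(doc_id: str, num_primary_shards: int) -> int:
    # Simplified routing: hash(_routing) % number_of_primary_shards.
    # crc32 stands in for Elasticsearch's Murmur3, so the numbers differ from a real cluster.
    return zlib.crc32(doc_id.encode("utf-8")) % num_primary_shards


def total_shards(num_primary: int, num_replicas: int) -> int:
    # Every primary shard has `num_replicas` replica copies.
    return num_primary * (1 + num_replicas)


placement = {doc_id: pick_shard(doc_id, 3) for doc_id in ["1", "2", "3", "4", "5"]}
print(placement)
print(total_shards(3, 1))  # 6
```

Because the divisor is the number of primary shards, changing it would send existing documents to different shards. That is why the number of primary shards is fixed when an index is created (changing it means reindexing or using the split/shrink APIs), whereas the replica count can be changed at any time.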

Quick start

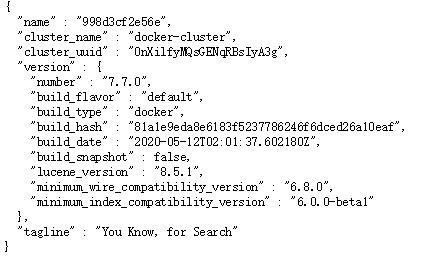

Get the Elasticsearch status with `GET localhost:9200/`:

```json
{
  "name" : "998d3cf2e56e",
  "cluster_name" : "docker-cluster",
  "cluster_uuid" : "0nXilfyMQsGENqRBsIyA3g",
  "version" : {
    "number" : "7.7.0",
    "build_flavor" : "default",
    "build_type" : "docker",
    "build_hash" : "81a1e9eda8e6183f5237786246f6dced26a10eaf",
    "build_date" : "2020-05-12T02:01:37.602180Z",
    "build_snapshot" : false,
    "lucene_version" : "8.5.1",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}
```

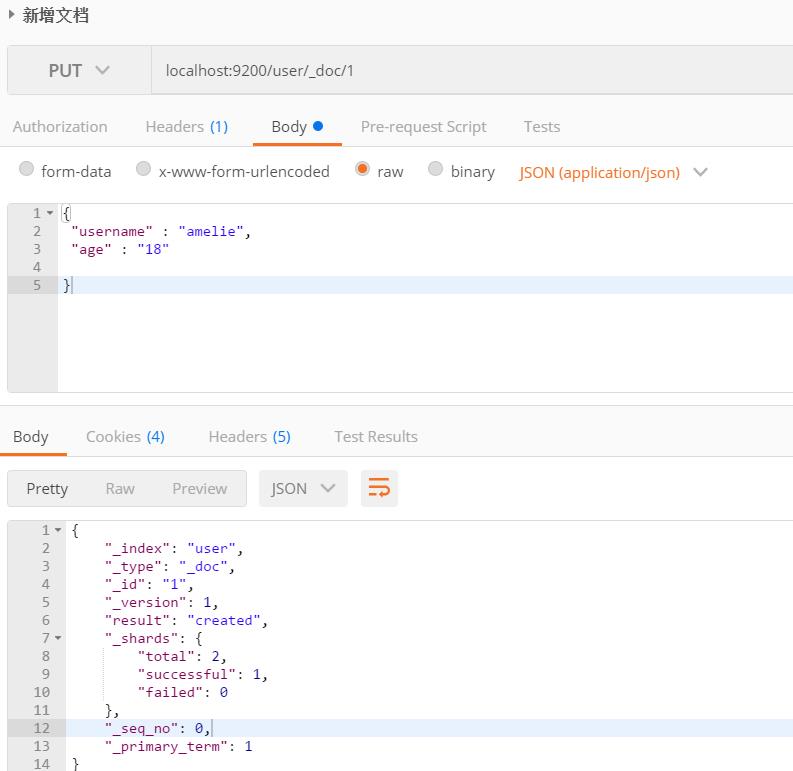

Create a document

PUT localhost:9200/user/_doc/1

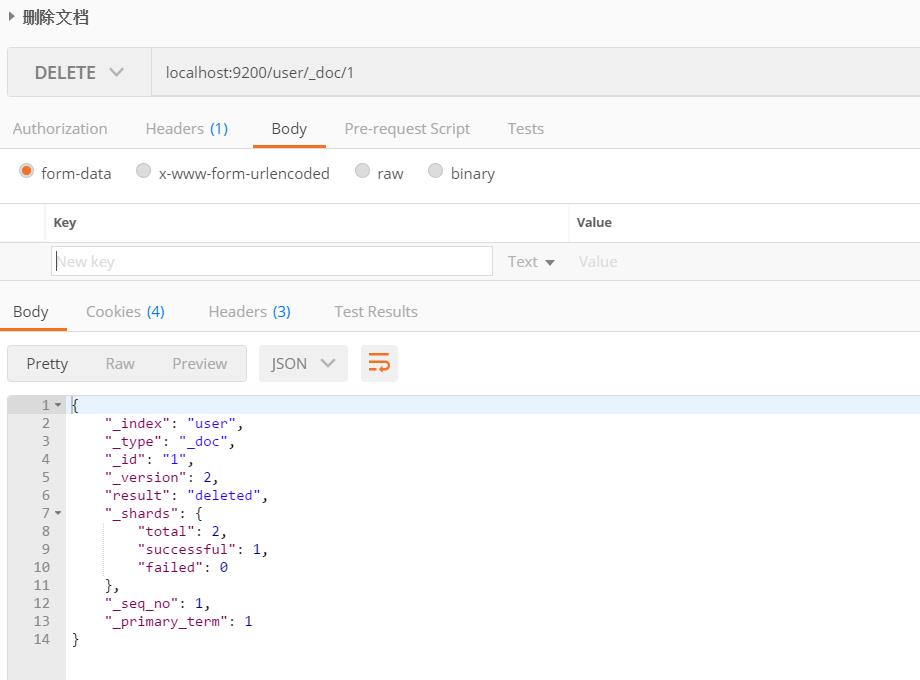

Delete a document

DELETE localhost:9200/user/_doc/1

索引的使用

方法 | URL | 结果 |

新增 | PUT localhost:9200/onepiece | |

获取 | GET localhost:9200/onepiece | |

删除 | DELETE localhost:9200/onepiece | |

批量获取 | GET localhost:9200/onepiece,user | |

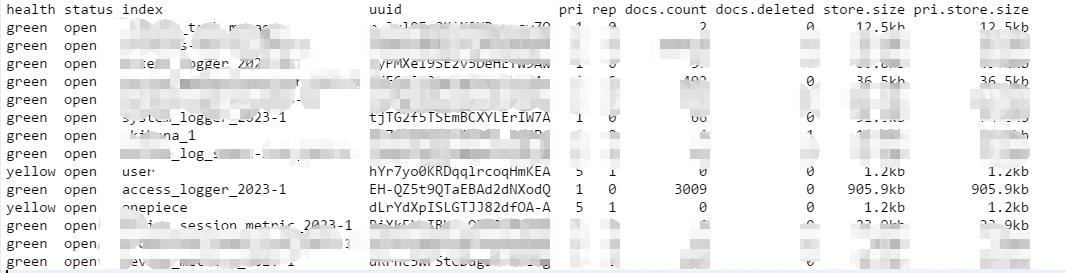

获取所有 | GET localhost:9200/_all GET localhost:9200/_cat/indices?v | 第二种方式返回:

|

关闭 | POST localhost:9200/onepiece/_close | 关闭索引后,就无法再创建文档,若创建则会报错: |

打开 | POST localhost:9200/onepiece/_open | |

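Working with mappings

| Operation | URL | Notes |
| --- | --- | --- |
| Create | `POST localhost:9200/onepiece/_mapping` | |
| Get | `GET localhost:9200/_mapping` | Returns the mappings of all indexes; `GET localhost:9200/onepiece/_mapping` returns one index's mapping |
| Modify | `PUT localhost:9200/onepiece/_mapping` | New fields can be added, but an existing field's type cannot be changed (that requires reindexing) |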

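Working with documents

| Operation | URL | Notes |
| --- | --- | --- |
| Create | `PUT localhost:9200/onepiece/_doc/1` | The id must be specified |
| Create (auto-generated id) | `POST localhost:9200/onepiece/_doc` | No id is given; Elasticsearch generates one |
| Get | `GET localhost:9200/onepiece/_doc/1` | |
| Get several | `POST localhost:9200/_mget` | Each entry in `docs` names its `_index` and `_id` |
| Get several from one index | `POST localhost:9200/onepiece/_mget` | |
| Update | `POST localhost:9200/onepiece/_doc/1` | Replaces the whole document; for a partial update use `POST localhost:9200/onepiece/_update/1` with a `{"doc": {...}}` body |
| Delete | `DELETE localhost:9200/onepiece/_doc/1` | |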
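The REST calls in the table can also be issued from code. A minimal sketch using only Python's standard library (`urllib.request`, `json`) builds the requests without sending them, so it runs without a cluster. The base URL assumes the docker-compose setup above, `es_request` is a hypothetical helper, and the partial-update value is made up for illustration.

```python
import json
import urllib.request

BASE = "http://localhost:9200"  # assumption: the docker-compose setup above


def es_request(method, path, body=None):
    """Build (but do not send) an HTTP request to Elasticsearch."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    req = urllib.request.Request(BASE + path, data=data, method=method)
    if data is not None:
        req.add_header("Content-Type", "application/json")
    return req


# Create a document with an explicit id, then fetch it.
put_doc = es_request("PUT", "/onepiece/_doc/1",
                     {"name": "路飞", "role": "船长", "skill": "橡胶巨人手枪"})
get_doc = es_request("GET", "/onepiece/_doc/1")
# Fetch several documents of one index in a single round trip.
mget = es_request("POST", "/onepiece/_mget", {"ids": ["1", "2"]})
# Partial update: only the fields listed under "doc" change (hypothetical value).
update = es_request("POST", "/onepiece/_update/1", {"doc": {"skill": "橡胶火箭炮"}})
delete = es_request("DELETE", "/onepiece/_doc/1")

# Sending one requires a running cluster:
# with urllib.request.urlopen(get_doc) as resp:
#     print(json.load(resp))
```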

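Simple searches

Term queries vs. full-text queries

Term query: the query string is not analyzed; a document matches only when an indexed term is exactly equal to the query string.

Full-text query: Elasticsearch first analyzes the query string, splitting it into several terms. A document matches if the analyzed field contains any of those terms (or all of them, depending on the query's options); if it contains none of them, it does not match.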
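The difference is easiest to see on Chinese text, because the default standard analyzer emits each Chinese character as its own token. The sketch below assumes a `text` field whose value is 船长 and approximates the analyzer by splitting into characters; it is a simulation of the matching rule, not an Elasticsearch client, and the helper names are hypothetical.

```python
def standard_like_cjk(text):
    # Rough stand-in for the standard analyzer on Chinese text:
    # every non-space character becomes its own token.
    return [ch for ch in text if not ch.isspace()]


# Tokens stored for a text field whose value is "船长".
indexed = set(standard_like_cjk("船长"))  # {"船", "长"}


def term_matches(query):
    # term query: the query is NOT analyzed; it must equal one indexed token.
    return query in indexed


def match_matches(query):
    # match query: analyze the query, then OR the tokens (the default operator).
    return any(tok in indexed for tok in standard_like_cjk(query))


print(term_matches("船长"))     # False: "船长" was never stored as one token
print(match_matches("船小长"))  # True: 船 and 长 both hit
```

This is also why `term` queries are normally run against `keyword` fields, whose values are indexed whole instead of being split.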

Single term

Exact keyword lookup; use it on fields mapped with type `keyword` (here `name` is assumed to be one):

```
POST localhost:9200/onepiece/_search
{
  "query": {
    "term": {
      "name": "路飞"
    }
  }
}
```

Multiple terms

```
POST localhost:9200/onepiece/_search
{
  "query": {
    "terms": {
      "name": ["路飞", "索隆"]
    }
  }
}
```

match_all

Returns all documents; `from` and `size` control paging.

```
POST localhost:9200/onepiece/_search
{
  "query": {
    "match_all": {}
  },
  "from": 0,
  "size": 10
}
```

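match

```
POST localhost:9200/onepiece/_search
{
  "query": {
    "match": {
      "role": "船小长"
    }
  }
}
```

The query string is analyzed first. The default standard analyzer splits Chinese text into single characters, so 船小长 becomes 船, 小, and 长; two of them (船 and 长) occur in the document's role 船长, so the document matches.

Result:

```json
{
  "took": 1,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 1, "relation": "eq" },
    "max_score": 2.0834165,
    "hits": [
      {
        "_index": "onepiece",
        "_type": "_doc",
        "_id": "1",
        "_score": 2.0834165,
        "_source": {
          "name": "路飞",
          "role": "船长",
          "skill": "橡胶巨人手枪"
        }
      }
    ]
  }
}
```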

multi_match

Matches the query against several fields:

```
POST localhost:9200/onepiece/_search
{
  "query": {
    "multi_match": {
      "query": "船长",
      "fields": ["name", "role"]
    }
  }
}
```

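With its default type, `best_fields`, `multi_match` runs the match against each listed field and scores the document by its best-matching field. The sketch below shows only that "best field wins" rule; it uses a crude stand-in score (how many query tokens occur in the field) instead of the BM25 relevance Elasticsearch computes, and reuses the one-token-per-character assumption for Chinese.

```python
def tokens(text):
    # Assumed analyzer stand-in: one token per non-space character.
    return [ch for ch in text if not ch.isspace()]


def field_score(query, value):
    # Stand-in relevance: number of query tokens found in the field.
    field_tokens = set(tokens(value))
    return sum(tok in field_tokens for tok in tokens(query))


def multi_match_best_fields(query, doc, fields):
    # best_fields keeps the score of the single best-matching field.
    return max(field_score(query, doc[f]) for f in fields)


luffy = {"name": "路飞", "role": "船长", "skill": "橡胶巨人手枪"}
print(multi_match_best_fields("船长", luffy, ["name", "role"]))  # 2
```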
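match_phrase

Stricter than `match` and closer to an exact match: every analyzed term must appear in the field, adjacent to each other and in the same order (with the default `slop` of 0).

```
POST localhost:9200/onepiece/_search
{
  "query": {
    "match_phrase": {
      "role": "厨师"
    }
  }
}
```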

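The adjacency rule can be sketched as a sliding-window comparison over token lists. This is a simplification: it ignores `slop` and scoring and works on in-memory lists rather than the positions stored in the index, and it assumes one token per Chinese character, as the standard analyzer produces.

```python
def phrase_match(query_tokens, doc_tokens):
    """True if query_tokens occur in doc_tokens consecutively and in order,
    which is what match_phrase requires with the default slop of 0."""
    n = len(query_tokens)
    return any(doc_tokens[i:i + n] == query_tokens
               for i in range(len(doc_tokens) - n + 1))


doc = list("橡胶巨人手枪")              # one token per character
print(phrase_match(list("巨人"), doc))  # True
print(phrase_match(list("人巨"), doc))  # False: same tokens, wrong order
print(phrase_match(list("橡人"), doc))  # False: tokens are not adjacent
```

A plain `match` for 人巨 or 橡人 would still hit this document, since each of its characters occurs somewhere in the field.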
match_phrase_prefix

Like `match_phrase`, except that the last term is treated as a prefix:

```
POST localhost:9200/onepiece/_search
{
  "query": {
    "match_phrase_prefix": {
      "skill": "三"
    }
  }
}
```

Result:

```json
{
  "took": 3,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 1, "relation": "eq" },
    "max_score": 1.0925692,
    "hits": [
      {
        "_index": "onepiece",
        "_type": "_doc",
        "_id": "2",
        "_score": 1.0925692,
        "_source": {
          "name": "索隆",
          "role": "战斗员",
          "skill": "三刀流"
        }
      }
    ]
  }
}
```
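The prefix rule can be sketched the same way: every token except the last must match exactly and in order, and the last only needs to be a prefix of the following document token. This is a simplified model; in Elasticsearch the last term is expanded into at most `max_expansions` (default 50) matching terms, which the sketch does not model. The English sample sentence is an assumption for illustration.

```python
def phrase_prefix_match(query_tokens, doc_tokens):
    """Sketch of match_phrase_prefix; query_tokens must be non-empty."""
    *head, last = query_tokens
    n = len(query_tokens)
    for i in range(len(doc_tokens) - n + 1):
        window = doc_tokens[i:i + n]
        # All but the last token must match exactly; the last is a prefix.
        if window[:-1] == head and window[-1].startswith(last):
            return True
    return False


doc = "luffy is funny and crazy".split()
print(phrase_prefix_match("funny and cr".split(), doc))  # True
print(phrase_prefix_match("funny cr".split(), doc))      # False: "cr" must follow "funny" directly
print(phrase_prefix_match(["三"], list("三刀流")))        # True, like the query above
```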

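Analyzers

What is an analyzer?

A tool that breaks a piece of user-supplied text into multiple terms according to a set of rules.

Common built-in analyzers

standard analyzer

The standard analyzer is the default: it is used whenever no analyzer is specified.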

```
POST localhost:9200/_analyze
{
  "analyzer": "standard",
  "text": "1-Piece that is so much fun that I never want to miss a chapter or episode"
}
```

Result:

```json
{
  "tokens": [
    { "token": "1", "start_offset": 0, "end_offset": 1, "type": "<NUM>", "position": 0 },
    { "token": "piece", "start_offset": 2, "end_offset": 7, "type": "<ALPHANUM>", "position": 1 },
    { "token": "that", "start_offset": 8, "end_offset": 12, "type": "<ALPHANUM>", "position": 2 },
    { "token": "is", "start_offset": 13, "end_offset": 15, "type": "<ALPHANUM>", "position": 3 },
    { "token": "so", "start_offset": 16, "end_offset": 18, "type": "<ALPHANUM>", "position": 4 },
    { "token": "much", "start_offset": 19, "end_offset": 23, "type": "<ALPHANUM>", "position": 5 },
    { "token": "fun", "start_offset": 24, "end_offset": 27, "type": "<ALPHANUM>", "position": 6 },
    { "token": "that", "start_offset": 28, "end_offset": 32, "type": "<ALPHANUM>", "position": 7 },
    { "token": "i", "start_offset": 33, "end_offset": 34, "type": "<ALPHANUM>", "position": 8 },
    { "token": "never", "start_offset": 35, "end_offset": 40, "type": "<ALPHANUM>", "position": 9 },
    { "token": "want", "start_offset": 41, "end_offset": 45, "type": "<ALPHANUM>", "position": 10 },
    { "token": "to", "start_offset": 46, "end_offset": 48, "type": "<ALPHANUM>", "position": 11 },
    { "token": "miss", "start_offset": 49, "end_offset": 53, "type": "<ALPHANUM>", "position": 12 },
    { "token": "a", "start_offset": 54, "end_offset": 55, "type": "<ALPHANUM>", "position": 13 },
    { "token": "chapter", "start_offset": 56, "end_offset": 63, "type": "<ALPHANUM>", "position": 14 },
    { "token": "or", "start_offset": 64, "end_offset": 66, "type": "<ALPHANUM>", "position": 15 },
    { "token": "episode", "start_offset": 67, "end_offset": 74, "type": "<ALPHANUM>", "position": 16 }
  ]
}
```
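For this particular English sample, Python's `\w+` regex plus lowercasing reproduces the tokens and offsets above, which makes a handy mental model. It is only an approximation: the real standard tokenizer follows Unicode text segmentation (UAX #29), splits Chinese into single characters where `\w+` would keep a whole run together, and assigns token types such as `<NUM>`, none of which this sketch models. `standard_like` is a hypothetical name.

```python
import re


def standard_like(text):
    """Approximate the standard analyzer on plain English text:
    split on non-word characters, lowercase, keep offsets and positions."""
    return [
        {"token": m.group().lower(), "start_offset": m.start(),
         "end_offset": m.end(), "position": pos}
        for pos, m in enumerate(re.finditer(r"\w+", text))
    ]


text = "1-Piece that is so much fun that I never want to miss a chapter or episode"
toks = standard_like(text)
print([t["token"] for t in toks][:4])  # ['1', 'piece', 'that', 'is']
print(toks[-1])  # {'token': 'episode', 'start_offset': 67, 'end_offset': 74, 'position': 16}
```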

simple analyzer

The simple analyzer splits the text into terms at every character that is not a letter, and lowercases all terms.

```
POST localhost:9200/_analyze
{
  "analyzer": "simple",
  "text": "1-Piece that is so much fun that I never want to miss a chapter or episode!"
}
```

The difference from the standard analyzer is that the number is gone (the result below is truncated after `fun`):

```json
{
  "tokens": [
    { "token": "piece", "start_offset": 2, "end_offset": 7, "type": "word", "position": 0 },
    { "token": "that", "start_offset": 8, "end_offset": 12, "type": "word", "position": 1 },
    { "token": "is", "start_offset": 13, "end_offset": 15, "type": "word", "position": 2 },
    { "token": "so", "start_offset": 16, "end_offset": 18, "type": "word", "position": 3 },
    { "token": "much", "start_offset": 19, "end_offset": 23, "type": "word", "position": 4 },
    { "token": "fun", "start_offset": 24, "end_offset": 27, "type": "word", "position": 5 }
  ]
}
```
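The simple analyzer's rule fits in one regular expression: keep runs of letters and lowercase them. A minimal sketch, assuming Python's Unicode letter class (`[^\W\d_]`, i.e. word characters minus digits and underscore) is close enough to Lucene's notion of a letter:

```python
import re


def simple_like(text):
    # Break on anything that is not a letter, then lowercase each run of letters.
    return [m.group().lower() for m in re.finditer(r"[^\W\d_]+", text)]


print(simple_like("1-Piece that is so much Fun !"))
# ['piece', 'that', 'is', 'so', 'much', 'fun']
```

Because digits count as separators, a string such as `a1b` yields two tokens, `a` and `b`.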

whitespace analyzer

The whitespace analyzer splits the text into terms at whitespace characters only; digits, letter case, and punctuation are left untouched.

```
POST localhost:9200/_analyze
{
  "analyzer": "whitespace",
  "text": "1-Piece that is so much Fun !"
}
```

Result:

```json
{
  "tokens": [
    { "token": "1-Piece", "start_offset": 0, "end_offset": 7, "type": "word", "position": 0 },
    { "token": "that", "start_offset": 8, "end_offset": 12, "type": "word", "position": 1 },
    { "token": "is", "start_offset": 13, "end_offset": 15, "type": "word", "position": 2 },
    { "token": "so", "start_offset": 16, "end_offset": 18, "type": "word", "position": 3 },
    { "token": "much", "start_offset": 19, "end_offset": 23, "type": "word", "position": 4 },
    { "token": "Fun", "start_offset": 24, "end_offset": 27, "type": "word", "position": 5 },
    { "token": "!", "start_offset": 28, "end_offset": 29, "type": "word", "position": 6 }
  ]
}
```
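Splitting on whitespace while keeping character offsets can be sketched with `re.finditer(r"\S+")`; on the sample sentence it reproduces the tokens and offsets above. This is an illustration of the rule, not the Lucene tokenizer itself.

```python
import re


def whitespace_like(text):
    # Split only on whitespace; case, digits, and punctuation are kept as-is.
    return [(m.group(), m.start(), m.end()) for m in re.finditer(r"\S+", text)]


print(whitespace_like("1-Piece that is so much Fun !"))
# [('1-Piece', 0, 7), ('that', 8, 12), ('is', 13, 15), ('so', 16, 18), ('much', 19, 23), ('Fun', 24, 27), ('!', 28, 29)]
```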

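stop analyzer

The stop analyzer is very similar to the simple analyzer. The only difference is that it also removes stop words, using the English stop-word list by default. `stopwords` is a predefined list of stop words, such as the, a, an, this, of, and at.

```
POST localhost:9200/_analyze
{
  "analyzer": "stop",
  "text": "1-Piece that is so much Fun !"
}
```

Here `that` and `is` are removed, while the remaining tokens keep their original positions:

```json
{
  "tokens": [
    { "token": "piece", "start_offset": 2, "end_offset": 7, "type": "word", "position": 0 },
    { "token": "so", "start_offset": 16, "end_offset": 18, "type": "word", "position": 3 },
    { "token": "much", "start_offset": 19, "end_offset": 23, "type": "word", "position": 4 },
    { "token": "fun", "start_offset": 24, "end_offset": 27, "type": "word", "position": 5 }
  ]
}
```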
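The stop analyzer can be sketched as "simple analyzer, then drop stop words", counting positions before the removal so that gaps remain, as in the result above. The stop-word set below is Lucene's default English list as I understand it; treat it as an assumption and check it against your Elasticsearch version.

```python
import re

# Assumed to match Lucene's default English stop words; verify for your version.
ENGLISH_STOP_WORDS = {
    "a", "an", "and", "are", "as", "at", "be", "but", "by", "for", "if", "in",
    "into", "is", "it", "no", "not", "of", "on", "or", "such", "that", "the",
    "their", "then", "there", "these", "they", "this", "to", "was", "will", "with",
}


def stop_like(text, stop_words=ENGLISH_STOP_WORDS):
    """Simple-analyzer tokens minus stop words, as (token, position) pairs.
    Positions are assigned before removal, which leaves gaps."""
    letters = [m.group().lower() for m in re.finditer(r"[^\W\d_]+", text)]
    return [(tok, pos) for pos, tok in enumerate(letters) if tok not in stop_words]


print(stop_like("1-Piece that is so much Fun !"))
# [('piece', 0), ('so', 3), ('much', 4), ('fun', 5)]
```

Keeping the gaps matters for phrase queries: a phrase that spanned a removed stop word still sees its remaining terms at the correct distance.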

language analyzer

Analyzers for specific languages, for example `english`. Built-in languages: arabic, armenian, basque, bengali, brazilian, bulgarian, catalan, cjk, czech, danish, dutch, english, finnish, french, galician, german, greek, hindi, hungarian, indonesian, irish, italian, latvian, lithuanian, norwegian, persian, portuguese, romanian, russian, sorani, spanish, swedish, turkish.

```
POST localhost:9200/_analyze
{
  "analyzer": "english",
  "text": "1-Piece that is so much Fun !"
}
```

The stop words are removed and `piece` is stemmed to `piec`:

```json
{
  "tokens": [
    { "token": "1", "start_offset": 0, "end_offset": 1, "type": "<NUM>", "position": 0 },
    { "token": "piec", "start_offset": 2, "end_offset": 7, "type": "<ALPHANUM>", "position": 1 },
    { "token": "so", "start_offset": 16, "end_offset": 18, "type": "<ALPHANUM>", "position": 4 },
    { "token": "much", "start_offset": 19, "end_offset": 23, "type": "<ALPHANUM>", "position": 5 },
    { "token": "fun", "start_offset": 24, "end_offset": 27, "type": "<ALPHANUM>", "position": 6 }
  ]
}
```

pattern analyzer

Splits the text into terms using a regular expression; the default pattern is `\W+` (runs of non-word characters).

```
POST localhost:9200/_analyze
{
  "analyzer": "pattern",
  "text": "1-Piece that is so much Fun !"
}
```

Result:

```json
{
  "tokens": [
    { "token": "1", "start_offset": 0, "end_offset": 1, "type": "word", "position": 0 },
    { "token": "piece", "start_offset": 2, "end_offset": 7, "type": "word", "position": 1 },
    { "token": "that", "start_offset": 8, "end_offset": 12, "type": "word", "position": 2 },
    { "token": "is", "start_offset": 13, "end_offset": 15, "type": "word", "position": 3 },
    { "token": "so", "start_offset": 16, "end_offset": 18, "type": "word", "position": 4 },
    { "token": "much", "start_offset": 19, "end_offset": 23, "type": "word", "position": 5 },
    { "token": "fun", "start_offset": 24, "end_offset": 27, "type": "word", "position": 6 }
  ]
}
```
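The pattern analyzer's behaviour (split on a regex, lowercase, drop empty pieces) can be sketched with `re.split`. Elasticsearch compiles the pattern as a Java regular expression; the sketch assumes Python's `re` behaves the same for `\W+` on this ASCII sample, which holds here but not necessarily for non-ASCII text, where Java's `\W` is ASCII-only by default.

```python
import re


def pattern_like(text, pattern=r"\W+", lowercase=True):
    # Split on the regex, lowercase, and drop the empty strings that
    # re.split produces at leading/trailing separators.
    parts = re.split(pattern, text)
    return [p.lower() if lowercase else p for p in parts if p]


print(pattern_like("1-Piece that is so much Fun !"))
# ['1', 'piece', 'that', 'is', 'so', 'much', 'fun']
```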

Using an analyzer

1. Create an index

```
PUT localhost:9200/test
{
  "settings": {
    "analysis": {
      "analyzer": {
        "my_analyzer": {
          "type": "whitespace"
        }
      }
    }
  },
  "mappings": {
    "properties": {
      "name":  { "type": "keyword" },
      "role":  { "type": "text" },
      "skill": { "type": "text" },
      "desc":  { "type": "text", "analyzer": "my_analyzer" }
    }
  }
}
```

2. Create a document

```
PUT localhost:9200/test/_doc/1
{
  "name": "路飞",
  "role": "船长",
  "skill": "橡胶巨人手枪",
  "desc": "Luffy is funny and crazy!"
}
```

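3. Search

```
POST localhost:9200/test/_search
{
  "query": {
    "match": {
      "desc": "crazy"
    }
  }
}
```

This finds nothing. Searching for `crazy!` instead:

```
POST localhost:9200/test/_search
{
  "query": {
    "match": {
      "desc": "crazy!"
    }
  }
}
```

This one finds the document.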
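To see why only the second query matches, the sketch below simulates `match` on the `desc` value with two tokenizers: a whitespace split standing in for `my_analyzer`, and a lowercased `\w+` split as a rough stand-in for the standard analyzer that `desc` would get without the custom setting. It relies on one fact about `match`: the query string is analyzed with the field's analyzer, the same one used at index time. The helper names are hypothetical.

```python
import re


def whitespace_tokens(text):
    # my_analyzer (type whitespace): split on spaces only, keep case and punctuation.
    return text.split()


def standard_tokens(text):
    # Rough standard-analyzer stand-in: lowercase word runs, punctuation dropped.
    return re.findall(r"\w+", text.lower())


def match_hits(analyzer, field_value, query):
    # Analyze both sides with the same analyzer, then OR the query tokens.
    indexed = set(analyzer(field_value))
    return any(tok in indexed for tok in analyzer(query))


desc = "Luffy is funny and crazy!"
for q in ["crazy", "crazy!"]:
    print(q, match_hits(whitespace_tokens, desc, q), match_hits(standard_tokens, desc, q))
# crazy False True
# crazy! True True
```

With the whitespace analyzer, matching is also case-sensitive: a query for `Crazy!` would miss as well.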

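Because `desc` uses the whitespace analyzer, `crazy!` is indexed as a single term including the exclamation mark, so only the query `crazy!` matches it.

Chinese analyzers

smartCN: a simple analyzer for Chinese or mixed Chinese-English text (available as the official `analysis-smartcn` plugin).

IK: a smarter, friendlier Chinese analyzer (a third-party plugin).

How to install and use them in Docker is left for you to look up.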
