Activation Functions

Posted by yhl_leo


This series of articles is written by @yhl_leo. Please credit the source when reposting.

Article link: http://blog.csdn.net/yhl_leo/article/details/56488640

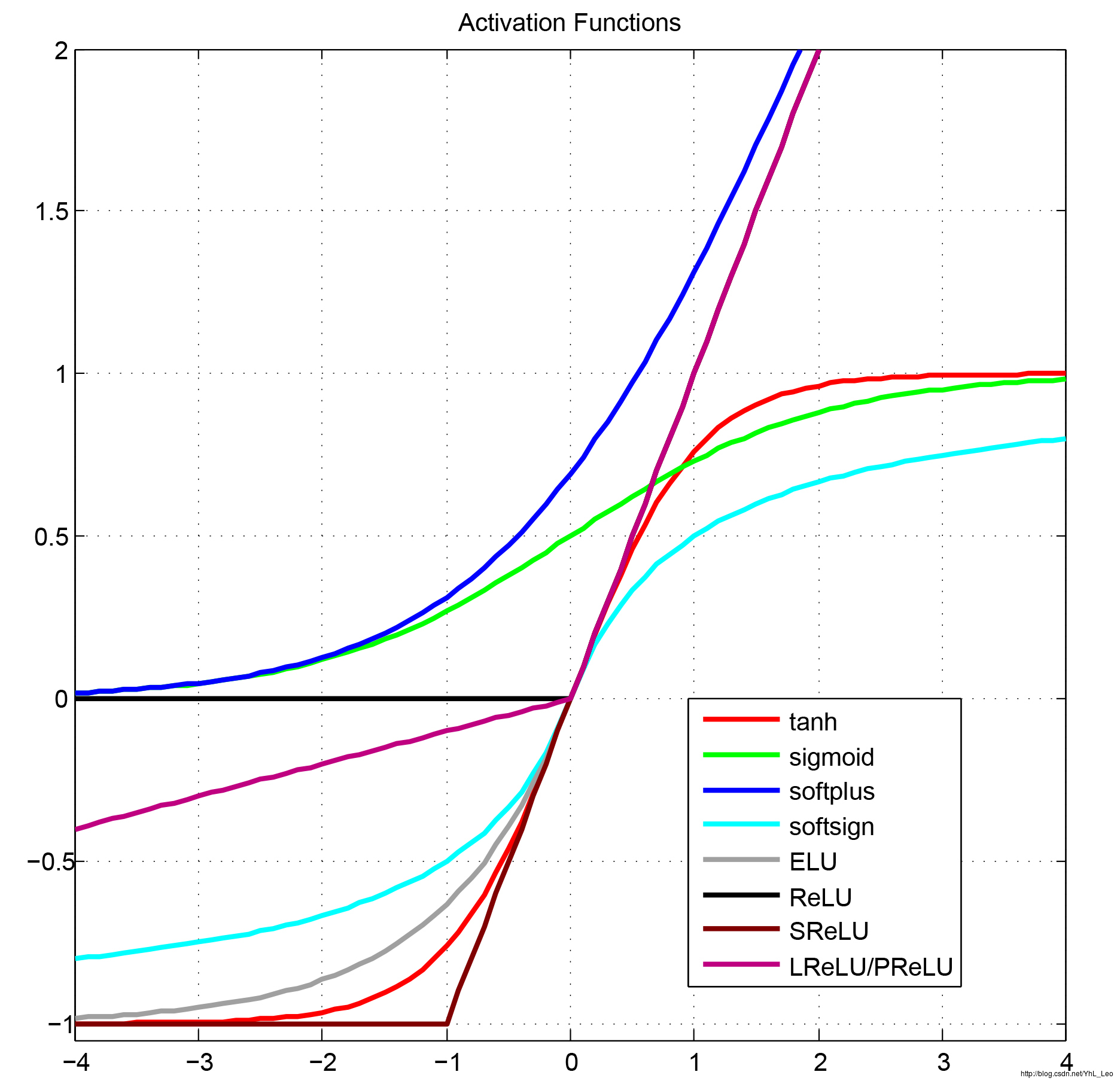

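This is a brief roundup of the activation functions proposed so far in deep learning, grouped into 3 categories according to their differentiability.

The papers and related materials have been uploaded to GitHub: yhlleo/Activations.

Smooth nonlinearities: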

- tanh: Efficient BackProp, Neural Networks 1998

  $f(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$

- sigmoid: Efficient BackProp, Neural Networks 1998

  $f(x) = \frac{1}{1 + e^{-x}}$

- softplus: Incorporating Second-Order Functional Knowledge for Better Option Pricing, NIPS 2001

  $f(x) = \ln(1 + e^{x})$

- softsign:

  $f(x) = \frac{x}{|x| + 1}$

- ELU: Fast and Accurate Deep Network Learning by Exponential Linear Units, ICLR 2016

  $f(x) = \begin{cases} x, & x > 0 \\ \alpha (e^{x} - 1), & x \le 0 \end{cases}, \quad \alpha = 1.0$
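The smooth functions listed above can be sketched in plain Python as follows. This is only an illustrative sketch, not code from the original post or its GitHub repository: the function names are chosen here for readability, and the numerically stable rewrites (the sign split in `sigmoid`, `log1p` in `softplus`, `expm1` in `elu`) are an assumption about how one might want to evaluate the formulas safely for large inputs.

```python
import math


def tanh(x: float) -> float:
    # (e^x - e^-x) / (e^x + e^-x); math.tanh avoids overflow for large |x|.
    return math.tanh(x)


def sigmoid(x: float) -> float:
    # 1 / (1 + e^-x), split by sign so that exp() never overflows.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def softplus(x: float) -> float:
    # ln(1 + e^x), rewritten as max(x, 0) + ln(1 + e^-|x|) for stability.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def softsign(x: float) -> float:
    # x / (|x| + 1)
    return x / (abs(x) + 1.0)


def elu(x: float, alpha: float = 1.0) -> float:
    # x for x > 0, alpha * (e^x - 1) otherwise; alpha = 1.0 as in the list above.
    return x if x > 0 else alpha * math.expm1(x)
```

All five functions take a single scalar; applying them to a vector is a matter of mapping them over its elements.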

Continuous but not everywhere differentiable:

- ReLU: Deep Sparse Rectifier Neural Networks, AISTATS 2011

  $f(x) = \max(0, x)$

- ReLU6: tf.nn.relu6

  $f(x) = \min(\max(0, x), 6)$

- SReLU: Shift Rectified Linear Unit

  $f(x) = \max(-1, x)$

- Leaky ReLU: Rectifier Nonlinearities Improve Neural Network Acoustic Models, ICML 2013

  $f(x) = \max(\alpha x, x), \quad \alpha = 0.01$

- PReLU: Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification, arXiv 2015

  $f(x) = \max(\alpha x, x), \quad \alpha \in [0, 1)$, where $\alpha$ is learned

- RReLU: Empirical Evaluation of Rectified Activations in Convolutional Network, arXiv 2015

  $f(x) = \max(\alpha x, x), \quad \alpha \sim U(l, u), \quad l < u \text{ and } l, u \in [0, 1)$

- CReLU: Understanding and Improving Convolutional Neural Networks via Concatenated Rectified Linear Units, arXiv 2016

  $f(x) = \mathrm{concat}(\mathrm{relu}(x), \mathrm{relu}(-x))$
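As a rough illustration of the piecewise-linear family above, the formulas can be written directly in Python. The names and signatures below are hypothetical and do not come from the original post or from any library. In particular, `prelu` simply takes `alpha` as an argument (in a real network it would be a trained parameter), `rrelu` requires the caller to supply the bounds `lower` and `upper`, and `one_sided_slopes` is a helper added here only to show the kink at zero.

```python
import random


def relu(x: float) -> float:
    return max(0.0, x)


def relu6(x: float) -> float:
    return min(max(0.0, x), 6.0)


def srelu(x: float) -> float:
    # Shifted ReLU: the lower bound is -1 instead of 0.
    return max(-1.0, x)


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    return max(alpha * x, x)


def prelu(x: float, alpha: float) -> float:
    # Same form as leaky ReLU; alpha in [0, 1) would be learned during training.
    return max(alpha * x, x)


def rrelu(x: float, lower: float, upper: float, rng=random) -> float:
    # alpha is drawn from U(lower, upper) on every call (training-time behaviour).
    alpha = rng.uniform(lower, upper)
    return max(alpha * x, x)


def crelu(xs: list) -> list:
    # Concatenates relu(x) and relu(-x), doubling the output length.
    return [relu(x) for x in xs] + [relu(-x) for x in xs]


def one_sided_slopes(f, x0: float, h: float = 1e-6) -> tuple:
    # Left and right difference quotients of f at x0.
    left = (f(x0) - f(x0 - h)) / h
    right = (f(x0 + h) - f(x0)) / h
    return left, right
```

Calling `one_sided_slopes(relu, 0.0)` gives a left slope of 0 and a right slope of 1, which is why ReLU is continuous at the origin but not differentiable there.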

Discrete:

- NReLU: Rectified Linear Units Improve Restricted Boltzmann Machines, ICML 2010

  $f(x) = \max(0, x + N(0, \sigma(x)))$

- Noisy Activation Functions: Noisy Activation Functions, ICML 2016
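A minimal sketch of the NReLU formula above, under two assumptions that the list does not spell out: $\sigma(x)$ is read as the logistic sigmoid, and it is treated as the variance of the Gaussian noise (so the standard deviation passed to the sampler is its square root). The function names and the `rng` parameter are introduced here for illustration only.

```python
import math
import random


def sigmoid(x: float) -> float:
    # Logistic sigmoid, split by sign so that exp() never overflows.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def nrelu(x: float, rng=random) -> float:
    # max(0, x + N(0, sigmoid(x))), with sigmoid(x) assumed to be the variance.
    std = math.sqrt(sigmoid(x))
    return max(0.0, x + rng.gauss(0.0, std))
```

Passing a seeded generator such as `random.Random(0)` as `rng` makes the samples reproducible. For strongly negative inputs the noise variance approaches zero and the output is almost always 0; for large positive inputs the output fluctuates around `x` with a variance close to 1.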

A quick plot of some of the activation function curves:

References:

- http://cs224d.stanford.edu/lecture_notes/LectureNotes3.pdf

- https://www.tensorflow.org/api_guides/python/nn

- http://www.cnblogs.com/rgvb178/p/6055213.html
