以金融证券游戏电商等案例详解SQL强化

Posted 黑马程序员官方

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了以金融证券游戏电商等案例详解SQL强化相关的知识,希望对你有一定的参考价值。

SQL强化

SQL执行顺序

--举例:

select

a.sex,

b.city,

count(1) as cnt,

sum(salary) as sum1

from table1 a

join table2 b on a.id=b.id

where a.name=b.name

group by a.sex,b.city

having cnt>=2

order by a.sex,b.city

limit 10

--或者是

select distinct

a.sex,

b.city,

a.age

from table1 a

join table2 b on a.id=b.id

where a.name=b.name

order by a.sex,b.city

limit 10

上面的SQL语句的执行顺序是: from (去加载table1 和 table2这2个表 ) -> join -> on -> where -> group by->select 后面的聚合函数count,sum -> having -> distinct -> order by -> limit

--on 和where的先后顺序讨论

--下面用left join 各得到结果,结果不一样。

--下面可知,先执行on,再执行where

select *

from table1 a

left join table2 b

on a.id=b.id

where a.name=b.name;

--下面的条数可能会比上面多。

select *

from table1 a

left join table2 b

on a.id=b.id

and a.name=b.name;

--下面用inner join 各得到结果,结果是一样的

select *

from table1 a

join table2 b

on a.id=b.id

where a.name=b.name;

select *

from table1 a

join table2 b

on a.id=b.id

and a.name=b.name;

hivesql与sparkSQL的区别:

子查询hive必须起别名,SparkSQL可以不用起别名

group by xx,yy,hive不用能用别名,spark可以用别名

hive不支持临时视图和缓存表,SparkSQL都支持

--用SparkSQL的临时视图 use interview_db; create or replace temporary view t_view1 as select *, if(month=1,amount,0) as a1, if(month=2,amount,0) as a2, if(month=3,amount,0) as a3, if(month=4,amount,0) as a4 from table2;select year,

sum(a1) as m1,

sum(a2) as m2,

sum(a3) as m3,

sum(a4) as m4

from t_view1

group by year;–使用SparkSQL的缓存表

cache table cached1 as

select *,

if(month=1,amount,0) as a1,

if(month=2,amount,0) as a2,

if(month=3,amount,0) as a3,

if(month=4,amount,0) as a4

from table2;select * from cached1;

select year,

sum(a1) as m1,

sum(a2) as m2,

sum(a3) as m3,

sum(a4) as m4

from cached1

group by year;* 爆炸函数,hive不支持explode与普通字段联合使用,需要用侧视图分开,SparkSQL支持联合使用 * ```sql use interview_db; select qq,game1 from tableB lateral view explode(split(game,'_')) view1 as game1 ; --spark还支持这样,但是hive不支持: select qq,explode(split(game,'_')) game1 from tableB ;

sparkSQL支持300多种函数,hiveSQL支持200多种函数。sparkSQL函数比hiveSQL要多。

- 比如SparkSQL有sequence函数,hive就没有

-

先配置环境

-

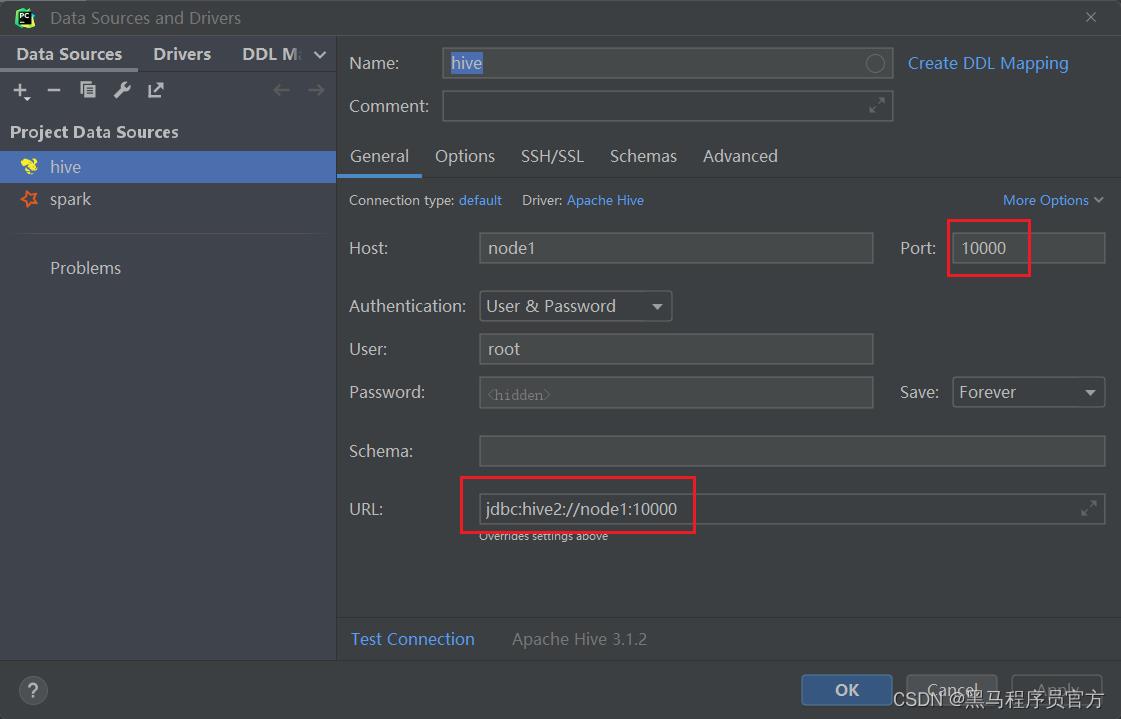

在pycharm或datagrip或idea中配置hive数据源。也可以配置一个sparkSQL数据源,来加快速度。

-

如果配置hive数据源:

-

需要提前启动hdfs和yarn,hive的metastore,hive的hiveserver2

-

#启动hdfs和yarn start-all.sh # hive的metastore nohup /export/server/hive/bin/hive --service metastore 2>&1 > /tmp/hive-metastore.log & #hive的hiveserver2 #hiveserver2开启后,等过2分钟后才能生效。 nohup /export/server/hive/bin/hive --service hiveserver2 2>&1 > /tmp/hive-hiveserver2.log &

-

- 如果遇到下面的问题

-

解决办法

-

hive/conf/hive-env.sh中加入 export HADOOP_CLIENT_OPTS=" -Xmx512m" export HADOOP_HEAPSIZE=1024 改完重启hiveserver2

-

-

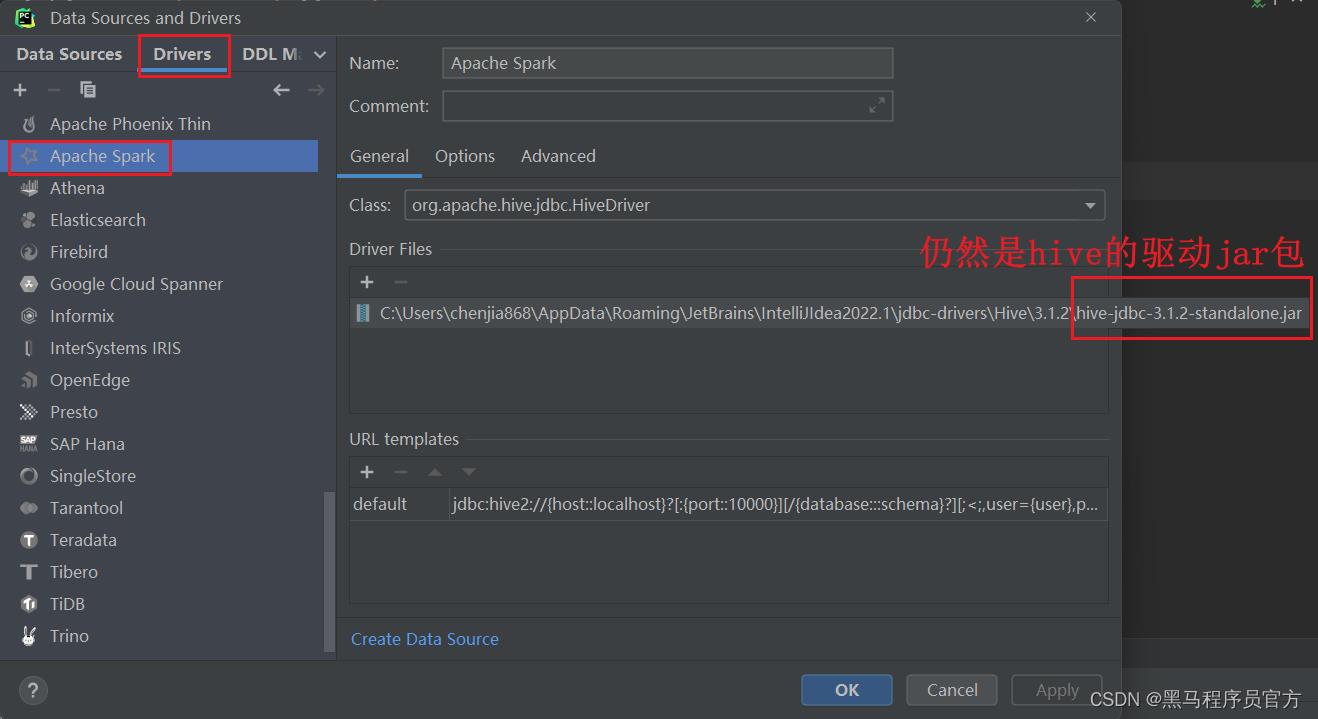

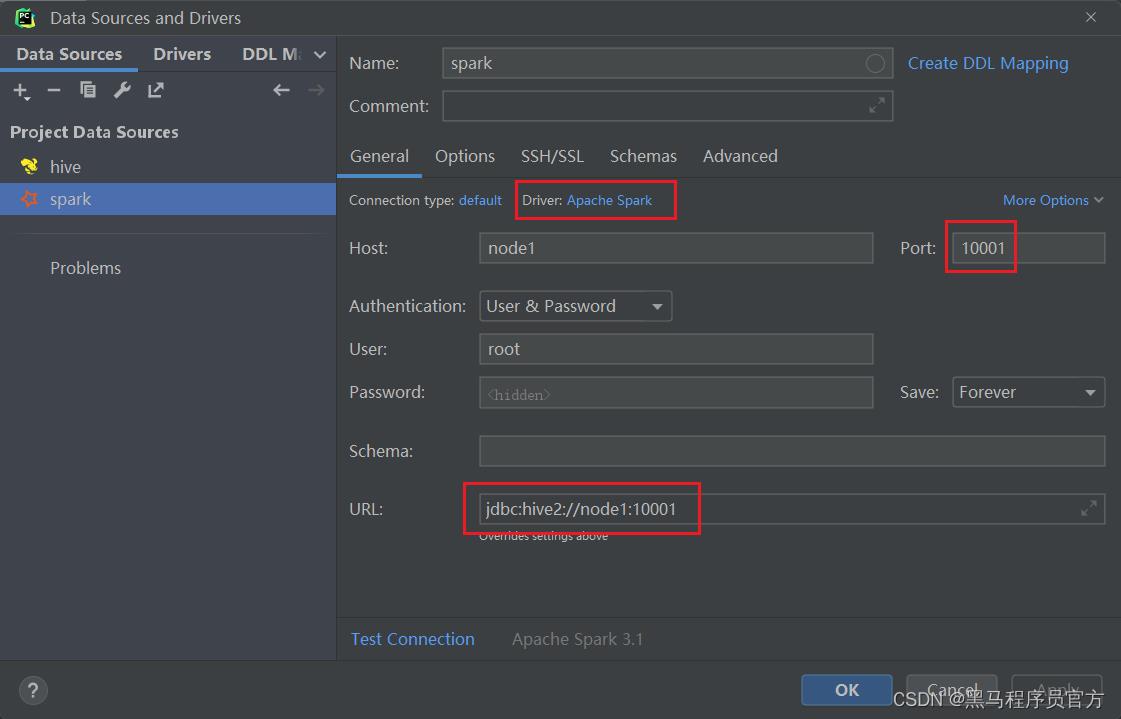

如果配置SparkSQL数据源

-

需要提前启动hdfs,hive的metastore,Spark的Thriftserver服务。

-

#启动hdfs和yarn start-all.sh # hive的metastore nohup /export/server/hive/bin/hive --service metastore 2>&1 > /tmp/hive-metastore.log & #Spark的Thriftserver服务 /export/server/spark/sbin/start-thriftserver.sh \\ --hiveconf hive.server2.thrift.port=10001 \\ --hiveconf hive.server2.thrift.bind.host=node1 \\ --master local[*] -

下面是spark3集成hive3需要的jar包,如果是spark2集成hive2,则jar包不一样。

-

-

show databases ;

create database if not exists test_sql;

use test_sql;

-- 一些语句会走 MapReduce,所以慢。 可以开启本地化执行的优化。

set hive.exec.mode.local.auto=true;-- (默认为false)

--第1题:访问量统计

CREATE TABLE test_sql.test1 (

userId string,

visitDate string,

visitCount INT )

ROW format delimited FIELDS TERMINATED BY "\\t";

INSERT overwrite TABLE test_sql.test1

VALUES

( 'u01', '2017/1/21', 5 ),

( 'u02', '2017/1/23', 6 ),

( 'u03', '2017/1/22', 8 ),

( 'u04', '2017/1/20', 3 ),

( 'u01', '2017/1/23', 6 ),

( 'u01', '2017/2/21', 8 ),

( 'u02', '2017/1/23', 6 ),

( 'u01', '2017/2/22', 4 );

select *,

sum(sum1) over(partition by userid order by month1 /*rows between unbounded preceding and current row*/ ) as `累积`

from

(select userid,

date_format(replace(visitdate,'/','-'),'yyyy-MM') as month1,

sum(visitcount) sum1

from test_sql.test1

group by userid,

date_format(replace(visitdate,'/','-'),'yyyy-MM')) as t;

-- 第2题:电商场景TopK统计

CREATE TABLE test_sql.test2 (

user_id string,

shop string )

ROW format delimited FIELDS TERMINATED BY '\\t';

INSERT INTO TABLE test_sql.test2 VALUES

( 'u1', 'a' ),

( 'u2', 'b' ),

( 'u1', 'b' ),

( 'u1', 'a' ),

( 'u3', 'c' ),

( 'u4', 'b' ),

( 'u1', 'a' ),

( 'u2', 'c' ),

( 'u5', 'b' ),

( 'u4', 'b' ),

( 'u6', 'c' ),

( 'u2', 'c' ),

( 'u1', 'b' ),

( 'u2', 'a' ),

( 'u2', 'a' ),

( 'u3', 'a' ),

( 'u5', 'a' ),

( 'u5', 'a' ),

( 'u5', 'a' );

--(1)每个店铺的UV(访客数)

-- UV和PV

-- PV是访问当前网站所有的次数

-- UV是访问当前网站的客户数(需要去重)

--(2)每个店铺访问次数top3的访客信息。输出店铺名称、访客id、访问次数

select shop,

count(distinct user_id) as uv

from test_sql.test2 group by shop ;

--上面的拆解来看,等价于

--distinct后可以接多个字段,表示联合去重

select shop,

count(user_id) as uv

from

(select distinct shop,

user_id

from test_sql.test2 ) as t

group by shop ;

--也等价于

select shop,

count(user_id) as uv

from

(select shop,

user_id

from test_sql.test2 group by shop, user_id) as t

group by shop ;

select * from

(select *,

row_number() over (partition by shop order by cnt desc) as rn

from

(select shop,user_id,count(1) as cnt from test_sql.test2 group by shop,user_id ) as t) t2

where t2.rn<=3;

-- 第3题:订单量统计

CREATE TABLE test_sql.test3 (

dt string,

order_id string,

user_id string,

amount DECIMAL ( 10, 2 ) )

ROW format delimited FIELDS TERMINATED BY '\\t';

INSERT overwrite TABLE test_sql.test3 VALUES

('2017-01-01','10029028','1000003251',33.57),

('2017-01-01','10029029','1000003251',33.57),

('2017-01-01','100290288','1000003252',33.57),

('2017-02-02','10029088','1000003251',33.57),

('2017-02-02','100290281','1000003251',33.57),

('2017-02-02','100290282','1000003253',33.57),

('2017-11-02','10290282','100003253',234),

('2018-11-02','10290284','100003243',234);

-- (1)给出 2017年每个月的订单数、用户数、总成交金额。

-- (2)给出2017年11月的新客数(指在11月才有第一笔订单)

select date_format(dt,'yyyy-MM') as month1,

count(distinct order_id) as cnt1,

count(distinct user_id) as cnt2,

sum(amount) as amt

from test_sql.test3

where year(dt)=2017

group by date_format(dt,'yyyy-MM');

select count(user_id) cnt from

(select user_id,

min(date_format(dt,'yyyy-MM')) min_month

from test3 group by user_id) as t where min_month='2017-11';

--统计每个月的新客户数

select min_month,

count(user_id) cnt

from (select user_id,

min(date_format(dt, 'yyyy-MM')) min_month

from test3

group by user_id) as t

group by min_month;

-- 第4题:大数据排序统计

CREATE TABLE test_sql.test4user

(user_id string,name string,age int);

CREATE TABLE test_sql.test4log

(user_id string,url string);

INSERT INTO TABLE test_sql.test4user VALUES('001','u1',10),

('002','u2',15),

('003','u3',15),

('004','u4',20),

('005','u5',25),

('006','u6',35),

('007','u7',40),

('008','u8',45),

('009','u9',50),

('0010','u10',65);

INSERT INTO TABLE test_sql.test4log VALUES('001','url1'),

('002','url1'),

('003','url2'),

('004','url3'),

('005','url3'),

('006','url1'),

('007','url5'),

('008','url7'),

('009','url5'),

('0010','url1');

select * from test_sql.test4user ;

select * from test_sql.test4log ;

--有一个5000万的用户文件(user_id,name,age),

-- 一个2亿记录的用户看电影的记录文件(user_id,url),根据年龄段观看电影的次数进行排序?

--取整函数有 round,floor,ceil

select *,

round(x,0) as r,--四舍五入

floor(x) as f,--向下取整

ceil(x) as c--向上取整

from

(select 15/10 as x union all

select 18/10 as x union all

select 24/10 as x union all

select 27/10 as x ) as t;

select type,

sum(cnt) as sum1

from

(select *,

concat(floor(age/10)*10,'-',floor(age/10)*10+10) as type

from test_sql.test4user as a

-- join前最好提前减小数据量

join (select user_id,count(url) as cnt from test_sql.test4log group by user_id) as b

on a.user_id=b.user_id) as t

group by type

order by sum(cnt) desc;

-- 第5题:活跃用户统计

CREATE TABLE test5(

dt string,

user_id string,

age int)

ROW format delimited fields terminated BY ',';

INSERT overwrite TABLE test_sql.test5 VALUES ('2019-02-11','test_1',23),

('2019-02-11','test_2',19),

('2019-02-11','test_3',39),

('2019-02-11','test_1',23),

('2019-02-11','test_3',39),

('2019-02-11','test_1',23),

('2019-02-12','test_2',19),

('2019-02-13','test_1',23),

('2019-02-15','test_2',19),

('2019-02-16','test_2',19);

select * from test_sql.test5 order by dt,user_id;

--有日志如下,请写出代码求得所有用户和活跃用户的总数及平均年龄。(活跃用户指连续两天都有访问记录的用户)

-- type 总数 平均年龄

-- '所有用户' 3 27

-- '活跃用户' 1 19

with t1 as (select distinct dt, user_id,age from test_sql.test5),

t2 as (select *,row_number() over (partition by user_id order by dt) as rn from t1 ),

t3 as (select *,date_sub(dt,rn) as dt2 from t2),

t4 as (select dt2,user_id,age,count(1) cnt from t3 group by dt2,user_id,age),

t5 as (select * from t4 where cnt>=2),

t6 as (select distinct user_id,age from t5)

select '所有用户' as type, count(user_id) cnt,avg(age) as avg_age

from (select distinct user_id,age from test_sql.test5) t union all

select '活跃用户' as type, count(user_id) cnt,avg(age) as avg_age from t6;

-- 用思路2来分析连续2天登录

with t1 as (select distinct dt, user_id from test_sql.test5),

t2 as (select *,

date_add(dt,1) as dt2,

lead(dt,1)over(partition by user_id order by dt) as dt3

from t1)

select count(distinct user_id) from t2 where dt2=dt3;

-- 第6题:电商购买金额统计实战

CREATE TABLE test_sql.test6 (

userid string,

money decimal(10,2),

paymenttime string,

orderid string);

INSERT INTO TABLE test_sql.test6 VALUES(以上是关于以金融证券游戏电商等案例详解SQL强化的主要内容,如果未能解决你的问题,请参考以下文章