k8s --> 19 k8s cluster outage

Posted FikL-09-19


I. Master node

1. Run on the master node

kubectl get node

kubectl get fails with: Unable to connect to the server: dial tcp 10.20.2.224:6443: connect: no route to host

II. Troubleshooting

1. Check port 6443 (api-server)

[mss@z0rzpsap9003 ~]$ netstat -lntp |grep 6443

(No info could be read for "-p": geteuid()=1001 but you should be root.)

tcp6 0 0 :::6443 :::* LISTEN -
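The netstat call above ran as a non-root user, which is why the PID/program column shows only "-". As a minimal sketch, the same check can be scripted by parsing listener output from stdin. The function name port_is_listening is hypothetical and not part of the original post.

```shell
#!/usr/bin/env bash
# port_is_listening PORT: reads `netstat -lnt` or `ss -lnt` style output on
# stdin and succeeds if some LISTEN socket is bound to PORT.
port_is_listening() {
  local port="$1"
  awk -v port="$port" '
    /LISTEN/ {
      for (i = 1; i <= NF; i++) {
        # a local address field ends in ":PORT", e.g. ":::6443" or "*:6443"
        if ($i ~ (":" port "$")) { found = 1; exit }
      }
    }
    END { exit(found ? 0 : 1) }
  '
}
```

For example, `netstat -lnt | port_is_listening 6443 && echo "api-server port is open"`. A listening port only shows that the process is bound; it says nothing about whether clients can reach the address they are configured to use.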

2. Check the docker and kubelet services

[root@z0rzpsap9003 ~]# systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)

Active: active (running) since Tue 2022-08-09 17:14:45 CST; 2 months 3 days ago

Docs: https://docs.docker.com

Main PID: 1213 (dockerd)

Tasks: 43

Memory: 1.6G

CGroup: /system.slice/docker.service

Hint: Some lines were ellipsized, use -l to show in full.

[root@z0rzpsap9003 ~]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Wed 2022-10-12 16:20:50 CST; 4h 12min ago

Docs: https://kubernetes.io/docs/

Main PID: 211732 (kubelet)

Tasks: 42

Memory: 83.5M

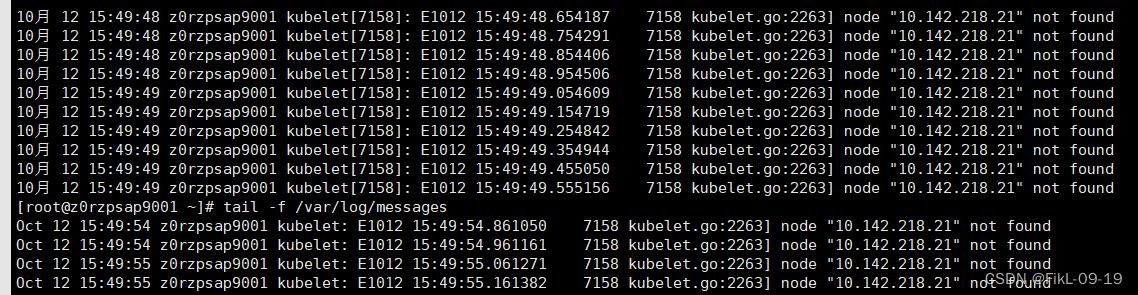

3. Check the logs

[root@z0rzpsap9003 log]# tail -f /var/log/messages

Oct 9 03:20:01 z0rzpsap9003 kubelet: E1009 03:20:01.852901 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:01 z0rzpsap9003 kubelet: E1009 03:20:01.953018 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:02 z0rzpsap9003 kubelet: E1009 03:20:02.053132 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:02 z0rzpsap9003 kubelet: E1009 03:20:02.153240 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:02 z0rzpsap9003 kubelet: E1009 03:20:02.253357 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:02 z0rzpsap9003 Keepalived_vrrp[1294]: /etc/keepalived/keepalived_checkkubeapiserver.sh exited due to signal 15

Oct 9 03:20:02 z0rzpsap9003 kubelet: E1009 03:20:02.353485 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:02 z0rzpsap9003 kubelet: E1009 03:20:02.453609 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:02 z0rzpsap9003 kubelet: E1009 03:20:02.553696 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:02 z0rzpsap9003 kubelet: E1009 03:20:02.653820 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:02 z0rzpsap9003 kubelet: E1009 03:20:02.753930 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:02 z0rzpsap9003 kubelet: E1009 03:20:02.854088 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:02 z0rzpsap9003 kubelet: E1009 03:20:02.954273 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:03 z0rzpsap9003 kubelet: E1009 03:20:03.054377 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:03 z0rzpsap9003 kubelet: E1009 03:20:03.154482 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:03 z0rzpsap9003 kubelet: E1009 03:20:03.254588 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:03 z0rzpsap9003 kubelet: E1009 03:20:03.354694 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:03 z0rzpsap9003 kubelet: E1009 03:20:03.454810 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:03 z0rzpsap9003 kubelet: E1009 03:20:03.554902 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:03 z0rzpsap9003 kubelet: E1009 03:20:03.655019 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:03 z0rzpsap9003 kubelet: E1009 03:20:03.755149 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:03 z0rzpsap9003 kubelet: E1009 03:20:03.855256 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:03 z0rzpsap9003 kubelet: E1009 03:20:03.955363 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:04 z0rzpsap9003 kubelet: E1009 03:20:04.055466 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:04 z0rzpsap9003 kubelet: E1009 03:20:04.155553 137657 kubelet.go:2263] node "10.142.218.23" not found

Oct 9 03:20:04 z0rzpsap9003 kubelet: E1009 03:20:04.241147 137657 reflector.go:153] k8s.io/kubernetes/pkg/kubelet/config/apiserver.go:46: Failed to list *v1.Pod: Get https://10.142.218.241:6443/api/v1/pods?fieldSelector=spec.nodeName%3D10.142.218.23&limit=500&resourceVersion=0: dial tcp 10.142.218.241:6443: connect: no route to host

# The logs show kubelet repeatedly reporting node "not found", along with errors when requesting https://10.142.218.241:6443/api/v1/
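With this many repeated lines, the one line that names the unreachable endpoint is easy to miss. The following is a rough sketch of a filter that condenses such a log; summarize_kubelet_errors is a hypothetical helper, and it assumes the message wording seen in the excerpt above.

```shell
#!/usr/bin/env bash
# summarize_kubelet_errors: reads syslog lines on stdin and prints
#   1) how many kubelet 'node "..." not found' errors were seen
#   2) each distinct host:port that failed with "no route to host"
summarize_kubelet_errors() {
  awk '
    /kubelet/ && /node "[^"]*" not found/ { not_found++ }
    /no route to host/ {
      # the failing endpoint follows "dial tcp " (9 characters) in the message
      if (match($0, /dial tcp [0-9.]+:[0-9]+/)) {
        endpoint = substr($0, RSTART + 9, RLENGTH - 9)
        unreachable[endpoint] = 1
      }
    }
    END {
      printf "node-not-found errors: %d\n", not_found
      for (e in unreachable) printf "unreachable endpoint: %s\n", e
    }
  '
}
```

It could be run as `tail -n 500 /var/log/messages | summarize_kubelet_errors`. The "not found" errors are a symptom here: kubelet cannot look up its own node because the api-server address it is configured with is unreachable.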

4. Log in to the master machine

# 1. Look for the VIP on the master node: it cannot be found

[root@z0rzpsap9003 log]# ip -4 a |grep 10.

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

inet 10.142.218.23/24 brd 10.142.218.255 scope global eth0 # same on all three masters
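Note that in `grep 10.` the dot matches any character, so the pattern also hits "qlen 1000", which is why the lo and eth0 header lines appear in the output. A stricter check can compare addresses exactly. This is a sketch only: has_address is a hypothetical helper, and treating 10.142.218.241 (the endpoint from the kubelet log) as the VIP is an assumption.

```shell
#!/usr/bin/env bash
# has_address IP: reads `ip -4 -o addr show` output on stdin and succeeds only
# if IP is assigned to some interface (exact match, prefix length ignored).
has_address() {
  local ip="$1"
  awk -v ip="$ip" '
    {
      for (i = 1; i < NF; i++) {
        if ($i == "inet") {
          split($(i + 1), parts, "/")   # "10.142.218.23/24" -> "10.142.218.23"
          if (parts[1] == ip) { found = 1; exit }
        }
      }
    }
    END { exit(found ? 0 : 1) }
  '
}
```

For example, `ip -4 -o addr show | has_address 10.142.218.241 || echo "VIP is not held by this node"`. Running it on each master shows quickly whether any node currently holds the VIP.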

# 2. Check the keepalived service

[root@z0rzpsap9003 log]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2022-10-12 16:25:26 CST; 4h 12min ago # the service is running normally

Process: 220321 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 220323 (keepalived)

Tasks: 3

# 3. Inspect the keepalived check script

[root@z0rzpsap9003 ~]# cat keepalived_checkkubeapiserver.sh

#!/bin/bash

# Read the default service account token through the admin kubeconfig

TOKEN=$(kubectl --kubeconfig=/root/admin.conf get secrets -o jsonpath=".items[?(@.metadata.annotations['kubernetes\\.io/service-account\\.name']=='default')].data.token"|base64 -d)

# Request /api from the local api-server and keep only the HTTP status code

STATUS_CODE=$(curl -I -m 10 -o /dev/null -s -w '%{http_code}' -X GET https://localhost:6443/api --header "Authorization: Bearer $TOKEN" --insecure)

if test "$STATUS_CODE" -eq 200

then

exit 0

else

exit 1

fi

# Running curl -I -m 10 -o /dev/null -s -w '%{http_code}' -X GET https://localhost:6443/api --header "Authorization: Bearer $TOKEN" --insecure by hand returns a status other than 200
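The check script has two separate steps that can fail: fetching the token with kubectl, and the curl probe itself. If the token lookup fails, $TOKEN is empty and the probe will typically return a non-200 status even though the api-server is up. A minimal sketch that keeps the two steps apart is shown below; the function names is_healthy_status and probe_apiserver are hypothetical, and only the curl options already used in the script are assumed.

```shell
#!/usr/bin/env bash
# is_healthy_status CODE: succeeds only when CODE is exactly 200.
# An empty value, or "000" (what curl prints when it could not connect),
# counts as unhealthy.
is_healthy_status() {
  [[ "$1" == "200" ]]
}

# probe_apiserver URL [TOKEN]: prints the HTTP status code returned by URL.
# The bearer header is only sent when a non-empty token is supplied.
probe_apiserver() {
  local url="$1" token="${2:-}"
  local args=(-s -m 10 -o /dev/null -w '%{http_code}' --insecure)
  if [[ -n "$token" ]]; then
    args+=(--header "Authorization: Bearer $token")
  fi
  curl "${args[@]}" "$url"
}
```

A manual run might look like `code=$(probe_apiserver https://localhost:6443/api "$TOKEN"); echo "token length: ${#TOKEN}, status: $code"; is_healthy_status "$code"`. Printing the token length first makes it obvious when the non-200 status comes from the kubectl step rather than from the api-server.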

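5. Fix

# 1. keepalived service

Reading the keepalived check script shows that it probes https://localhost:6443 (but when the script actually runs, the request goes to vip:6443)

# 2. Trace what the keepalived check script actually calls

Change the server: entry to https://localhost:6443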
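The post does not say which file holds the server: entry. Since the check script calls kubectl with --kubeconfig=/root/admin.conf, a plausible reading is that this kubeconfig pointed at the VIP, so the token lookup failed whenever no node held the VIP. Under that assumption, the edit can be sketched as follows; both helper names are hypothetical.

```shell
#!/usr/bin/env bash
# kubeconfig_server FILE: prints the value of the first "server:" entry.
kubeconfig_server() {
  awk '$1 == "server:" { print $2; exit }' "$1"
}

# set_kubeconfig_server FILE URL: rewrites every "server:" entry to URL,
# keeping the original indentation and leaving a FILE.bak backup behind.
# URL must not contain "|" or "&", which are special in the sed expression.
set_kubeconfig_server() {
  local file="$1" url="$2"
  sed -i.bak -E "s|^([[:space:]]*server:[[:space:]]*).*|\1${url}|" "$file"
}
```

Usage would be `kubeconfig_server /root/admin.conf` to see the current value, then `set_kubeconfig_server /root/admin.conf https://localhost:6443`. This only helps if the api-server certificate is valid for localhost, which is an assumption about this cluster rather than something the post states.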

# 3. Run the keepalived check script again # (it now succeeds)

# 4. Restart the keepalived service

systemctl restart keepalived

# 5. Verify that the cluster is healthy

kubectl get node
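To make the final verification explicit, the node list can be filtered for anything that is not Ready. This is a small sketch that parses the default column layout of `kubectl get node --no-headers` (NAME, STATUS, ...); not_ready_nodes is a hypothetical helper.

```shell
#!/usr/bin/env bash
# not_ready_nodes: reads `kubectl get node --no-headers` output on stdin and
# prints the name of every node whose status does not start with "Ready".
# A cordoned node ("Ready,SchedulingDisabled") is still treated as Ready.
not_ready_nodes() {
  awk 'NF >= 2 {
    split($2, status, ",")
    if (status[1] != "Ready") print $1
  }'
}
```

For example, `kubectl get node --no-headers | not_ready_nodes` prints nothing once every node has recovered. It is also worth confirming on the masters that one of them holds the VIP again after keepalived restarts.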
