Deploying Alluxio 2.8.1 with Helm 3 on K8S 1.24

Posted by 虎鲸不是鱼


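Preface

Alluxio official website: https://www.alluxio.io/

I won't go over what Alluxio is here; spend a little time on the official website and it becomes clear. What I care about more is what it can do.

We already started using Alluxio for free last year, as a hot-data cache and a unified file layer: https://mp.weixin.qq.com/s/kBetfi_LxAQGwgMBpI70ow

You can also search for the article by its title:

【Alluxio & Large Bank】Technology Empowers Finance: Industrial Bank Presses the "Big Data Processing Accelerator" (original title: 【Alluxio&大型银行】科技赋能金融,兴业银行按下“大数据处理加速键”)

First, a quick look at the software Primo Ramdisk: https://www.romexsoftware.com/zh-cn/primo-ramdisk/overview.html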

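Used as a RAM disk, Alluxio works much like Primo Ramdisk: data persisted to disks and file systems is read into memory ahead of time, and applications then read it straight from the memory of the Alluxio cluster, which is naturally blazingly fast. I once set up a 4 GB RAM disk just for copying files off USB drives. Ever wondered why Windows 10 takes up so much memory right after boot? It is essentially preloading hot data from the disk [ahem, and a pointed shout-out here to the SN550 "cold data" slowdown scandal]. This speeds up reads and writes and improves I/O, while also reducing reads and writes on the SSD and extending its lifespan.

Caching hot data in memory with Alluxio to speed up reads and writes works on the same principle. Because data loaded into memory sits closer to the compute nodes, it also significantly reduces network bandwidth usage and lowers the load on switches [for pay-as-you-go cloud servers such as ECS, that saves real money].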
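To make the RAM-disk analogy concrete, here is a minimal single-machine sketch for Linux. It assumes `/dev/shm` is a writable tmpfs (RAM-backed) mount, which is the case on most distributions and in default Docker containers; the directory names and the 4 MiB size are illustrative only. It warms a file from ordinary disk into memory once and then serves reads from the in-memory copy, which is the idea Alluxio applies across a whole cluster (with eviction and consistency handling that this toy version does not attempt).

```bash
#!/usr/bin/env bash
# Toy RAM-disk sketch: preload a file from disk into tmpfs and read it from memory.
# Assumptions: Linux, /dev/shm is a writable tmpfs; paths and sizes are illustrative only.
set -euo pipefail

COLD_DIR=$(mktemp -d)                       # stands in for data persisted on disk
HOT_DIR=$(mktemp -d -p /dev/shm)            # RAM-backed directory on tmpfs
trap 'rm -rf "$COLD_DIR" "$HOT_DIR"' EXIT   # tmpfs contents occupy RAM until deleted

# 4 MiB of sample "cold" data on disk.
head -c $((4 * 1024 * 1024)) /dev/urandom > "$COLD_DIR/data.bin"

# Warm the cache once: copy the file into memory.
cp "$COLD_DIR/data.bin" "$HOT_DIR/data.bin"

# Subsequent reads are served from the in-memory copy instead of the disk.
read_hot() { cat "$HOT_DIR/data.bin"; }

# Sanity check: the in-memory copy is byte-identical to the original.
if cmp -s "$COLD_DIR/data.bin" "$HOT_DIR/data.bin"; then
  echo "preloaded $(wc -c < "$HOT_DIR/data.bin") bytes into $HOT_DIR"
fi
```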

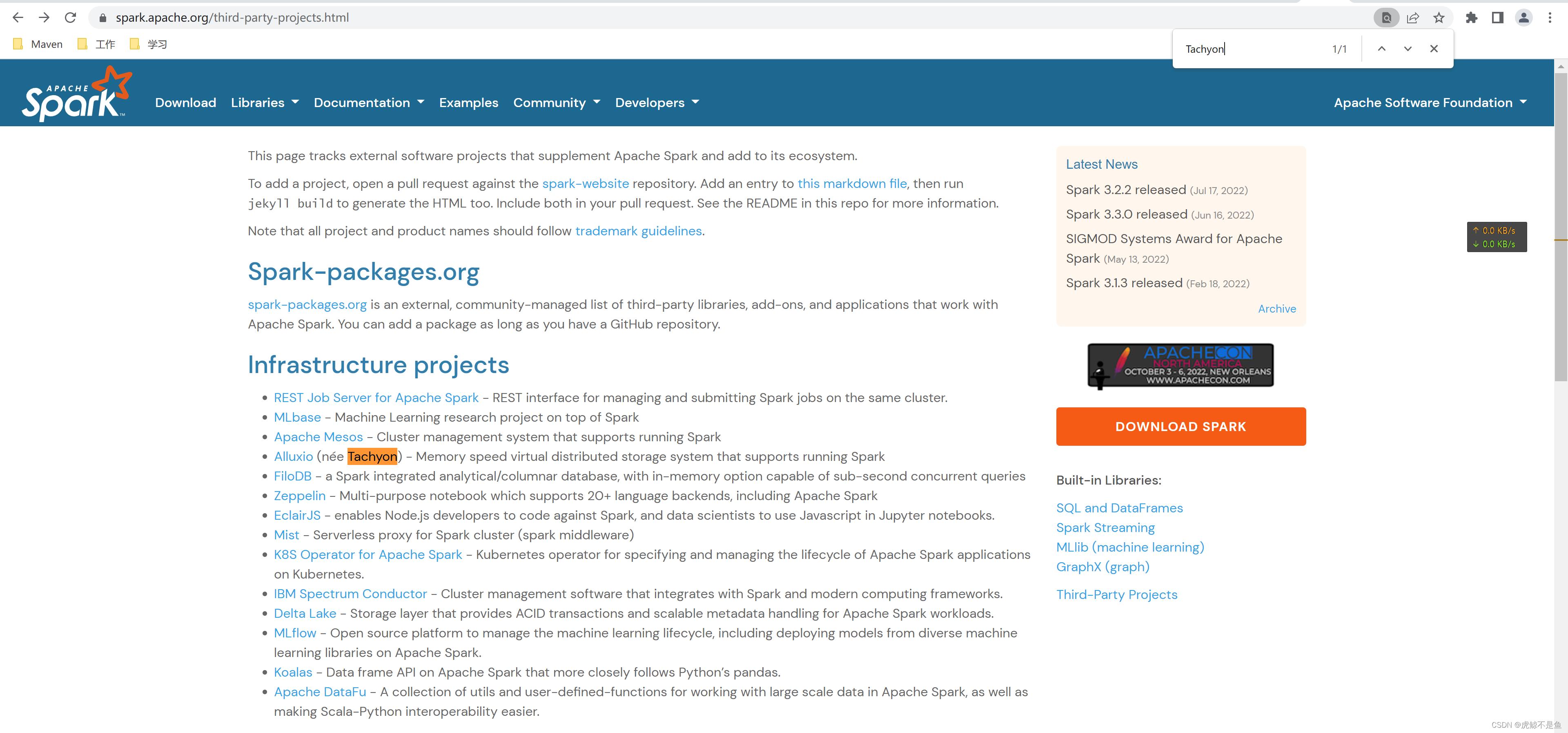

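This capability also lets Alluxio act as a remote shuffle service (RSS) for compute engines such as Spark, and that is in fact how Alluxio got its start [back when it was still called Tachyon, it served as an off-heap cache for Spark]: https://spark.apache.org/third-party-projects.html

Alluxio still shows up in Spark's list of third-party projects to this day.

Another important feature is the unified file layer. Because Alluxio is compatible with many file system protocols, storage such as Amazon S3 object storage, HDFS, or NFS can all be mounted into Alluxio and accessed through a single interface. This hides the differences between file systems and makes working with heterogeneous data sources a good deal easier; developers no longer need to learn the APIs of many different file systems, because the Alluxio API alone covers them all.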
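As a rough illustration of the unified namespace, the sketch below mounts an HDFS directory and an S3 prefix side by side under one Alluxio root, using the `alluxio fs mount` CLI run inside the master Pod through `kubectl exec`. Everything concrete here is an assumption: the Pod name `alluxio-master-0`, the container name `alluxio-master`, that the `alluxio` launcher is on the container's `PATH`, and the HDFS host, bucket and credentials, which are placeholders. By default it is a dry run that only prints the commands; set `DRY_RUN=0` to execute them against a real cluster.

```bash
#!/usr/bin/env bash
# Sketch: mount two different storage systems under one Alluxio namespace.
# Every concrete name below (Pod, container, HDFS host, bucket, keys) is a hypothetical placeholder.
set -euo pipefail

MASTER_POD=${MASTER_POD:-alluxio-master-0}   # assumed name of the first master Pod
DRY_RUN=${DRY_RUN:-1}                        # 1 = only print the commands, 0 = execute them

# Print the command in dry-run mode, otherwise run it.
run() {
  if [ "$DRY_RUN" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Run an Alluxio CLI command inside the master container.
alluxio_cli() {
  run kubectl exec "$MASTER_POD" -c alluxio-master -- alluxio "$@"
}

# HDFS directory -> /hdfs in the Alluxio namespace
alluxio_cli fs mount /hdfs hdfs://namenode:8020/data

# S3 prefix -> /s3, with credentials passed as mount options
alluxio_cli fs mount \
  --option aws.accessKeyId=EXAMPLE_ACCESS_KEY \
  --option aws.secretKey=EXAMPLE_SECRET_KEY \
  /s3 s3://example-bucket/warehouse

# Both mount points now appear side by side under the same root
alluxio_cli fs ls /
```

Applications then address `/hdfs/...` and `/s3/...` through the same Alluxio client, without caring which storage system sits underneath.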

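I already have quite a few VMs, and in the spirit of being able to suspend them and pick them up whenever I like, I'm sticking with a single-node setup again. This time it is deployed on K8S.

Official documentation: https://docs.alluxio.io/os/user/stable/cn/deploy/Running-Alluxio-On-Kubernetes.html

The rest of this post mainly follows that official document to install Alluxio 2.8.1 on K8S 1.24.

Current environment

VM and K8S environment: https://lizhiyong.blog.csdn.net/article/details/126236516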

```
root@zhiyong-ksp1:/home/zhiyong# kubectl get pods -owide --all-namespaces
NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
argocd devops-argocd-application-controller-0 1/1 Running 0 3h27m 10.233.107.78 zhiyong-ksp1 <none> <none>
argocd devops-argocd-applicationset-controller-5864597bfc-pf8ht 1/1 Running 0 3h27m 10.233.107.79 zhiyong-ksp1 <none> <none>
argocd devops-argocd-dex-server-f885fb4b4-fkpls 1/1 Running 0 3h27m 10.233.107.77 zhiyong-ksp1 <none> <none>
argocd devops-argocd-notifications-controller-54b744556f-f4g24 1/1 Running 0 3h27m 10.233.107.74 zhiyong-ksp1 <none> <none>
argocd devops-argocd-redis-556fdd5876-xftmq 1/1 Running 0 3h27m 10.233.107.73 zhiyong-ksp1 <none> <none>
argocd devops-argocd-repo-server-5dbf9b87db-9tw2c 1/1 Running 0 3h27m 10.233.107.76 zhiyong-ksp1 <none> <none>
argocd devops-argocd-server-6f9898cc75-s7jkm 1/1 Running 0 3h27m 10.233.107.75 zhiyong-ksp1 <none> <none>
istio-system istiod-1-11-2-54dd699c87-99krn 1/1 Running 0 23h 10.233.107.41 zhiyong-ksp1 <none> <none>
istio-system jaeger-collector-67cfc55477-7757f 1/1 Running 5 (22h ago) 22h 10.233.107.61 zhiyong-ksp1 <none> <none>
istio-system jaeger-operator-fccc48b86-vtcr8 1/1 Running 0 23h 10.233.107.47 zhiyong-ksp1 <none> <none>
istio-system jaeger-query-8497bdbfd7-csbts 2/2 Running 0 22h 10.233.107.67 zhiyong-ksp1 <none> <none>
istio-system kiali-75c777bdf6-xhbq7 1/1 Running 0 22h 10.233.107.58 zhiyong-ksp1 <none> <none>
istio-system kiali-operator-c459985f7-sttfs 1/1 Running 0 23h 10.233.107.38 zhiyong-ksp1 <none> <none>
kube-system calico-kube-controllers-f9f9bbcc9-2v7lm 1/1 Running 2 (22h ago) 9d 10.233.107.45 zhiyong-ksp1 <none> <none>
kube-system calico-node-4mgc7 1/1 Running 2 (22h ago) 9d 192.168.88.20 zhiyong-ksp1 <none> <none>
kube-system coredns-f657fccfd-2gw7h 1/1 Running 2 (22h ago) 9d 10.233.107.39 zhiyong-ksp1 <none> <none>
kube-system coredns-f657fccfd-pflwf 1/1 Running 2 (22h ago) 9d 10.233.107.43 zhiyong-ksp1 <none> <none>
kube-system kube-apiserver-zhiyong-ksp1 1/1 Running 2 (22h ago) 9d 192.168.88.20 zhiyong-ksp1 <none> <none>
kube-system kube-controller-manager-zhiyong-ksp1 1/1 Running 2 (22h ago) 9d 192.168.88.20 zhiyong-ksp1 <none> <none>
kube-system kube-proxy-cn68l 1/1 Running 2 (22h ago) 9d 192.168.88.20 zhiyong-ksp1 <none> <none>
kube-system kube-scheduler-zhiyong-ksp1 1/1 Running 2 (22h ago) 9d 192.168.88.20 zhiyong-ksp1 <none> <none>
kube-system nodelocaldns-96gtw 1/1 Running 2 (22h ago) 9d 192.168.88.20 zhiyong-ksp1 <none> <none>
kube-system openebs-localpv-provisioner-68db4d895d-p9527 1/1 Running 1 (22h ago) 9d 10.233.107.40 zhiyong-ksp1 <none> <none>
kube-system snapshot-controller-0 1/1 Running 2 (22h ago) 9d 10.233.107.42 zhiyong-ksp1 <none> <none>
kubesphere-controls-system default-http-backend-587748d6b4-ccg59 1/1 Running 2 (22h ago) 9d 10.233.107.50 zhiyong-ksp1 <none> <none>
kubesphere-controls-system kubectl-admin-5d588c455b-82cnk 1/1 Running 2 (22h ago) 9d 10.233.107.48 zhiyong-ksp1 <none> <none>
kubesphere-devops-system devops-27679170-8nrzx 0/1 Completed 0 65m 10.233.107.90 zhiyong-ksp1 <none> <none>
kubesphere-devops-system devops-27679200-kdgvk 0/1 Completed 0 35m 10.233.107.91 zhiyong-ksp1 <none> <none>
kubesphere-devops-system devops-27679230-v9h2l 0/1 Completed 0 5m34s 10.233.107.92 zhiyong-ksp1 <none> <none>
kubesphere-devops-system devops-apiserver-6b468c95cb-9s7lz 1/1 Running 0 3h27m 10.233.107.82 zhiyong-ksp1 <none> <none>
kubesphere-devops-system devops-controller-667f8449d7-gjgj8 1/1 Running 0 3h27m 10.233.107.80 zhiyong-ksp1 <none> <none>
kubesphere-devops-system devops-jenkins-bf85c664c-c6qnq 1/1 Running 0 3h27m 10.233.107.84 zhiyong-ksp1 <none> <none>
kubesphere-devops-system s2ioperator-0 1/1 Running 0 3h27m 10.233.107.83 zhiyong-ksp1 <none> <none>
kubesphere-logging-system elasticsearch-logging-curator-elasticsearch-curator-2767784rhhk 0/1 Completed 0 23h 10.233.107.51 zhiyong-ksp1 <none> <none>
kubesphere-logging-system elasticsearch-logging-data-0 1/1 Running 0 23h 10.233.107.65 zhiyong-ksp1 <none> <none>
kubesphere-logging-system elasticsearch-logging-discovery-0 1/1 Running 0 23h 10.233.107.64 zhiyong-ksp1 <none> <none>
kubesphere-monitoring-system alertmanager-main-0 2/2 Running 4 (22h ago) 9d 10.233.107.56 zhiyong-ksp1 <none> <none>
kubesphere-monitoring-system kube-state-metrics-6d6786b44-bbb4f 3/3 Running 6 (22h ago) 9d 10.233.107.44 zhiyong-ksp1 <none> <none>
kubesphere-monitoring-system node-exporter-8sz74 2/2 Running 4 (22h ago) 9d 192.168.88.20 zhiyong-ksp1 <none> <none>
kubesphere-monitoring-system notification-manager-deployment-6f8c66ff88-pt4l8 2/2 Running 4 (22h ago) 9d 10.233.107.53 zhiyong-ksp1 <none> <none>
kubesphere-monitoring-system notification-manager-operator-6455b45546-nkmx8 2/2 Running 4 (22h ago) 9d 10.233.107.52 zhiyong-ksp1 <none> <none>
kubesphere-monitoring-system prometheus-k8s-0 2/2 Running 0 3h25m 10.233.107.85 zhiyong-ksp1 <none> <none>
kubesphere-monitoring-system prometheus-operator-66d997dccf-c968c 2/2 Running 4 (22h ago) 9d 10.233.107.37 zhiyong-ksp1 <none> <none>
kubesphere-system ks-apiserver-6b9bcb86f4-hsdzs 1/1 Running 2 (22h ago) 9d 10.233.107.55 zhiyong-ksp1 <none> <none>
kubesphere-system ks-console-599c49d8f6-ngb6b 1/1 Running 2 (22h ago) 9d 10.233.107.49 zhiyong-ksp1 <none> <none>
kubesphere-system ks-controller-manager-66747fcddc-r7cpt 1/1 Running 2 (22h ago) 9d 10.233.107.54 zhiyong-ksp1 <none> <none>
kubesphere-system ks-installer-5fd8bd46b8-dzhbb 1/1 Running 2 (22h ago) 9d 10.233.107.46 zhiyong-ksp1 <none> <none>
kubesphere-system minio-746f646bfb-hcf5c 1/1 Running 0 3h32m 10.233.107.71 zhiyong-ksp1 <none> <none>
kubesphere-system openldap-0 1/1 Running 1 (3h30m ago) 3h32m 10.233.107.69 zhiyong-ksp1 <none> <none>
root@zhiyong-ksp1:/home/zhiyong#
```
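Rather than eyeballing a listing like the one above, a small filter can flag anything that is not healthy. This is a sketch with a hypothetical helper name (`unhealthy_pods`): it reads `kubectl get pods -A --no-headers` output, whose first four columns are NAMESPACE, NAME, READY and STATUS, skips `Completed` Job Pods (such as the KubeSphere `devops-*` CronJob runs above), and prints any Pod that is not `Running` with all of its containers ready.

```bash
#!/usr/bin/env bash
# Hypothetical helper: flag Pods that are neither fully ready nor Completed.
# Input: `kubectl get pods -A --no-headers` (columns: NAMESPACE NAME READY STATUS ...).
unhealthy_pods() {
  awk '{
    split($3, ready, "/")                 # READY column, e.g. 2/2 -> ready[1]=2, ready[2]=2
    if ($4 == "Completed") next           # finished Job/CronJob Pods are expected
    if ($4 != "Running" || ready[1] != ready[2])
      print $1 "/" $2 " " $3 " " $4
  }'
}
```

Usage: `kubectl get pods -A --no-headers | unhealthy_pods` prints nothing when every Pod is healthy. Restart counts such as `2 (22h ago)` add extra fields, but only after the STATUS column, so the first four columns stay stable.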

As you can see, the Pods are currently in good shape. Next, let's look at helm:

```
root@zhiyong-ksp1:/home/zhiyong# helm
The Kubernetes package manager

Common actions for Helm:

- helm search:    search for charts
- helm pull:      download a chart to your local directory to view
- helm install:   upload the chart to Kubernetes
- helm list:      list releases of charts

Environment variables:

| Name                               | Description                                                                       |
|------------------------------------|-----------------------------------------------------------------------------------|
| $HELM_CACHE_HOME                   | set an alternative location for storing cached files.                             |
| $HELM_CONFIG_HOME                  | set an alternative location for storing Helm configuration.                       |
| $HELM_DATA_HOME                    | set an alternative location for storing Helm data.                                |
| $HELM_DEBUG                        | indicate whether or not Helm is running in Debug mode                             |
| $HELM_DRIVER                       | set the backend storage driver. Values are: configmap, secret, memory, postgres   |
| $HELM_DRIVER_SQL_CONNECTION_STRING | set the connection string the SQL storage driver should use.                      |
| $HELM_MAX_HISTORY                  | set the maximum number of helm release history.                                   |
| $HELM_NAMESPACE                    | set the namespace used for the helm operations.                                   |
| $HELM_NO_PLUGINS                   | disable plugins. Set HELM_NO_PLUGINS=1 to disable plugins.                        |
| $HELM_PLUGINS                      | set the path to the plugins directory                                             |
| $HELM_REGISTRY_CONFIG              | set the path to the registry config file.                                         |
| $HELM_REPOSITORY_CACHE             | set the path to the repository cache directory                                    |
| $HELM_REPOSITORY_CONFIG            | set the path to the repositories file.                                            |
| $KUBECONFIG                        | set an alternative Kubernetes configuration file (default "~/.kube/config")       |
| $HELM_KUBEAPISERVER                | set the Kubernetes API Server Endpoint for authentication                         |
| $HELM_KUBECAFILE                   | set the Kubernetes certificate authority file.                                    |
| $HELM_KUBEASGROUPS                 | set the Groups to use for impersonation using a comma-separated list.             |
| $HELM_KUBEASUSER                   | set the Username to impersonate for the operation.                                |
| $HELM_KUBECONTEXT                  | set the name of the kubeconfig context.                                           |
| $HELM_KUBETOKEN                    | set the Bearer KubeToken used for authentication.                                 |

Helm stores cache, configuration, and data based on the following configuration order:

- If a HELM_*_HOME environment variable is set, it will be used
- Otherwise, on systems supporting the XDG base directory specification, the XDG variables will be used
- When no other location is set a default location will be used based on the operating system

By default, the default directories depend on the Operating System. The defaults are listed below:

| Operating System | Cache Path                | Configuration Path             | Data Path               |
|------------------|---------------------------|--------------------------------|-------------------------|
| Linux            | $HOME/.cache/helm         | $HOME/.config/helm             | $HOME/.local/share/helm |
| macOS            | $HOME/Library/Caches/helm | $HOME/Library/Preferences/helm | $HOME/Library/helm      |
| Windows          | %TEMP%\helm               | %APPDATA%\helm                 | %APPDATA%\helm          |

Usage:
  helm [command]

Available Commands:
  completion  generate autocompletion scripts for the specified shell
  create      create a new chart with the given name
  dependency  manage a chart's dependencies
  env         helm client environment information
  get         download extended information of a named release
  help        Help about any command
  history     fetch release history
  install     install a chart
  lint        examine a chart for possible issues
  list        list releases
  package     package a chart directory into a chart archive
  plugin      install, list, or uninstall Helm plugins
  pull        download a chart from a repository and (optionally) unpack it in local directory
  repo        add, list, remove, update, and index chart repositories
  rollback    roll back a release to a previous revision
  search      search for a keyword in charts
  show        show information of a chart
  status      display the status of the named release
  template    locally render templates
  test        run tests for a release
  uninstall   uninstall a release
  upgrade     upgrade a release
  verify      verify that a chart at the given path has been signed and is valid
  version     print the client version information

Flags:
      --debug                       enable verbose output
  -h, --help                        help for helm
      --kube-apiserver string       the address and the port for the Kubernetes API server
      --kube-as-group stringArray   group to impersonate for the operation, this flag can be repeated to specify multiple groups.
      --kube-as-user string         username to impersonate for the operation
      --kube-ca-file string         the certificate authority file for the Kubernetes API server connection
      --kube-context string         name of the kubeconfig context to use
      --kube-token string           bearer token used for authentication
      --kubeconfig string           path to the kubeconfig file
  -n, --namespace string            namespace scope for this request
      --registry-config string      path to the registry config file (default "/root/.config/helm/registry.json")
      --repository-cache string     path to the file containing cached repository indexes (default "/root/.cache/helm/repository")
      --repository-config string    path to the file containing repository names and URLs (default "/root/.config/helm/repositories.yaml")

Use "helm [command] --help" for more information about a command.
root@zhiyong-ksp1:/home/zhiyong#
```

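KubeSphere has thoughtfully pre-installed helm, which saves developers who are not professional ops engineers a fair amount of trouble.

Next, we can install with Helm 3 [Helm 2 is not supported from Alluxio 2.3 onward]. Alluxio can of course also be installed with plain kubectl; see the official documentation for that approach.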
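Since the chart only works with Helm 3, a quick pre-flight check can refuse to continue on an older client. This is a minimal sketch relying on `helm version --short`, which on Helm 3 prints something like `v3.x.y+g<commit>`; the function name `require_helm3` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: the Alluxio chart needs Helm 3, so stop on anything else.
require_helm3() {
  local v
  if ! v=$(helm version --short 2>/dev/null); then
    echo "helm is not installed or not working" >&2
    return 1
  fi
  case "$v" in
    v3.*) echo "OK: Helm $v" ;;
    *)    echo "Helm 3 is required, found: $v" >&2; return 1 ;;
  esac
}
```

Usage: `require_helm3 && helm repo add alluxio-charts https://alluxio-charts.storage.googleapis.com/openSource/2.8.1`.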

Deploying Alluxio 2.8.1 with Helm

Add the Helm repo for the Alluxio Helm chart

```
root@zhiyong-ksp1:/home/zhiyong# helm repo add alluxio-charts https://alluxio-charts.storage.googleapis.com/openSource/2.8.1
"alluxio-charts" has been added to your repositories
root@zhiyong-ksp1:/home/zhiyong# helm list
NAME    NAMESPACE    REVISION    UPDATED    STATUS    CHART    APP VERSION
root@zhiyong-ksp1:/home/zhiyong#
```

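Open-source components from Chinese developers really do take China's special network environment into account. Kudos! It worked on the first try.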
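Because `helm repo add` is a one-off step, it can be handy to make it idempotent and to confirm which chart versions the repo serves before installing. A minimal sketch, assuming the repo name and URL used above; the function name `ensure_alluxio_repo` is hypothetical, while `helm repo list`, `helm repo add`, `helm repo update` and `helm search repo --versions` are standard Helm 3 subcommands.

```bash
#!/usr/bin/env bash
# Idempotent repo setup: add the Alluxio chart repo only if it is missing,
# refresh the local index, then list the chart versions the repo offers.
set -euo pipefail

REPO_NAME=alluxio-charts
REPO_URL=https://alluxio-charts.storage.googleapis.com/openSource/2.8.1

ensure_alluxio_repo() {
  # `helm repo list` prints a "NAME URL" header row. It exits non-zero when no repo
  # is configured, which (with pipefail) also leads to the "add" branch.
  if ! helm repo list 2>/dev/null \
      | awk -v n="$REPO_NAME" 'NR > 1 && $1 == n { found = 1 } END { exit !found }'; then
    helm repo add "$REPO_NAME" "$REPO_URL"
  fi
  helm repo update
  helm search repo "$REPO_NAME/alluxio" --versions
}
```

Usage: run `ensure_alluxio_repo` before each install; re-running it is harmless.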

Viewing the configuration

```
root@zhiyong-ksp1:/home/zhiyong# helm inspect values alluxio-charts/alluxio
```

```yaml
#
# The Alluxio Open Foundation licenses this work under the Apache License, version 2.0
# (the "License"). You may not use this work except in compliance with the License, which is
# available at www.apache.org/licenses/LICENSE-2.0
#
# This software is distributed on an "AS IS" basis, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND,
# either express or implied, as more fully set forth in the License.
#
# See the NOTICE file distributed with this work for information regarding copyright ownership.
#

# This should not be modified in the usual case.
fullnameOverride: alluxio

## Common ##

# Docker Image
image: alluxio/alluxio
imageTag: 2.8.1
imagePullPolicy: IfNotPresent

# Security Context
user: 1000
group: 1000
fsGroup: 1000

# Service Account
#   If not specified, Kubernetes will assign the 'default'
#   ServiceAccount used for the namespace
serviceAccount:

# Image Pull Secret
#   The secrets will need to be created externally from
#   this Helm chart, but you can configure the Alluxio
#   Pods to use the following list of secrets
# eg:
# imagePullSecrets:
#   - ecr
#   - dev
imagePullSecrets:

# Site properties for all the components
properties:
  # alluxio.user.metrics.collection.enabled: 'true'
  alluxio.security.stale.channel.purge.interval: 365d

# Recommended JVM Heap options for running in Docker
# Ref: https://developers.redhat.com/blog/2017/03/14/java-inside-docker/
# These JVM options are common to all Alluxio services
# jvmOptions:
#   - "-XX:+UnlockExperimentalVMOptions"
#   - "-XX:+UseCGroupMemoryLimitForHeap"
#   - "-XX:MaxRAMFraction=2"

# Mount Persistent Volumes to all components
# mounts:
# - name: <persistentVolume claimName>
#   path: <mountPath>

# Use labels to run Alluxio on a subset of the K8s nodes
# nodeSelector:

# A list of K8s Node taints to allow scheduling on.
# See the Kubernetes docs for more info:
# - https://kubernetes.io/docs/concepts/scheduling-eviction/taint-and-toleration/
# eg: tolerations: [ {"key": "env", "operator": "Equal", "value": "prod", "effect": "NoSchedule"} ]
# tolerations: []

## Master ##

master:
  enabled: true
  count: 1 # Controls the number of StatefulSets. For multiMaster mode increase this to >1.
  replicas: 1 # Controls #replicas in a StatefulSet and should not be modified in the usual case.
  env:
    # Extra environment variables for the master pod
    # Example:
    # JAVA_HOME: /opt/java
  args: # Arguments to Docker entrypoint
    - master-only
    - --no-format
  # Properties for the master component
  properties:
    # Example: use ROCKS DB instead of Heap
    # alluxio.master.metastore: ROCKS
    # alluxio.master.metastore.dir: /metastore
  resources:
    # The default xmx is 8G
    limits:
      cpu: "4"
      memory: "8Gi"
    requests:
      cpu: "1"
      memory: "1Gi"
  ports:
    embedded: 19200
    rpc: 19998
    web: 19999
  hostPID: false
  hostNetwork: false
  shareProcessNamespace: false
  extraContainers: []
  extraVolumeMounts: []
  extraVolumes: []
  extraServicePorts: []
  # dnsPolicy will be ClusterFirstWithHostNet if hostNetwork: true
  # and ClusterFirst if hostNetwork: false
  # You can specify dnsPolicy here to override this inference
  # dnsPolicy: ClusterFirst
  # JVM options specific to the master container
  jvmOptions:
  nodeSelector:
  # When using HA Alluxio masters, the expected startup time
  # can take over 2-3 minutes (depending on leader elections,
  # journal catch-up, etc). In that case it is recommended
  # to allow for up to at least 3 minutes with the readinessProbe,
  # though higher values may be desired for some leniancy.
  # - Note that the livenessProbe does not wait for the
  #   readinessProbe to succeed first
  #
  # eg: 3 minute startupProbe and readinessProbe
  # readinessProbe:
  #   initialDelaySeconds: 30
  #   periodSeconds: 10
  #   timeoutSeconds: 1
  #   failureThreshold: 15
  #   successThreshold: 3
  # startupProbe:
  #   initialDelaySeconds: 60
  #   periodSeconds: 30
  #   timeoutSeconds: 5
  #   failureThreshold: 4
  readinessProbe:
    initialDelaySeconds: 10
    periodSeconds: 10
    timeoutSeconds: 1
    failureThreshold: 3
    successThreshold: 1
  livenessProbe:
    initialDelaySeconds: 15
    periodSeconds: 30
    timeoutSeconds: 5
    failureThreshold: 2
  # If you are using Kubernetes 1.18+ or have the feature gate
  # for it enabled, use startupProbe to prevent the livenessProbe
  # from running until the startupProbe has succeeded
  # startupProbe:
  #   initialDelaySeconds: 15
  #   periodSeconds: 30
  #   timeoutSeconds: 5
  #   failureThreshold: 2
  tolerations: []
  podAnnotations:
  # The ServiceAccount provided here will have precedence over
  # the global `serviceAccount`
  serviceAccount:

jobMaster:
  args:
    - job-master
  # Properties for the jobMaster component
  properties:
  resources:
    limits:
      cpu: "4"
      memory: "8Gi"
    requests:
      cpu: "1"
      memory: "1Gi"
  ports:
    embedded: 20003
    rpc: 20001
    web: 20002
  # JVM options specific to the jobMaster container
  jvmOptions:
  readinessProbe:
    initialDelaySeconds: 10
    periodSeconds: 10
    timeoutSeconds: 1
    failureThreshold: 3
    successThreshold: 1
  livenessProbe:
    initialDelaySeconds: 15
    periodSeconds: 30
    timeoutSeconds: 5
    failureThreshold: 2
  # If you are using Kubernetes 1.18+ or have the feature gate
  # for it enabled, use startupProbe to prevent the livenessProbe
  # from running until the startupProbe has succeeded
  # startupProbe:
  #   initialDelaySeconds: 15
  #   periodSeconds: 30
  #   timeoutSeconds: 5
  #   failureThreshold: 2

# Alluxio supports journal type of UFS and EMBEDDED
# UFS journal with HDFS example
# journal:
#   type: "UFS"
#   ufsType: "HDFS"
#   folder: "hdfs://$hostname:$hostport/journal"
# EMBEDDED journal to /journal example
# journal:
#   type: "EMBEDDED"
#   folder: "/journal"
journal:
  # [ Required values ]
  type: "UFS" # One of "UFS" or "EMBEDDED"
  folder: "/journal" # Master journal directory or equivalent storage path
  #
  # [ Conditionally required values ]
  #
  ## [ UFS-backed journal options ]
  ## - required when using a UFS-type journal (journal.type="UFS")
  ##
  ## ufsType is one of "local" or "HDFS"
  ## - "local" results in a PV being allocated to each Master Pod as the journal
  ## - "HDFS" results in no PV allocation, it is up to you to ensure you have
  ##   properly configured the required Alluxio properties for Alluxio to access
  ##   the HDFS URI designated as the journal folder
  ufsType: "local"
  #
  ## [ K8s volume options ]
  ## - required when using an EMBEDDED journal (journal.type="EMBEDDED")
  ## - required when using a local UFS journal (journal.type="UFS" and journal.ufsType="local")
  ##
  ## volumeType controls the type of journal volume.
  volumeType: persistentVolumeClaim # One of "persistentVolumeClaim" or "emptyDir"
  ## size sets the requested storage capacity for a persistentVolumeClaim,
  ## or the sizeLimit on an emptyDir PV.
  size: 1Gi
  ### Unique attributes to use when the journal is persistentVolumeClaim
  storageClass: "standard"
  accessModes:
    - ReadWriteOnce
  ### Unique attributes to use when the journal is emptyDir
  medium: ""
  #
  # [ Optional values ]
  format: # Configuration for journal formatting job
    runFormat: false # Change to true to format journal

# You can enable metastore to use ROCKS DB instead of Heap
# metastore:
#   volumeType: persistentVolumeClaim # Options: "persistentVolumeClaim" or "emptyDir"
#   size: 1Gi
#   mountPath: /metastore
# # Attributes to use when the metastore is persistentVolumeClaim
#   storageClass: "standard"
#   accessModes:
#    - ReadWriteOnce
# # Attributes to use when the metastore is emptyDir
#   medium: ""

## Worker ##

worker:
  enabled: true
  env:
    # Extra environment variables for the worker pod
    # Example:
    # JAVA_HOME: /opt/java
  args:
    - worker-only
    - --no-format
  # Properties for the worker component
  properties:
  resources:
    limits:
      cpu: "4"
      memory: "4Gi"
    requests:
      cpu: "1"
      memory: "2Gi"
  ports:
    rpc: 29999
    web: 30000
  # hostPID requires escalated privileges
  hostPID: false
  hostNetwork: false
  shareProcessNamespace: false
  extraContainers: []
  extraVolumeMounts: []
  extraVolumes: []
  # dnsPolicy will be ClusterFirstWithHostNet if hostNetwork: true
  # and ClusterFirst if hostNetwork: false
  # You can specify dnsPolicy here to override this inference
  # dnsPolicy: ClusterFirst
  # JVM options specific to the worker container
  jvmOptions:
  nodeSelector:
  readinessProbe:
    initialDelaySeconds: 10
    periodSeconds: 10
    timeoutSeconds: 1
    failureThreshold: 3
    successThreshold: 1
  livenessProbe:
    initialDelaySeconds: 15
    periodSeconds: 30
    timeoutSeconds: 5
    failureThreshold: 2
  # If you are using Kubernetes 1.18+ or have the feature gate
  # for it enabled, use startupProbe to prevent the livenessProbe
  # from running until the startupProbe has succeeded
  # startupProbe:
  #   initialDelaySeconds: 15
  #   periodSeconds: 30
  #   timeoutSeconds: 5
  #   failureThreshold: 2
  tolerations: []
  podAnnotations:
  # The ServiceAccount provided here will have precedence over
  # the global `serviceAccount`
  serviceAccount:
  # Setting fuseEnabled to true will embed Fuse in worker process. The worker pods will
  # launch the Alluxio workers using privileged containers with `SYS_ADMIN` capability.
  # Be sure to give root access to the pod by setting the global user/group/fsGroup
  # values to `0` to turn on Fuse in worker.
  fuseEnabled: false

jobWorker:
  args:
    - job-worker
  # Properties for the jobWorker component
  properties:
  resources:
    limits:
      cpu: "4"
      memory: "4Gi"
    requests:
      cpu: "1"
      memory: "1Gi"
  ports:
    rpc: 30001
    data: 30002
    web: 30003
  # JVM options specific to the jobWorker container
  jvmOptions:
  readinessProbe:
    initialDelaySeconds: 10
    periodSeconds: 10
    timeoutSeconds: 1
    failureThreshold: 3
    successThreshold: 1
  livenessProbe:
    initialDelaySeconds: 15
    periodSeconds: 30
    timeoutSeconds: 5
    failureThreshold: 2
  # If you are using Kubernetes 1.18+ or have the feature gate
  # for it enabled, use startupProbe to prevent the livenessProbe
  # from running until the startupProbe has succeeded
  # startupProbe:
  #   initialDelaySeconds: 15
  #   periodSeconds: 30
```
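The values above are the chart defaults. On a single-node lab cluster like this one, one default worth checking before installing is the journal's `storageClass: "standard"`: if no StorageClass by that name exists, the journal PVC cannot bind and the master stays Pending. The sketch below writes a small override file and builds the install command in the form the official Helm instructions use (`helm install alluxio -f <values file> alluxio-charts/alluxio`). The class name `local` (commonly the OpenEBS LocalPV class on KubeSphere), the `alluxio` namespace and the file name are assumptions; run `kubectl get storageclass` to find the right class. By default it only prints the command; set `DRY_RUN=0` to actually install.

```bash
#!/usr/bin/env bash
# Sketch: point the journal PVC at an existing StorageClass, then install the chart.
# Assumptions: class "local", namespace "alluxio" and the file name are placeholders.
set -euo pipefail

STORAGE_CLASS=${STORAGE_CLASS:-local}        # check with: kubectl get storageclass
NAMESPACE=${NAMESPACE:-alluxio}
VALUES_FILE=${VALUES_FILE:-alluxio-values.yaml}
DRY_RUN=${DRY_RUN:-1}                        # 1 = print the helm command only, 0 = run it

# Keep the chart's default local UFS journal, but on a StorageClass that exists.
cat > "$VALUES_FILE" <<EOF
journal:
  type: "UFS"
  ufsType: "local"
  storageClass: "${STORAGE_CLASS}"
  size: 1Gi
EOF

install_cmd=(helm install alluxio -f "$VALUES_FILE" alluxio-charts/alluxio
             --namespace "$NAMESPACE" --create-namespace)

if [ "$DRY_RUN" = 1 ]; then
  echo "${install_cmd[*]}"
else
  "${install_cmd[@]}"
fi
```

Once the Pods are up, the master web UI listens on port 19999 (`master.ports.web` above); assuming the first master Pod is named `alluxio-master-0`, `kubectl port-forward -n alluxio alluxio-master-0 19999:19999` makes it reachable at http://localhost:19999.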
