Convenient OpenCV: playing a video with sound, plus generating a GIF from images

Posted 大气层煮月亮


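Preface

I have been using the OpenCV library a lot lately to process video and ran into many small problems along the way, so I am putting the finished code on the blog where I can grab it whenever I need it.

A video played through OpenCV has no sound because a video file contains separate image and audio streams, and OpenCV reads only the image frames, never the audio. All we need to do is read the audio separately and play the two at the same time.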
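Playing both streams "at the same time" holds up best when the video follows the audio clock instead of a fixed delay. The sketch below is one hedged way to compute the wait before each frame; `frame_delay_ms` and its `audio_clock_s` parameter are hypothetical names, and the audio position is assumed to come from whatever audio player is in use.

```python
def frame_delay_ms(frame_index, fps, audio_clock_s, min_delay_ms=1):
    """Return how many milliseconds to wait before showing a frame.

    frame_index   -- number of the frame about to be shown (0-based)
    fps           -- frame rate reported by the video file
    audio_clock_s -- current audio position in seconds (assumed input)
    """
    if fps <= 0:
        # Unknown frame rate: fall back to the shortest possible wait
        return min_delay_ms
    # Time at which this frame should appear on screen
    frame_time_s = frame_index / fps
    # Positive when the video is ahead of the audio, negative when it lags
    delay_ms = int(round((frame_time_s - audio_clock_s) * 1000))
    # Never return 0, because cv2.waitKey(0) would block until a key press
    return max(min_delay_ms, delay_ms)


print(frame_delay_ms(50, 25.0, 1.96))  # video slightly ahead of the audio
print(frame_delay_ms(50, 25.0, 2.50))  # video behind: show the frame at once
```

The result can be passed to `cv2.waitKey` in place of a constant interval, so small timing errors do not accumulate over a long clip.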


Features

· ❥ Video playback

· ❥ Audio playback

· ❥ Playback progress display

· ❥ Frame counting

· ❥ Pause with the space bar
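The progress display and frame counting in the list above come down to converting a frame number into a timecode. A minimal sketch of that conversion follows; `frame_to_timecode` is a hypothetical helper name, and the `HH:MM:SS.FF` layout is an assumption about the desired output.

```python
def frame_to_timecode(frame_index, fps):
    """Format a frame number as HH:MM:SS.FF for a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    # Whole seconds elapsed before this frame
    whole_seconds = int(frame_index / fps)
    # Frames left over inside the current second
    frames = int(round(frame_index - whole_seconds * fps))
    hours = whole_seconds // 3600
    minutes = whole_seconds // 60 % 60  # wrap at 60 so minutes never exceed 59
    seconds = whole_seconds % 60
    return "{:02d}:{:02d}:{:02d}.{:02d}".format(hours, minutes, seconds, frames)


print(frame_to_timecode(2290, 25))  # 00:01:31.15
```

Working from whole seconds and a frame remainder avoids the off-by-one errors that can appear when the fractional part of a floating-point second is multiplied back by the frame rate.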

Code:

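# Read and display a video with cv2

import math

import cv2
from ffpyplayer.player import MediaPlayer


def play_video(video_path, audio_play=True):
    # Open the local video file with OpenCV
    cap = cv2.VideoCapture(video_path)
    # Check whether the file was opened
    isopen = cap.isOpened()
    if not isopen:
        print("Err: Failed to open video. Exiting ...")
        cap.release()
        return
    # ffpyplayer plays the audio track of the same file
    player = MediaPlayer(video_path) if audio_play else None
    # Total number of frames in the video
    total_frame = cap.get(cv2.CAP_PROP_FRAME_COUNT)
    # Frame width
    frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    # Frame height
    frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    # Frame rate
    fps = cap.get(cv2.CAP_PROP_FPS)
    # Interval between frames in milliseconds (at least 1, since waitKey(0) blocks)
    wait = max(1, int(1000 / fps)) if fps else 1
    # Frame counter
    read_frame = 0
    # Read the video frame by frame
    while isopen:
        ret, frame = cap.read()
        if not ret:
            if read_frame < total_frame:
                # Read error
                print("Err: Can't receive frame. Exiting ...")
            else:
                # Normal end of stream
                print("Info: Stream is End")
            break
        if player is not None:
            # Drain the player's decoded frames so its queue does not fill up
            player.get_frame()
        # Increment the frame counter
        read_frame = read_frame + 1
        cv2.putText(frame, "[{}/{}]".format(str(read_frame), str(int(total_frame))), (20, 50),
                    cv2.FONT_HERSHEY_SIMPLEX, 1, (255, 255, 9), 2)
        dst = cv2.resize(frame, (1920 // 2, 1080 // 2), interpolation=cv2.INTER_CUBIC)  # window size
        # Current timecode (skipped when the frame rate is unknown)
        if fps:
            timecode_h = int(read_frame / fps / 60 / 60)
            timecode_m = int(read_frame / fps / 60) % 60
            timecode_s = read_frame / fps % 60
            s = math.modf(timecode_s)
            timecode_s = int(timecode_s)
            timecode_f = int(round(s[0] * fps))
            print("{:0>2d}:{:0>2d}:{:0>2d}.{:0>2d}".format(timecode_h, timecode_m, timecode_s, timecode_f))
        # Show the frame
        cv2.imshow('image', dst)
        # Wait between frames
        wk = cv2.waitKey(wait)
        # & 0xFF is a bitwise AND that keeps only the low byte of the key code
        keycode = wk & 0xff
        # Space bar pauses until any key is pressed
        if keycode == ord(" "):
            if player is not None:
                player.set_pause(True)
            cv2.waitKey(0)
            if player is not None:
                player.set_pause(False)
        # q quits
        if keycode == ord('q'):
            print("Info: By user Cancel ...")
            break
    # Release the capture and the audio player
    cap.release()
    if player is not None:
        player.close_player()
    # Destroy the window
    cv2.destroyAllWindows()


if __name__ == "__main__":
    # Put the path of your video file in the empty string
    play_video('', audio_play=False)

Generating a GIF from the images in a folder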

import os

import imageio


def create_gif(img_path, gif_name, duration=1.0):
    '''
    :param img_path: folder containing the images that make up the animation
    :param gif_name: string, name of the generated gif file, including the .gif suffix
    :param duration: interval between images
    :return: None
    '''
    frames = []
    # Sort the names so the frame order does not depend on os.listdir
    for image_name in sorted(os.listdir(img_path)):
        temp = os.path.join(img_path, image_name)
        print(temp)
        frames.append(imageio.imread(temp))
    imageio.mimsave(gif_name, frames, 'GIF', duration=duration)
    return


def main():
    # Put the path of the folder with the images you want to combine here
    image_path = r'C:\Users\86137\Desktop\gaitRecognition_platform\Package\Fgmask\tds_nm_03'
    gif_name = 'new.gif'
    duration = 0.08  # interval between frames: the smaller, the faster
    create_gif(image_path, gif_name, duration)


if __name__ == '__main__':
    main()
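One thing to watch in the GIF script is frame order: `os.listdir` returns names in arbitrary order, and plain alphabetical sorting puts `frame10.png` before `frame2.png`. The sketch below shows one possible natural-sort helper; `natural_key`, `ordered_frames`, and the extension list are illustrative assumptions, not part of the original script.

```python
import re


def natural_key(name):
    """Split a file name into text and number parts so 2 sorts before 10."""
    return [int(part) if part.isdigit() else part.lower()
            for part in re.split(r"(\d+)", name)]


def ordered_frames(names, extensions=(".png", ".jpg", ".jpeg", ".bmp")):
    """Keep only image files and return them in natural order."""
    images = [n for n in names if n.lower().endswith(extensions)]
    return sorted(images, key=natural_key)


print(ordered_frames(["frame10.png", "frame2.png", "frame1.png", "notes.txt"]))
# ['frame1.png', 'frame2.png', 'frame10.png']
```

Passing `ordered_frames(os.listdir(img_path))` to the loop would also skip stray non-image files that `imageio.imread` cannot open.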

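Done!

There is still a long way to go in learning OpenCV; I hope we can keep at it and improve together. Finally, if this article helped you, a like and a bookmark would be much appreciated!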

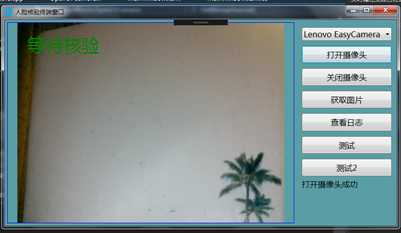

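Building a camera playback program on top of OpenCV

Preface

There are several ways to capture camera video on Windows; this article does not compare their strengths and weaknesses. OpenCV, however, is a long-established library with a strong reputation in image processing, so using it for camera video gives solid performance and stability. It also ships many image processing functions, which makes further processing of the video more convenient.

The application itself is written in WPF, so OpenCV is first wrapped in a C-language interface that the application can call. OpenCV does not provide a ready-made control for WPF, and getting the two languages to "talk" to each other is somewhat awkward, but a small workaround is enough to get camera playback working.

1 Wrapping OpenCV

OpenCV's VideoCapture class wraps the camera operations and is very simple to use.

bool open(int device);

Here device is the index of the camera device.

If several cameras are attached, how do we know which index belongs to which camera? The following function returns the list of cameras; a camera's position in list is its device index.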

int GetCameraDevices(vector<wstring>& list)
{
    ICreateDevEnum *pDevEnum = NULL;
    IEnumMoniker *pEnum = NULL;
    int deviceCounter = 0;

    CoInitialize(NULL);
    HRESULT hr = CoCreateInstance(CLSID_SystemDeviceEnum, NULL,
        CLSCTX_INPROC_SERVER, IID_ICreateDevEnum,
        reinterpret_cast<void**>(&pDevEnum));

    if (SUCCEEDED(hr))
    {
        // Create an enumerator for the video capture category.
        hr = pDevEnum->CreateClassEnumerator(
            CLSID_VideoInputDeviceCategory, &pEnum, 0);

        if (hr == S_OK)
        {
            //if (!silent)printf("SETUP: Looking For Capture Devices\n");
            IMoniker *pMoniker = NULL;
            while (pEnum->Next(1, &pMoniker, NULL) == S_OK)
            {
                IPropertyBag *pPropBag;
                hr = pMoniker->BindToStorage(0, 0, IID_IPropertyBag, (void**)(&pPropBag));
                if (FAILED(hr))
                {
                    pMoniker->Release();
                    continue; // Skip this one, maybe the next one will work.
                }

                // Find the description or friendly name.
                VARIANT varName;
                VariantInit(&varName);
                hr = pPropBag->Read(L"Description", &varName, 0);
                if (FAILED(hr)) hr = pPropBag->Read(L"FriendlyName", &varName, 0);
                if (SUCCEEDED(hr))
                {
                    hr = pPropBag->Read(L"FriendlyName", &varName, 0);
                    wstring str2 = varName.bstrVal;
                    list.push_back(str2);
                }
                // Free the string held by the VARIANT
                VariantClear(&varName);

                pPropBag->Release();
                pPropBag = NULL;
                pMoniker->Release();
                pMoniker = NULL;

                deviceCounter++;
            }

            pEnum->Release();
            pEnum = NULL;
        }

        pDevEnum->Release();
        pDevEnum = NULL;
    }
    return deviceCounter;
}

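In short, opening a camera with OpenCV is very simple.

Once the camera is open, the next step is to fetch images from it. A video is really just a sequence of images: grab 25 images per second, show each one in a control, and you have video.

Mat cameraImg;

_pCapture >> cameraImg;

The Mat class wraps the image operations. C# cannot work with a Mat directly, so the raw pixel data inside the Mat has to be passed out before C# can use the image.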

int Camera_GetImgData(INT64 handle, char* imgBuffer)
{
    CameraInfo *pCameraInfo = (CameraInfo*)handle;
    Mat cameraImg;
    *(pCameraInfo->_pCapture) >> cameraImg;
    if (!cameraImg.empty())
    {
        int height = cameraImg.rows;
        int dataLen = height * cameraImg.step;
        memcpy(imgBuffer, cameraImg.data, dataLen);
        return 0;
    }
    else
        return 1;
}

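cameraImg.data holds the image data, and its size can be computed from the image height and the step (the number of bytes per image row). After C# calls this function, imgBuffer contains the image data; once that data has been processed, it can be shown in a control.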
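The size calculation described above is plain arithmetic, and the caller needs the same number to allocate imgBuffer. A minimal sketch in Python follows; `row_step` and `image_buffer_size` are hypothetical helper names, the depth codes follow the CV_8U=0 ... CV_64F=6 numbering used by the wrapper, and rows are assumed to be stored without padding.

```python
# Bytes per channel value for each OpenCV depth code (CV_8U=0 ... CV_64F=6)
DEPTH_BYTES = {0: 1, 1: 1, 2: 2, 3: 2, 4: 4, 5: 4, 6: 8}


def row_step(width, channels, depth=0):
    """Bytes per image row, assuming rows are stored without padding."""
    return width * channels * DEPTH_BYTES[depth]


def image_buffer_size(height, step):
    """Total bytes to allocate: number of rows times bytes per row."""
    return height * step


# A 640x480 BGR image with 8-bit channels
step = row_step(640, 3)
print(step, image_buffer_size(480, step))  # 1920 921600
```

In the real wrapper the step should be taken from Camera_GetImgInfo rather than recomputed, since a Mat is not guaranteed to be stored without row padding.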

The C-language functions that wrap OpenCV are listed below:

extern "C"
{
    OpenCVCamera_API int Camera_GetCameraName(char* listName);

    OpenCVCamera_API INT64 Camera_CreateHandle();
    OpenCVCamera_API void Camera_CloseHandle(INT64 handle);

    OpenCVCamera_API BOOL Camera_IsOpen(INT64 handle);
    OpenCVCamera_API int Camera_Open(INT64 handle, int index);
    OpenCVCamera_API int Camera_Close(INT64 handle);

    OpenCVCamera_API int Camera_GetImgInfo(INT64 handle, int& width, int& height, int& channel, int& step, int& depth);
    OpenCVCamera_API int Camera_GetImgData(INT64 handle, char* imgBuffer);
    // flipCode > 0: flip around the y-axis, 0: flip around the x-axis, < 0: flip around both axes
    OpenCVCamera_API int Camera_GetImgData_Flip(INT64 handle, char* imgBuffer, int flipCode);

    OpenCVCamera_API int Camera_ImgData_Compress(int rows, int cols, int type, void* imgBuffer, int param, void* destBuffer, int* destLen);
}

The functions are defined as follows:

class CameraInfo
{
public:
    VideoCapture * _pCapture;

    CameraInfo()
    {
        _pCapture = NULL;
    }

    ~CameraInfo()
    {
        if (_pCapture != NULL)
            delete _pCapture;
    }
};

int Camera_GetCameraName(char* listName)
{
    vector<wstring> listName2;
    GetCameraDevices(listName2);

    // Join the names with ';' and copy them out as UTF-16 (2 bytes per character)
    wstring all;
    for (size_t i = 0; i < listName2.size(); i++)
    {
        all += listName2[i];
        all += L";";
    }
    int n = all.length();
    memcpy(listName, all.data(), n * 2);
    return listName2.size();
}

INT64 Camera_CreateHandle()
{
    CameraInfo *pCameraInfo = new CameraInfo();
    return (INT64)pCameraInfo;
}

void Camera_CloseHandle(INT64 handle)
{
    CameraInfo *pCameraInfo = (CameraInfo*)handle;
    delete pCameraInfo;
}

BOOL Camera_IsOpen(INT64 handle)
{
    CameraInfo *pCameraInfo = (CameraInfo*)handle;
    if (pCameraInfo->_pCapture == NULL)
        return FALSE;
    BOOL open = pCameraInfo->_pCapture->isOpened();
    return open;
}

int Camera_Open(INT64 handle, int index)
{
    // Close any capture that is already open on this handle
    Camera_Close(handle);

    CameraInfo *pCameraInfo = (CameraInfo*)handle;
    pCameraInfo->_pCapture = new VideoCapture();
    bool open = pCameraInfo->_pCapture->open(index);
    if (open)
        return 0;
    return 1;
}

int Camera_Close(INT64 handle)
{
    CameraInfo *pCameraInfo = (CameraInfo*)handle;
    if (pCameraInfo->_pCapture == NULL)
        return 0;
    delete pCameraInfo->_pCapture;
    pCameraInfo->_pCapture = NULL;
    return 0;
}

// depth == enum { CV_8U=0, CV_8S=1, CV_16U=2, CV_16S=3, CV_32S=4, CV_32F=5, CV_64F=6 }
int Camera_GetImgInfo(INT64 handle, int& width, int& height, int& channel, int& step, int& depth)
{
    CameraInfo *pCameraInfo = (CameraInfo*)handle;
    Mat cameraImg;
    *(pCameraInfo->_pCapture) >> cameraImg;
    if (!cameraImg.empty())
    {
        height = cameraImg.rows;
        width = cameraImg.cols;
        channel = cameraImg.channels();
        step = cameraImg.step;
        depth = cameraImg.depth();
        return 0;
    }
    else
        return 1;
}

int Camera_GetImgData(INT64 handle, char* imgBuffer)
{
    CameraInfo *pCameraInfo = (CameraInfo*)handle;
    Mat cameraImg;
    *(pCameraInfo->_pCapture) >> cameraImg;
    if (!cameraImg.empty())
    {
        int height = cameraImg.rows;
        int dataLen = height * cameraImg.step;
        memcpy(imgBuffer, cameraImg.data, dataLen);
        return 0;
    }
    else
        return 1;
}

int Camera_GetImgData_Flip(INT64 handle, char* imgBuffer, int flipCode)
{
    CameraInfo *pCameraInfo = (CameraInfo*)handle;
    Mat cameraImg;
    *(pCameraInfo->_pCapture) >> cameraImg;
    if (!cameraImg.empty())
    {
        // flipCode == 100 means "do not flip"
        Mat dest;
        if (flipCode != 100)
            flip(cameraImg, dest, flipCode);
        else
            dest = cameraImg;

        // Use the step of the image that is actually copied
        int height = dest.rows;
        int dataLen = height * dest.step;
        memcpy(imgBuffer, dest.data, dataLen);
        return 0;
    }
    else
        return 1;
}

BOOL MatToCompressData(const Mat& mat, std::vector<unsigned char>& buff)
{
    if (mat.empty())
        return FALSE;

    // Reserve memory up front; otherwise encoding reports an error
    int n = mat.rows * mat.cols * mat.channels();
    buff.reserve(n * 2);

    // Start from an empty list: vector<int> param(2) would put two zeros in front of the pair
    std::vector<int> param;
    param.push_back(CV_IMWRITE_JPEG_QUALITY);
    param.push_back(95); // default(95) 0-100
    return cv::imencode(".jpg", mat, buff, param);
}

int Camera_ImgData_Compress(int rows, int cols, int type, void* imgBuffer, int param, void* destBuffer, int* destLen)
{
    Mat src(rows, cols, type, imgBuffer);
    std::vector<unsigned char> buff;
    MatToCompressData(src, buff);

    // The caller's buffer must be large enough for the encoded image
    if (*destLen < (int)buff.size())
        return -1;

    memcpy(destBuffer, buff.data(), buff.size());
    *destLen = buff.size();
    return 0;
}

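2 Video playback in WPF

The WPF Image control displays the images. The playback logic is: set up a timer with an interval of 40 milliseconds (25 frames per second), and on every tick fetch an image from OpenCV and show it in the control.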

<Image x:Name="imageVideoPlayer" Stretch="Uniform" ></Image>

The code that displays an image:

BitmapSource bitmapSource = _openCVCamera.GetBitmapSource();
if (bitmapSource == null)
    return false;
imageVideoPlayer.Source = bitmapSource;

The key to displaying an image is building the BitmapSource, that is, constructing a BitmapSource from the image data obtained from OpenCV.

// Fetch the image data
if (!GetImgData(out byte[] imgData))
    return null;

// Build the WriteableBitmap
WriteableBitmap img = new WriteableBitmap(_imgWidth, _imgHeight, 96, 96, PixelFormats.Bgr24, null);
img.WritePixels(new Int32Rect(0, 0, _imgWidth, _imgHeight), imgData, img.BackBufferStride, 0);
img.Freeze();
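The stride passed when writing pixels has to match the row layout of the source buffer. If the display bitmap pads each row to a 4-byte boundary while the OpenCV buffer does not (an assumption worth checking for widths where width * 3 is not a multiple of 4), the rows need repacking first. The sketch below illustrates the idea in Python; `aligned_stride` and `repack_rows` are hypothetical helpers.

```python
def aligned_stride(width, bytes_per_pixel, alignment=4):
    """Bytes per row after rounding up to the given alignment."""
    row_bytes = width * bytes_per_pixel
    return (row_bytes + alignment - 1) // alignment * alignment


def repack_rows(data, height, row_bytes, src_stride, dst_stride):
    """Copy pixel rows from a buffer with one stride into another stride."""
    out = bytearray(height * dst_stride)
    for y in range(height):
        src = y * src_stride
        dst = y * dst_stride
        # Only the real pixel bytes are copied; padding stays zero
        out[dst:dst + row_bytes] = data[src:src + row_bytes]
    return bytes(out)


# A 2x2 BGR image: 6 pixel bytes per row, padded to 8 bytes per row
pixels = bytes(range(12))
stride = aligned_stride(2, 3)
print(stride, list(repack_rows(pixels, 2, 6, 6, stride)))
```

When the two strides are equal, as with a 640-pixel-wide Bgr24 image, no repacking is needed and the buffer can be written as it is.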

With that, the camera image can be displayed.
