Android NDK in Practice: Using TextureView to Drive an External USB Camera with Autofocus and Covert Photo Capture

Posted by CrazyMo_

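Introduction

The previous article, "Advanced Android: Inter-Thread Communication: Autofocus and Automatic Covert Photo Capture with the System's Standard Camera", implemented autofocus and covert capture using the system's standard camera. A standard camera is one that is managed entirely by the Android system, so the app only has to call the APIs that are already wrapped for it. This article uses an external USB camera instead, which means going through a USB driver ourselves to reach the device and obtain the camera object. Along the way it also summarizes how to use TextureView. Related articles in this series:

- Android NDK in Practice: The Right Way to Reference .so Libraries, JARs, Modules and AARs in Android Studio, Import Eclipse Projects, and Use JNI (Part 1)

- Android NDK in Practice: Configuring the NDK, Developing Hello JNI in Android Studio, and Packaging a Simple .so Library (Part 2)

- Android NDK in Practice: Real-Time Control of LED Color and Light Animation Effects on a Microcontroller from an App over Serial Communication (Part 3)

- Android NDK in Practice: Using TextureView to Drive an External USB Camera with Autofocus and Covert Photo Capture (Part 4)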

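I. TextureView Overview

TextureView is a widget introduced in Android 4.0 that inherits directly from View. Before it, video or OpenGL content was displayed with SurfaceView or GLSurfaceView. SurfaceView works by creating a new window placed behind the application window, and that window can be updated from a separate thread. Refreshing the SurfaceView window therefore does not require redrawing the application window. (Ordinary Android views are drawn layer by layer through the view hierarchy, and refreshing a child or a small region can trigger a redraw pass over that hierarchy. This is inefficient for continuously changing content, although it is more than adequate for ordinary application UI.)

SurfaceView also has some awkward limitations. It cannot be transformed (translated, scaled, rotated), it is hard to place inside a ListView or ScrollView, and it does not support some View features such as View.setAlpha(). TextureView was introduced to address this. Unlike SurfaceView, TextureView does not create a separate Surface to draw into. It wraps a SurfaceTexture internally to present content, so it behaves like an ordinary View: setTransform(Matrix transform) and setRotation(float r) apply transforms, and setAlpha(float alpha) sets the transparency. The one requirement is that a TextureView must live in a hardware-accelerated window.

Using TextureView is simple. The only thing to do is obtain the SurfaceTexture that will render the content: create the TextureView (from XML or dynamically in Java code), implement the SurfaceTextureListener interface, and register it with mTextureView.setSurfaceTextureListener(this).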

public class LiveCameraActivity extends Activity implements TextureView.SurfaceTextureListener {
    private Camera mCamera;
    private TextureView mTextureView;

    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        mTextureView = new TextureView(this); // create the TextureView
        mTextureView.setSurfaceTextureListener(this); // register the listener
        setContentView(mTextureView);
    }

    public void onSurfaceTextureAvailable(SurfaceTexture surface, int width, int height) {
        mCamera = Camera.open(); // open the camera
        try {
            mCamera.setPreviewTexture(surface); // use the SurfaceTexture as the camera's preview target
            mCamera.startPreview(); // start the preview
            mTextureView.setAlpha(0.6f); // set the transparency
            mTextureView.setRotation(90.0f); // rotate by 90 degrees
        } catch (IOException ioe) {
            // Something bad happened
        }
    }

    public void onSurfaceTextureSizeChanged(SurfaceTexture surface, int width, int height) {
        // Ignored, Camera does all the work for us
    }

    public boolean onSurfaceTextureDestroyed(SurfaceTexture surface) {
        mCamera.stopPreview(); // stop the preview
        mCamera.release(); // release the camera resource
        return true;
    }

    public void onSurfaceTextureUpdated(SurfaceTexture surface) {
        // Invoked every time there's a new Camera preview frame
    }
}
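Since TextureView accepts a transform, one common use is keeping the camera frame's aspect ratio when the view has a different shape. The following is a minimal sketch of the arithmetic only, assuming the content is stretched to fill the view before any transform is applied. `AspectFit` and `fitScale` are hypothetical names, not part of Android or of this article's code.

```java
/** Hypothetical helper: computes scale factors that letterbox a frame inside a view. */
final class AspectFit {
    private AspectFit() {
    }

    /**
     * Returns {sx, sy}. Applied around the view center, these factors undo a
     * stretch-to-fill so the frame keeps its own aspect ratio.
     */
    static float[] fitScale(int viewW, int viewH, int frameW, int frameH) {
        if (viewW <= 0 || viewH <= 0 || frameW <= 0 || frameH <= 0) {
            throw new IllegalArgumentException("all dimensions must be positive");
        }
        float viewAspect = (float) viewW / viewH;
        float frameAspect = (float) frameW / frameH;
        if (viewAspect > frameAspect) {
            // The view is relatively wider than the frame: shrink horizontally.
            return new float[] {frameAspect / viewAspect, 1f};
        }
        // The view is relatively taller (or equal): shrink vertically.
        return new float[] {1f, viewAspect / frameAspect};
    }

    public static void main(String[] args) {
        float[] s = fitScale(1280, 720, 640, 480);
        System.out.println("sx=" + s[0] + " sy=" + s[1]);
    }
}
```

On Android, the two factors could then be fed to `Matrix.setScale(sx, sy, viewW / 2f, viewH / 2f)` and the matrix passed to `setTransform`.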

II. Driving an External USB Camera

1. First, add the third-party open-source library UVCCamera to drive the camera

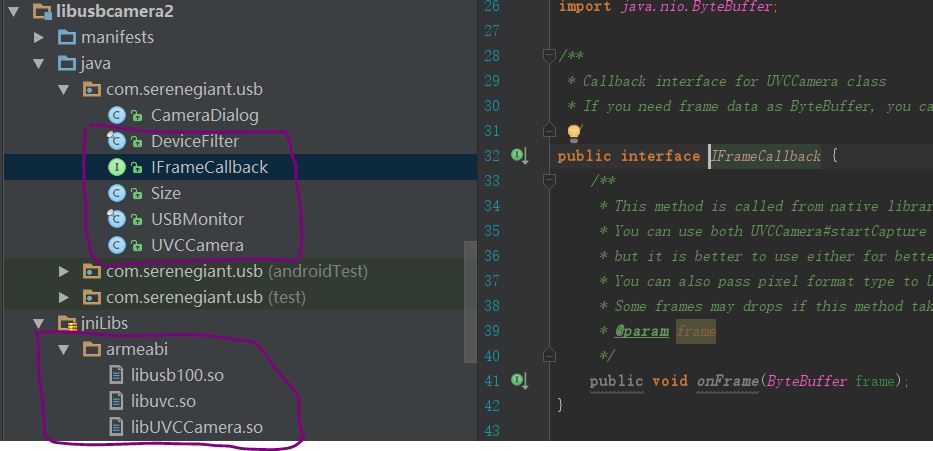

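Adding the library is simple: copy its main classes into your own module and pay attention to the package names. I prefer to keep third-party libraries in a separate lib module, with the structure shown in the screenshot.

The most important piece is IFrameCallback, the callback interface that returns the camera data. Once the callback is set, its method keeps being invoked for every new frame, which is also an important part of how the camera preview is refreshed in real time.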

import java.nio.ByteBuffer;

/**
 * Callback interface for UVCCamera class
 * If you need frame data as ByteBuffer, you can use this callback interface with UVCCamera#setFrameCallback
 */
public interface IFrameCallback {
    /**
     * This method is called from native library via JNI on the same thread as UVCCamera#startCapture.
     * You can use both UVCCamera#startCapture and #setFrameCallback
     * but it is better to use either for better performance.
     * You can also pass pixel format type to UVCCamera#setFrameCallback for this method.
     * Some frames may be dropped if this method takes too long.
     * @param frame
     */
    public void onFrame(ByteBuffer frame);
}
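Because frames can be dropped when the callback is slow, one option is to copy the bytes out quickly and let another thread do the heavy work. The sketch below illustrates that idea in plain Java. `FrameCallback` is a hypothetical stand-in for IFrameCallback so the example compiles without the library, and `FrameHandoff` is an illustrative name, not something the library provides. It also assumes the native side may reuse the buffer after the callback returns, which is why the bytes are copied.

```java
import java.nio.ByteBuffer;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

/** Hypothetical helper: keeps only the most recent frame for a slower consumer. */
final class FrameHandoff {
    /** Stand-in for the library's IFrameCallback (assumption, same method shape). */
    interface FrameCallback {
        void onFrame(ByteBuffer frame);
    }

    // Capacity 1: the consumer only ever sees the newest frame.
    private final BlockingQueue<byte[]> latest = new ArrayBlockingQueue<>(1);

    /** Cheap callback: copy the bytes, replace any stale frame, return at once. */
    final FrameCallback callback = frame -> {
        byte[] copy = new byte[frame.remaining()];
        frame.duplicate().get(copy); // duplicate() leaves the caller's position untouched
        latest.poll();               // discard a frame nobody has consumed yet
        latest.offer(copy);
    };

    /** Returns the newest frame, or null if none has arrived since the last call. */
    byte[] pollLatest() {
        return latest.poll();
    }

    public static void main(String[] args) {
        FrameHandoff handoff = new FrameHandoff();
        handoff.callback.onFrame(ByteBuffer.wrap(new byte[] {1, 2, 3}));
        handoff.callback.onFrame(ByteBuffer.wrap(new byte[] {4, 5}));
        System.out.println("bytes in newest frame: " + handoff.pollLatest().length);
    }
}
```

The design trades completeness for latency: intermediate frames are thrown away on purpose, which suits a single-shot capture better than video recording.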

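UVCCamera is the class that wraps the camera object. It is also the Java-side class that declares the native JNI methods.

2. Add the JNI files that drive the USB camera

For the detailed steps, see "Android NDK: The Right Way to Reference .so Libraries, JARs and Modules in Android Studio and Use JNI".

3. Next, the layout file and the R.xml.device_filter file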

<RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:background="@android:color/black"

android:backgroundTintMode="multiply"

android:padding="5dp">

<custom.crazymo.com.usbcamera.UVCCameraTextureView

android:id="@+id/texture_camera_view"

android:layout_width="2dp"

android:layout_height="2dp"

android:layout_centerInParent="true" />

</RelativeLayout>

<?xml version="1.0" encoding="utf-8"?>

<usb>

<usb-device class="239" subclass="2" /> <!-- all device of UVC -->

</usb>
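In the filter above, class 239 is 0xEF (the USB "Miscellaneous" device class) and subclass 2 is the common-class value that UVC cameras typically report at the device level. As a rough illustration of what such a filter does, here is a minimal sketch of a class/subclass match. `UsbClassFilter` is a hypothetical class written for this example and is not how the library's DeviceFilter is implemented.

```java
/** Hypothetical illustration of matching a device against a class/subclass filter. */
final class UsbClassFilter {
    /** Wildcard value meaning "do not check this field" (assumption of this sketch). */
    static final int ANY = -1;

    private final int deviceClass;
    private final int deviceSubclass;

    UsbClassFilter(int deviceClass, int deviceSubclass) {
        this.deviceClass = deviceClass;
        this.deviceSubclass = deviceSubclass;
    }

    /** True when both fields match, treating ANY as a wildcard. */
    boolean matches(int cls, int subclass) {
        boolean classOk = deviceClass == ANY || deviceClass == cls;
        boolean subclassOk = deviceSubclass == ANY || deviceSubclass == subclass;
        return classOk && subclassOk;
    }

    public static void main(String[] args) {
        UsbClassFilter uvc = new UsbClassFilter(239, 2);
        System.out.println("camera matches: " + uvc.matches(0xEF, 0x02));
        System.out.println("mass storage matches: " + uvc.matches(0x08, 0x06));
    }
}
```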

4. Add the dependency on the lib module in the module's Gradle file

dependencies {
    compile fileTree(dir: 'libs', include: ['*.jar'])
    androidTestCompile('com.android.support.test.espresso:espresso-core:2.2.2', {
        exclude group: 'com.android.support', module: 'support-annotations'
    })
    compile 'com.android.support:appcompat-v7:25.2.0'
    compile project(':libusbcamera2') // pull in the libusbcamera2 module
    testCompile 'junit:junit:4.12'
}

5. Open the preview automatically and take the photo
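The activity in this step builds its ThreadPoolExecutor with a core size of 1, a maximum of 4, and an unbounded LinkedBlockingQueue. One detail worth knowing: with an unbounded queue, extra tasks wait in the queue instead of causing new threads to be created, so the maximum pool size has no effect. The following plain-Java sketch, with the hypothetical name `PoolGrowthDemo`, submits several blocking tasks to an executor configured the same way and reports the largest pool size reached.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/** Hypothetical demo: shows that an unbounded queue keeps the pool at its core size. */
final class PoolGrowthDemo {
    private PoolGrowthDemo() {
    }

    /** Submits blocking tasks and returns the largest number of threads the pool used. */
    static int peakThreads(int tasks) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 4, 10, TimeUnit.SECONDS, new LinkedBlockingQueue<Runnable>());
        CountDownLatch release = new CountDownLatch(1);
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                try {
                    release.await(); // keep the worker busy until released
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        int largest = pool.getLargestPoolSize();
        release.countDown(); // let the queued tasks drain
        pool.shutdown();
        return largest;
    }

    public static void main(String[] args) {
        System.out.println("largest pool size: " + peakThreads(8));
    }
}
```

For this activity a single worker is enough, since only one camera is opened at a time. If real parallelism were wanted, a bounded queue or a larger core size would be needed.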

package custom.crazymo.com.usbcamera;

import android.app.Activity;

import android.content.Context;

import android.graphics.Bitmap;

import android.graphics.BitmapFactory;

import android.graphics.SurfaceTexture;

import android.hardware.usb.UsbDevice;

import android.os.Bundle;

import android.os.Looper;

import android.util.Log;

import android.view.Surface;

import android.view.TextureView;

import android.widget.Toast;

import com.serenegiant.usb.DeviceFilter;

import com.serenegiant.usb.IFrameCallback;

import com.serenegiant.usb.USBMonitor;

import com.serenegiant.usb.UVCCamera;

import java.io.ByteArrayInputStream;

import java.io.ByteArrayOutputStream;

import java.io.File;

import java.io.FileOutputStream;

import java.io.IOException;

import java.nio.ByteBuffer;

import java.text.SimpleDateFormat;

import java.util.Date;

import java.util.List;

import java.util.concurrent.LinkedBlockingQueue;

import java.util.concurrent.ThreadPoolExecutor;

import java.util.concurrent.TimeUnit;

/**
 * author: MO
 * Date: 2017/3/8
 * Time: 16:56
 * Des:
 */
public class DetectActivity extends Activity implements TextureView.SurfaceTextureListener {
    // for debugging
    private static String TAG = "SingleCameraPreview";
    private static boolean DEBUG = true;

    // for thread pool
    private static final int CORE_POOL_SIZE = 1; // initial/minimum threads
    private static final int MAX_POOL_SIZE = 4; // maximum threads
    private static final int KEEP_ALIVE_TIME = 10; // how long (in seconds) an idle thread is kept alive
    protected static final ThreadPoolExecutor EXECUTER = new ThreadPoolExecutor(CORE_POOL_SIZE,
            MAX_POOL_SIZE,
            KEEP_ALIVE_TIME,
            TimeUnit.SECONDS,
            new LinkedBlockingQueue<Runnable>());

    // for accessing USB and USB camera
    private USBMonitor mUSBMonitor;
    private UVCCamera mCamera = null;
    private UVCCameraTextureView mUVCCameraView;
    private Surface mPreviewSurface;
    private Bitmap bitmap;
    private Context ctx;
    private boolean isNeedCallBack = true;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_detect);
        init();
    }

    private void init() {
        getView();
        initCamera();
    }

    private void getView() {
        mUVCCameraView = (UVCCameraTextureView) findViewById(R.id.texture_camera_view);
    }

    // Initialize the objects needed by UVCCamera
    private void initCamera() {
        ctx = getBaseContext();
        mUVCCameraView.setSurfaceTextureListener(this);
        mUVCCameraView.setAspectRatio(
                UVCCamera.DEFAULT_PREVIEW_WIDTH * 1.0f / UVCCamera.DEFAULT_PREVIEW_HEIGHT);
        mUSBMonitor = new USBMonitor(this, mOnDeviceConnectListener);
    }

    @Override
    protected void onResume() {
        super.onResume();
        mUVCCameraView.setSurfaceTextureListener(this);
        mUSBMonitor.register();
        final List<DeviceFilter> filter = DeviceFilter.getDeviceFilters(ctx, com.serenegiant.usb.R.xml.device_filter);
        // Only request permission when a matching camera is actually plugged in;
        // calling get(0) on an empty list would throw IndexOutOfBoundsException
        final List<UsbDevice> devices = mUSBMonitor.getDeviceList(filter.get(0));
        if (!devices.isEmpty()) {
            mUSBMonitor.requestPermission(devices.get(0));
        }
        if (mCamera != null) {
            mCamera.startPreview(); // start the preview
        }
    }

    @Override
    protected void onPause() {
        mUSBMonitor.unregister();
        if (mCamera != null) {
            mCamera.stopPreview();
        }
        super.onPause();
    }

    @Override
    protected void onDestroy() {
        if (mUSBMonitor != null) {
            mUSBMonitor.destroy();
        }
        if (mCamera != null) {
            mCamera.destroy();
        }
        super.onDestroy();
    }

    private USBMonitor.OnDeviceConnectListener mOnDeviceConnectListener = new USBMonitor.OnDeviceConnectListener() {
        @Override
        public void onAttach(UsbDevice device) {
            Toast.makeText(DetectActivity.this, "USB_DEVICE_ATTACHED", Toast.LENGTH_SHORT)
                    .show(); // triggered after permission has been obtained
        }

        @Override
        public void onDettach(UsbDevice device) {
            Toast.makeText(DetectActivity.this, "USB_DEVICE_DETACHED", Toast.LENGTH_SHORT)
                    .show();
        }

        @Override
        public void onConnect(UsbDevice device, final USBMonitor.UsbControlBlock ctrlBlock, boolean createNew) {
            // After the USB event broadcast is received, USBMonitor's processConnect uses a Handler
            // to try to connect to the USB camera; once connected, the monitor triggers onConnect
            if (mCamera != null) {
                return;
            }
            final UVCCamera camera = new UVCCamera();
            EXECUTER.execute(new Runnable() {
                @Override
                public void run() {
                    // Open Camera
                    if (Looper.myLooper() == null) {
                        Looper.prepare(); // important: without it the internal Handler throws
                    }
                    camera.open(ctrlBlock);
                    // Set Preview Mode
                    try {
                        camera.setPreviewSize(UVCCamera.DEFAULT_PREVIEW_WIDTH,
                                UVCCamera.DEFAULT_PREVIEW_HEIGHT,
                                UVCCamera.FRAME_FORMAT_MJPEG, 0.5f);
                    } catch (IllegalArgumentException e1) {
                        e1.printStackTrace();
                        // MJPEG was rejected, fall back to the default preview mode
                        try {
                            camera.setPreviewSize(UVCCamera.DEFAULT_PREVIEW_WIDTH,
                                    UVCCamera.DEFAULT_PREVIEW_HEIGHT,
                                    UVCCamera.DEFAULT_PREVIEW_MODE, 0.5f);
                        } catch (IllegalArgumentException e2) {
                            e2.printStackTrace();
                            // mCamera is still null at this point, so releaseUVCCamera() would
                            // throw a NullPointerException; destroy the local instance and stop
                            camera.destroy();
                            return;
                        }
                    }
                    // Start Preview
                    if (mCamera == null) {
                        mCamera = camera;
                        if (mPreviewSurface != null) {
                            mPreviewSurface.release();
                            mPreviewSurface = null;
                        }
                        final SurfaceTexture surfaceTexture = mUVCCameraView.getSurfaceTexture();
                        if (surfaceTexture != null) {
                            mPreviewSurface = new Surface(surfaceTexture);
                            Toast.makeText(DetectActivity.this, " Open Camera -> SurfaceTexture surfaceTexture = mUVCCameraView.getSurfaceTexture();" + camera, Toast.LENGTH_SHORT).show();
                            camera.setPreviewDisplay(mPreviewSurface);
                        }
                        camera.setFrameCallback(mIFrameCallback, UVCCamera.PIXEL_FORMAT_RGB565);
                        camera.startPreview();
                    }
                }
            });
        }

        @Override
        public void onDisconnect(UsbDevice device, USBMonitor.UsbControlBlock ctrlBlock) {
            if (DEBUG) {
                Log.v(TAG, "onDisconnect" + device);
            }
            if (mCamera != null && device.equals(mCamera.getDevice())) {
                releaseUVCCamera();
            }
        }

        @Override
        public void onCancel() {
        }
    };

    private void releaseUVCCamera() {
        if (DEBUG) {
            Log.v(TAG, "releaseUVCCamera");
        }
        mCamera.close();
        if (mPreviewSurface != null) {
            mPreviewSurface.release();
            mPreviewSurface = null;
        }
        mCamera.destroy();
        mCamera = null;
    }

    public byte[] bitmabToBytes(Bitmap bitmap) {
        // Convert an image into a bitmap
        // Bitmap bitmap = BitmapFactory.decodeResource(getResources(), R.mipmap.ic_launcher);
        int size = bitmap.getWidth() * bitmap.getHeight() * 4;
        // Create a byte-array output stream with an initial capacity of size bytes
        ByteArrayOutputStream baos = new ByteArrayOutputStream(size);
        try {
            // Compress the bitmap as JPEG at 100% quality and write it into the stream
            bitmap.compress(Bitmap.CompressFormat.JPEG, 100, baos);
            // Turn the stream contents into a byte[]
            byte[] imagedata = baos.toByteArray();
            return imagedata;
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            try {
                bitmap.recycle();
                baos.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
        return new byte[0];
    }

    /**
     * Save the captured photo to the SD card
     * @param data
     * @throws IOException
     */
    public static String saveToSDCard(byte[] data) throws IOException {
        Date date = new Date();
        SimpleDateFormat format = new SimpleDateFormat("yyyyMMddHHmmss"); // format the timestamp
        String filename = format.format(date) + ".jpg";
        // File fileFolder = new File(getTrueSDCardPath()
        // + "/rebot/cache/");
        File fileFolder = new File("/mnt/internal_sd" + "/rebot/cache/");

if (