oracle logminer分析归档日志

Posted lltfjsy

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了oracle logminer分析归档日志相关的知识,希望对你有一定的参考价值。

适用场景:

数据库在某个时间段产生了大量的归档日志,用户想要分析在这段时间数据具体做了什么操作

一、查询目标时间点的归档日志是否存在

set pagesize 900;

set linesize 900;

col name for a55;

ALTER SESSION SET NLS_DATE_FORMAT="YYYY-MM-DD HH24:MI:SS";

select name, first_time, next_time,SEQUENCE# from v$archived_log where first_time > to_date('2021-11-15 16:00:00', 'yyyy-mm-dd hh24:mi:ss') and first_time < to_date('2021-11-15 16:30:00', 'yyyy-mm-dd hh24:mi:ss');

二、添加归档日志文件

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253871_1013686939.dbf',dbms_logmnr.new);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253872_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253873_1013686939.dbf',dbms_logmnr.addfile);

三、启动logminer进行分析

execute dbms_logmnr.start_logmnr;

也可以指定其他条件,比如时间:

execute dbms_logmnr.start_logmnr(StartTime =>to_date('2021-11-15 16:00:00','YYYY-MM-DD HH24:MI:SS') EndTime =>to_date('2021-11-15 16:30:00','YYYY-MM-DD HH24:MI:SS '));

四、输出结果

可以将结果输出到一个文件中

spool test.txt ; --结果输出到当前目录的test.txt文件中

select sql_redo,info from v$logmnr_contents where UPPER(sql_redo) like '%UPDATE%' or UPPER(sql_redo) like '%INSERT%' or UPPER(sql_redo) like '%DELETE%'; --获取DML语句

spool off;

也可以通过建表的方式将结果保存在一个表格中。(如果日志量大,不要建这个临时表)

create table temp_result1 as select * from v$logmnr_contents;

五、结束logminer

execute dbms_logmnr.end_logmnr;

六、分析结果

分析test.txt 或temp_result1表中数据

如果输出的结果中,对象名未知,对象id已知的话,可以根据以下语句查询对应的对象名

select owner,object_name from dba_objects where object_id='xxxxx';

七、示例

例如:

想要查询2021年11月15日16:00到 2011年11月15日16:30,数据库做了哪些dml操作。

1.检查这个目标时间点的归档日志是否存在

set pagesize 900;

set linesize 900;

col name for a55;

ALTER SESSION SET NLS_DATE_FORMAT="YYYY-MM-DD HH24:MI:SS";

select name, first_time, next_time,SEQUENCE# from v$archived_log where first_time > to_date('2021-11-15 16:00:00', 'yyyy-mm-dd hh24:mi:ss') and first_time < to_date('2021-11-15 16:30:00', 'yyyy-mm-dd hh24:mi:ss');

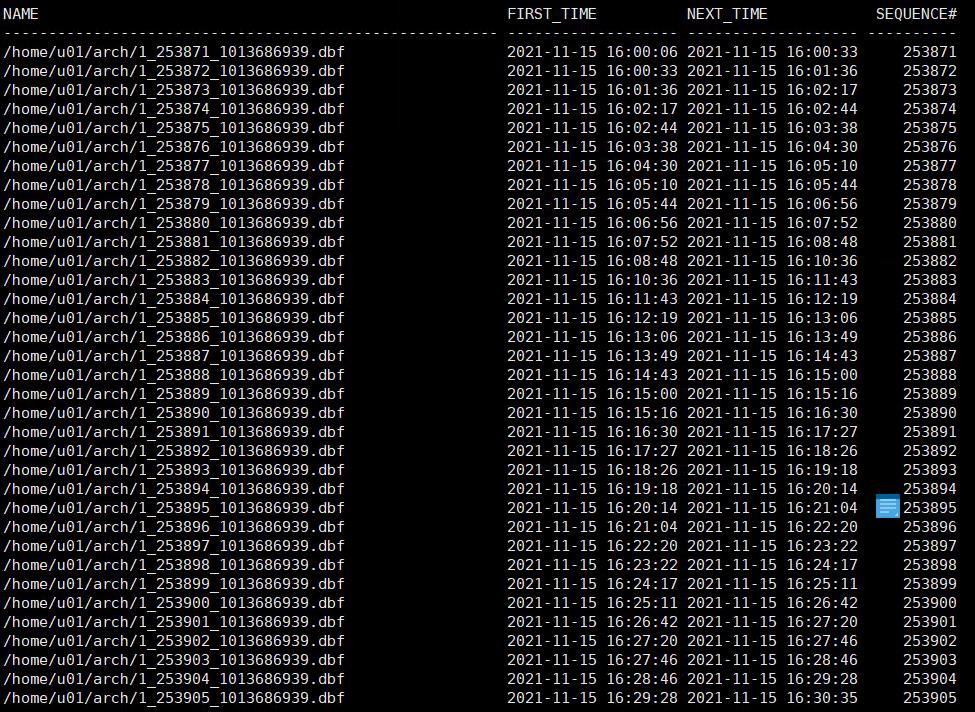

查询结果如下:

2.日志挖掘

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253871_1013686939.dbf',dbms_logmnr.new);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253872_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253873_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253874_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253875_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253876_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253877_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253878_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253879_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253880_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253881_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253882_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253883_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253884_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253885_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253886_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253887_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253888_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253889_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253890_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253891_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253892_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253893_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253894_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253895_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253896_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253897_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253898_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253899_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253900_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253901_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253902_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253903_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253904_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253905_1013686939.dbf',dbms_logmnr.addfile);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE('/home/u01/arch/1_253906_1013686939.dbf',dbms_logmnr.addfile);

3.启用logminer

PROMPT start LogMiner;

execute dbms_logmnr.start_logmnr;

4.结果输出

spool test.txt ; --结果输出到当前目录的test.txt文件中

select sql_redo,info from v$logmnr_contents where UPPER(sql_redo) like '%UPDATE%' or UPPER(sql_redo) like '%INSERT%' or UPPER(sql_redo) like '%DELETE%'; --获取DML语句

spool off;

5.结束logminer

EXECUTE DBMS_LOGMNR.END_LOGMNR();

6.分析结果

分析test.txt具体在做哪些操作。

LogMiner的使用

LogMiner是用于Oracle日志挖掘的利器。

百科解释:

LogMiner 是Oracle公司从产品8i以后提供的一个实际非常有用的分析工具,使用该工具可以轻松获得Oracle 重做日志文件(归档日志文件)中的具体内容,LogMiner分析工具实际上是由一组PL/SQL包和一些动态视图组成,它作为Oracle数据库的一部分来发布,是oracle公司提供的一个完全免费的工具。

本文主要演示LogMiner的使用,直观展示LogMiner的作用。

环境:Oracle 11.2.0.4 RAC

1.查询当前日志组

2.业务用户插入操作

3.归档日志切换

4.业务用户插入操作

5.归档日志切换

6.业务用户更新操作

7.归档日志切换

8.确认需要分析的日志

9.备份归档日志

10.使用LogMiner分析

1.查询当前日志组

使用sys用户查询Oracle数据库的当前日志组:

--1.current log SQL> select * from v$log; GROUP# THREAD# SEQUENCE# BYTES BLOCKSIZE MEMBERS ARC STATUS FIRST_CHANGE# FIRST_TIME NEXT_CHANGE# NEXT_TIME ---------- ---------- ---------- ---------- ---------- ---------- --- ---------------- ------------- ------------ ------------ ------------ 1 1 29 52428800 512 2 YES INACTIVE 1547838 25-JUN-17 1547840 25-JUN-17 2 1 30 52428800 512 2 NO CURRENT 1567897 27-JUN-17 2.8147E+14 27-JUN-17 3 2 25 52428800 512 2 NO CURRENT 1567902 27-JUN-17 2.8147E+14 4 2 24 52428800 512 2 YES INACTIVE 1567900 27-JUN-17 1567902 27-JUN-17

这里当前日志(current)是:

thread 1 sequence 30

thread 2 sequence 25

2.业务用户插入操作

模拟业务用户jingyu插入T2表数据:

--2.业务用户插入操作 sqlplus jingyu/[email protected] SQL> select count(1) from t2; COUNT(1) ---------- 0 SQL> insert into t2 select rownum, rownum, rownum, dbms_random.string(‘b‘,50) from dual connect by level <= 100000 order by dbms_random.random; commit; 100000 rows created. SQL> Commit complete. SQL> select count(1) from t2; COUNT(1) ---------- 100000

3.归档日志切换

为了区分每个日志的不同操作,这里对数据库进行手工归档切换,模拟现实中实际的归档切换。

--3.模拟归档日志切换 SQL> alter system archive log current; System altered. SQL> select * from v$log; GROUP# THREAD# SEQUENCE# BYTES BLOCKSIZE MEMBERS ARC STATUS FIRST_CHANGE# FIRST_TIME NEXT_CHANGE# NEXT_TIME ---------- ---------- ---------- ---------- ---------- ---------- --- ---------------- ------------- ------------ ------------ ------------ 1 1 31 52428800 512 2 NO CURRENT 1572517 27-JUN-17 2.8147E+14 2 1 30 52428800 512 2 YES ACTIVE 1567897 27-JUN-17 1572517 27-JUN-17 3 2 25 52428800 512 2 YES ACTIVE 1567902 27-JUN-17 1572521 27-JUN-17 4 2 26 52428800 512 2 NO CURRENT 1572521 27-JUN-17 2.8147E+14

4.业务用户插入操作

模拟业务用户jingyu删除T2表部分数据:

--4.业务用户删除操作 SQL> delete from t2 where id < 10000; 9999 rows deleted. SQL> commit; Commit complete. SQL> select count(1) from t2; COUNT(1) ---------- 90001

5.归档日志切换

为了区分每个日志的不同操作,这里对数据库进行手工归档切换,模拟现实中实际的归档切换。

--5.模拟归档日志切换 SQL> alter system archive log current; System altered. SQL> select * from v$log; GROUP# THREAD# SEQUENCE# BYTES BLOCKSIZE MEMBERS ARC STATUS FIRST_CHANGE# FIRST_TIME NEXT_CHANGE# NEXT_TIME ---------- ---------- ---------- ---------- ---------- ---------- --- ---------------- ------------- ------------ ------------ ------------ 1 1 31 52428800 512 2 YES ACTIVE 1572517 27-JUN-17 1574293 27-JUN-17 2 1 32 52428800 512 2 NO CURRENT 1574293 27-JUN-17 2.8147E+14 3 2 27 52428800 512 2 NO CURRENT 1574296 27-JUN-17 2.8147E+14 4 2 26 52428800 512 2 YES ACTIVE 1572521 27-JUN-17 1574296 27-JUN-17

6.业务用户更新操作

模拟业务用户jingyu更新T2表部分数据:

--6.业务用户更新操作 SQL> update T2 SET contents = ‘xxx‘ where id > 99998; 2 rows updated. SQL> commit; Commit complete.

7.归档日志切换

为了区分每个日志的不同操作,这里对数据库进行手工归档切换,模拟现实中实际的归档切换。

--7.模拟归档日志切换 SQL> alter system archive log current; System altered. SQL> select * from v$log; GROUP# THREAD# SEQUENCE# BYTES BLOCKSIZE MEMBERS ARC STATUS FIRST_CHANGE# FIRST_TIME NEXT_CHANGE# NEXT_TIME ---------- ---------- ---------- ---------- ---------- ---------- --- ---------------- ------------- ------------ ------------ ------------ 1 1 33 52428800 512 2 NO CURRENT 1575480 27-JUN-17 2.8147E+14 2 1 32 52428800 512 2 YES ACTIVE 1574293 27-JUN-17 1575480 27-JUN-17 3 2 27 52428800 512 2 YES ACTIVE 1574296 27-JUN-17 1575458 27-JUN-17 4 2 28 52428800 512 2 NO CURRENT 1575458 27-JUN-17 2.8147E+14

8.确认需要分析的日志

确认之后需要使用LogMiner分析的日志:

--8.确认需要分析的日志 thread# 1 sequence# 30 thread# 2 sequence# 25 这部分日志肯定是有记录插入操作 thread# 1 sequence# 31 thread# 2 sequence# 26 这部分日志肯定是有记录删除操作 thread# 1 sequence# 32 thread# 2 sequence# 27 这部分日志肯定是有记录更新操作

9.备份归档日志

将相关的归档都copy备份出来:

--9. 将相关的归档都copy备份出来

RUN {

allocate channel dev1 device type disk format ‘/tmp/backup/arc_%h_%e_%t‘;

backup as copy archivelog sequence 30 thread 1;

backup as copy archivelog sequence 31 thread 1;

backup as copy archivelog sequence 32 thread 1;

backup as copy archivelog sequence 25 thread 2;

backup as copy archivelog sequence 26 thread 2;

backup as copy archivelog sequence 27 thread 2;

release channel dev1;

}备份出来的归档日志文件如下:

[[email protected] backup]$ ls -lrth total 17M -rw-r----- 1 oracle asmadmin 2.3M Jun 27 21:50 arc_1_30_947800247 -rw-r----- 1 oracle asmadmin 591K Jun 27 21:50 arc_1_31_947800249 -rw-r----- 1 oracle asmadmin 143K Jun 27 21:50 arc_1_32_947800250 -rw-r----- 1 oracle asmadmin 9.5M Jun 27 21:50 arc_2_25_947800251 -rw-r----- 1 oracle asmadmin 3.6M Jun 27 21:50 arc_2_26_947800253 -rw-r----- 1 oracle asmadmin 77K Jun 27 21:50 arc_2_27_947800254

10.使用LogMiner分析

使用LogMiner分析归档日志:

--使用LogMiner分析归档日志 --应该有插入操作的日志 begin dbms_logmnr.add_logfile(‘/tmp/backup/arc_1_30_947800247‘); dbms_logmnr.add_logfile(‘/tmp/backup/arc_2_25_947800251‘); dbms_logmnr.start_logmnr(Options=>dbms_logmnr.dict_from_online_catalog); end; / --应该有删除操作的日志 begin dbms_logmnr.add_logfile(‘/tmp/backup/arc_1_31_947800249‘); dbms_logmnr.add_logfile(‘/tmp/backup/arc_2_26_947800253‘); dbms_logmnr.start_logmnr(Options=>dbms_logmnr.dict_from_online_catalog); end; / --应该有更新操作的日志 begin dbms_logmnr.add_logfile(‘/tmp/backup/arc_1_32_947800250‘); dbms_logmnr.add_logfile(‘/tmp/backup/arc_2_27_947800254‘); dbms_logmnr.start_logmnr(Options=>dbms_logmnr.dict_from_online_catalog); end; /

查询v$logmnr_contents

set lines 180 pages 500 col username format a8 col sql_redo format a50 select username,scn,timestamp,sql_redo from v$logmnr_contents where table_name=‘T2‘; select username,scn,timestamp,sql_redo from v$logmnr_contents where username=‘JINGYU‘; select username,scn,timestamp,sql_redo from v$logmnr_contents where sql_redo like ‘%JINGYU%‘; select username,scn,timestamp,sql_redo from v$logmnr_contents where sql_redo like ‘insert%JINGYU%‘; select username,scn,timestamp,sql_redo from v$logmnr_contents where sql_redo like ‘delete%JINGYU%‘; select username,scn,timestamp,sql_redo from v$logmnr_contents where sql_redo like ‘update%JINGYU%‘;

实验发现,以username为条件无法查询到相关记录,最终确认username都是unknown而不是真正执行语句的业务用户jingyu。

而挖掘出的日志sql_redo这个字段是完整的SQL,可以采用like的方式查询,比如我分析更新操作的日志,就可以得到下面这样的结果:

SQL> --应该有更新操作的日志 SQL> begin 2 dbms_logmnr.add_logfile(‘/tmp/backup/arc_1_32_947800250‘); 3 dbms_logmnr.add_logfile(‘/tmp/backup/arc_2_27_947800254‘); 4 dbms_logmnr.start_logmnr(Options=>dbms_logmnr.dict_from_online_catalog); 5 end; 6 / PL/SQL procedure successfully completed. SQL> select count(1) from v$logmnr_contents; COUNT(1) ---------- 388 SQL> select username,scn,timestamp,sql_redo from v$logmnr_contents where username=‘JINGYU‘; no rows selected SQL> select username,scn,timestamp,sql_redo from v$logmnr_contents where sql_redo like ‘%JINGYU%‘; USERNAME SCN TIMESTAMP ------------------------------ ---------- ------------ SQL_REDO -------------------------------------------------------------------------------- UNKNOWN 1575420 27-JUN-17 update "JINGYU"."T2" set "CONTENTS" = ‘xxx‘ where "CONTENTS" = ‘YSWGNNLCLMYWPSLQ ETVLGQJRKQIEAMOEYUFNRUQULVFRVPEDRV‘ and ROWID = ‘AAAVWVAAGAAAAHnABj‘; UNKNOWN 1575420 27-JUN-17 update "JINGYU"."T2" set "CONTENTS" = ‘xxx‘ where "CONTENTS" = ‘WHCWFOZVLJWHFWLJ DNVSMQTORGJFFXYADIOJZWJCDDOYXAOQJG‘ and ROWID = ‘AAAVWVAAGAAAAOYAAE‘; SQL>

至此,LogMiner基本的操作实验已完成。

附:与LogMiner有关的一些操作命令参考:

conn / as sysdba --安装LOGMINER @$ORACLE_HOME/rdbms/admin/dbmslmd.sql; @$ORACLE_HOME/rdbms/admin/dbmslm.sql; @$ORACLE_HOME/rdbms/admin/dbmslms.sql; @$ORACLE_HOME/rdbms/admin/prvtlm.plb; --停止logmnr exec dbms_logmnr.end_logmnr --查询附加日志开启情况: select supplemental_log_data_min, supplemental_log_data_pk, supplemental_log_data_ui from v$database; --开启附加日志 alter database add supplemental log data; --取消补充日志 alter database drop supplemental log data (primary key) columns; alter database drop supplemental log data (unique) columns; alter database drop supplemental log data; --最后一个即为新的归档 select name,dest_id,thread#,sequence# from v$archived_log;

最后确认如果开启了附加日志,username就可以捕获到正确的值:

SQL> set lines 180

SQL> /

GROUP# THREAD# SEQUENCE# BYTES BLOCKSIZE MEMBERS ARC STATUS FIRST_CHANGE# FIRST_TIME NEXT_CHANGE# NEXT_TIME

---------- ---------- ---------- ---------- ---------- ---------- --- ---------------- ------------- ------------ ------------ ------------

1 1 35 52428800 512 2 YES INACTIVE 1590589 27-JUN-17 1591935 27-JUN-17

2 1 36 52428800 512 2 NO CURRENT 1591935 27-JUN-17 2.8147E+14

3 2 29 52428800 512 2 YES INACTIVE 1590594 27-JUN-17 1591938 27-JUN-17

4 2 30 52428800 512 2 NO CURRENT 1591938 27-JUN-17 2.8147E+14

1,36

2,30

SQL> update t2 set contents =

2 ‘aaa‘ where id = 44449;

1 row updated.

SQL> commit;

Commit complete.

RUN {

allocate channel dev1 device type disk format ‘/tmp/backup/arc_%h_%e_%t‘;

backup as copy archivelog sequence 36 thread 1;

backup as copy archivelog sequence 30 thread 2;

release channel dev1;

}

begin

dbms_logmnr.add_logfile(‘/tmp/backup/arc_1_36_947808116‘);

dbms_logmnr.add_logfile(‘/tmp/backup/arc_2_30_947808118‘);

dbms_logmnr.start_logmnr(Options=>dbms_logmnr.dict_from_online_catalog);

end;

/

SQL> select username,scn,timestamp,sql_redo from v$logmnr_contents where username=‘JINGYU‘;

USERNAME SCN TIMESTAMP

------------------------------ ---------- ------------

SQL_REDO

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

JINGYU 1593448 27-JUN-17

set transaction read write;

JINGYU 1593448 27-JUN-17

update "JINGYU"."T2" set "CONTENTS" = ‘aaa‘ where "CONTENTS" = ‘WZTSQZWYOCNDFKSMNJQLOLFUBRDOHCBMKXBHAPJSHCMWBYZJVH‘ and ROWID = ‘AAAVWVAAGAAAACLAAL‘;

JINGYU 1593450 27-JUN-17

commit;可以看到,开启了附加日志,就可以正常显示username的信息了。

以上是关于oracle logminer分析归档日志的主要内容,如果未能解决你的问题,请参考以下文章