Building an RTSP server with a USB camera and live555

Posted by qianbo_insist


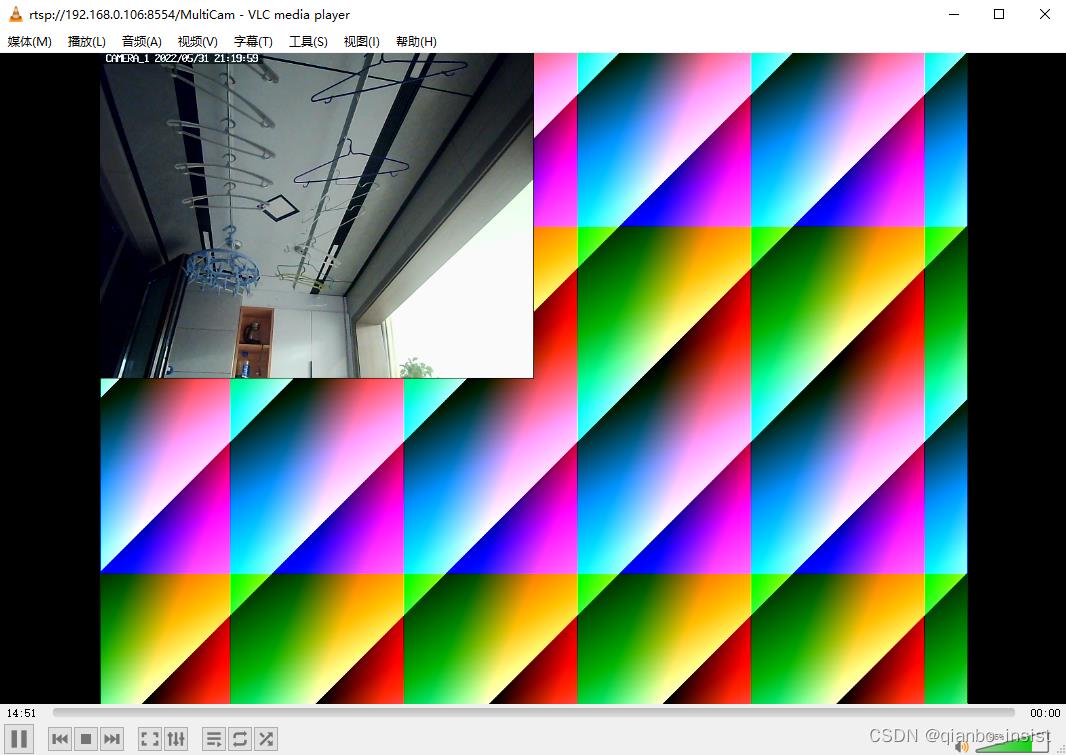

The result of playing the RTSP stream in VLC.

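1. Capturing the USB camera

My other articles show how to capture a USB camera with FFmpeg through dshow (DirectShow); DirectShow can also be used directly. The code below can serve as a reference.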

```cpp
// Needs the FFmpeg C headers plus the project's own CameraCapture class,
// camera description struct and helpers (int2str, byte2str, MEM_ALIGN).
// wprintf/eprintf/iprintf are presumably the project's warning/error/info
// logging macros, not the C library's wide-character wprintf.
extern "C" {
#include <libavdevice/avdevice.h>
#include <libavformat/avformat.h>
#include <libavcodec/avcodec.h>
#include <libswscale/swscale.h>
#include <libavutil/imgutils.h>
}

int CameraCapture::initialise()
{
    fState = 0;
    if (fCamDesc == nullptr) {
        wprintf("camera description pointer not set!");
        return -1;
    }
    if (fCamDesc->name.empty()) {
        wprintf("camera name is empty!");
        return -1;
    }

    int ret = 0;
    char str_tmp[128];
    str_tmp[127] = 0;
    AVDictionary* opt = nullptr;
    AVDictionaryEntry* dict_entry = nullptr;
    AVInputFormat* inFrmt = nullptr;   // must be const AVInputFormat* with FFmpeg 5 and later

    // Initialize FFmpeg environment
    avdevice_register_all();
    av_log_set_level(AV_LOG_ERROR);

    // Release anything left over from a previous call. All three functions accept
    // a null pointer and reset the pointer to nullptr. av_frame_free() also releases
    // the buffer allocated by av_frame_get_buffer(), so data[0] must not be freed separately.
    avcodec_free_context(&fDecCtx);
    avformat_close_input(&fInputCtx);
    av_frame_free(&fFrameOut);

    fInputCtx = avformat_alloc_context();
    inFrmt = av_find_input_format("dshow");

    // dshow options: video size, pixel format and frame rate
    snprintf(str_tmp, 127, "video_size=%dx%d;pixel_format=%s;framerate=%g;",
             fCamDesc->width, fCamDesc->height, fCamDesc->pixel_format.c_str(),
             fCamDesc->frame_rate);
    av_dict_parse_string(&opt, str_tmp, "=", ";", 0);
    if (fCamDesc->device_num > 0)
        av_dict_set(&opt, "video_device_number", int2str(fCamDesc->device_num).c_str(), 0);
    if (!fCamDesc->pin_name.empty())
        av_dict_set(&opt, "video_pin_name", fCamDesc->pin_name.c_str(), 0);
    if (fCamDesc->rtbuffsize > 102400)   // ignore a small rtbuffsize
        av_dict_set(&opt, "rtbufsize", byte2str(fCamDesc->rtbuffsize).c_str(), 0);

    snprintf(str_tmp, 127, "video=%s", fCamDesc->name.c_str());
    // The extra parentheses matter: without them ret would receive the result of "!= 0"
    if ((ret = avformat_open_input(&fInputCtx, str_tmp, inFrmt, &opt)) != 0) {
        // on failure avformat_open_input() frees fInputCtx and sets it to nullptr
        av_dict_free(&opt);
        av_strerror(ret, str_tmp, 127);
        eprintf("open video input '%s' failed! err = %s", fCamDesc->name.c_str(), str_tmp);
        return -1;
    }

    // Whatever is still in opt was not consumed by the dshow device
    if (opt != nullptr) {
        iprintf("the following options are not used:");
        dict_entry = nullptr;
        while ((dict_entry = av_dict_get(opt, "", dict_entry, AV_DICT_IGNORE_SUFFIX)))
            fprintf(stdout, "  '%s' = '%s'\n", dict_entry->key, dict_entry->value);
        av_dict_free(&opt);
    }

    if (avformat_find_stream_info(fInputCtx, nullptr) < 0) {
        eprintf("getting audio/video stream information from '%s' failed!", fCamDesc->name.c_str());
        return -1;
    }

    // print out the video stream information
    iprintf("video stream from '%s': ", fCamDesc->name.c_str());
    av_dump_format(fInputCtx, 0, fInputCtx->url, 0);

    // use the first video stream
    fOutStreamNum = -1;
    AVStream* stream = nullptr;
    for (unsigned int i = 0; i < fInputCtx->nb_streams; i++) {
        if (fInputCtx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {
            fOutStreamNum = i;
            stream = fInputCtx->streams[i];
            break;
        }
    }
    if (fOutStreamNum == -1 || stream == nullptr) {
        eprintf("no video stream found in '%s'!\n", fCamDesc->name.c_str());
        return -1;
    }

    AVCodecParameters* codec_param = stream->codecpar;
    fBitrate = codec_param->bit_rate;
    // interval between two frames in clock() ticks (skipped if the frame rate is unknown)
    if (stream->avg_frame_rate.num > 0)
        fDelayClock = (int)((double)CLOCKS_PER_SEC * (double)stream->avg_frame_rate.den
                            / (double)stream->avg_frame_rate.num);

    const AVCodec* decoder = avcodec_find_decoder(codec_param->codec_id);
    if (decoder == nullptr) {
        eprintf("cannot find decoder for '%s'!", avcodec_get_name(codec_param->codec_id));
        return -1;
    }
    fDecCtx = avcodec_alloc_context3(decoder);
    avcodec_parameters_to_context(fDecCtx, codec_param);
    av_log_set_level(AV_LOG_FATAL);
    if (avcodec_open2(fDecCtx, decoder, nullptr) < 0) {
        wprintf("Cannot open video decoder for camera!");
        return -1;
    }

    // Converter from the camera's pixel format to NV12. Passing fpSwsCtx instead of
    // nullptr would let a re-initialisation reuse or free the old context, provided
    // the constructor sets fpSwsCtx to nullptr.
    fpSwsCtx = sws_getCachedContext(nullptr, fDecCtx->width, fDecCtx->height, fDecCtx->pix_fmt,
                                    fDecCtx->width, fDecCtx->height, AV_PIX_FMT_NV12,
                                    SWS_BICUBIC, nullptr, nullptr, nullptr);
    if (fpSwsCtx == nullptr) {
        eprintf("creating the NV12 conversion context failed!");
        return -1;
    }

    fFrameOut = av_frame_alloc();
    if (fFrameOut == nullptr) {
        eprintf("allocating output frame failed!");
        return -1;
    }
    fFrameOut->width = fDecCtx->width;
    fFrameOut->height = fDecCtx->height;
    fFrameOut->format = AV_PIX_FMT_NV12;
    fDataOutSz = av_image_get_buffer_size(AV_PIX_FMT_NV12, fFrameOut->width,
                                          fFrameOut->height, MEM_ALIGN);
    ret = av_frame_get_buffer(fFrameOut, MEM_ALIGN);
    if (ret < 0) {
        eprintf("allocating frame data buffer failed!");
        return -1;
    }
    fDataOut.resize(fDataOutSz);

    fState = 1;
    return 0;
}
```

Camera capture through FFmpeg is fairly stable. Qt can also be used to capture from the camera.
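`initialise()` derives two numbers worth checking by hand: `fDataOutSz`, the size of one NV12 frame as reported by `av_image_get_buffer_size(AV_PIX_FMT_NV12, w, h, MEM_ALIGN)`, and `fDelayClock`, the gap between frames in `clock()` ticks. The sketch below reproduces that arithmetic without FFmpeg, assuming NV12's usual layout (a full-size Y plane plus one half-height plane of interleaved U/V bytes) and row strides rounded up to the alignment. The function names are hypothetical, and `MEM_ALIGN`'s real value is not given in the article, so the alignment is a parameter here.

```cpp
#include <cassert>
#include <ctime>

// Round x up to the next multiple of a (what FFALIGN does for power-of-two a).
static int alignUp(int x, int a) {
    assert(a > 0);
    return (x + a - 1) / a * a;
}

// Bytes for one NV12 frame: a full-resolution Y plane followed by one plane of
// interleaved U/V samples at half width and half height; every row is padded
// to a multiple of `align` bytes.
int nv12BufferSize(int width, int height, int align) {
    int yStride  = alignUp(width, align);
    int uvStride = alignUp(((width + 1) / 2) * 2, align); // ceil(w/2) U/V pairs, 2 bytes each
    int uvRows   = (height + 1) / 2;
    return yStride * height + uvStride * uvRows;
}

// clock() ticks between two frames at num/den frames per second, the quantity
// initialise() stores in fDelayClock. Returns 0 when the rate is unknown (num == 0).
long frameDelayClocks(int num, int den) {
    if (num <= 0)
        return 0;
    return static_cast<long>(static_cast<double>(CLOCKS_PER_SEC) * den / num);
}
```

For 640×480 with an alignment of 1 this gives 460800 bytes, i.e. width × height × 3/2. The tick count depends on the platform, because `CLOCKS_PER_SEC` is 1000 with MSVC but 1000000 on POSIX systems.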

2. H.264 encoding

See my other articles.

3. The live555 RTSP server
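The encoder itself is not covered here, but the RTSP server code later in the article needs its SPS and PPS (`fH264Encoder->SPS_NAL()` / `PPS_NAL()`). The following is a minimal sketch of pulling them out of encoder output, under two assumptions not stated in the article: the encoder emits an Annex-B byte stream (NAL units separated by `00 00 01` or `00 00 00 01`), and `set_SPS_NAL` / `set_PPS_NAL` expect bare NAL units without start codes. `Nal`, `splitAnnexB` and `findSpsPps` are hypothetical names. The NAL unit type is the low 5 bits of the first byte, with 7 for SPS and 8 for PPS.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One H.264 NAL unit with its Annex-B start code removed.
struct Nal {
    std::vector<uint8_t> bytes;
    // nal_unit_type is the low 5 bits of the first byte (7 = SPS, 8 = PPS, 5 = IDR slice).
    int type() const { return bytes.empty() ? -1 : (bytes[0] & 0x1F); }
};

// Append data[start, end) as a NAL unit, dropping trailing zero bytes: a NAL unit
// never ends in 0x00, so such zeros belong to the next 4-byte start code or to padding.
static void pushNal(std::vector<Nal>& out, const uint8_t* data, size_t start, size_t end) {
    while (end > start && data[end - 1] == 0)
        --end;
    if (end > start)
        out.push_back(Nal{std::vector<uint8_t>(data + start, data + end)});
}

// Split an Annex-B byte stream into NAL units without start codes.
std::vector<Nal> splitAnnexB(const uint8_t* data, size_t size) {
    assert(data != nullptr || size == 0);
    std::vector<Nal> out;
    size_t start = SIZE_MAX;  // SIZE_MAX means no start code seen yet
    size_t i = 0;
    while (i + 2 < size) {
        if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1) {
            if (start != SIZE_MAX)
                pushNal(out, data, start, i);
            i += 3;
            start = i;
        } else {
            ++i;
        }
    }
    if (start != SIZE_MAX)
        pushNal(out, data, start, size);
    return out;
}

// Keep the first SPS and the first PPS found, e.g. in the encoder's stream header.
bool findSpsPps(const std::vector<Nal>& nals, std::vector<uint8_t>& sps, std::vector<uint8_t>& pps) {
    for (const Nal& n : nals) {
        if (n.type() == 7 && sps.empty())
            sps = n.bytes;
        else if (n.type() == 8 && pps.empty())
            pps = n.bytes;
    }
    return !sps.empty() && !pps.empty();
}
```

Emulation prevention bytes guarantee that `00 00 01` never occurs inside a NAL unit, so a plain byte scan is enough to find the boundaries.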

```cpp
// CameraRTSPServer.h
#pragma once

#include <cstdint>

#include "misc.h"
#include "UsageEnvironment.hh"
#include "BasicUsageEnvironment.hh"
#include "GroupsockHelper.hh"
#include "liveMedia.hh"

#include "CameraMediaSubsession.h"
#include "CameraFrameSource.h"
#include "CameraH264Encoder.h"

namespace CameraStreaming
{
    class CameraRTSPServer
    {
    public:
        CameraRTSPServer(CameraH264Encoder* enc, int port, int httpPort);
        ~CameraRTSPServer();

        void run();

        // A non-zero fQuit makes doEventLoop() in run() return
        void signal_exit()
        {
            fQuit = 1;
        }

        void set_bit_rate(uint64_t br)
        {
            if (br < 102400)
                fBitRate = 100;
            else
                fBitRate = static_cast<unsigned int>(br / 1024); // in kbps
        }

    private:
        int fPortNum;
        int fHttpTunnelingPort;
        unsigned int fBitRate;
        CameraH264Encoder* fH264Encoder;
        volatile char fQuit;   // watch variable passed to doEventLoop()
    };
}
```
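Two bit-rate rules appear in this code: `run()` passes the encoder's rate to the subsession only when it exceeds 102400 bit/s and otherwise falls back to width × height × fps / 20, while `CameraRTSPServer::set_bit_rate()` clamps small values to 100 and converts the rest to kbps by dividing by 1024. The sketch below isolates both rules as plain functions so the numbers can be checked. `chooseBitRate` and `toKbps` are hypothetical names, and whether `CameraMediaSubsession::set_bit_rate()` (not shown in the article) performs the same kbps conversion is an assumption.

```cpp
#include <cassert>
#include <cstdint>

// Bit rate (bits/s) handed to the subsession in run(): the encoder's own value if it
// is above 102400, otherwise the heuristic width * height * fps / 20.
uint64_t chooseBitRate(uint64_t encoderBitRate, int width, int height, int fps) {
    if (encoderBitRate > 102400)
        return encoderBitRate;
    assert(width > 0 && height > 0 && fps > 0);
    // widen before multiplying so large resolutions cannot overflow int
    return static_cast<uint64_t>(width) * static_cast<uint64_t>(height) * static_cast<uint64_t>(fps) / 20;
}

// Conversion done by CameraRTSPServer::set_bit_rate(): anything under 102400 bits/s
// becomes 100, otherwise divide by 1024 to get the value in kbps.
unsigned int toKbps(uint64_t bitsPerSecond) {
    if (bitsPerSecond < 102400)
        return 100;
    return static_cast<unsigned int>(bitsPerSecond / 1024);
}
```

At 1280×720 and 30 fps with no usable encoder rate, the fallback is 1382400 bit/s, which `toKbps` turns into 1350.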

```cpp
// CameraRTSPServer.cpp
#include "CameraRTSPServer.h"

namespace CameraStreaming
{
    CameraRTSPServer::CameraRTSPServer(CameraH264Encoder* enc, int port, int httpPort)
        // initializers listed in declaration order to avoid -Wreorder warnings
        : fPortNum(port), fHttpTunnelingPort(httpPort), fBitRate(5120) /* in kbps */,
          fH264Encoder(enc), fQuit(0)
    {
    }

    CameraRTSPServer::~CameraRTSPServer()
    {
    }

    void CameraRTSPServer::run()
    {
        char stream_name[] = "MultiCam";

        TaskScheduler* scheduler = BasicTaskScheduler::createNew();
        UsageEnvironment* env = BasicUsageEnvironment::createNew(*scheduler);

        UserAuthenticationDatabase* authDB = nullptr;
        // if (m_Enable_Pass) {
        //     authDB = new UserAuthenticationDatabase;
        //     authDB->addUserRecord(UserN, PassW);
        // }

        // Enlarge the RTP output buffer so that large H.264 frames are not truncated
        OutPacketBuffer::increaseMaxSizeTo(5242880); // 5 MB (5 * 1024 * 1024 bytes)

        RTSPServer* rtspServer = RTSPServer::createNew(*env, fPortNum, authDB);
        if (rtspServer == nullptr) {
            *env << "LIVE555: Failed to create RTSP server: " << env->getResultMsg() << "\n";
            env->reclaim();
            delete scheduler;
            return;
        }
        if (fHttpTunnelingPort)
            rtspServer->setUpTunnelingOverHTTP(fHttpTunnelingPort);

        char const* descriptionString = "Combined Multiple Cameras Streaming Session";

        // One encoder-backed source, shared between clients through a StreamReplicator
        CameraFrameSource* source = CameraFrameSource::createNew(*env, fH264Encoder);
        StreamReplicator* inputDevice = StreamReplicator::createNew(*env, source, false);

        ServerMediaSession* sms = ServerMediaSession::createNew(*env, stream_name, stream_name, descriptionString);
        CameraMediaSubsession* sub = CameraMediaSubsession::createNew(*env, inputDevice);
        sub->set_PPS_NAL(fH264Encoder->PPS_NAL(), fH264Encoder->PPS_NAL_size());
        sub->set_SPS_NAL(fH264Encoder->SPS_NAL(), fH264Encoder->SPS_NAL_size());
        if (fH264Encoder->h264_bit_rate() > 102400)
            sub->set_bit_rate(fH264Encoder->h264_bit_rate());
        else
            sub->set_bit_rate(static_cast<uint64_t>(fH264Encoder->pict_width() * fH264Encoder->pict_height()
                                                    * fH264Encoder->frame_rate() / 20));
        sms->addSubsession(sub);
        rtspServer->addServerMediaSession(sms);

        char* url = rtspServer->rtspURL(sms);
        *env << "Play this stream using the URL \"" << url << "\"\n";
        delete[] url;

        // signal(SIGINT, sighandler);
        // Runs until signal_exit() sets fQuit to a non-zero value
        env->taskScheduler().doEventLoop(&fQuit);

        Medium::close(rtspServer);
        Medium::close(inputDevice);
        env->reclaim();
        delete scheduler;
    }
}
```
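`CameraFrameSource` is not shown in the article. In live555 a `FramedSource` subclass receives a destination buffer `fTo` of `fMaxSize` bytes, copies in its frame, sets `fFrameSize`, reports anything that did not fit in `fNumTruncatedBytes`, and then calls `FramedSource::afterGetting(this)`. When the chain ends in an RTP sink, the space offered is bounded by `OutPacketBuffer`'s maximum size, which is why `run()` raises it to 5 MB before any sink is created. The sketch below is a standalone model of just that copy step, with no live555 dependency. `Delivery` and `deliverFrame` are hypothetical names, and the real class may differ.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Result of handing one encoded frame to a downstream buffer of limited size.
struct Delivery {
    unsigned frameSize;          // bytes actually copied (live555: fFrameSize)
    unsigned numTruncatedBytes;  // bytes that did not fit (live555: fNumTruncatedBytes)
};

// Copy as much of `frame` as fits into `to` (capacity maxSize) and record how many
// bytes were dropped, as a doGetNextFrame() implementation does before afterGetting().
Delivery deliverFrame(const uint8_t* frame, unsigned frameLen, uint8_t* to, unsigned maxSize) {
    assert(to != nullptr || maxSize == 0);
    Delivery d{};
    if (frameLen > maxSize) {
        d.frameSize = maxSize;
        d.numTruncatedBytes = frameLen - maxSize;
    } else {
        d.frameSize = frameLen;
        d.numTruncatedBytes = 0;
    }
    if (d.frameSize > 0)
        std::memcpy(to, frame, d.frameSize);
    return d;
}
```

A non-zero truncated count means the tail of a frame, typically a large I-frame, is lost, and the decoder on the VLC side shows corruption until the next keyframe.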
