High-availability K8s: deploying Java

Posted 月疯


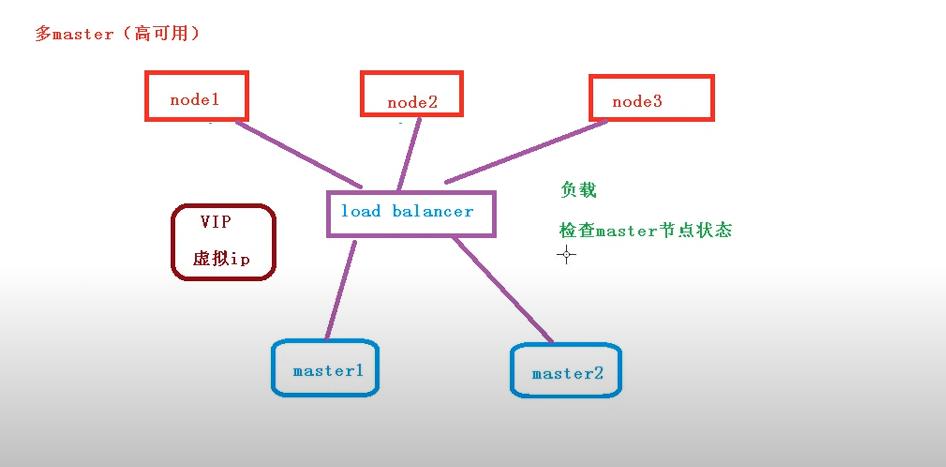

Building a highly available k8s cluster

Two master nodes and one worker node.

Initialization steps:

See my other blog posts.
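The initialization steps are deferred to other articles. As a hedged sketch of two of them, assuming the usual kubeadm prerequisites: every node must resolve master.k8s.io to the VIP (kubeadm-config.yaml and the join commands use master.k8s.io:16443), and bridged pod traffic must be visible to iptables. The function names and the master01/master02 host aliases are hypothetical; node1's address is not given in the post, so add its entry yourself.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for two common kubeadm prerequisites (assumed, since
# the post defers initialization to other articles). They only print text,
# so the output can be reviewed before it is written anywhere.

# /etc/hosts entries: master.k8s.io must point at the keepalived VIP,
# because controlPlaneEndpoint is master.k8s.io:16443.
render_hosts_entries() {
  local vip=$1 master1_ip=$2 master2_ip=$3
  printf '%s master.k8s.io\n' "$vip"
  printf '%s master01.k8s.io master1\n' "$master1_ip"
  printf '%s master02.k8s.io master2\n' "$master2_ip"
}

# Kernel settings kubeadm's preflight checks expect when bridged traffic
# must pass through iptables (requires the br_netfilter module).
render_k8s_sysctl() {
  cat <<'CONF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
CONF
}
```

On each node, after reviewing the output: `render_hosts_entries 192.168.44.158 192.168.44.155 192.168.44.156 >> /etc/hosts`, then `modprobe br_netfilter`, `render_k8s_sysctl > /etc/sysctl.d/k8s.conf` and `sysctl --system`. kubeadm's preflight checks also fail while swap is enabled, so run `swapoff -a` and remove the swap entry from /etc/fstab.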

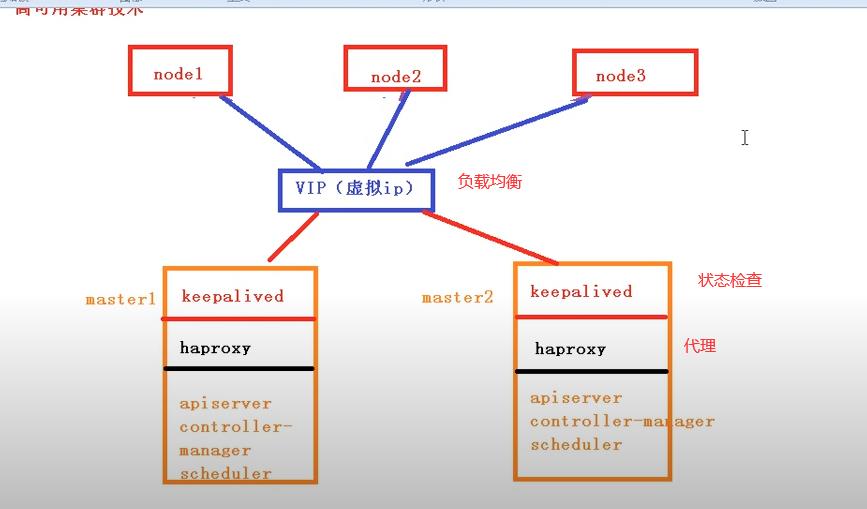

Deploy keepalived on all master nodes

1. Install the required packages

yum install -y conntrack-tools libseccomp libtool-ltdl

yum install -y keepalived

2. Configure the master1 node

cat > /etc/keepalived/keepalived.conf <<EOF
! Configuration File for keepalived

global_defs {
    router_id k8s
}

vrrp_script check_haproxy {
    script "killall -0 haproxy"
    interval 3
    weight -2
    fall 10
    rise 2
}

vrrp_instance VI_1 {
    state MASTER
    # change ens16777736 to your network interface name
    interface ens16777736
    virtual_router_id 51
    priority 250
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass ceb1b3ec013d66163d6ab
    }
    virtual_ipaddress {
        192.168.44.158
    }
    track_script {
        check_haproxy
    }
}
EOF

Configure the master2 node (the lower-priority node runs as BACKUP):

cat > /etc/keepalived/keepalived.conf <<EOF
! Configuration File for keepalived

global_defs {
    router_id k8s
}

vrrp_script check_haproxy {
    script "killall -0 haproxy"
    interval 3
    weight -2
    fall 10
    rise 2
}

vrrp_instance VI_1 {
    state BACKUP
    # change ens16777736 to your network interface name
    interface ens16777736
    virtual_router_id 51
    priority 200
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass ceb1b3ec013d66163d6ab
    }
    virtual_ipaddress {
        192.168.44.158
    }
    track_script {
        check_haproxy
    }
}
EOF
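The two keepalived.conf files differ only in state and priority, so one hedged option is to generate both from a single template. render_keepalived_conf is a hypothetical helper that reproduces the settings above with the weight as a parameter. That parameter matters: with weight -2, a failing check_haproxy only lowers master1 from 250 to 248, which is still above master2's 200, so the VIP would not move. A weight such as -60 (250 - 60 = 190 < 200) is an assumed value that lets a haproxy failure on master1 hand the VIP to master2.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print keepalived.conf for one master.
# Args: state (MASTER|BACKUP), priority, NIC name, VIP, and the weight
# applied while check_haproxy fails (defaults to -2 as in the post).
render_keepalived_conf() {
  local state=$1 priority=$2 iface=$3 vip=$4 weight=${5:--2}
  cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id k8s
}
vrrp_script check_haproxy {
    script "killall -0 haproxy"
    interval 3
    weight ${weight}
    fall 10
    rise 2
}
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass ceb1b3ec013d66163d6ab
    }
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        check_haproxy
    }
}
EOF
}
```

On master1: `render_keepalived_conf MASTER 250 ens16777736 192.168.44.158 -60 > /etc/keepalived/keepalived.conf`; on master2 the same with `BACKUP 200`. The check script relies on killall, which on CentOS is provided by the psmisc package.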

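3. Start and verify

Run on both master nodes:

# Start keepalived

systemctl start keepalived.service

Enable start on boot:

systemctl enable keepalived.service

Check the service status:

systemctl status keepalived.service

After startup, check master1's network interface; the VIP 192.168.44.158 should be listed on it:

ip a s ens16777736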
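To check the VIP from a script rather than by eye, a small filter over `ip -4 addr show` output can help. vip_present is a hypothetical helper: it reads that output on stdin and compares the address part of each `inet` line exactly, so 192.168.44.15 does not match 192.168.44.158.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 if the given VIP appears as an IPv4 address
# in `ip -4 addr show` output read from stdin, exit 1 otherwise.
vip_present() {
  local vip=$1
  # Split "inet 192.168.44.158/32" at "/" and compare the address exactly.
  awk -v vip="$vip" '
    $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
    END { exit !found }'
}
```

For example `ip -4 addr show dev ens16777736 | vip_present 192.168.44.158 && echo "VIP is on this node"`; on master2 it should fail while master1 is healthy.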

Deploy haproxy

Install it:

yum install -y haproxy

Edit the configuration file:

cat > /etc/haproxy/haproxy.cfg <<EOF
backend kubernetes-apiserver
    mode tcp
    balance roundrobin
    server master01.k8s.io 192.168.44.155:6443 check
    server master02.k8s.io 192.168.44.156:6443 check
EOF
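The haproxy.cfg shown defines only the backend. haproxy also needs a frontend listening on port 16443, because kubeadm-config.yaml sets controlPlaneEndpoint to master.k8s.io:16443. A minimal complete file might look like the sketch below; the global/defaults values, the timeouts and the frontend name kubernetes-apiserver-fe are assumptions, not taken from the post.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal haproxy.cfg that forwards TCP
# connections on *:16443 to the two kube-apiservers on port 6443.
write_haproxy_cfg() {
  local out=$1
  cat > "$out" <<'EOF'
global
    maxconn 4000
    user    haproxy
    group   haproxy
    daemon

defaults
    mode            tcp
    retries         3
    timeout connect 10s
    timeout client  1m
    timeout server  1m

frontend kubernetes-apiserver-fe
    bind            *:16443
    default_backend kubernetes-apiserver

backend kubernetes-apiserver
    balance roundrobin
    server  master01.k8s.io 192.168.44.155:6443 check
    server  master02.k8s.io 192.168.44.156:6443 check
EOF
}
```

On both masters, `write_haproxy_cfg /etc/haproxy/haproxy.cfg`, then validate the syntax with `haproxy -c -f /etc/haproxy/haproxy.cfg` before restarting the service.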

Start and verify

Run on both masters:

# Enable start on boot

systemctl enable haproxy

Start haproxy:

systemctl start haproxy

Check the status:

systemctl status haproxy

Check the listening port:

netstat -lntup | grep haproxy
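netstat only shows that haproxy is listening locally. To confirm the port answers through the VIP, a hypothetical wait_for_port helper can poll it using bash's built-in /dev/tcp redirection (bash-specific; it does not work in plain sh). haproxy in TCP mode typically accepts connections on 16443 even before any kube-apiserver is running, so this checks keepalived and haproxy, not the API servers behind them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until host:port accepts a TCP connection.
# Returns 0 on success, 1 once the timeout (seconds, default 30) expires.
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-30}
  local deadline=$((SECONDS + timeout))
  while (( SECONDS < deadline )); do
    # The subshell opens the connection and closes it again on exit.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}
```

For example `wait_for_port 192.168.44.158 16443 30 && echo "VIP:16443 reachable"`.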

Install Docker/kubeadm/kubelet on all nodes

In the Kubernetes version used here, the default CRI (container runtime) is Docker, so install Docker first.

Install Docker

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

yum -y install docker-ce-18.06.1.ce-3.el7

systemctl enable docker && systemctl start docker

docker --version

Docker version 18.06.1-ce, build e68fc7a

Configure a registry mirror:

cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]
}
EOF
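daemon.json must be a JSON object, so the braces around registry-mirrors are required. A hypothetical helper that writes it is sketched below; the optional third argument adds `exec-opts` to switch Docker to a different cgroup driver. kubeadm's preflight check warns when Docker uses cgroupfs and recommends systemd; if you switch, the kubelet must use the same driver, so treat this as an assumption to verify on your setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a daemon.json with one registry mirror and,
# optionally, a cgroup driver (e.g. "systemd").
write_docker_daemon_json() {
  local out=$1 mirror=$2 cgroup_driver=${3:-}
  {
    printf '{\n  "registry-mirrors": ["%s"]' "$mirror"
    if [ -n "$cgroup_driver" ]; then
      printf ',\n  "exec-opts": ["native.cgroupdriver=%s"]' "$cgroup_driver"
    fi
    printf '\n}\n'
  } > "$out"
}
```

For example `write_docker_daemon_json /etc/docker/daemon.json https://b9pmyelo.mirror.aliyuncs.com systemd`, followed by `systemctl restart docker`.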

Add the Alibaba Cloud Kubernetes YUM repository

cat > /etc/yum.repos.d/kubernetes.repo <<EOF

[kubernetes]

name=kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Restart Docker so that daemon.json takes effect:

systemctl restart docker

Install kubeadm, kubelet and kubectl

Releases are frequent, so pin the version explicitly:

yum install -y kubelet-1.16.3 kubeadm-1.16.3 kubectl-1.16.3

systemctl enable kubelet

Deploy the Kubernetes master

Create the kubeadm configuration file

Run this on the master that currently holds the VIP, here master1:

mkdir /usr/local/kubernetes/manifests -p

cd /usr/local/kubernetes/manifests/

vi kubeadm-config.yaml

with the following content:

apiServer:
  certSANs:
    - master1
    - master2
    - master.k8s.io
    - 192.168.44.158
    - 192.168.44.155
    - 192.168.44.156
    - 127.0.0.1
  extraArgs:
    authorization-mode: Node,RBAC
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta1
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "master.k8s.io:16443"
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.16.3
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
scheduler: {}

Run on master1:

kubeadm init --config kubeadm-config.yaml

Following the instructions printed by kubeadm init, set up the kubeconfig so you can use kubectl:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Check the cluster status

kubectl get cs

kubectl get pods -n kube-system

Install the cluster network

Fetch the flannel manifest from the official repository; run on master1:

mkdir flannel

cd flannel

wget -c https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Install the flannel network:

kubectl apply -f kube-flannel.yml

Copy the keys and related files

Copy the keys and related files from master1 to master2. First create the target directory on master2:

mkdir -p /etc/kubernetes/pki/etcd

Then run on master1:

scp /etc/kubernetes/admin.conf root@192.168.44.156:/etc/kubernetes

scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} root@192.168.44.156:/etc/kubernetes/pki

scp /etc/kubernetes/pki/etcd/ca.* root@192.168.44.156:/etc/kubernetes/pki/etcd
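The copy steps can be wrapped in a loop when there are more masters. copy_control_plane_certs is a hypothetical helper run on master1; it assumes passwordless root SSH to each target and creates the remote directory over ssh instead of by hand. Setting SCP=echo and SSH=echo prints the commands as a dry run. The brace expansion in the file list requires bash.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the shared control-plane certs and admin.conf
# from this master to each host given as an argument (same files as the
# scp commands in the post). SCP/SSH can be overridden, e.g. with echo.
copy_control_plane_certs() {
  local scp=${SCP:-scp} ssh=${SSH:-ssh} pki=/etc/kubernetes/pki host
  for host in "$@"; do
    # Create the target directory on the remote master first.
    $ssh "root@${host}" mkdir -p "${pki}/etcd"
    $scp /etc/kubernetes/admin.conf "root@${host}:/etc/kubernetes"
    $scp "${pki}"/{ca,sa,front-proxy-ca}.* "root@${host}:${pki}"
    $scp "${pki}"/etcd/ca.* "root@${host}:${pki}/etcd"
  done
}
```

For example `SCP=echo SSH=echo copy_control_plane_certs 192.168.44.156` to preview, then `copy_control_plane_certs 192.168.44.156` to copy.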

Join master2 to the cluster (run on master2)

Run the join command that kubeadm init printed on master1, adding --control-plane so that master2 joins as a control-plane (master) node:

kubeadm join master.k8s.io:16443 --token ckf7bs.30576l0okocepg8b --discovery-token-ca-cert-hash sha256:19afac8b11182f61073e254fb57b9f19ab4d798b70501036fc69ebef46094aba --control-plane

Check the status:

kubectl get node

kubectl get pods --all-namespaces

On node1 (a worker, so no --control-plane), add it to the cluster with the kubeadm join command printed by kubeadm init:

kubeadm join master.k8s.io:16443 --token ckf7bs.30576l0okocepg8b --discovery-token-ca-cert-hash sha256:19afac8b11182f61073e254fb57b9f19ab4d798b70501036fc69ebef46094aba
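The token in these join commands comes from the author's kubeadm init output and expires (the default token TTL is 24 hours). `kubeadm token create --print-join-command` prints a fresh worker join command; the hypothetical helper below appends --control-plane exactly once so the same output can be reused for a master.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn a worker join command into a control-plane
# join command by appending --control-plane if it is not already present.
to_control_plane_join() {
  local cmd=$1
  case " $cmd " in
    *" --control-plane "*) printf '%s\n' "$cmd" ;;
    *) printf '%s --control-plane\n' "$cmd" ;;
  esac
}
```

On master1, `to_control_plane_join "$(kubeadm token create --print-join-command)"` prints the command to run on a new master. Because the certificates were copied manually beforehand, no --certificate-key is needed.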

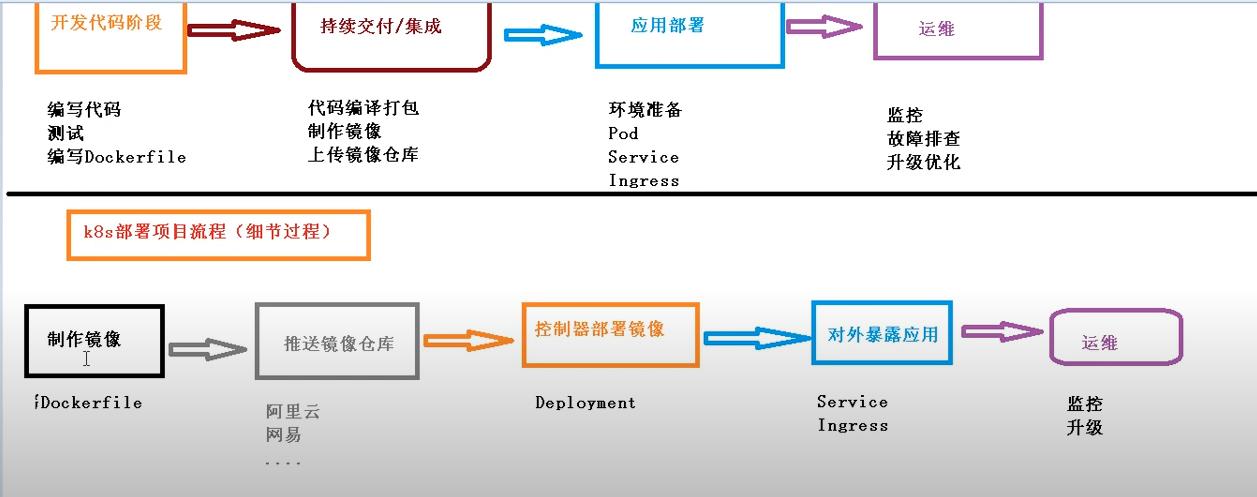

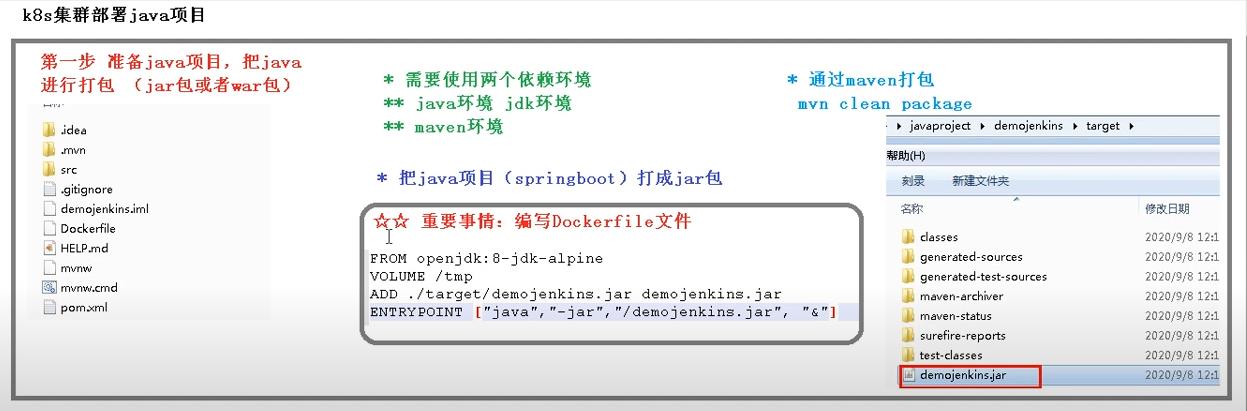

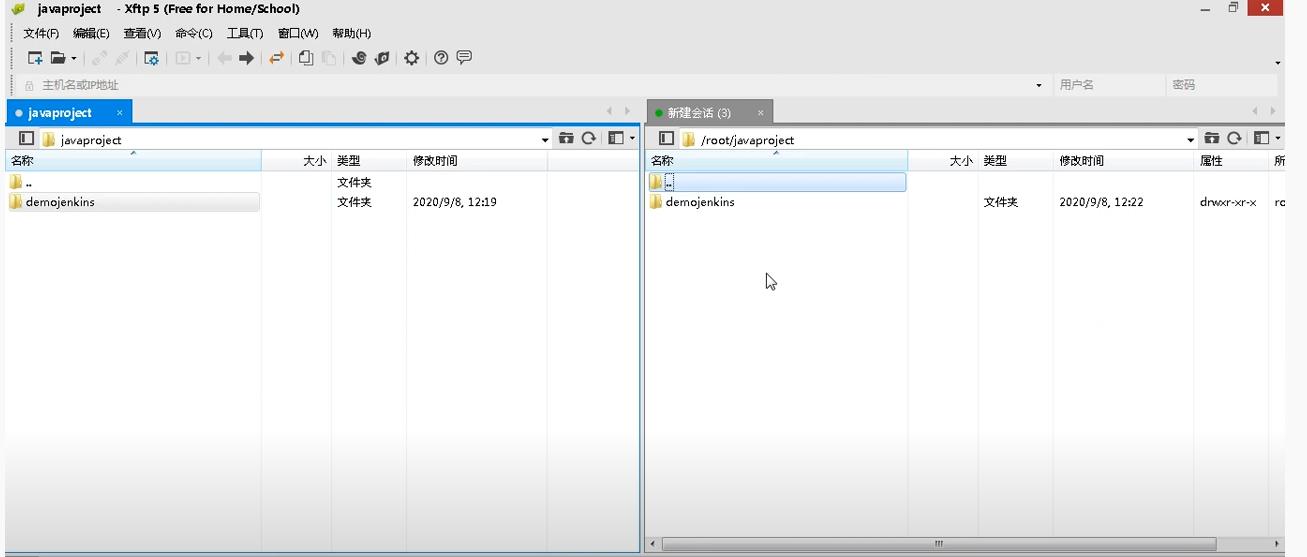

Deploying the Java project:

Package the Java project as a jar or a war (a jar is started with java -jar xxx.jar; a war can be dropped into Tomcat and run).

Packaging requires two tools:

a Java JDK

Maven

Dockerfile

FROM openjdk:8-jdk-alpine

VOLUME /tmp

# path of the built jar, and its name inside the image
ADD ./target/demojenkins.jar demojenkins.jar

# equivalent to: java -jar /demojenkins.jar
ENTRYPOINT ["java","-jar","/demojenkins.jar"]

First build the jar with Maven:

mvn clean package

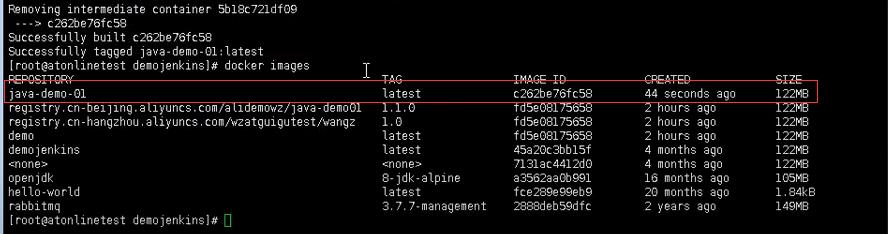

Build the Docker image, naming it java-demo-01:latest (the trailing . is the build context):

docker build -t java-demo-01:latest .

Run the image locally (container port 8111, published on host port 8111):

docker run -d -p 8111:8111 java-demo-01:latest

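If port 8111 is already in use, find and stop the process holding it:

netstat -tunlp | grep 8111

# look up the process

ps -ef | grep 8111

# kill it (1683 is the PID found on the author's machine; use your own)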

kill -9 1683
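Rather than reading the PID by eye, a hypothetical pid_on_port helper can extract it from the PID/Program name column of `netstat -tunlp`. If the owner turns out to be docker-proxy, the port belongs to a container (for example one from an earlier docker run), and `docker ps` followed by `docker stop` on that container is cleaner than killing the proxy.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the PID of the process listening on a TCP
# port, parsed from `netstat -tunlp` output on stdin. Prints nothing if
# no listener matches.
pid_on_port() {
  local port=$1
  # Column 4 is "Local Address", 6 is "State", 7 is "PID/Program name".
  awk -v port="$port" '
    $6 == "LISTEN" && $4 ~ (":" port "$") { split($7, p, "/"); print p[1]; exit }'
}
```

For example `pid=$(netstat -tunlp | pid_on_port 8111); [ -n "$pid" ] && kill "$pid"`, trying a plain kill (SIGTERM) before resorting to kill -9.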

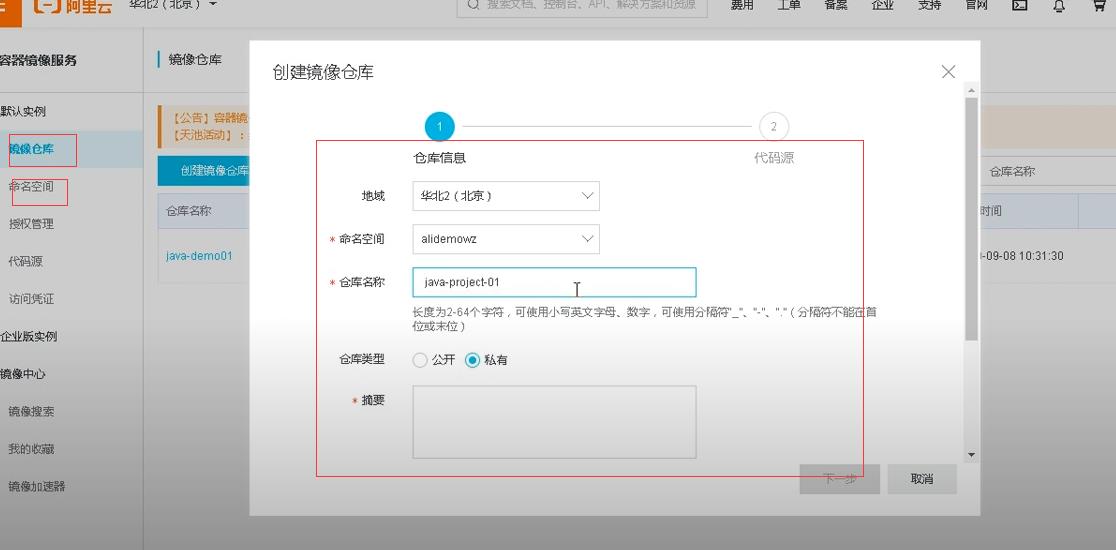

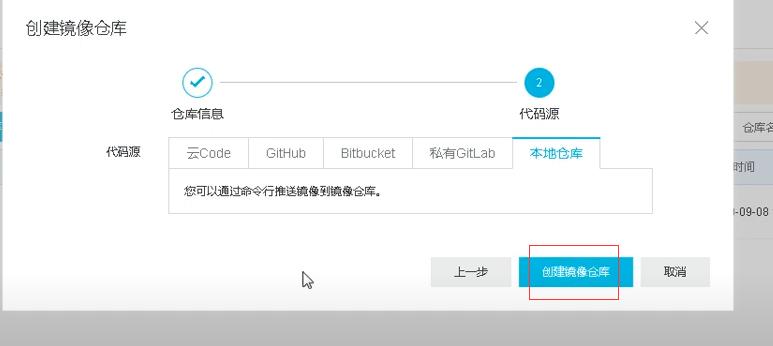

Push the image: upload it to an image registry (here, Alibaba Cloud's container registry).
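The post does not show the push commands. A hedged sketch: after `docker login registry.cn-beijing.aliyuncs.com`, tag the local image with the registry path used in the deployment step and push it. push_image is a hypothetical helper, and DOCKER=echo turns it into a dry run. If the repository is private, the cluster also needs an image pull secret (created with kubectl create secret docker-registry) referenced from the Deployment.

```shell
#!/usr/bin/env bash
# Hypothetical helper: tag a local image with its registry name and push it.
# Assumes `docker login` for the registry has already succeeded.
push_image() {
  local local_ref=$1 remote_ref=$2 docker=${DOCKER:-docker}
  $docker tag "$local_ref" "$remote_ref" && $docker push "$remote_ref"
}
```

For example `push_image java-demo-01:latest registry.cn-beijing.aliyuncs.com/alidemowz/java-project-01:1.0.0`.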

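Deploy the image and expose the port:

kubectl create deployment javademo1 --image=registry.cn-beijing.aliyuncs.com/alidemowz/java-project-01:1.0.0 --dry-run -o yaml > javademo1.yaml

(On kubectl 1.18 and later, write --dry-run=client.)

Create the Deployment:

kubectl apply -f javademo1.yaml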

kubectl get pods -o wide

Scale out to three replicas:

kubectl scale deployment javademo1 --replicas=3

Expose it externally by creating a NodePort Service:

kubectl expose deployment javademo1 --port=8111 --type=NodePort
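The create/scale/expose commands can also be expressed declaratively in one manifest, which is easier to keep under version control. render_java_manifest is a hypothetical helper; it assumes the app=<name> label that kubectl create deployment applies by default and container port 8111 from the docker run step. The NodePort itself is left for Kubernetes to assign from its default 30000-32767 range.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Deployment plus NodePort Service equivalent
# to the imperative create/scale/expose steps.
# Args: name, image, replicas (default 3), port (default 8111).
render_java_manifest() {
  local name=$1 image=$2 replicas=${3:-3} port=${4:-8111}
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
  labels:
    app: ${name}
spec:
  replicas: ${replicas}
  selector:
    matchLabels:
      app: ${name}
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      containers:
      - name: ${name}
        image: ${image}
        ports:
        - containerPort: ${port}
---
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  type: NodePort
  selector:
    app: ${name}
  ports:
  - port: ${port}
    targetPort: ${port}
EOF
}
```

For example `render_java_manifest javademo1 registry.cn-beijing.aliyuncs.com/alidemowz/java-project-01:1.0.0 | kubectl apply -f -`; `kubectl get svc javademo1` then shows the assigned NodePort, and the application is reachable at http://<node-ip>:<nodePort>.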
